Image registration

Image registration is an important process in digital image processing and is used to bring two or more images of the same scene, or at least similar scenes, into agreement with one another as well as possible. One of the images is set as a reference image , the others are called object images . In order to optimally adapt this to the reference image, a compensating transformation is calculated. The images to be registered differ from one another because they were recorded from different positions, at different times or with different sensors.

Image registration processes are particularly common in medical image processing. The images recorded with different imaging processes ( modalities ) are adjusted to one another in order to gain better knowledge from their combination. Are z. B. MRI images that show soft tissue or brain structures well, superimposed with PET images that make certain metabolic processes visible, one can understand in which brain areas certain metabolic processes take place. The overlay is also known as image fusion .

Another application example is the merging of several satellite images into a large map. Since the earth's surface is curved and the position of the satellite changes from image to image, there are small distortions within the images, which can be adjusted to one another using registration processes - see also image correlation .

The aim of image registration is to find that transformation T which brings a given source image (object image) F into agreement with a target image (reference image) G as well as possible . For this purpose, a measure D is characterized for the equality or the inequality of the images. Image registration is thus an optimization problem in which D (T (F), G) has to be minimized (if D measures the inequality) or maximized (if D measures the equality).

overview

As can be seen above, the application of image registration can be divided into the following areas:

- Different camera positions: The images to be registered show the same object or the same scene, but were recorded from different camera positions (see parallax ). The registration can then be used to obtain a larger two-dimensional field of view or also for 3D reconstruction.

- Different points in time: The images to be registered show the same object or the same scene, but at different points in time. Changes that have arisen over time can now be determined by means of registration ( time series analysis ).

- Different sensors : The images to be registered contain the same object or scene, but were recorded with different sensors, i. H. with different cameras or different imaging methods . The aim of image registration here is to obtain more and more detailed information from the images.

- Scene-to-model registration: One or more images of an object or a scene are registered with a model of the object or the scene. The registered images can then be compared with the given model.

Because of the wide range of possible applications and different types of images, there is no registration process that can be used universally. Rather, registration procedures are specially developed for various applications for which they then function optimally. Even so, most registration procedures can be broken down into four main steps:

- Feature extraction: Features, such as e.g. B. corners, edges , contours or the like detected manually or automatically.

- Feature adaptation: The correspondence of the extracted feature points is established.

- Transformation calculation: A suitable transformation type, e.g. B. affine, projective or the like, selected and calculated the transformation parameters.

- Transformation: The object image is transformed with the transformation calculated in the previous step. Interpolation techniques are also used here.

Feature extraction

Registration procedures can be divided into two categories, the feature-based and the area-based procedures. With the area-based methods, the registration is carried out directly with the intensity values; no features have to be extracted. The feature extraction step is omitted in these methods.

The second category is the feature-based method in which a certain, usually relatively small, number of features is extracted from the images. This is done either manually or automatically. With manual feature extraction, significant points are marked in the images by a person. With automatic processes, the images are searched for distinctive features that can be found in all images. The selected features should be distributed as far as possible over the entire image and not concentrate on specific regions. The registration then takes place in that the selected features are brought into agreement. The individual groups of characteristics are explained in more detail below:

- Regions: Areas in the image that stand out clearly from the areas surrounding them are suitable as regional features. This can be done in satellite images e.g. B. be lakes. Regions are usually represented by their focus and can be detected using segmentation methods.

- Lines: Lines or edges can be present in the image as contours of regions or as lines themselves. They can be represented by the pairs of their end points or their center point and extracted using edge detection .

- Points: Points can be given in the image as intersections of lines or corners of contours. They can be extracted by corner detectors.

The advantage of the feature-based method compared to the area-based method is, on the one hand, the generally lower computational effort for the feature adaptation, since the number of features is not selected too large, and on the other hand, the lower susceptibility to noise, since the registration is not carried out directly with the intensity values. The disadvantage, however, is the feature extraction itself, which represents an additional processing step. With automatic feature extraction, it is often not easy to choose features that can be easily found in all images, or to keep the number of features as small as possible. For this reason, feature-based methods should only be selected if it is to be expected that there will be a few features that are evenly distributed over the image and can be easily extracted in all images.

Feature adjustment

Area-based methods

In the area-based method, the step of feature extraction is mixed with that of feature adaptation, since here, in a certain sense, every pixel is a feature point. The production of the correspondence between the object image and the reference image can take place through windows of a certain size, so that the correspondence is established window by window. However, the entire image can also be used. For the registration, either a function which indicates the difference between the object image and the reference image or a function which indicates the correspondence between the object image and the reference image is used. This function must then be minimized or maximized accordingly.

Correlation Methods

A widespread approach in area-based methods is the cross-correlation function . This is usually used for template matching or pattern recognition . Let f and g be two image sections of the same dimension from the object or reference image. Using the normalized cross-correlation function

a value is calculated which lies in the range [0..1] and represents the similarity of f and g . The higher the value, the more similar the image sections are. This degree of similarity is now calculated for pairs of image sections from the object and reference image. The image sections with the highest value are then defined as the corresponding ones. This method only works if the difference between the object image and the reference image consists of translations . In the case of rotation , scaling or other deformations, the process fails in this form.

Fourier methods

If the images contain frequency-dependent noise, the Fourier methods offer a better solution than the correlation methods. In addition, the calculation time can be reduced compared to the correlation methods. Translation , rotation and scaling have their corresponding counterparts in the frequency domain and can therefore be implemented using these methods. The calculation of the Fourier coefficients of an image can be realized efficiently, either by implementation in hardware or by using the fast Fourier transform (FFT) .

One possibility of registering two images that differ from one another only by a translation is the phase correlation. The phase correlation is based on the shift theorem.

Let two images f and g be given, which differ by a translation (u, v) , ie f (x, y) = g (x + u, y + v) . Then their Fourier transforms depend on each other as follows:

- .

The Fourier transformation of images f and g only differs in a phase shift that is directly related to the translation . Using the cross power spectrum of images f and g

one can calculate the phase shift . To do this, one looks for the maximum in the inverse Fourier transform of the cross-power spectrum . The position of the maximum then provides the translation parameters.

Methods based on transinformation

Methods that use the transinformation of the images show particularly good results with images in which the intensity values vary greatly. This variation occurs e.g. B. on images that were recorded with different sensors, such as MRI and CT.

Entropy is important for the explanation of the mutual information

- ,

where X is a random variable , x is a discrete value of the random variable X and p is a probability density . In the case of image registration, it is obvious that the random variable X represents the intensity values of an image. The entropy is then a measure of an image disorder. If all intensity values of an image are equally likely, then the entropy is greatest. If the image contains only a single intensity value, the entropy is zero. For image registration, however, you need the shared entropy of two random variables X and Y

- ,

since at least two pictures have to be compared with each other. If this measure is minimal, then the images are in the best possible agreement. But the common entropy not only decreases when the images are better matched to one another, but also when the entropy of one of the images decreases. Therefore, a measure of the agreement should also take into account the entropies of the individual images. The transinformation

- ,

is such a measure. The transinformation becomes maximum when the common entropy decreases. The picture on the right shows Venn diagrams showing the various dimensions. There it can also be seen once again that the transinformation is maximal when the common entropy is minimal. When registering with transinformation, the aim is to maximize it, ie the images are in the best possible match when the transinformation is at its maximum. In order to get good estimates for the transinformation it is necessary to have a good estimate for the probability density p . As many pixels as possible must therefore be included, which is also a disadvantage since the registration is therefore very complex.

Feature-based procedures

Let two sets of features be given. One contains the features in the object image, the other the features in the reference image. The features are represented by so-called control points. These can be the features themselves, if the features are points, or end points of lines, focal points of regions or the like. The aim of the feature adjustment is to establish the paired correspondence of the features of the object image with those of the reference image.

Methods that use spatial relations

With these methods, the information about the distance between the control points and their spatial distribution is used in order to establish the correspondence between the control points of the object image and those of the reference image.

One way to make the adjustment is as follows. There are n selected control points in the object image. Thereafter, n control points are defined in the reference image as those corresponding to the selected control points in the object image. The transformation is calculated and carried out on the basis of this correspondence. Then it is checked how many of the remaining control points are on top of each other or at least sufficiently close to each other. If the percentage of the remaining control points lying on top of one another is below a certain threshold value, then two new control points must be determined in the reference image and the process is repeated.

Methods that use invariant descriptors

Another method of establishing the correspondence between the features is to use certain properties that characterize the features. These properties are called descriptors and should be as invariant as possible with respect to the expected image distortions. The descriptors should meet the following conditions:

- Invariance: The descriptors of the corresponding features of the object image and the reference image should be the same.

- Uniqueness: Two different characteristics should have different descriptors.

- Stability: The descriptors of a feature that is deformed should be similar to those of the undeformed feature.

- Independence: If the descriptors are a vector, its elements should be functionally independent of each other.

However, not all of these conditions can always be met at the same time. So a suitable compromise has to be found in the choice of descriptors. The selection of the descriptors depends on the characteristics of the features and the expected deformation between the object image and the reference image. Are the features e.g. B. Regions and if the deformation consists only of translation and rotation, the area of a region can be selected as the descriptor, since this remains the same for rotation and translation. However, if scaling is added, the selected property is no longer invariant with regard to the transformation. During the feature adaptation, the features from the object image and the reference image whose descriptors are most similar are determined as corresponding.

Invariant descriptors can also be used if features have not been explicitly extracted beforehand, but a window runs over the entire image and the invariants are then calculated for this window.

Transformation calculation

After the correspondence between the features was established in the last section, this section describes how the transformation is constructed with which the object image is transformed in order to adapt it to the reference image. The correspondence of the control points of the object image and the reference image, as well as the requirement that the corresponding control points are to be transformed as closely as possible to one another, flow into the design of the transformation.

The task to be solved is the selection of a family of functions and the calculation of the parameters of the mapping function. The family of functions must be selected with regard to the expected differences in the image and the required accuracy of the transformation. The simplest case is a translation . Only two parameters have to be calculated. More complex are e.g. B. affine or perspective transformations. The more complex the family of functions, the greater the number of parameters to be calculated. The reason for the difference in the images also has an influence on the choice of the family of functions. So is z. B. in the case of perspective distortions due to different camera positions, the choice of the family of functions as perspective transformation is obvious.

The transformations can be divided into two broad categories, depending on the amount of data used. Global transformations use all control points to compute a set of parameters for the entire image. A global transformation thus consists of a single function that is applied to every pixel. With the local transformations, the image is divided into several areas - in extreme cases, each pixel is a separate area. Then the parameters are calculated for each area. This means that local differences in the strengths of the images can also be treated. A local transformation consists of several functions, each for an area.

Global transformations

One of the widespread global transformation models uses bivariate polynomials of low, mostly first degree. The similarity transformation is the simplest model. The following equations map the point (x, y) to the point (x ', y') :

- ,

where the angle of rotation, s are the scaling factor and and are the translation parameters . This transformation is also called shape-preserving, as it means that angles , distances and length ratios remain unchanged. One advantage is that only two control points are required here. The disadvantage, however, is that only rotation , translation and scaling can be implemented in this way.

A more general model is the affine transformation . The point (x, y) is mapped to the point (x ', y') as follows :

- ,

where and are the scaling factors, and are the shear factors and and are the translation parameters . Three control points are required here, but the shear can also be implemented.

If perspective distortions are to be expected in the images to be registered, the perspective transformation

- ,

be used. Four control points are now required here.

If more complex distortions are to be expected in the images, polynomials of the second or third degree can also be used. Polynomials of higher order are usually not used. As a rule, however, more control points are used for the registration than the minimum number specified here. The parameters of the selected transformation are then usually calculated using the least squares method , so that the transformation equations minimize the sum of the squared errors of the control points. As a result, however, it can happen that the control points are not transformed exactly on top of each other, but only as close to each other as possible.

Local transformations

Due to global transformations, local differences of varying magnitude can only be adjusted poorly or not at all in the images. Local transformations are better suited for this. The transformation consists of several functions. All control points are then no longer used for a function, but each function has its control points.

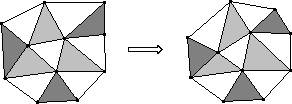

One method that realizes local transformations is piecewise interpolation. A function is determined that interpolates between the matched control points of the object image and the reference image. One possibility is triangulation. The picture on the right shows how an image can be divided into triangular areas with the help of the control points. The triangles in the picture on the right have different colors to make the corresponding triangles easier to see. Several functions are used in the transformation, each of which is valid within a triangle. In order to obtain sufficiently good results with this procedure, the corner points of each triangle must not be too far apart, ie there must be a sufficient number of control points. Since the number of control points required is very high, the calculation effort is correspondingly high.

Radial basis functions

The radial basis functions belong to the global transformations, but are also able to adapt local differences. Any function f that has the following property is a radial basis function:

- ,

where c is the center of function f . When registering with radial basis functions, each control point is the center of a basis function. The entire transformation is then a linear combination of all these radial basis functions plus a low-degree polynomial . Let N control points be given, the coordinates of the i th control point and weights which indicate how strongly the function, the center of which is the i th control point, is included in the overall transformation. The pixel (x, y) is then transferred to the pixel (x ', y') as follows :

- .

The control points are matched by means of the polynomial in the above equations and the remaining image points between the control points are then interpolated by means of the radial basis functions.

The most commonly used form for registration with radial basic functions are the thin-plate splines. The radial basis function f is defined as follows:

- .

The registration with thin-plate splines can be very time-consuming if many control points are used.

Elastic models

Another approach to registering images that have very complex local distortions is registration using elastic models. The registration usually takes place iteratively by minimizing an energy functional, the shape

with the functional that describes the inequality of the images, the bilinear form that describes a suitable penalty term (also regularization term), and a positive regularization parameter . The bilinear form is often given by the elliptic operator

with Neumann boundary conditions and the elasticity constants and given. The registration works by iteratively solving the Euler-Lagrange equations

- .

As a result, the images are modeled as elastic surfaces or viscous liquids on which external forces act and thereby deform them. The deformation is influenced by the internal forces and appropriately scaled by the parameter . The steps of adapting the features and calculating the transformation coincide here.

transformation

The calculated transformations are now used to transform the object image and thus to register the images. The transformation can be carried out forwards or backwards. If it is carried out forwards, a new position is calculated for each image point of the object image using the transformation functions. However, this approach has decisive disadvantages. On the one hand, several image points of the object image can be transformed to one and the same new image point and, on the other hand, holes can arise in the transformed image. A hole is created when there is a point (x, y) in the transformed image to which no image point of the object image is transformed.

If the transformation is carried out backwards, the intensity value at the position (x, y) in the transformed image is calculated as follows. First, starting from the position (x, y), a grid position (x ', y') in the object image is calculated by means of the inverse transformation . Then the intensity value in the transformed image is calculated by interpolation from the (x ', y') surrounding pixels. Often used interpolation techniques are e.g. B. bilinear or bicubic interpolation. In this way, an intensity value is calculated for each pixel of the transformed image.

literature

- R. Bajcsy, S. Kovacic: Multiresolution elastic matching, Computer Vision, Graphics and Image Processing , Vol. 46, 1989, pp. 1-21

- Jan Modersitzki: Numerical Methods for Image Registration , Oxford University Press, 2004.

- M. Bro-Nielsen, C. Gramkow: Fast fluid registration of medical images , in Visualization in Biomedical Computing. 4th International Conference, VBC '96 Proceedings , 1996, pp. 267-276

- FL Bookstein: Principal Warps: Thin Plate Splines and the Decomposition of Deformations , IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 11, No. 6, 1989, pp. 567-585

- RN Bracewell: The Fourier Transform and Its Applications , McGraw-Hill, New York, 1965

- LG Brown: A Survey of Image Registration Techniques , ACM Computing Surveys, Vol. 24, No. 4, 1992, pp. 325-376

- Stefan Henn, Florian Jarre and Kristian Witsch: Mathematical Image Processing - An Overview of Different Models and Methods for Registering Digital Image Data , Yearbook of Heinrich Heine University Düsseldorf 2002, pp. 178–188: http: //dup.oa.hhu. de / 64/1 / pagesjarre.pdf

- Stefan Henn, Kristian Witsch: Iterative Multigrid Regularization Techniques For Image Matching , SIAM Journal on Scientific Computing (SISC), 23 (4) (2001), 1077-1093.

- B. Zitova, J. Flusser: Image registration methods: a survey, Elsevier. Image and Vision Computing , Vol. 21, 2003, pp. 977-1000

- Boris Peter Selby, Georgios Sakas, Uwe Stilla et al. A Radiometry tolerant method for direct 3D / 2D registration of computed tomography data to X-ray images: Transfer function independent registration. Image processing for medicine (BVM) 2010: http://www.selbytec.de/publications/

- A. Sotiras, C. Davatzikos, N. Paragios: Deformable Medical Image Registration: A Survey , IEEE Transactions on Medical Imaging, Vol. 32, No. 7, 2013, pp. 1153-1190