Proportional error reduction measures

Proportional error reduction measures (also proportional reduction in error or proportionate reduction of error, PRE for short, hence PRE measures) indirectly indicate the strength of the relationship between two variables $X$ and $Y$.

Definition

Proportional error reduction measures are defined as

$$PRE = \frac{E_1 - E_2}{E_1},$$

where $E_1$ is the error in predicting the dependent variable $Y$ without knowledge of the relationship and $E_2$ is the error in predicting the dependent variable $Y$ with knowledge of the relationship with $X$.

Since $E_2 \le E_1$ holds (it is assumed that the knowledge of the relationship is correct, so the prediction error decreases when this knowledge is used), it follows that $0 \le PRE \le 1$. A value of one means that, knowing the independent variable, the value of the dependent variable can be predicted perfectly. A value of zero means that knowing the independent variable does not improve the prediction of the dependent variable at all.
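As a minimal sketch of the definition, the following Python function computes the measure from two given error totals. The function name `pre` and the example numbers are illustrative assumptions, not part of the source.

```python
def pre(e1: float, e2: float) -> float:
    """Proportional reduction in error: (E1 - E2) / E1.

    e1: prediction error without knowledge of the relationship
    e2: prediction error with knowledge of the relationship
    """
    if e1 <= 0:
        raise ValueError("E1 must be positive")
    return (e1 - e2) / e1


print(pre(40.0, 30.0))  # 0.25: a quarter of the error is removed
print(pre(40.0, 0.0))   # 1.0: perfect prediction
print(pre(40.0, 40.0))  # 0.0: no improvement
```

How $E_1$ and $E_2$ are obtained depends on the scale level of the variables; the individual measures differ only in that step.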

The advantage is that all proportional error reduction measures can be interpreted in the same way regardless of the scale level. The coefficient of determination $R^2$ can therefore serve as a benchmark, since it is itself a proportional error reduction measure, as can the following rule of thumb:

- : No relationship,

- : Weak relationship,

- : Medium relationship and

- : Strong relationship.

The downside is that

- the direction of the relationship cannot be taken into account, since directions can only be specified for ordinal or metric variables and

- the size of the error reduction depends on how the prediction is made with knowledge of the relationship. A small value of the proportional error reduction measure therefore does not mean that there is no relationship between the variables.

Since one variable is dependent and the other is independent, a distinction is made between symmetrical and asymmetrical proportional error reduction measures:

| Scale level of independent variable X | Scale level of dependent variable Y | Measure | Comment |
|---|---|---|---|
| nominal | nominal | Goodman and Kruskal's λ | There is a symmetric and an asymmetric measure. |
| nominal | nominal | Goodman and Kruskal's τ | There is a symmetric and an asymmetric measure. |
| nominal | nominal | Uncertainty coefficient or Theil's U | There is a symmetric and an asymmetric measure. |
| ordinal | ordinal | Goodman and Kruskal's γ | There is only a symmetric measure. |
| nominal | metric | η² | There is only an asymmetric measure. |
| metric | metric | Coefficient of determination R² | There is only a symmetric measure. |

Coefficient of determination

For the prediction without knowledge of the relationship between two metric variables $X$ and $Y$, only values of the dependent variable $Y$ may be used. The simplest approach is to predict a constant value, $\hat{y}_i = c$. This value should satisfy an optimality property, namely minimize the sum of squared deviations $\sum_i (y_i - c)^2$. It follows that $c$ is the arithmetic mean, so $\hat{y}_i = \bar{y}$. Hence the prediction error without knowledge of the relationship is

$$E_1 = \sum_{i=1}^{n} (y_i - \bar{y})^2.$$

For the prediction with knowledge of the relationship, we use linear regression, $\hat{y}_i = b_0 + b_1 x_i$:

$$E_2 = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2.$$

The coefficient of determination $R^2$ is then a proportional error reduction measure, since the following applies:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} = \frac{E_1 - E_2}{E_1}.$$

If the roles of the dependent and independent variables are swapped, the result is the same value for $R^2$. Therefore there is only a symmetric measure.
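The construction can be checked numerically. The sketch below fits a simple least-squares line in plain Python and computes $R^2$ as $(E_1 - E_2)/E_1$; the function name and the small data set are made up for illustration.

```python
def r_squared_as_pre(x, y):
    """R^2 of the simple linear regression of y on x, computed as (E1 - E2) / E1."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Least-squares slope b1 and intercept b0
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    # E1: error when always predicting the mean of y
    e1 = sum((yi - y_bar) ** 2 for yi in y)
    # E2: error when predicting from the regression line
    e2 = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    return (e1 - e2) / e1


# Hypothetical data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

print(r_squared_as_pre(x, y))  # approximately 0.6
print(r_squared_as_pre(y, x))  # same value with the roles swapped
```

Both calls give the same value up to rounding, which illustrates why only a symmetric measure exists in the metric case.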

Goodman and Kruskal's λ and τ

Goodman and Kruskal's λ

The prediction without knowledge of the relationship is the modal category of the dependent variable, and the prediction error is

$$E_1 = n - \max_k h_{k,\bullet}$$

with $\max_k h_{k,\bullet}$ the absolute frequency in the modal category and $n$ the number of observations.

The prediction with knowledge of the relationship is the modal category of the dependent variable within each category of the independent variable, and the prediction error is

$$E_2 = \sum_j \left( h_{\bullet,j} - \max_k h_{k,j} \right)$$

with $h_{\bullet,j}$ the absolute frequency of category $j$ of the independent variable and $\max_k h_{k,j}$ the absolute frequency of the modal category of the dependent variable within category $j$ of the independent variable. Goodman and Kruskal's $\lambda$ is then $(E_1 - E_2)/E_1$.
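A minimal Python sketch of this calculation follows. It assumes the contingency table is given as a list of rows, with the categories of the dependent variable in the rows and those of the independent variable in the columns; the function name and the counts are hypothetical.

```python
def goodman_kruskal_lambda(table):
    """Asymmetric lambda for Y (rows) predicted from X (columns).

    table[k][j] is the absolute frequency of Y-category k and X-category j.
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]  # marginal frequencies of Y
    columns = list(zip(*table))               # one tuple per X-category
    # E1: everything outside the overall modal category of Y is mispredicted
    e1 = n - max(row_totals)
    # E2: within each X-category, everything outside its modal Y-category
    e2 = sum(sum(col) - max(col) for col in columns)
    return (e1 - e2) / e1


# Hypothetical 2 x 3 table (2 categories of Y, 3 categories of X)
table = [
    [30, 10, 10],
    [10, 30, 10],
]
print(goodman_kruskal_lambda(table))  # 0.4
```

Here $E_1 = 100 - 50 = 50$ and $E_2 = 10 + 10 + 10 = 30$, so 40% of the prediction errors are avoided.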

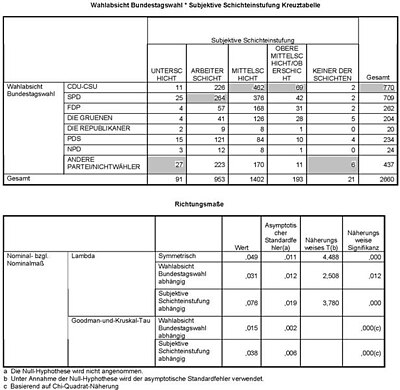

Example

In the example, if the relationship is not known, the predicted value for the dependent variable "Intention to vote in the Bundestag election" is "CDU/CSU", which results in the prediction error $E_1$.

Depending on the category of the variable "Subjective class classification", the predicted value is "CDU/CSU" (categories: middle class, upper middle class / upper class), "SPD" (category: working class) or "other party / non-voter" (all other categories). This gives the prediction error $E_2$ and thus $\lambda$.

This means that in the present example, the error in predicting a respondent's intention to vote in the Bundestag election can be reduced by 3.1% if the respondent's subjective class classification is known.

Goodman and Kruskal's τ

In Goodman and Kruskal's $\tau$, a value drawn at random from the distribution of $Y$ is used as the predicted value instead of the modal category, i.e. category 1 is drawn with probability $h_{1,\bullet}/n$, category 2 with probability $h_{2,\bullet}/n$, and so on. The prediction error then results as

$$E_1 = \sum_k \frac{n - h_{k,\bullet}}{n}\, h_{k,\bullet}$$

with $h_{k,\bullet}$ the absolute frequency of category $k$ of the dependent variable. The prediction error $E_2$ results analogously, except that the prediction is now made separately within each category of the independent variable, and $E_2$ is the sum of the weighted prediction errors in each category of the independent variable:

$$E_2 = \sum_j \sum_k \frac{h_{\bullet,j} - h_{k,j}}{h_{\bullet,j}}\, h_{k,j}$$

with $h_{k,j}$ the absolute frequency of the joint occurrence of the categories $y_k$ and $x_j$, and $h_{\bullet,j}$ the absolute frequency of category $j$ of the independent variable.
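The two expected error counts can be sketched in Python as follows, again assuming a table with the dependent variable in the rows and the independent variable in the columns. The function name and the counts are illustrative only.

```python
def goodman_kruskal_tau(table):
    """Asymmetric tau for Y (rows) predicted from X (columns).

    Predictions are drawn at random in proportion to the observed
    frequencies, so the errors are expected numbers of wrong predictions.
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    columns = list(zip(*table))
    # E1: each of the h observations in a Y-category is mispredicted
    # with probability (n - h) / n
    e1 = sum(h * (n - h) / n for h in row_totals)
    # E2: the same idea applied within each X-category (empty columns skipped)
    e2 = sum(
        h * (sum(col) - h) / sum(col)
        for col in columns if sum(col) > 0
        for h in col
    )
    return (e1 - e2) / e1


# Hypothetical 2 x 3 table (2 categories of Y, 3 categories of X)
table = [
    [30, 10, 10],
    [10, 30, 10],
]
print(goodman_kruskal_tau(table))  # 0.2
```

For this table $E_1 = 50$ and $E_2 = 15 + 15 + 10 = 40$, so the random-draw prediction improves by 20% when the category of the independent variable is known.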

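Symmetrical measures

For Goodman and Kruskal's $\lambda$ and $\tau$, the prediction errors

- $E_1^Y$ and $E_2^Y$, if the dependent variable is $Y$, and

- $E_1^X$ and $E_2^X$, if the dependent variable is $X$,

can be calculated. The symmetrical measures for Goodman and Kruskal's $\lambda$ and $\tau$ then result as

$$\lambda \text{ or } \tau = \frac{(E_1^X - E_2^X) + (E_1^Y - E_2^Y)}{E_1^X + E_1^Y}.$$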
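As a sketch of how the errors from both directions are pooled, the following Python code computes a symmetrical λ by evaluating the modal-category errors once on the table and once on its transpose. The helper names and the counts are assumptions made for illustration.

```python
def lambda_errors(table):
    """Return (E1, E2) for predicting the row variable from the column variable."""
    n = sum(sum(row) for row in table)
    e1 = n - max(sum(row) for row in table)
    e2 = sum(sum(col) - max(col) for col in zip(*table))
    return e1, e2


def symmetric_lambda(table):
    """Symmetrical lambda: pooled error reduction over pooled baseline error."""
    e1_y, e2_y = lambda_errors(table)              # Y (rows) is dependent
    transposed = [list(col) for col in zip(*table)]
    e1_x, e2_x = lambda_errors(transposed)         # X (columns) is dependent
    return ((e1_x - e2_x) + (e1_y - e2_y)) / (e1_x + e1_y)


# Hypothetical 2 x 3 table
table = [
    [30, 10, 10],
    [10, 30, 10],
]
print(lambda_errors(table))     # (50, 30)
print(symmetric_lambda(table))  # 40 / 110, about 0.364
```

Transposing the table leaves the result unchanged, which is what makes the pooled measure symmetrical.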

Uncertainty coefficient

Entropy

The uncertainty coefficient measures the uncertainty of the information with the help of entropy. If $f_k$ is the relative frequency of occurrence of category $k$, then the entropy or uncertainty is defined as

$$U = -\sum_k f_k \log f_k.$$

The uncertainty is zero if $f_k = 0$ for all possible categories but one. Predicting which category a variable will take on is then trivial. If $f_k = 1/K$ for all $K$ categories (uniform distribution), then the uncertainty is $U = \log K$ and thus maximal.
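The two limiting cases can be illustrated with a short Python sketch. It works on absolute frequencies and uses the natural logarithm, which is an assumption since the source does not fix the base; the base cancels in the uncertainty coefficient anyway.

```python
import math


def entropy(counts):
    """Entropy of a frequency distribution given as absolute frequencies.

    Uses f * log(1 / f), which equals -f * log(f); empty categories
    contribute nothing (0 * log 0 is taken as 0).
    """
    n = sum(counts)
    return sum((h / n) * math.log(n / h) for h in counts if h > 0)


print(entropy([10, 0, 0]))                # 0.0: only one category occurs
print(entropy([5, 5, 5, 5]), math.log(4)) # uniform case matches log K
print(entropy([7, 2, 1]))                 # something in between
```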

Asymmetric uncertainty coefficient

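The measure of error without knowledge of the relationship is therefore the uncertainty $U_Y$ of the dependent variable:

$$E_1 = U_Y = -\sum_k \frac{h_{k,\bullet}}{n} \log\left(\frac{h_{k,\bullet}}{n}\right).$$

The measure of error with knowledge of the relationship is the weighted sum of the uncertainties within each category of the independent variable:

$$E_2 = \sum_j \frac{h_{\bullet,j}}{n} \underbrace{\left[ -\sum_k \frac{h_{k,j}}{h_{\bullet,j}} \log\left(\frac{h_{k,j}}{h_{\bullet,j}}\right) \right]}_{\text{uncertainty in category } j \text{ of the independent variable}}.$$

This expression can also be written as

$$E_2 = U_{XY} - U_X = \left[ -\sum_{j,k} \frac{h_{k,j}}{n} \log\left(\frac{h_{k,j}}{n}\right) \right] - \left[ -\sum_j \frac{h_{\bullet,j}}{n} \log\left(\frac{h_{\bullet,j}}{n}\right) \right]$$

with $U_{XY}$ the uncertainty based on the joint distribution of $X$ and $Y$ and $U_X$ the uncertainty of the independent variable $X$.

The uncertainty coefficient then results as

$$U = \frac{E_1 - E_2}{E_1} = \frac{U_X + U_Y - U_{XY}}{U_Y}.$$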
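The following Python sketch computes the error with knowledge of the relationship in both forms, as a weighted sum of within-category uncertainties and as the difference between joint and marginal uncertainty, and then the asymmetric coefficient. The table layout (dependent variable in rows), the function names and the counts are assumptions for illustration.

```python
import math


def entropy(counts):
    """Entropy (natural log) of absolute frequencies; 0 * log 0 is taken as 0."""
    n = sum(counts)
    return sum((h / n) * math.log(n / h) for h in counts if h > 0)


def e2_weighted(table):
    """E2 as weighted sum of the uncertainties within each X-category (column)."""
    n = sum(sum(row) for row in table)
    return sum(sum(col) / n * entropy(col) for col in zip(*table) if sum(col) > 0)


def e2_joint(table):
    """E2 written as joint uncertainty minus uncertainty of X."""
    u_xy = entropy([h for row in table for h in row])
    u_x = entropy([sum(col) for col in zip(*table)])
    return u_xy - u_x


def uncertainty_coefficient(table):
    """Asymmetric uncertainty coefficient for Y (rows) given X (columns)."""
    e1 = entropy([sum(row) for row in table])  # uncertainty of Y
    return (e1 - e2_weighted(table)) / e1


# Hypothetical 2 x 3 table
table = [
    [30, 10, 10],
    [10, 30, 10],
]
print(e2_weighted(table), e2_joint(table))  # the two forms agree up to rounding
print(uncertainty_coefficient(table))
```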

Symmetrical uncertainty coefficient

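For the uncertainty coefficient, the prediction errors

- $E_1^Y$ and $E_2^Y$, if the dependent variable is $Y$, and

- $E_1^X$ and $E_2^X$, if the dependent variable is $X$,

can be calculated. The symmetrical uncertainty coefficient results, as for Goodman and Kruskal's $\lambda$ and $\tau$, as

$$U = \frac{(E_1^X - E_2^X) + (E_1^Y - E_2^Y)}{E_1^X + E_1^Y} = 2\, \frac{U_X + U_Y - U_{XY}}{U_X + U_Y}.$$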
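In terms of entropies, the error reduction is the same in both directions, namely the uncertainty of $X$ plus the uncertainty of $Y$ minus their joint uncertainty, so the symmetrical coefficient can be sketched directly from three entropies. The function names and the counts below are hypothetical.

```python
import math


def entropy(counts):
    """Entropy (natural log) of absolute frequencies; 0 * log 0 is taken as 0."""
    n = sum(counts)
    return sum((h / n) * math.log(n / h) for h in counts if h > 0)


def symmetric_uncertainty(table):
    """Symmetrical uncertainty coefficient of a contingency table."""
    u_y = entropy([sum(row) for row in table])         # row variable
    u_x = entropy([sum(col) for col in zip(*table)])   # column variable
    u_xy = entropy([h for row in table for h in row])  # joint distribution
    reduction = u_x + u_y - u_xy  # equals E1 - E2 in either direction
    return 2 * reduction / (u_x + u_y)


# Hypothetical 2 x 3 table and its transpose
table = [
    [30, 10, 10],
    [10, 30, 10],
]
transposed = [list(col) for col in zip(*table)]
print(symmetric_uncertainty(table))
print(symmetric_uncertainty(transposed))  # same value up to rounding
```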

Goodman and Kruskal's γ

Let $C$ be the number of concordant pairs ($x_i < x_j$ and $y_i < y_j$) and $D$ the number of discordant pairs ($x_i < x_j$ and $y_i > y_j$). If there are no ties and the number of observations is $n$, then $C + D = n(n-1)/2$.

Without knowledge of the relationship, nothing can be said about whether a pair is concordant or discordant. We therefore predict a concordant or a discordant pair with probability 0.5 each. The total error over all possible pairs is

$$E_1 = 0.5\,(C + D).$$

With knowledge of the relationship, concordance is always predicted if $C \ge D$, and discordance is always predicted if $C < D$. The error is

$$E_2 = \min(C, D)$$

and it follows that

$$\frac{E_1 - E_2}{E_1} = \frac{0.5\,(C + D) - \min(C, D)}{0.5\,(C + D)} = \frac{|C - D|}{C + D} = |\gamma|.$$

The absolute value of Goodman and Kruskal's $\gamma$ is thus a symmetrical proportional error reduction measure.
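The pair-counting argument can be sketched in Python as below. Tied pairs are skipped, since they are neither concordant nor discordant; the function name and the data are made up for illustration.

```python
from itertools import combinations


def gamma_as_pre(x, y):
    """Count concordant and discordant pairs and return (C, D, gamma, PRE)."""
    c = d = 0
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        s = (xi - xj) * (yi - yj)
        if s > 0:
            c += 1  # both variables order the pair the same way
        elif s < 0:
            d += 1  # the variables order the pair in opposite ways
        # s == 0: tie in x or y, pair is ignored
    e1 = 0.5 * (c + d)  # guessing each pair with probability 0.5
    e2 = min(c, d)      # always predicting the more frequent type
    gamma = (c - d) / (c + d)
    return c, d, gamma, (e1 - e2) / e1


# Hypothetical ordinal data without ties
x = [1, 2, 3, 4, 5]
y = [1, 3, 2, 5, 4]
print(gamma_as_pre(x, y))  # (8, 2, 0.6, 0.6)
```

With $n = 5$ there are $10$ pairs, of which $8$ are concordant and $2$ discordant, so the error reduction equals the absolute value of γ.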

η²

As with the coefficient of determination, the predicted value for the dependent metric variable without knowledge of the relationship is $\bar{y}$, and the prediction error is

$$E_1 = \sum_i (y_i - \bar{y})^2.$$

If it is known to which of the groups of the nominal or ordinal independent variable the observation belongs, the predicted value is the group mean $\bar{y}_j$. The prediction error results as

$$E_2 = \sum_j \sum_i \delta_{ij}\, (y_i - \bar{y}_j)^2$$

with $\delta_{ij} = 1$ if observation $i$ belongs to group $j$ and zero otherwise. This results in

$$\eta^2 = \frac{E_1 - E_2}{E_1}.$$

The roles of the dependent and independent variables cannot be reversed because they have different scale levels. Therefore there is only an asymmetric measure.
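A minimal Python sketch of η² as an error reduction measure follows: the baseline error uses the overall mean, the second error uses the mean of each observation's group. The function name, group labels and values are hypothetical.

```python
def eta_squared(groups, y):
    """eta^2 = (E1 - E2) / E1 for a metric y and a nominal grouping variable."""
    n = len(y)
    y_bar = sum(y) / n
    # E1: squared deviations from the overall mean
    e1 = sum((yi - y_bar) ** 2 for yi in y)
    # Group means of y
    sums, counts = {}, {}
    for g, yi in zip(groups, y):
        sums[g] = sums.get(g, 0.0) + yi
        counts[g] = counts.get(g, 0) + 1
    means = {g: sums[g] / counts[g] for g in sums}
    # E2: squared deviations from the mean of the observation's own group
    e2 = sum((yi - means[g]) ** 2 for g, yi in zip(groups, y))
    return (e1 - e2) / e1


# Hypothetical data: three groups with two observations each
groups = ["a", "a", "b", "b", "c", "c"]
y = [1.0, 3.0, 4.0, 6.0, 8.0, 10.0]
print(eta_squared(groups, y))  # 74/83, about 0.89
```

Here $E_2 = 6$ because every observation lies exactly one unit from its group mean, while $E_1 = 166/3$.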

Cohen (1988) gives as a rule of thumb:

- no relationship,

- weak relationship,

- medium relationship and

- strong relationship.

Example

In the example, the error in predicting net income can be reduced by just under 10% if the social class is known. The second $\eta^2$ value arises if the roles of the variables are swapped, which is meaningless here. This value must therefore be ignored.

Literature

- Y. M. M. Bishop, S. E. Fienberg, P. W. Holland (1975). Discrete Multivariate Analysis: Theory and Practice. Cambridge, MA: MIT Press.

- L. C. Freeman (1986). Order-based Statistics and Monotonicity: A Family of Ordinal Measures of Association. Journal of Mathematical Sociology, 12 (1), pp. 49-68.

- J. Bortz (2005). Statistics for human and social scientists (6th edition). Springer Verlag.

- B. Rönz (2001). Script "Computer-assisted Statistics II". Humboldt University Berlin, Chair for Statistics.

References

- J. Cohen (1988). Statistical Power Analysis for the Behavioral Sciences. Erlbaum, Hillsdale.

- L. A. Goodman, W. H. Kruskal (1954). Measures of association for cross classifications. Journal of the American Statistical Association, 49, pp. 732-764.

- H. Theil (1972). Statistical Decomposition Analysis. Amsterdam: North-Holland Publishing Company (discusses the uncertainty coefficient).
