Stationary distribution

An invariant distribution or a stationary distribution is a term from the theory of Markov chains ; these in turn are special stochastic processes and thus objects of investigation of stochastics .

A Markov chain has a stationary distribution if and only if there is a starting distribution that does not change over time.

Stationary distributions are not to be confused with the stationarity of a stochastic process or stationary transition probabilities .

definition

Given is a homogeneous Markov chain in discrete time with at most a countable state space . Then a distribution on stationary distribution is called if

holds for all , where is the transition probability from state to state (which is independent of the point in time ). In the case of a finite state space, this corresponds

- ,

where is the transition matrix and a probability vector is written as a row vector. In this case, the stationary distributions are precisely the left eigenvectors of the transition matrix for eigenvalue 1, which were normalized with respect to the sum norm .

Existence and uniqueness

In general, stationary distributions do not have to exist. An example of this are transient Markov chains . These never have stationary distributions. Conversely, it can also be shown that irreducible Markov chains have at most a stationary distribution. The following statements apply to the uniqueness of the stationary distributions:

- An irreducible Markov chain has a stationary distribution if and only if it is positive recurrent . The distribution is then given by

- .

- Here, the return period in the state , if started in this.

- In the case of a finite state space, the irreducibility of the Markov chain and the irreducibility of the transition matrix are equivalent. From this it follows immediately with Perron-Frobenius' theorem that a clear invariant distribution (or left-hand eigenvector) exists and thus that the Markov chain is positive-recurrent. Thus positive recurrence follows from irreducibility.

- Accordingly, an irreducible Markov chain with finite state space always has a stationary distribution. This corresponds exactly to the normalized left eigenvector for eigenvalue 1 of the transition matrix or the standardized eigenvector of the transposed transition matrix for eigenvalue 1.

- If a distribution satisfies the detailed balance equation , then this distribution is a stationary distribution.

convergence

An irreducible, positively recurrent Markov chain converges to a stationary distribution if and only if it is aperiodic. Convergence here means that

holds for every start distribution of and every state .

If the state space is finite, then the lines of converge towards the stationary distribution.

At finite state spaces often finds the convergence criteria that must exist so that the transition matrix applies that is. This corresponds to checking the matrix for aperiodicity and irreducibility.

If the aperiodicity is dispensed with, the following statement can be made: If a Markov chain is irreducible and recurrent, then

- .

The mean value of the probability of occurrence thus converges component-wise to the stationary distribution.

More generally, if the Markov chain is not irreducible, it breaks down into several subsets of states that communicate with each other and all have the same period . Is such a set with an arbitrary but fixed state from this set. Then each state can be reached from this set in a clear number of steps from. If the subset is now recurrent, then the following applies

- .

Convergence speed

For some applications it is particularly interesting how quickly the stationary distribution is achieved. It can be shown that if for holds and all eigenvalues are simple, then the following estimate holds:

For any start distribution . The most important influencing factor on the speed of convergence is therefore the second largest eigenvalue in terms of absolute value.

Comparable statements can still be made for weaker prerequisites for the transition matrix, but correction terms for the Jordan blocks must be introduced.

Examples

Finite state space

existence

Let us consider the Markov chain with the following transition matrix:

This Markov chain has two absorbing quantities : and . Since these states can no longer be left, they have an influence on the existence of the stationary distributions. This can also be seen in the normalized eigenvectors. These are and . The stationary distribution is therefore not unambiguous here.

Uniqueness

Let us consider the Markov chain with the transition graph shown on the right. For the sake of simplicity, we set all transition probabilities to 0.5. The Markov chain is irreducible, as one can move from any state to any other state in a maximum of two steps. But it is also periodic, since a return to the starting point is only possible at even times. The also irreducible transition matrix is then

Perron-Frobenius' theorem guarantees the uniqueness of the eigenvector, since the matrix is also double-stochastic , it has the stationary distribution . However, the matrix powers do not converge, in particular is and

But if we now consider the mean values, they converge to the corresponding component of the stationary distribution:

- .

This follows the transition matrix above with the help of the corresponding entries (in the example the first row and column).

convergence

Consider the Markov chain shown on the right with the transition probabilities as given in the transition matrix:

It then applies

Thus the Markov chain is irreducible (and thus also positively recurrent), aperiodic and accordingly converges to a stationary distribution. An eigenvector of to the eigenvalue 1 is , normalization to 1 with respect to the sum norm delivers as a unique invariant distribution

- .

If you calculate the matrix powers, then if two decimal places already match the exact solution, if there are more than four decimal places. Conversely, from the stationary distribution calculated as the left eigenvector, one can immediately specify the limit value of the matrix powers in the event of convergence, since these converge line by line to the stationary distribution:

The expected return time can also be calculated from the stationary distribution; this is exactly the reciprocal of the corresponding component of the distribution. So here is the average time from starting in 1 to returning

- .

Variant of the random walk

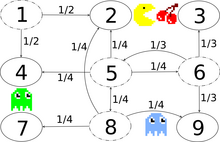

The game character Pac-Man eats small balls and cherries in a maze and is followed by ghosts. For the sake of simplicity, the game world in this example is a small grid and the monsters move purely at random. Every horizontally and vertically adjacent playing field is the next location of the ghost with the same probability, with the exception of a secret passage between the states and (see transition graphs on the right).

The state space is { }. In the transition matrix that now follows , entries have probably been removed in order to obtain a better overview:

This Markov chain is irreducible because the ghosts can move from any state to any state in a finite time. Thanks to the secret passage, only a maximum of three status changes are necessary. Through the secret passage, the Markov chain is also aperiodic, because the monsters can change from any state to any state in both an even and an odd number of state changes. Without the secret passage, the Markov chain would be periodic, because then a transition from an even to an even state or from an odd to an odd state would only be possible with an even number of changes of state.

Because of the irreducibility and aperiodicity there is exactly one stable equilibrium distribution which the Markov chain assumes after an infinitely long time. The probabilities of staying in the individual states (almost) no longer change after a long time. The stationary distribution can be naively determined by entering the equation

a large one is substituted for any starting distribution because the matrix powers converge as in the example above. To compute an analytical solution is the linear system of equations

to be resolved, under the secondary condition of a line total of . Substituting the naive solution into this equation serves as a control. The above equation is equivalent to

- .

The transition matrix is therefore transposed and the identity matrix subtracted. The desired vector of the state probabilities is now a column vector. The solution of the linear system of equations gives

- %.

The ghosts are most often in the center, less often on the edge and least often in the corner. The marginal states and , which are visited just as often on average as the central playing field are an exception .

Countably infinite state space

Let us consider a Markov chain on the state space with transition probabilities

So there is a certain probability that you will rise to a number one higher, if this does not happen you will start again at zero. The following applies:

- all states communicate with each other, since every state can reach 0 and 0 reaches every state. Accordingly, the Markov chain is irreducible.

- the return times are 0 and therefore the 0 has the period 1, so it is aperiodic. Because of the irreducibility, the entire Markov chain is then also aperiodic.

- The expected return time to 0 is given by

Thus the 0 is positively recurrent and, due to the irreducibility of the Markov chain, the entire chain is also positively recurrent.

The chain thus converges to an invariant distribution that is independent of the start distribution. Since we already know that , we can use the definition as a recursion equation and conclude that . The exception is that it is possible to calculate the stationary distribution directly.

use

Stationary distributions have numerous practical uses. If one imagines the Markov chain as a point moving randomly through a graph, then the i-th component of the stationary distribution is exactly the relative probability of encountering it in state i. An example of this is the Ehrenfest model . It models the diffusion of molecules through a membrane. The steady state is exactly the concentration when a diffusion equilibrium has been established. Another example is the calculation of the PageRank using the random surfer model. Here the Markov chain models the behavior of an Internet user: there is a certain probability that he will select one of the links on the homepage on which he is located, otherwise he will select another homepage via browser. The stationary distribution is then the relative probability that the random surfer hits a certain page. This is then a measure of the importance of this page and also the standardized PageRank of this page.

In addition, stationary distributions play an important role in the Markov Chain Monte Carlo process. They are exactly the distributions that you want to sample.

literature

- Ulrich Krengel : Introduction to probability theory and statistics . 8th edition, Vieweg, 2005. ISBN 978-3-8348-0063-3

- Hans-Otto Georgii: Stochastics: Introduction to Probability Theory and Statistics , 4th edition, de Gruyter, 2009. ISBN 978-3-11-021526-7

- Christian Hesse: Applied probability theory : a well-founded introduction with over 500 realistic examples and tasks, Vieweg, Braunschweig / Wiesbaden 2003, ISBN 978-3-528-03183-1 .

- Achim Klenke: Probability Theory. 2nd Edition. Springer-Verlag, Berlin Heidelberg 2008, ISBN 978-3-540-76317-8