Apache Kafka

| Apache Kafka: basic data | |
|---|---|
| Maintainer | Apache Software Foundation |
| Developer | Apache Software Foundation, LinkedIn |
| Release date | April 12, 2014 |
| Current version | 2.6.0 (August 3, 2020) |
| Operating system | Platform-independent |
| Programming language | Scala, Java |
| Category | Stream processor |
| License | Apache License, version 2.0 |
| Website | kafka.apache.org |

Apache Kafka is free software from the Apache Software Foundation that is used primarily for processing data streams. It is designed to store and process data streams and provides an interface for importing data streams from, and exporting them to, third-party systems. Its core architecture is a distributed transaction log.

Apache Kafka was originally developed at LinkedIn and has been part of the Apache Software Foundation since 2012. In 2014, the original developers left LinkedIn to found the company Confluent, which focuses on the further development of Apache Kafka. Kafka is a distributed system that is scalable and fault-tolerant, which makes it suitable for big data applications.

Functionality

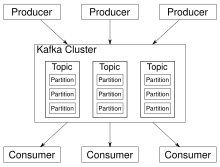

The core of the system is a cluster of computers consisting of so-called brokers. Brokers store key-value messages, together with a timestamp, in topics. Topics are in turn divided into partitions, which are distributed and replicated across the Kafka cluster. Within a partition, messages are stored in the order in which they were written. Read and write access avoids unnecessary copies through main memory: with zero copy, data is handed from disk to the network adapter without passing through the application's own buffers, so fewer copy operations are needed before messages are written or sent.
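
The relationship between topics, partitions, and ordering can be illustrated with a minimal in-memory sketch. It is not Kafka's implementation: the `Topic` and `Message` classes are hypothetical, CRC32 merely stands in for the hash function of a real partitioner, and replication across brokers is left out entirely.

```python
import time
import zlib
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Message:
    key: bytes
    value: bytes
    timestamp: float


@dataclass
class Topic:
    """Hypothetical model: a topic split into append-only partitions."""
    num_partitions: int
    partitions: List[List[Message]] = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # Assumption: CRC32 is a stand-in for a real partitioner's hash.
        return zlib.crc32(key) % self.num_partitions

    def append(self, key: bytes, value: bytes,
               timestamp: Optional[float] = None) -> Tuple[int, int]:
        """Append a message; return (partition, position in that partition)."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        stamp = time.time() if timestamp is None else timestamp
        log.append(Message(key, value, stamp))
        return partition, len(log) - 1


topic = Topic(num_partitions=3)
first = topic.append(b"user-1", b"login")
second = topic.append(b"user-1", b"logout")
```

Because both messages share a key, they land in the same partition and keep their write order there. Messages with different keys may end up in different partitions, and no ordering is implied between partitions.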

Applications that write data to a Kafka cluster are called producers; applications that read data from it are called consumers. Kafka Streams can be used for data stream processing: it is a Java library that reads data from Kafka, processes it, and writes the results back to Kafka. Kafka can also be used with other stream processing systems. Since version 0.11.0.0, transactional writes are supported, which makes it possible to guarantee that messages are processed exactly once when an application uses Kafka Streams (exactly-once processing).

Kafka supports two types of topics: "normal" and "compacted". Normal topics guarantee that messages are kept for a configurable period of time or until a configurable storage size is exceeded. Once messages are older than the configured retention time, or once a partition exceeds its size limit, Kafka may delete old messages to free up disk space. By default, Kafka retains messages for 7 days, but messages can also be retained indefinitely. Compacted topics, by contrast, are not subject to time or space limits. Instead, newer messages are interpreted as updates to older messages with the same key, which guarantees that the latest message per key is never deleted. Users can nevertheless delete messages explicitly by writing a special message (a so-called tombstone) with a null value for the corresponding key.
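
The difference between the two cleanup strategies can be sketched in a few lines. This is a simplified model under stated assumptions: `Record`, `apply_retention`, and `compact` are hypothetical names, the log is a plain list, and tombstones are dropped immediately, whereas a real broker cleans up in the background and on its own schedule.

```python
from typing import Dict, List, NamedTuple, Optional


class Record(NamedTuple):
    key: str
    value: Optional[str]  # None marks a tombstone
    timestamp: float      # seconds


SEVEN_DAYS = 7 * 24 * 60 * 60  # default retention mentioned in the text


def apply_retention(log: List[Record], now: float,
                    retention_seconds: float = SEVEN_DAYS) -> List[Record]:
    """Normal topic: keep only records no older than the retention time."""
    return [r for r in log if now - r.timestamp <= retention_seconds]


def compact(log: List[Record]) -> List[Record]:
    """Compacted topic: keep the latest record per key, drop tombstoned keys."""
    latest: Dict[str, int] = {}
    for index, record in enumerate(log):
        latest[record.key] = index  # later records overwrite earlier ones
    return [
        record
        for index, record in enumerate(log)
        if latest[record.key] == index and record.value is not None
    ]


log = [
    Record("a", "1", 0.0),
    Record("b", "1", 10.0),
    Record("a", "2", 20.0),
    Record("b", None, 30.0),  # tombstone: deletes key "b"
]
compacted = compact(log)
retained = apply_retention(log, now=SEVEN_DAYS + 15.0)
```

In this example, compaction keeps only the newest value for key `a` and removes key `b`, while time-based retention ignores keys and simply drops the two records that have aged past seven days.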

Kafka offers four main interfaces:

- Producer API - For applications that want to write data to a Kafka cluster.

- Consumer API - For applications that want to read data from a Kafka cluster.

- Connect API - import / export interface for connecting third-party systems.

- Streams API - Java library for stream processing.

The consumer and producer interfaces are based on the Kafka message protocol and can be regarded as its reference implementation in Java. The protocol itself is binary, so consumer and producer clients can be developed in any programming language; Kafka is therefore not tied to the JVM ecosystem. A list of available non-Java clients is maintained in the Apache Kafka wiki.
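
To give an impression of why a binary protocol is easy to implement in other languages, the following sketch builds the bytes of a size-prefixed request using only the standard library. The field layout (16-bit API key, 16-bit API version, 32-bit correlation ID, length-prefixed client ID, all big-endian, wrapped in a 32-bit length prefix) follows the classic request header as described in the Kafka protocol guide; it is an illustration that should be checked against that guide, not a working client. The helper names and the client ID `demo` are made up for this example.

```python
import struct

API_VERSIONS = 18  # API key of the ApiVersions request, per the protocol guide


def encode_request_header(api_key: int, api_version: int,
                          correlation_id: int, client_id: str) -> bytes:
    """Encode a classic request header in big-endian byte order."""
    client = client_id.encode("utf-8")
    # int16 api_key, int16 api_version, int32 correlation_id, int16 string length
    fixed = struct.pack(">hhih", api_key, api_version, correlation_id, len(client))
    return fixed + client


def frame(payload: bytes) -> bytes:
    """Prefix a payload with its length as a 32-bit big-endian integer."""
    return struct.pack(">i", len(payload)) + payload


# Version 0 of this request is assumed to carry no body after the header.
request = frame(encode_request_header(API_VERSIONS, 0, 1, "demo"))
```

A client in any language only needs to produce and parse byte sequences like this one over a TCP connection, which is what makes non-JVM clients possible.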

Kafka Connect API

Kafka Connect (or the Connect API) provides an interface for importing data from and exporting data to third-party systems. It has been available since version 0.9.0.0 and builds on the consumer and producer APIs. Kafka Connect runs so-called connectors, which handle the actual communication with the third-party system. The Connect API defines the programming interfaces that a connector must implement. Many free and commercial connectors are already available. Apache Kafka itself does not ship production-ready connectors.
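
The division of labor between the framework and a connector can be sketched as follows. The real Connect API is a set of Java interfaces; the names used here (`SourceConnector`, `ListSource`, `run_source`) are hypothetical, and a Python list stands in for a Kafka topic.

```python
from typing import Iterable, List, Protocol, Tuple

KeyValue = Tuple[str, str]


class SourceConnector(Protocol):
    """Hypothetical interface that a source connector must implement."""

    def poll(self) -> List[KeyValue]:
        """Return the next batch of records, or an empty list when drained."""
        ...


class ListSource:
    """Toy connector that reads from an in-memory 'third-party system'."""

    def __init__(self, rows: Iterable[KeyValue], batch_size: int = 2) -> None:
        self._rows = list(rows)
        self._batch_size = batch_size

    def poll(self) -> List[KeyValue]:
        batch = self._rows[: self._batch_size]
        self._rows = self._rows[self._batch_size:]
        return batch


def run_source(connector: SourceConnector, topic: List[KeyValue]) -> None:
    """Framework side: pull batches and append them to the topic until drained."""
    while True:
        batch = connector.poll()
        if not batch:
            break
        topic.extend(batch)


topic: List[KeyValue] = []
run_source(ListSource([("k1", "v1"), ("k2", "v2"), ("k3", "v3")]), topic)
```

The connector only knows how to talk to the external system; writing to Kafka is left to the surrounding framework, which is why the same runtime can host many different connectors.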

Kafka Streams API

Kafka Streams (or the Streams API) is a Java library for data stream processing and has been available since version 0.10.0.0. The library makes it possible to develop stateful stream processing programs that are scalable, elastic, and fault-tolerant. For this purpose, Kafka Streams offers its own DSL with operators for filtering, mapping, and grouping. Time windows, joins, and tables are also supported. In addition to the DSL, custom operators can be implemented with the Processor API, and these operators can also be used within the DSL. RocksDB is used to support stateful operators: operator state is kept locally, and state that is larger than the available main memory can be spilled to disk as RocksDB data. To store the application state without loss, all state changes are also logged to a Kafka topic. After a failure, all state transitions can be read from this topic to restore the state.
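
The idea of restoring operator state from a log of state changes can be shown with a small model. This is not the Kafka Streams API: `CountingOperator` is a hypothetical class, a dictionary stands in for the local RocksDB store, and a list stands in for the Kafka topic that records the changes.

```python
from typing import Dict, List, Tuple

Change = Tuple[str, int]


class CountingOperator:
    """Hypothetical stateful operator that counts messages per key."""

    def __init__(self, changelog: List[Change]) -> None:
        self.changelog = changelog       # stand-in for a Kafka topic
        self.state: Dict[str, int] = {}  # stand-in for the local store

    def process(self, key: str) -> int:
        """Update the count for a key and log the state change."""
        count = self.state.get(key, 0) + 1
        self.state[key] = count
        self.changelog.append((key, count))
        return count

    @classmethod
    def restore(cls, changelog: List[Change]) -> "CountingOperator":
        """Rebuild the local state by replaying all logged changes in order."""
        operator = cls(changelog)
        for key, count in changelog:
            operator.state[key] = count
        return operator


changelog: List[Change] = []
operator = CountingOperator(changelog)
for key in ["a", "b", "a"]:
    operator.process(key)

# Simulate a failure: the local state is lost, the changelog survives.
restored = CountingOperator.restore(changelog)
```

After the replay, `restored` holds the same counts as the failed instance and can continue processing where it left off. Since only the latest change per key matters for the restore, such a log is a natural fit for a compacted topic.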

Kafka operator for Kubernetes

In August 2019, an operator for building a cloud-native Kafka platform on Kubernetes was published. It automates the provisioning of pods for the components of the Kafka ecosystem (ZooKeeper, Kafka, Connect, KSQL, REST Proxy), the monitoring of SLAs through Confluent Control Center or Prometheus, the elastic scaling of Kafka, the handling of failures, and rolling updates.

Version compatibility

Up to version 0.9.0, Kafka brokers are backward compatible with older client versions. As of version 0.10.0.0, brokers are also forward compatible and can communicate with newer clients. For the Streams API, this compatibility begins only with version 0.10.1.0.

Literature

- Ted Dunning, Ellen MD Friedman: Streaming Architecture. New Designs Using Apache Kafka and MapR. O'Reilly Media, Sebastopol 2016, ISBN 978-1-4919-5392-1.

- Neha Narkhede, Gwen Shapira, Todd Palino: Kafka: The Definitive Guide. Real-time data and stream processing at scale. O'Reilly Media, Sebastopol 2017, ISBN 978-1-4919-3616-0.

- William P. Bejeck Jr.: Kafka Streams in Action. Real-time apps and microservices with the Kafka Streams API. Manning, Shelter Island 2018, ISBN 978-1-61729-447-1.

