Gradient method

The gradient method is used in numerics to solve general optimization problems. In doing so, one proceeds (using the example of a minimization problem) from a starting point along a direction of descent until no further numerical improvement is achieved. If the negative gradient is selected as the direction of descent, i.e. the direction of the locally steepest descent, the method of the steepest descent is obtained . Sometimes the terms gradient method and steepest descent method are used interchangeably. In general, the gradient method denotes an optimization method in which the direction of descent is obtained from gradient information, that is, it is not necessarily limited to the negative gradient.

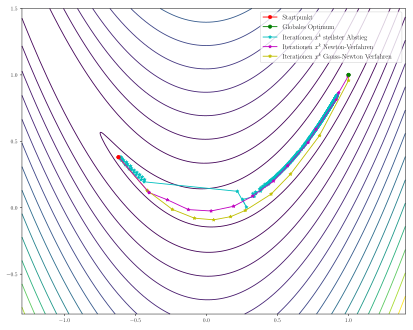

The steepest descent procedure often converges very slowly as it approaches the stationary point with a strong zigzag course. Other methods for calculating the direction of descent sometimes achieve significantly better convergence speeds , for example the conjugate gradient method is suitable for solving symmetrically positive definite linear systems of equations . The gradient descent is connected to the hill climbing ( hill climbing ) is used.

The optimization problem

The gradient method can be used to minimize a real-valued, differentiable function :

This is a problem of optimization without constraints, also called an unrestricted optimization problem .

The procedure

Starting from a starting point, the gradient method generates a sequence of points according to the iteration rule

where is a positive step size and a direction of descent . Both and in each iteration step are determined in such a way that the sequence converges to a stationary point of .

Determine the direction of descent

A direction of descent in the point is a vector that

Fulfills. Intuitively, this means that the angle between and is greater than 90 °. Since the gradient points in the direction of the steepest rise, there is a direction along which the function value decreases.

Many gradient methods use it to calculate the direction of descent

where is a positive definite matrix. In this case, the condition for the direction of descent is

and is always fulfilled thanks to the positive definiteness .

With the choice of the matrix the following algorithms are obtained:

- , where is the identity matrix , gives the steepest descent method . The Absteigsrichtung in this case is just the negative gradient, .

- , where so positive is definite, is a diagonally scaled steepest descent . They are often chosen as an approximation of the inverse of the 2nd derivative, that is .

- , the inverse Hesse matrix , gives Newton's method for solving nonlinear minimization problems.

- Since the calculation of the Hessian matrix is often complex, there is a class of algorithms which use an approximation . Such methods are called quasi-Newton methods ; there are different ways in which the approximation is calculated. An important representative from the class of quasi-Newton methods is the BFGS algorithm .

- If the optimization problem is given in the special form , i.e. as the sum of squares of functions, one obtains the Gauss-Newton method with , where the Jacobi matrix of is in the point .

Determine the step size

The determination of the step size is an important part of the gradient method, which can have a great influence on the convergence. Starting from iteration considering the value of along the line , that is . In this context, one often speaks of a line search . The ideal choice would be to calculate the step size as the value that minimizes the function , i.e. the one-dimensional problem

to solve. This is referred to as an exact line search and is rarely used in this form in practice, since even for simple optimization problems the exact determination of the step size is very computationally expensive.

As an alternative to the exact line search, the requirements are relaxed and the function value is reduced “sufficiently” with each iteration step. This is also known as an inexact line search . The simplest possibility is to reduce the step size starting from a start value (e.g. ) until it is reached. This method often works satisfactorily in practice, but it can be shown that for some pathological functions this line search reduces the function value in each step, but the sequence does not converge to a stationary point.

Armijo condition

The Armijo condition formalizes the concept "sufficient" in the required reduction of the function value. The condition is modified to

with . The Armijo condition circumvents the convergence problems from the previous simple condition by requiring that the reduction is at least proportional to the step size and the direction derivative , with a proportionality constant . In practice, very small values are often used, e.g. B. .

Backtracking line search

The Armijo condition always applies when the step size is sufficiently small and can thus lead to a standstill of the gradient process - the step is so small that no more significant progress is made. A simple combination of repeated reduction of the step size and the Armijo condition is the backtracking line search. It ensures that the step size is small enough to meet the Armijo condition, but on the other hand not too small. In pseudocode:

Wähle Startwert für , z. B. , wähle Konstanten

while end

Setze

The backtracking line search repeatedly reduces the step size by the factor until the Armijo condition is met. It is guaranteed to terminate after a finite number of steps and is often used in practice because of its simplicity.

convergence

In general, the gradient method converges neither to a global nor to a local minimum. Only the convergence to a stationary point , i.e. a point with , can be guaranteed . If one restricts the class of objective functions to convex functions , stronger guarantees are possible, see convex optimization .

Convergence speed

For the general case, a statement can neither be made about the speed of convergence of the sequence nor about the speed of convergence of the sequence . If is a Lipschitz constant of , one can show that the norm of the gradients converges towards 0 with the rate , where is a positive constant.

example

The Rosenbrock function

is often used as a test for optimization methods because it is challenging because of the narrow and shallow valley in which iterative methods can only take small steps. The constants are usually chosen with , the global optimum in this case is with the function value .

The gradient and the Hessian matrix result as

such as

- .

This allows the algorithms of the steepest descent and Newton's method to be implemented directly. In order to apply the Gauss-Newton method , the Rosenbrock function must first be brought into the form “sum of squares of functions”. This is explained in detail on the page on the Gauss-Newton method .

For line search backtracking is used in all procedures with the following parameters used: start value , , . The starting point is chosen.

Even after 1000 iterations, the process of the steepest descent does not find the global optimum and is stuck in the flat valley, where only very small steps are possible. In contrast, both the Newton method and the Gauss-Newton algorithm find the global optimum in just a few iterations.

See also

literature

- Yurii Nesterov: Introductory Lectures on Convex Optimization: A Basic Course. Springer Science & Business Media, 2003, ISBN 1-4419-8853-X .

- Dimitri P. Bertsekas: Nonlinear Programming. 2nd Edition. Athena Scientific, 1995, ISBN 1-886529-14-0 .

- Jorge Nocedal, Stephen Wright: Numerical Optimization. Springer Science & Business Media, 2000, ISBN 0-387-98793-2 .

- Andreas Meister: Numerics of linear systems of equations. 2nd Edition. Vieweg, Wiesbaden 2005, ISBN 3-528-13135-7 .

Individual evidence

- ↑ Dimitri P. Bertsekas: Nonlinear programming . 3. Edition. Athena Scientific, 2016, ISBN 978-1-886529-05-2 .