Skinner box

A Skinner box (sometimes also called a problem box or puzzle box) is a cage with minimal sensory stimulation in which a test animal can learn a new behavior in a standardized and largely automated manner. The name refers to Burrhus Frederic Skinner, who made the device widely known. It is a variant of the puzzle box (also called a problem or test cage) developed by Edward Lee Thorndike. The name Skinner originally chose was operant conditioning chamber; only later did the eponym Skinner box become established.

Some proponents of behaviorism hold that an animal's behavior can be fully shaped by rewarding desirable behavior (and, less reliably, by punishing undesirable behavior), that is, through operant conditioning. Conditioning behavior in a Skinner box (also called behavior shaping) is considered a particularly efficient and, moreover, objective method, because the test animal can display a wide variety of behaviors without overly unnatural restrictions and the experimenter does not intervene to steer its behavior (magazine training).

How it works

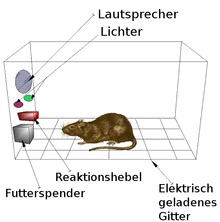

Typically, a Skinner box consists of an otherwise empty cage with smooth walls, fitted with a small lever (e.g. for rats) or a small pecking key (e.g. for pigeons), a chute that dispenses food and often a small light. The lever or pecking key is connected to a device that records both the number and the temporal sequence of lever presses or pecks. The experimental procedure is very simple: a hungry animal that has never been in a Skinner box is placed inside, and the experimenter waits to see what happens.

In such learning experiments, the components of the apparatus can be wired together as follows, for example: while the light is on, pressing the lever or pecking the key causes a small amount of food to drop into the chute; while the light is off, pressing the lever or pecking the key dispenses no food.
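The wiring just described amounts to a simple rule: a response is reinforced only while the light is on. The following minimal sketch models that rule in Python, together with an event log that stands in for the recording device (count and order of responses). The class name `SkinnerBox`, its fields and the method `respond` are hypothetical illustrations, not part of any real laboratory control software.

```python
from dataclasses import dataclass, field


@dataclass
class SkinnerBox:
    """Hypothetical model: a response is reinforced only while the light is on."""
    light_on: bool = False
    pellets_dispensed: int = 0
    # Log of (time, light_on, reinforced) for every response, standing in
    # for the device that records the number and order of responses.
    events: list[tuple[float, bool, bool]] = field(default_factory=list)

    def respond(self, t: float) -> bool:
        """Register a lever press or key peck at time t; return True if food drops."""
        reinforced = self.light_on
        if reinforced:
            self.pellets_dispensed += 1
        self.events.append((t, self.light_on, reinforced))
        return reinforced


# Usage: one press in the dark, one with the light on, one in the dark again.
box = SkinnerBox()
box.respond(1.0)       # light off: no food
box.light_on = True
box.respond(2.0)       # light on: food is dispensed
box.light_on = False
box.respond(3.0)       # light off: no food
print(box.pellets_dispensed, len(box.events))
```

Only the middle response is reinforced, so the sketch reports one pellet out of three logged responses.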

The figure shows a rather unusual variant: the test animal receives a weak, “punitive” electric shock to its feet if it does not press the lever within a specified time after an acoustic or visual signal.
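The timing rule of this avoidance variant can be sketched as a check on whether any lever press falls inside a response window that opens with the signal. The function names and the 5-second window in the usage lines are assumptions chosen for illustration; the text only speaks of "a specified period of time".

```python
def shock_delivered(signal_time: float, press_times: list[float], window: float) -> bool:
    """Return True if no lever press falls within [signal_time, signal_time + window]."""
    deadline = signal_time + window
    return not any(signal_time <= t <= deadline for t in press_times)


def run_trials(signal_times: list[float], press_times: list[float], window: float) -> list[bool]:
    """Apply the avoidance rule to each signal; True means a shock was given."""
    return [shock_delivered(s, press_times, window) for s in signal_times]


# Usage with a hypothetical 5-second window: the animal presses at t = 3 s,
# which avoids the shock after the signal at t = 0 but not after the one at t = 20.
results = run_trials([0.0, 20.0], [3.0], window=5.0)
print(results)
```

Here the press at 3 s falls inside the first window only, so the sketch prints `[False, True]`.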

Since the lever or pecking key is usually the only "unusual" element inside the Skinner box, the test animal will repeatedly (and often especially intensively) sniff at it or touch it more forcefully, and sooner or later it will happen to do so while the light is on. Because food is then dispensed, the probability increases that the animal will sniff or peck at the same spot in the cage again. As a result, both rats and pigeons learn very quickly to associate the lit lamp with pressing the lever or pecking the key and with the delivery of food. Without any intervention by the experimenter, the animals learn in the Skinner box that a particular action leads to food being dispensed.
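The claim that each reinforced response makes the next one more likely can be illustrated with a toy simulation. It assumes a simple linear update rule chosen for illustration only: after a reinforced response in the light, the response probability in the light moves a fraction `alpha` toward 1; after an unreinforced response in the dark, the response probability in the dark moves a fraction `beta` toward 0. The parameter values, the 50-step light blocks and the function name `simulate` are all hypothetical and are not taken from Skinner's work.

```python
import random


def simulate(steps: int = 2000, p_start: float = 0.05, alpha: float = 0.1,
             beta: float = 0.05, seed: int = 1) -> tuple[float, float, int]:
    """Toy model (assumption): return final response probabilities with the
    light on and off, plus the number of food rewards delivered."""
    rng = random.Random(seed)  # fixed seed for a reproducible run
    p_on = p_off = p_start
    rewards = 0
    for step in range(steps):
        # The light alternates between on and off in blocks of 50 time steps.
        light_on = (step // 50) % 2 == 0
        p = p_on if light_on else p_off
        if rng.random() < p:  # the animal happens to press or peck
            if light_on:
                # Food is dispensed: the response in the light is strengthened.
                rewards += 1
                p_on += alpha * (1 - p_on)
            else:
                # No food: the response in the dark is weakened.
                p_off -= beta * p_off
    return p_on, p_off, rewards


p_on, p_off, rewards = simulate()
print(f"p(response | light on)  = {p_on:.3f}")
print(f"p(response | light off) = {p_off:.3f}")
print(f"food rewards delivered  = {rewards}")
```

In this sketch the probability of responding rises only while the light is on, which mirrors, in a very simplified way, the animal learning to associate the lit lamp with pressing the lever and receiving food.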

With rats and other rodents, such learning experiments can be reproduced practically anywhere and are also very vivid and easy to follow, so in some places they are part of school lessons.