Outlier

In statistics, an outlier is a measured value or finding that does not fit into an expected series of measurements or generally does not meet expectations. The "expectation" is usually defined as the range of variation around the expected value in which most of the measured values lie, e.g. the interquartile range $Q_{75} - Q_{25}$. Values that lie more than 1.5 times the interquartile range outside this interval are (largely arbitrarily) referred to as outliers. In the box plot, outliers are displayed separately as individual points. Robust statistics deals with the outlier problem. Outlier detection is also a topic in data mining. Influential observations must be distinguished from outliers.
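The 1.5 × IQR rule can be sketched in a few lines. This assumes Python's standard-library `statistics.quantiles` with its "inclusive" method as the quartile definition (other conventions give slightly different fences), and `tukey_fences` and `iqr_outliers` are hypothetical helper names:

```python
import statistics


def tukey_fences(values, k=1.5):
    """Return the fences (Q25 - k*IQR, Q75 + k*IQR)."""
    # method="inclusive" interpolates linearly between order statistics;
    # other quartile conventions shift the fences slightly.
    q25, _, q75 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q75 - q25
    return q25 - k * iqr, q75 + k * iqr


def iqr_outliers(values, k=1.5):
    """Values lying outside the fences."""
    lower, upper = tukey_fences(values, k)
    return [v for v in values if v < lower or v > upper]


data = [2, 3, 3, 4, 5, 5, 6, 7, 30]
print(tukey_fences(data))   # (-1.5, 10.5)
print(iqr_outliers(data))   # [30]
```

The factor `k` is exposed as a parameter because the choice of 1.5 is a convention rather than a derived constant.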

Check for measurement errors

It is crucial to check whether an outlier is actually a reliable and genuine result or whether it stems from a measurement error.

- Example: The ozone hole over the Antarctic had already been measured for a number of years, but the measured values were judged to be obviously erroneous (i.e. interpreted as “outliers” and ignored), so its extent went unrecognized.

Outlier tests

Another approach was proposed by, among others, Ferguson in 1961. It assumes that the observations come from a hypothesized distribution; outliers are then observations that do not come from this distribution. The following outlier tests all assume that the hypothesized distribution is a normal distribution and check whether one or more of the extreme values do not come from it:

- Grubbs outlier test

- Outlier test according to Nalimov

- David, Hartley and Pearson outlier test

- Dixon outlier test

- Outlier test according to Hampel

- Baarda outlier test

- Pope outlier test

The Walsh outlier test, by contrast, does not assume any specific distribution of the data. In time series analysis, a time series suspected of containing an outlier can be tested for it and then modeled with an outlier model.
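As an illustration of the first test in the list, the Grubbs statistic is $G = \max_i |x_i - \bar{x}| / s$, and the most extreme value is rejected if $G$ exceeds a critical value. The sketch below assumes Python; `grubbs_statistic` and `grubbs_critical` are hypothetical helpers, and the Student-t quantile needed for the critical value is not computed here but must be supplied, e.g. from tables or from `scipy.stats.t.ppf(1 - alpha / (2 * n), n - 2)`:

```python
import math
import statistics


def grubbs_statistic(values):
    """Grubbs statistic G = max|x_i - mean| / s and the index of that value."""
    mean = statistics.mean(values)
    s = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    index = max(range(len(values)), key=lambda i: abs(values[i] - mean))
    return abs(values[index] - mean) / s, index


def grubbs_critical(n, t):
    """Two-sided critical value for G, given t = upper alpha/(2n) quantile
    of Student's t distribution with n - 2 degrees of freedom."""
    return (n - 1) / math.sqrt(n) * math.sqrt(t * t / (n - 2 + t * t))


data = [2, 3, 3, 4, 5, 5, 6, 7, 30]
g, i = grubbs_statistic(data)
print(round(g, 3), data[i])  # 2.622 30
# Note: G can never exceed (n - 1) / sqrt(n), here 8 / 3.
```

The test is usually applied once per suspected value; repeating it after removing a value changes the sample and hence the critical value.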

Distinction from extreme values

A popular approach is to use the box plot to identify “outliers”: the observations outside the whiskers are arbitrarily labeled as outliers. For the normal distribution, one can easily calculate that just under 0.7% of the distribution's mass lies outside the whiskers. Since $1/0.007 \approx 143$, from a sample size of $n > 143$ onward one would expect (on average) at least one observation outside the whiskers, and roughly $0.007\,n$ such observations in a sample of size $n$. It therefore makes more sense to speak initially of extreme values rather than outliers.
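The 0.7% figure can be reproduced with the standard library's `statistics.NormalDist`. This minimal sketch assumes the whiskers sit at the 1.5 × IQR fences of a standard normal distribution:

```python
import math
from statistics import NormalDist

z = NormalDist()                          # standard normal distribution
q75 = z.inv_cdf(0.75)                     # upper quartile, about 0.674
q25 = -q75                                # lower quartile by symmetry
upper_whisker = q75 + 1.5 * (q75 - q25)   # about 2.698 standard deviations
p_outside = 2 * (1 - z.cdf(upper_whisker))  # mass in both tails
print(f"{p_outside:.2%}")                 # 0.70%
min_n = math.ceil(1 / p_outside)          # smallest n with expected count >= 1
print(min_n)                              # 144
```

The result does not depend on the mean or standard deviation, since the fences scale with the distribution.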

Multivariate outliers

The situation becomes even more complicated in several dimensions. In the graphic on the right, the outlier in the lower right corner cannot be identified by inspecting each variable separately; it is not visible in the box plots. Nevertheless, it will significantly influence a linear regression.

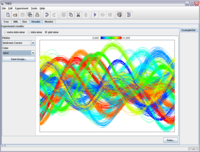
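The effect on a regression can be illustrated with a small hypothetical data set in which one point is unremarkable in each coordinate but breaks the joint pattern. The sketch computes the ordinary least-squares slope by hand; `ols_slope` is a hypothetical helper:

```python
def ols_slope(xs, ys):
    """Least-squares slope of y on x."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


xs = list(range(1, 11))
ys = list(range(1, 11))            # points exactly on the line y = x
print(ols_slope(xs, ys))           # 1.0

# Hypothetical outlier (10, 1): each coordinate lies within the range of
# its own variable, so neither marginal box plot flags it.
print(round(ols_slope(xs + [10], ys + [1]), 3))  # 0.635
```

A single point thus lowers the slope by more than a third without appearing in either one-dimensional view.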

Andrews curves

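Andrews (1972) suggested representing each multivariate observation $x = (x_1, \ldots, x_p)$ by a curve:

$$f_x(t) = \frac{x_1}{\sqrt{2}} + x_2 \sin t + x_3 \cos t + x_4 \sin 2t + x_5 \cos 2t + \cdots$$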

This means that every multivariate observation is mapped onto a curve over the interval $[-\pi, \pi]$. Because of the sine and cosine terms, the function is periodic with period $2\pi$, i.e. it repeats itself outside this interval.

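For any two observations $x$ and $y$, the following holds:

$$\underbrace{\int_{-\pi}^{\pi} \bigl(f_x(t) - f_y(t)\bigr)^2 \, dt}_{(1)} = \underbrace{\pi \sum_{i=1}^{p} (x_i - y_i)^2}_{(2)}$$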

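The expression (1) on the left-hand side of the equals sign corresponds (at least roughly) to the area between the two curves, and the expression (2) on the right-hand side is, up to the factor $\pi$, the squared multivariate Euclidean distance between the two data points. The identity holds exactly because the functions $1/\sqrt{2}$, $\sin kt$ and $\cos kt$ are mutually orthogonal on $[-\pi, \pi]$ and each has squared integral $\pi$ there.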
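The identity can be checked numerically. The sketch below is a minimal Python version, assuming a rectangle rule with 1000 equally spaced points for the integral (exact up to rounding here, because the integrand is a low-degree trigonometric polynomial sampled over a full period); `andrews_curve` and `squared_curve_distance` are hypothetical names:

```python
import math


def andrews_curve(x, t):
    """f_x(t) = x1/sqrt(2) + x2 sin t + x3 cos t + x4 sin 2t + x5 cos 2t + ..."""
    value = x[0] / math.sqrt(2)
    for i, xi in enumerate(x[1:], start=1):
        k = (i + 1) // 2                # frequency: 1, 1, 2, 2, 3, 3, ...
        value += xi * (math.sin(k * t) if i % 2 == 1 else math.cos(k * t))
    return value


def squared_curve_distance(x, y, steps=1000):
    """Integral of (f_x - f_y)^2 over [-pi, pi] by the rectangle rule."""
    h = 2 * math.pi / steps
    ts = (-math.pi + j * h for j in range(steps))
    return h * sum((andrews_curve(x, t) - andrews_curve(y, t)) ** 2 for t in ts)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [0.0, 1.0, -1.0, 2.0, 0.5]
lhs = squared_curve_distance(x, y)                   # expression (1)
rhs = math.pi * sum((a - b) ** 2 for a, b in zip(x, y))  # expression (2)
print(math.isclose(lhs, rhs))  # True
```

To draw the curves, one would evaluate `andrews_curve` for each observation on a grid of `t` values in $[-\pi, \pi]$ and plot all resulting lines together.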

So if the distance between two data points is small, the area between the curves must also be small, i.e. the curves $f_x$ and $f_y$ run close together. Conversely, if the distance between two data points is large, the area between the curves must also be large, i.e. the curves $f_x$ and $f_y$ run very differently. A multivariate outlier would thus appear as a curve whose course clearly differs from that of all other curves.

Andrews curves have two disadvantages:

- If the outlier shows up in only one variable, the earlier that variable appears in the curve, the more easily an observer perceives the deviating curve; ideally, it should be the variable $x_1$. It therefore makes sense to order the variables, e.g. so that $x_1$ is the variable with the greatest variance, or to use the first principal component as $x_1$.

- If there are many observations, many curves have to be drawn, so that the course of an individual curve can no longer be made out.
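The variance-based ordering suggested in the first point can be sketched as a column permutation applied before the curves are drawn. This is a minimal Python version; `order_by_variance` is a hypothetical helper, and the principal-component alternative is not shown:

```python
import statistics


def order_by_variance(rows):
    """Reorder columns so the highest-variance variable comes first (becomes x_1)."""
    columns = list(zip(*rows))
    order = sorted(range(len(columns)),
                   key=lambda j: statistics.variance(columns[j]),
                   reverse=True)
    return [[row[j] for j in order] for row in rows], order


rows = [[1.0, 10.0, 5.0],
        [2.0, 30.0, 5.5],
        [3.0, 20.0, 4.5]]
reordered, order = order_by_variance(rows)
print(order)         # [1, 0, 2]
print(reordered[0])  # [10.0, 1.0, 5.0]
```

Returning `order` alongside the data keeps track of which original variable ended up in which position.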

Stahel-Donoho Outlyingness

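Stahel (1981) and David Leigh Donoho (1982) defined the so-called outlyingness to obtain a measure of how far an observation lies from the bulk of the data. It considers all possible linear combinations $a^\top x$, i.e. the projections of the data point $x$ onto the vector $a$, and defines the outlyingness as

$$\mathrm{SDO}(x) = \sup_{\|a\| = 1} \frac{\left| a^\top x - \operatorname{med}_j\left(a^\top x_j\right) \right|}{\operatorname{MAD}_j\left(a^\top x_j\right)},$$

where $\operatorname{med}_j(a^\top x_j)$ is the median of the projected data points and $\operatorname{MAD}_j(a^\top x_j) = c \cdot \operatorname{med}_j \left| a^\top x_j - \operatorname{med}_k(a^\top x_k) \right|$ is their median absolute deviation. The median serves as a robust measure of location, the median absolute deviation as a robust measure of dispersion. $c$ is a normalization constant (commonly $c \approx 1.4826$, which makes the MAD consistent for the standard deviation under the normal distribution).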

In practice, the outlyingness is approximated by taking the maximum over several hundred or several thousand randomly chosen projection directions $a$.
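The random-projection approximation can be sketched as follows. This minimal Python version assumes directions drawn from a standard normal distribution (which gives uniformly distributed directions after normalization), $c = 1.4826$, and a fixed seed for reproducibility; `mad` and `sdo` are hypothetical names:

```python
import random
import statistics

MAD_CONSTANT = 1.4826  # normalization c (assumed; consistency under normality)


def mad(values):
    """Normalized median absolute deviation c * med|z - med z|."""
    m = statistics.median(values)
    return MAD_CONSTANT * statistics.median([abs(v - m) for v in values])


def sdo(points, n_directions=1000, seed=0):
    """Approximate Stahel-Donoho outlyingness over random directions.

    The ratio is invariant to rescaling a, so directions are not normalized.
    """
    rng = random.Random(seed)
    dim = len(points[0])
    scores = [0.0] * len(points)
    for _ in range(n_directions):
        a = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # random direction
        proj = [sum(ai * xi for ai, xi in zip(a, p)) for p in points]
        med, spread = statistics.median(proj), mad(proj)
        if spread == 0:
            continue  # degenerate direction: skip it
        for i, z in enumerate(proj):
            scores[i] = max(scores[i], abs(z - med) / spread)
    return scores


# 5 x 5 grid of regular points plus one hypothetical outlier at (10, 10)
points = [(float(i), float(j)) for i in range(5) for j in range(5)] + [(10.0, 10.0)]
scores = sdo(points)
print(scores.index(max(scores)))  # 25, the outlier
```

Because only finitely many directions are tried, the result is a lower bound on the supremum in the definition; more directions tighten it at linear extra cost.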

Outlier detection in data mining

The term outlier detection refers to the subfield of data mining concerned with identifying atypical and conspicuous records. One application is the detection of (potentially) fraudulent credit card transactions among the large number of valid ones. The first outlier detection algorithms were closely based on the statistical models mentioned here, but for computational and, above all, runtime reasons, algorithms have since moved away from them. An important method in this area is the density-based local outlier factor (LOF).
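The idea of the local outlier factor can be sketched with a brute-force Python version: a point's local density is compared with the densities of its neighbors, and values well above 1 indicate outliers. This is a simplified sketch, not a reference implementation: it assumes each neighborhood consists of exactly the `k` nearest neighbors (ties broken by index) rather than the full k-distance neighborhood, it runs in quadratic time, and `local_outlier_factor` is a hypothetical name:

```python
import math


def local_outlier_factor(points, k=3):
    """Brute-force LOF scores; values well above 1 indicate outliers.

    Simplification: neighborhoods are exactly the k nearest neighbors.
    Assumes no heavy duplication of points (that would divide by zero).
    """
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    neighbors = [
        sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
        for i in range(n)
    ]
    k_distance = [dist[i][neighbors[i][-1]] for i in range(n)]

    def reach_dist(a, b):
        # reachability distance of point a from point b
        return max(k_distance[b], dist[a][b])

    # local reachability density: inverse mean reachability distance
    lrd = [k / sum(reach_dist(i, j) for j in neighbors[i]) for i in range(n)]
    # LOF: mean ratio of the neighbors' density to the point's own density
    return [sum(lrd[j] for j in neighbors[i]) / (k * lrd[i]) for i in range(n)]


points = [(float(i), float(j)) for i in range(3) for j in range(3)] + [(10.0, 10.0)]
scores = local_outlier_factor(points)
print([round(s, 2) for s in scores])
```

For large data sets, the quadratic distance matrix would be replaced by a spatial index for the neighbor queries.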


Literature

- R. Khattree, D. N. Naik: Andrews Plots for Multivariate Data: Some New Suggestions and Applications. In: Journal of Statistical Planning and Inference. Vol. 100, No. 2, 2002, pp. 411-425, doi:10.1016/S0378-3758(01)00150-1.

Web links

- Basics of statistical outlier tests

- Learning by Simulations: simulation of the effect of an outlier on linear regression

References

- Volker Müller-Benedict: Basic course in statistics in the social sciences. 4th, revised edition. VS Verlag für Sozialwissenschaften, Wiesbaden 2007, ISBN 978-3-531-15569-2, p. 99.

- Karl-Heinz Ludwig: A short history of the climate: From the creation of the earth to today. 2nd edition. Beck Verlag, 2007, ISBN 978-3-406-56557-1, p. 149.

- T. S. Ferguson: On the Rejection of Outliers. In: Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability. Vol. 1, 1961, pp. 253-287 (projecteuclid.org [PDF]).

- D. Andrews: Plots of high-dimensional data. In: Biometrics. Vol. 28, 1972, pp. 125-136, JSTOR 2528964.

- W. A. Stahel: Robust Estimates: Infinitesimal Optimality and Estimates of Covariance Matrices. PhD thesis, ETH Zurich, 1981.

- D. L. Donoho: Breakdown properties of multivariate location estimators. Qualifying paper, Harvard University, Boston 1982.

- H.-P. Kriegel, P. Kröger, A. Zimek: Outlier Detection Techniques. Tutorial. In: 13th Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD 2009). Bangkok, Thailand 2009 (lmu.de [PDF; accessed on March 26, 2010]).
