Kruskal's algorithm

The Kruskal's algorithm is a greedy algorithm of graph theory for computing minimum spanning trees of undirected graphs . In addition , the graph must be connected , edge-weighted and finite.

The algorithm comes from Joseph Kruskal , who published it in 1956 in the journal "Proceedings of the American Mathematical Society ". He described him there as follows:

- Carry out the following step as often as possible: Choose the shortest edge from the not yet selected edges of (the graph) that does not form a circle with the edges already selected.

The shortest edge denotes the edge with the smallest edge weight. After completing the algorithm, the selected edges form a minimal spanning tree of the graph.

If the algorithm is applied to disconnected graphs, it calculates a minimal spanning tree for each connected component of the graph. These trees form a minimal spanning forest .

idea

The Kruskal's algorithm uses the county property minimum spanning trees ( English m inimum s panning t ree , MST). To do this, the edges are sorted in ascending order according to their weight in the first phase. In the second phase, iterates over the sorted edges. If an edge connects two nodes that are not yet connected by a path of previous edges, that edge is added to the MST.

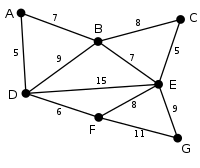

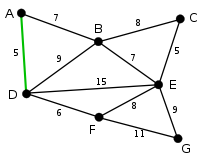

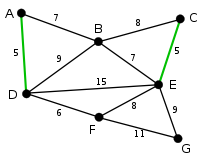

example

Formalized algorithm

The basic idea is to traverse the edges in order of increasing edge weights and add any edge to the solution that does not form a circle with all of the previously chosen edges . So-called components are successively connected to the minimal spanning tree .

Input

A connected, edge-weighted graph is used as input . denotes the set of nodes (vertices) , the set of edges (edges) . The weight function assigns an edge weight to each edge.

output

The algorithm provides a minimum spanning tree with .

algorithm

Kruskal's algorithm works non-deterministically; In other words, it may produce different results when executed repeatedly. All of these results are minimal spanning trees .

G = (V,E,w): ein zusammenhängender, ungerichteter, kantengewichteter Graph kruskal(G) 1 2 3 Sortiere die Kanten in L aufsteigend nach ihrem Kantengewicht. 4 solange 5 wähle eine Kante mit kleinstem Kantengewicht 6 entferne die Kante aus 7 wenn der Graph keinen Kreis enthält 8 dann 9 M = (V,E') ist ein minimaler Spannbaum von G.

The same algorithm can be used analogously for a maximum spanning tree. Let be a connected edge-weighted graph. Then you enter with , and in Kruskal's algorithm. Finally, the output is a minimum spanning tree of and thus a maximum of .

A union find structure can be used to test whether nodes and are in different subtrees . Then the runtime is . Here is the time required to sort the edge weights and the inverse of the Ackermann function . For realistic entries is always less than or equal

Variant 1: Parallel sorting

The sorting of the first phase can be parallelized. In the second phase, however, it is important for correctness that the edges are processed one after the other. With processors can be sorted in parallel in linear time. This reduces the total runtime .

Variant 2: Filter-Kruskal

A variant of Kruskal's algorithm called Filter-Kruskal was developed by Osipov et al. and is better suited for parallelization. The basic idea is to partition the edges in a similar way to Quicksort and then to sort out edges that connect nodes in the same subtree in order to reduce the costs for further sorting. Filter-Kruskal is better suited for parallelization because sorting, partitioning and filtering can easily be done in parallel by dividing the edges between the processors. The algorithm is shown in the following pseudocode.

filterKruskal(): falls KruskalSchwellwert: return kruskal() pivot = zufällige Kante aus , partition(, pivot) filterKruskal() filter() filterKruskal() return

partition(, pivot): für alle : falls gewicht() gewicht(pivot): sonst return (, )

filter(): für alle : falls find-set(u) find-set(v): return

Proof of correctness

Let be a connected edge-weighted graph and the output of Kruskal's algorithm. In order to prove the correctness of the algorithm, the following has to be shown:

- the algorithm terminates (it does not contain an infinite loop).

-

is a minimal spanning tree of , so:

- is an exciting subgraph of .

- does not contain a circle.

- is contiguous.

- is minimal in terms of .

The following are some ideas that demonstrate the validity of each statement:

- Termination

- Line 6 removes exactly one element from in each loop pass . In addition, no further operation will change it. Due to line 4, elements are only removed until . Since it was set in the algorithm at the beginning and is only finite by definition, the loop is also only run through finitely often. It follows that Kruskal's algorithm terminates.

- M is the spanning subgraph of G

- Since the set of nodes is equal to and according to the definition of the algorithm and obviously applies because of line 8 , is the spanning subgraph of .

- M does not contain a circle

- Line 7 makes it trivial that no circle can contain.

- M is contiguous

- In the following it is shown indirectly that is connected. Be thus not contiguous. Then there are two nodes and that are not connected by a path. But since and in are connected by a path, there is an edge in which does not exist in . The algorithm looks guaranteed in line 7 of each edge and with it . The graph in line 7 must be a circle because there is no path between and in . Line 8 is then inserted into. However, this contradicts the fact that it is not included in. Thus our assumption is invalid and yet coherent.

- M is minimal with respect to G.

- We show by induction that the following statement is true:

If is the set of edges that was generated in the -th step of the algorithm, then there is a minimal spanning tree that contains. The assertion is true, i.e. H. (i.e. no edge is planned yet). Every minimal spanning tree fulfills the claim and a minimal spanning tree exists, since a weighted, connected graph always has a minimal spanning tree. Now we assume that the claim for is satisfied and is the set of edges generated by the algorithm after step . Let it be the minimal spanning tree that contains. We are now looking at the case . For this, assume the last edge inserted by the algorithm.

- If

- Then the assertion is also fulfilled for, since the minimal spanning tree is extended by an edge from the minimal spanning tree .

- If

- Then contains a circle and there is an edge that is in the circle but not in . (If there weren't any such edge , then could n't have been added because that would have created a circle.) So that's a tree. Furthermore, the weight of cannot be less than the weight of , otherwise the algorithm would have added instead of . It follows that . But since there is a minimal spanning tree, it also applies and it follows . Thus there is a minimal spanning tree that contains and the claim is satisfied.

This means that the Kruskal algorithm generates a set according to steps that can be expanded to a minimal spanning tree. But since the result after steps of the algorithm is already a tree (as shown above), this must be minimal.

Time complexity

The following is the number of edges and the number of nodes. The runtime of the algorithm is made up of the necessary sorting of the edges according to their weight and checking whether the graph is circular. Sorting takes a runtime of . With a suitable implementation, checking for freedom from circles is possible more quickly, so that sorting determines the total runtime. In this respect, Prim's algorithm is more efficient, especially for graphs with many edges .

If the edges are already presorted, Kruskal's algorithm works faster. Now consider how quickly it is possible to check for freedom from a circle. In order to achieve the best possible runtime, all nodes are stored in a union find structure . This contains information about which nodes are related. At the beginning, no edge is entered in the spanning tree, so each node is in a separate partition . If an edge is to be added, it is checked whether and are in different partitions. The Find (x) operation is used for this : It supplies a representative of the partition in which the node x is located. If Find ( ) and Find ( ) give different results, then the edge can be added and the partitions of the two nodes are united ( union ). Otherwise, adding the edge would create a circle, so the edge is discarded. Overall, the Find operation (for each edge) and the Union operation are called times. When using the heuristics Union-By-Size and Path Compression , an amortized runtime analysis for the algorithm results in a complexity of . Where is defined as

and practically constant. Theoretically, however, this function grows infinitely, which is why it cannot be omitted in the O notation.

Parallel implementation

Due to data dependencies between the iterations, the Kruskal algorithm is fundamentally difficult to parallelize. However, it is possible to sort the edges in parallel at the beginning or alternatively to use a parallel implementation of a binary heap in order to find the edge with the lowest weight in each iteration. By sorting in parallel, which is possible on processors in time, the runtime of the algorithm can be reduced to even with previously unsorted edges .

A variant of Kruskal's algorithm called Filter-Kruskal was developed by Osipov et al. and is better suited for parallelization. The basic idea is to partition the edges in a similar way as with Quicksort and then to sort out edges that connect nodes in the same subtree in order to reduce the costs for the sorting. The algorithm is shown in the following pseudocode .

FILTER-KRUSKAL(G): 1 if |G.E| < KruskalThreshhold: 2 return KRUSKAL(G) 3 pivot = CHOOSE-RANDOM(G.E) 4 , = PARTITION(G.E, pivot) 5 A = FILTER-KRUSKAL() 6 = FILTER() 7 A = A ∪ FILTER-KRUSKAL() 8 return A

PARTITION(E, pivot): 1 = ∅, = ∅ 2 foreach (u, v) in E: 3 if weight(u, v) <= pivot: 4 = ∪ {(u, v)} 5 else 6 = ∪ {(u, v)} 5 return ,

FILTER(E): 1 = ∅ 2 foreach (u, v) in E: 3 if FIND-SET(u) ≠ FIND-SET(v): 4 = ∪ {(u, v)} 5 return

Filter-Kruskal is better suited for parallelization, since sorting and partitioning, as well as filtering can simply be carried out in parallel by dividing the edges between the processors.

Further variants for a parallelization of Kruskal's algorithm are also possible. For example, there is the option of executing the sequential algorithm on several subgraphs in parallel in order to then merge them until finally only the final minimal spanning tree remains. A simpler form of the filter kruscal, in which helper threads are used to remove edges in the background that are clearly not part of the minimal spanning tree, can also be used.

Others

The algorithm was originally used by Kruskal as an aid to a simplified proof that a graph with pairwise different edge weights has a unique minimal spanning tree.

Web links

- Interactive applet for learning, trying out and demonstrating the algorithm

- Ronny Harbich: Complete proof of the correctness of Kruskal's algorithm. 2006

- Clear presentation of the algorithm in the context of the computer science year 2006

Individual evidence

- ^ Joseph Kruskal : On the shortest spanning subtree and the traveling salesman problem. In: Proceedings of the American Mathematical Society , 7, 1956, pp. 48-50

- ↑ Vitaly Osipov, Peter Sanders, Johannes Singler: The filter-kruskal minimum spanning tree algorithm . In: Proceedings of the Eleventh Workshop on Algorithm Engineering and Experiments (ALENEX). Society for Industrial and Applied Mathematics . 2009, pp. 52-61.

- ↑ Michael J. Quinn, Narsingh Deo: Parallel graph algorithms . In: ACM Computing Surveys (CSUR) 16.3 . 1984, pp. 319-348.

- Jump up ↑ Ananth Grama, Anshul Gupta, George Karypis, Vipin Kumar: Introduction to Parallel Computing 2003, ISBN 978-0-201-64865-2 , pp. 412-413.

- ↑ a b Vitaly Osipov, Peter Sanders, Johannes Singler: The filter-kruskal minimum spanning tree algorithm . In: Proceedings of the Eleventh Workshop on Algorithm Engineering and Experiments (ALENEX). Society for Industrial and Applied Mathematics . 2009, pp. 52-61.

- ↑ Vladimir Lončar, Srdjan Škrbić, Antun Balaž: Parallelization of Minimum Spanning Tree Algorithms Using Distributed Memory Architectures . In: Transactions on Engineering Technologies. . 2014, pp. 543-554.

- ↑ Anastasios Katsigiannis, Nikos Anastopoulos, Nikas Konstantinos, Nectarios Koziris: An approach to parallelize kruskal's algorithm using helper threads . In: Parallel and Distributed Processing Symposium Workshops & PhD Forum (IPDPSW), 2012 IEEE 26th International . 2012, pp. 1601-1610.