Normalization (Unicode)

The Unicode standard defines several normal forms for Unicode strings, together with algorithms for normalization, i.e. for converting a string into one of these normal forms.

Normalization is necessary because many characters can be represented by several different sequences of Unicode characters. Whether two strings represent the same text can only be decided reliably once both are in the same normal form.

Normal forms

There are four normal forms:

- NFD: canonical decomposition

- NFC: canonical decomposition followed by canonical composition

- NFKD: compatibility decomposition

- NFKC: compatibility decomposition followed by canonical composition

| | canonical equivalence | compatibility equivalence |
|---|---|---|
| decomposed | NFD | NFKD |
| composed | NFC | NFKC |

- In the decomposed forms NFD and NFKD, every character that can also be represented with the help of combining characters is decomposed.

- In the composed forms NFC and NFKC, a sequence of a base character and combining characters is replaced by a single character wherever this is possible.
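As a minimal sketch, the four forms can be compared with Python's standard `unicodedata` module. The sample string is an arbitrary choice for illustration and is not taken from the standard.

```python
import unicodedata

# Sample text (chosen for illustration): Å (U+00C5) followed by
# superscript two (U+00B2).
sample = "\u00c5\u00b2"

forms = {
    form: unicodedata.normalize(form, sample)
    for form in ("NFD", "NFC", "NFKD", "NFKC")
}

for form, result in forms.items():
    # Show each result as a list of code points.
    print(form, " ".join(f"U+{ord(ch):04X}" for ch in result))
```

The decomposed forms split Å into A plus a combining ring; only the forms with a K additionally replace the superscript two by the plain digit 2.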

Canonical equivalence

If two strings are canonically equivalent, they represent exactly the same content; only different sequences of Unicode characters have been chosen. There are several reasons why more than one representation exists:

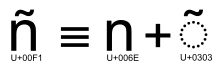

- The Unicode standard defines a separate character for many letters with diacritical marks. Such characters can also be represented as the base letter followed by a combining diacritical mark. Example: The letter Ä exists as a separate character U+00C4, but can also be encoded as the sequence U+0041 U+0308.

- If a character is followed by several combining characters that attach at different positions of the base character, their order plays no role for display. Both U+0061 U+0308 U+0320 and U+0061 U+0320 U+0308 result in ä̠, whereas U+0061 U+0308 U+0304 results in ǟ and U+0061 U+0304 U+0308 results in ā̈.

- Some characters are included twice in the standard, for example Å, which is encoded both at position U+00C5 as "Latin capital letter A with ring above" and at position U+212B as "Angstrom sign".
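The examples above can be checked with a short sketch based on Python's `unicodedata` module. The helper name `canonically_equivalent` is made up for this illustration; it simply compares the NFD forms of both strings.

```python
import unicodedata

def canonically_equivalent(a: str, b: str) -> bool:
    """Hypothetical helper: compare two strings after converting both to NFD."""
    return unicodedata.normalize("NFD", a) == unicodedata.normalize("NFD", b)

# Precomposed Ä versus A followed by a combining diaeresis.
print(canonically_equivalent("\u00c4", "\u0041\u0308"))

# Marks at different positions (above and below): the order is irrelevant.
print(canonically_equivalent("a\u0308\u0320", "a\u0320\u0308"))

# Two marks at the same position (both above): the order matters.
print(canonically_equivalent("a\u0308\u0304", "a\u0304\u0308"))

# Å is encoded twice, as U+00C5 and as the Angstrom sign U+212B.
print(canonically_equivalent("\u212b", "\u00c5"))
```

Any of the four normal forms could serve for the comparison, as long as both strings are converted to the same one.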

Compatibility equivalence

If two strings are only compatibility equivalent, they represent the same content, but their appearance may differ slightly. The following differences can occur:

- Superscript and subscript characters: The superscript 2 (², U+00B2) is a compatibility variant of the digit 2, as is the subscript 2 (₂, U+2082).

- Different font: The double-struck capital Z (ℤ, U+2124) corresponds to the ordinary Z, only in a different font.

- Initial, medial, final, or isolated form of a character: Although Unicode provides only one character for each Arabic letter, even if its shape varies with its position, the individual positional forms are also encoded as separate characters. These characters are marked in the Unicode standard as compatibility variants of the preferred character.

- No-break: Some characters differ from one another only with respect to the Unicode line breaking algorithm; the no-break space, for example, is just a space that does not allow a line break.

- Circled: The Unicode block Enclosed Alphanumerics and other blocks contain many circled characters, such as the circled digit 1 (①, U+2460), which is a variant of the ordinary 1.

- Fractions: Fractions such as ½ (U+00BD) can also be written using the fraction slash (U+2044).

- Different width or orientation, square forms: East Asian typography uses characters in different widths, as well as characters that appear rotated by 90° in vertical layout compared with their usual representation.

- Other: Some compatibility mappings do not fall into any of these categories, including the decomposition of ligatures.
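To illustrate these categories, the following sketch applies NFC and NFKC to one sample character per category, using Python's `unicodedata` module. The selection of sample characters is an assumption made for this example; other characters from the same categories would behave analogously.

```python
import unicodedata

# One illustrative character per category (selection is arbitrary).
examples = {
    "superscript two": "\u00b2",
    "double-struck Z": "\u2124",
    "Arabic alef, isolated form": "\ufe8d",
    "no-break space": "\u00a0",
    "circled one": "\u2460",
    "one half": "\u00bd",
    "fullwidth A": "\uff21",
    "fi ligature": "\ufb01",
}

for name, ch in examples.items():
    canonical = unicodedata.normalize("NFC", ch)
    compat = unicodedata.normalize("NFKC", ch)
    # NFC leaves these characters alone; NFKC maps them to their
    # ordinary counterparts.
    print(f"{name}: NFC unchanged = {canonical == ch}, NFKC = {compat!r}")
```

None of these characters has a canonical decomposition, so the canonical normal forms keep them, while the compatibility normal forms replace them.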

Normalization

The conversion of a string into one of the four normal forms is called normalization. For this purpose, the Unicode standard defines several properties:

- Decomposition_Mapping indicates, for each character, the string into which it can be decomposed, if a decomposition exists. The property covers both canonical and compatibility decompositions.

- Decomposition_Type indicates whether the decomposition is a canonical or a compatibility decomposition. In the latter case, it also indicates which kind of compatibility decomposition it is.

- Canonical_Combining_Class (short: ccc) is a number between 0 and 254 that roughly indicates, for combining characters, the position at which they attach to the base character. If two combining characters have different values, they do not interact graphically with each other and can be swapped without changing the rendering.

- A character has the Full_Composition_Exclusion property if it has a canonical decomposition but should not be used in the composed normal forms.

- Hangul_Syllable_Type is used for the decomposition of Korean syllable blocks.
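Some of these properties can be inspected from Python's `unicodedata` module, as the following sketch shows. Note the assumptions: `unicodedata.decomposition` returns the mapping and the type tag together in one string, and the module offers no direct accessor for Full_Composition_Exclusion, so the sketch only observes its effect through NFC. The sample characters are chosen for illustration.

```python
import unicodedata

# Decomposition_Mapping and Decomposition_Type are returned as one string.
print(unicodedata.decomposition("\u00c4"))  # canonical: no type tag
print(unicodedata.decomposition("\u00b2"))  # compatibility: tagged with its type

# Canonical_Combining_Class: 0 for base characters, nonzero for most
# combining marks.
for ch in ("a", "\u0308", "\u0320"):
    print(f"U+{ord(ch):04X} ccc = {unicodedata.combining(ch)}")

# DEVANAGARI LETTER QA (U+0958) has a canonical decomposition but is
# excluded from composition, so NFC does not keep it.
qa = "\u0958"
print(unicodedata.decomposition(qa))
print(unicodedata.normalize("NFC", qa) == qa)
```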

Algorithm

The following steps are carried out to convert to one of the normal forms:

In the first step, the string is fully decomposed: for each character, it is determined whether a decomposition exists, and if so, the character is replaced by it. This step must be applied repeatedly, because the characters resulting from a decomposition may themselves be decomposable.

Only canonical decompositions are used for the canonical normal forms; for the compatibility normal forms, both canonical and compatibility decompositions are used. The decomposition of Korean syllable blocks into individual jamo is carried out by a separate algorithm.
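The decomposition step might be sketched as follows, using `unicodedata.decomposition` as the source of the mappings. This is an illustration under stated assumptions, not a reference implementation: the function name `decompose` is hypothetical, and Korean syllable blocks are left untouched because their decomposition is computed by a separate algorithm rather than stored as a mapping.

```python
import unicodedata

def decompose(text: str, compat: bool = False) -> str:
    """Hypothetical sketch of the full decomposition step (Hangul omitted)."""
    result = []
    for ch in text:
        parts = unicodedata.decomposition(ch).split()
        # Compatibility mappings start with a tag such as "<super>".
        is_compat = bool(parts) and parts[0].startswith("<")
        if not parts or (is_compat and not compat):
            result.append(ch)  # nothing to decompose for this form
            continue
        if is_compat:
            parts = parts[1:]  # drop the type tag
        replacement = "".join(chr(int(p, 16)) for p in parts)
        # Recurse, since the replacement may itself be decomposable.
        result.append(decompose(replacement, compat))
    return "".join(result)

print([f"U+{ord(c):04X}" for c in decompose("\u01df")])
print(decompose("\ufb01", compat=True))
```

The recursion covers cases such as ǟ (U+01DF), which first decomposes into ä plus a macron, and ä in turn into a plus a diaeresis.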

Then the combining characters that belong to the same base character are sorted as follows: if two adjacent characters A and B satisfy ccc(A) > ccc(B) and ccc(B) > 0, they are swapped. This step is repeated until no adjacent pair of characters can be swapped any more.
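The reordering rule translates almost literally into a bubble-sort-like loop. The following sketch assumes an already decomposed input string; the function name `canonical_order` is made up for the example, and the ccc values come from `unicodedata.combining`.

```python
import unicodedata

def canonical_order(text: str) -> str:
    """Hypothetical sketch: swap adjacent marks until none are out of order."""
    chars = list(text)
    swapped = True
    while swapped:
        swapped = False
        for i in range(len(chars) - 1):
            ccc_a = unicodedata.combining(chars[i])
            ccc_b = unicodedata.combining(chars[i + 1])
            # Swap only if ccc(A) > ccc(B) and ccc(B) > 0.
            if ccc_a > ccc_b > 0:
                chars[i], chars[i + 1] = chars[i + 1], chars[i]
                swapped = True
    return "".join(chars)

# Diaeresis (ccc 230) before a mark below (ccc 220): gets reordered.
print([f"U+{ord(c):04X}" for c in canonical_order("a\u0308\u0320")])
# Diaeresis and macron (both ccc 230): order is preserved.
print([f"U+{ord(c):04X}" for c in canonical_order("a\u0308\u0304")])
```

Because characters with ccc = 0 are never moved, marks cannot travel past a base character, and marks with equal ccc keep their relative order.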

For the composed normal forms, there is a third step, the canonical composition: starting with the second character, it is checked for each character C whether a preceding character L has the following properties:

- ccc(L) = 0

- For all characters A between L and C, 0 < ccc(A) < ccc(C) holds

- There is a Unicode character P that is not marked as Full_Composition_Exclusion and has the canonical decomposition <L, C>.

In this case, L is replaced by P and C is removed.
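A rough sketch of the composition step is given below. It rests on two assumptions that should be stated clearly: since `unicodedata` has no accessor for Full_Composition_Exclusion, the sketch treats a character as a valid composite only if the library's own NFC leaves it unchanged, and Hangul syllables are not handled because they have no stored decomposition mapping. The names `build_pairs` and `compose` are hypothetical.

```python
import sys
import unicodedata

def build_pairs() -> dict:
    """Map each pair (L, C) to its composite P, skipping excluded characters."""
    pairs = {}
    for cp in range(sys.maxunicode + 1):
        ch = chr(cp)
        mapping = unicodedata.decomposition(ch)
        if not mapping or mapping.startswith("<"):
            continue  # no canonical decomposition
        parts = mapping.split()
        if len(parts) != 2:
            continue  # singleton decompositions never recompose
        if unicodedata.normalize("NFC", ch) != ch:
            continue  # assumption: used as a stand-in for the exclusion property
        pairs[(chr(int(parts[0], 16)), chr(int(parts[1], 16)))] = ch
    return pairs

PAIRS = build_pairs()

def compose(text: str) -> str:
    """Compose an already decomposed, canonically ordered string."""
    out = []
    starter = None  # index in `out` of the last character with ccc == 0
    for c in text:
        ccc = unicodedata.combining(c)
        if starter is not None:
            between = out[starter + 1:]
            # All characters between L and C must satisfy 0 < ccc(A) < ccc(C).
            unblocked = all(0 < unicodedata.combining(a) < ccc for a in between)
            p = PAIRS.get((out[starter], c))
            if unblocked and p is not None:
                out[starter] = p  # replace L by P and drop C
                continue
        if ccc == 0:
            starter = len(out)
        out.append(c)
    return "".join(out)

print([f"U+{ord(c):04X}" for c in compose("a\u0320\u0308")])
```

In the example, the mark below (ccc 220) does not block the diaeresis (ccc 230), so a and the diaeresis combine to ä while the mark below remains.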

To obtain syllable blocks with their own Unicode code points from sequences of jamo, the algorithm for decomposing syllable blocks is applied in reverse.
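The arithmetic for Korean syllable blocks is not spelled out in this article; the following sketch uses the constants given in the Unicode Standard's section on Hangul syllable decomposition. The function names are hypothetical, and `compose_hangul` assumes that its input is a well-formed sequence of a leading consonant, a vowel, and an optional trailing consonant.

```python
# Constants from the Unicode Standard's Hangul syllable algorithm.
S_BASE, L_BASE, V_BASE, T_BASE = 0xAC00, 0x1100, 0x1161, 0x11A7
L_COUNT, V_COUNT, T_COUNT = 19, 21, 28
N_COUNT = V_COUNT * T_COUNT  # 588 syllables per leading consonant
S_COUNT = L_COUNT * N_COUNT  # 11172 precomposed syllables

def decompose_hangul(syllable: str) -> str:
    """Split one precomposed syllable into its jamo (hypothetical helper)."""
    s_index = ord(syllable) - S_BASE
    if not 0 <= s_index < S_COUNT:
        return syllable  # not a precomposed Hangul syllable
    lead = chr(L_BASE + s_index // N_COUNT)
    vowel = chr(V_BASE + (s_index % N_COUNT) // T_COUNT)
    t_index = s_index % T_COUNT
    trail = chr(T_BASE + t_index) if t_index else ""
    return lead + vowel + trail

def compose_hangul(jamo: str) -> str:
    """Reverse direction: assumes a well-formed L V or L V T sequence."""
    l_index = ord(jamo[0]) - L_BASE
    v_index = ord(jamo[1]) - V_BASE
    t_index = ord(jamo[2]) - T_BASE if len(jamo) == 3 else 0
    return chr(S_BASE + (l_index * V_COUNT + v_index) * T_COUNT + t_index)

jamo = decompose_hangul("\ud55c")  # the syllable 한
print([f"U+{ord(c):04X}" for c in jamo])
print(compose_hangul(jamo) == "\ud55c")
```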

Properties

Text that consists only of ASCII characters is already in all four normal forms; text that consists only of Latin-1 characters is already in NFC.

The concatenation of two strings that are in a normal form is not necessarily in that normal form, and changing between lowercase and uppercase letters can destroy the normal form.

All normalizations are idempotent: applying one a second time leaves the string unchanged. Any sequence of normalizations can be replaced by a single normalization; the result is a compatibility normalization if at least one of the normalizations involved is a compatibility normalization, otherwise a canonical one.

The Unicode standard provides some properties that make it possible to test efficiently whether a given string is in a normal form.
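These properties can be observed in a small sketch with Python's `unicodedata` module. It assumes Python 3.8 or later, where `unicodedata.is_normalized` is available; the helper `is_nfc` and the sample strings are chosen for this illustration only.

```python
import unicodedata

def is_nfc(text: str) -> bool:
    """Hypothetical shorthand for the library's NFC check."""
    return unicodedata.is_normalized("NFC", text)

# ASCII text is in all four normal forms; Latin-1 text is in NFC.
ascii_ok = all(unicodedata.is_normalized(f, "plain ASCII")
               for f in ("NFD", "NFC", "NFKD", "NFKC"))
latin1_ok = is_nfc("".join(chr(cp) for cp in range(256)))
print(ascii_ok, latin1_ok)

# Concatenation: both parts are in NFC, the result is not.
left, right = "a", "\u0308"
print(is_nfc(left), is_nfc(right), is_nfc(left + right))

# Case change: Greek small iota with dialytika and tonos (U+0390) is in
# NFC, but its uppercase mapping is a sequence that NFC would recombine.
lower = "\u0390"
print(is_nfc(lower), is_nfc(lower.upper()))

# Idempotence, and collapsing a sequence of normalizations into one.
s = "\ufb01\u00c5\u00b2"
nfkc = unicodedata.normalize("NFKC", s)
print(unicodedata.normalize("NFKC", nfkc) == nfkc)
print(unicodedata.normalize("NFC", unicodedata.normalize("NFKD", s)) == nfkc)
```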

Stability

For the sake of backward compatibility, it is guaranteed that a string that is in a normal form will remain in that normal form in future versions of the Unicode standard, provided it does not contain any unassigned characters.

As of version 4.1, it is also guaranteed that normalization itself does not change. Before that, some corrections had been made, with the result that some strings have different normal forms in different versions.

For applications that require absolute stability even across this version boundary, there are simple algorithms for converting between the different normalizations.

Applications

The most commonly used normal form in applications is NFC. It is recommended by the World Wide Web Consortium for XML and HTML and is also used for JavaScript, where the source code is converted into this form before further processing.

The canonical normalizations ensure that equivalent data are not persisted in different forms and thus ensure consistent data management.

The compatibility normalizations can be used, for example, for a search in which small visual differences should not matter. More general normalizations can build on top of the Unicode normalizations.
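As an illustration of such a search, the following sketch derives a comparison key from NFKC. The additional case folding is an assumption of this example, standing in for a "more general normalization" built on top; the function name `search_key` is hypothetical.

```python
import unicodedata

def search_key(text: str) -> str:
    """Hypothetical search key: NFKC, case folding, then NFKC again."""
    folded = unicodedata.normalize("NFKC", text).casefold()
    # Case folding can leave the normal form, so normalize once more.
    return unicodedata.normalize("NFKC", folded)

# The fi ligature and the circled digit match their plain counterparts.
print(search_key("\ufb01le \u2460") == search_key("File 1"))
# A no-break space matches an ordinary space.
print(search_key("File\u00a01") == search_key("file 1"))
```

Such a key is suitable for matching only; the original text should be stored unchanged, since compatibility normalization discards distinctions such as superscripts.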

Sources

- Julie D. Allen et al.: The Unicode Standard. Version 6.2 - Core Specification. The Unicode Consortium, Mountain View, CA, 2012. ISBN 978-1-936213-07-8. (online)

- Mark Davis, Ken Whistler: Unicode Standard Annex #15: Unicode Normalization Forms. Revision 37.

References

- Unicode Character Encoding Stability Policy: Normalization Stability. Retrieved December 1, 2012.

- Martin J. Dürst et al.: Character Model for the World Wide Web 1.0: Fundamentals. Online, accessed December 1, 2012.

- ECMAScript Language Specification. 5.1 Edition. Online, accessed December 1, 2012.

External links

- Unicode FAQ on normalization

- Normalization Browser of the ICU Project