Call stack

A call stack ( English call stack , procedure stack ) is understood in software engineering and computer science to be a particularly used stack memory that contains the status of the subroutines that have just been called during the runtime of a program . It is an intended component of most processor architectures and its use is therefore supported or even required by special instructions and registers . As Stack Machine (Engl. For stacking machine , not to be confused with pushdown automaton ) is a class of processor architectures referred constructed entirely around a call stack around, in contrast, use register machines , although usually a call stack, but not exclusively dependent on its use. The management of the call stack is usually abstracted in high-level languages and is instead taken over by the compiler and operating system. In contrast to the paradigmatic stack , however, in many architectures the access options to the call stack are not restricted to the topmost element and the classification as a stack results from its use as a stack for return addresses of subroutines. In addition, the content of the memory is very inhomogeneous and links user data with administrative data.

functionality

Subroutine calls

Structured programming usually requires dividing programs into smaller subprograms in order to implement a certain functionality exactly once each time and thus avoid duplication of code. Since these are to be called from different points in the program, a subroutine cannot know a specific address at which execution is to be continued when the subroutine has ended. For this reason, addresses must be saved when the call is made at which the execution continues after the subroutine. This is the address immediately after the address of the subroutine call. At the end of the subroutine, the processor reloads the stored address into the instruction counter and program execution continues in the call level after the subroutine call; the return address is taken from the stack. Filling and emptying the call stack, however, is not the responsibility of the programmer if programming in high-level languages such as Java . Then compilers generate the necessary commands to use the call stack. After completion of a subroutine, the call stack must be returned to its original state so that a subroutine can be called as often as required without the call stack experiencing an overflow or underflow . Whether the subroutine itself is responsible for freeing the memory or the calling code has to do this depends on the calling convention used .

Local state

In addition to the return address, many architectures also save the local status of the subroutines on the call stack. Thus, in the x86 architecture , the stack into so-called stack frame (for example, for this purpose English stackframes divided) and stored next to the return address always a reference to the beginning of the last stack frames. Sufficient memory space is reserved in the current stack frame for the data of the current subroutine and this can be addressed using conventional memory access mechanisms of the processor architecture. A special register points to the current frame and the subroutine addresses its status relative to this address. So subroutines can call each other by getting new stack frame allocated to and the register is shifted by the size of the frame, respectively. The status of the previous, not yet completed subroutine calls is retained deeper in the stack.

Parameters and return values

Depending on the calling convention, some or all of the parameters for a subroutine call are also transferred via the stack. Result values of subroutines can also be returned via the call stack, depending on the calling convention. They then occupy the same block as the call parameters. The size of this area must therefore be chosen so that each of the two types fits into it. When returning, the call parameters are no longer required, so the return values simply overwrite the call parameters and are then available to the calling code.

implementation

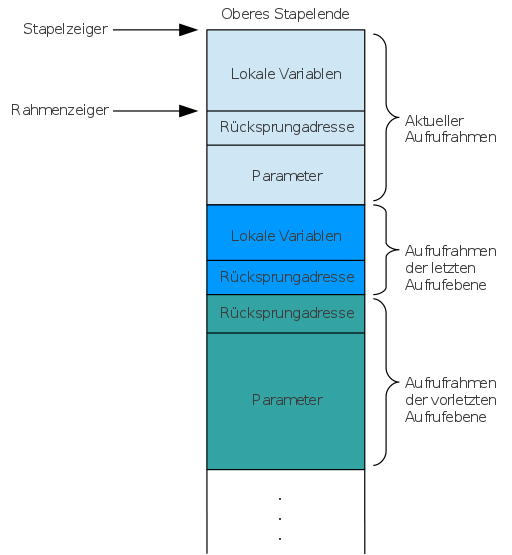

The stack frame contains the following structures for each active subroutine call:

- optional input parameters (shared with optional return values that are generated later)

- Return address

- optional local variables

After a subroutine call, the following structure remains:

- optional return values of the subroutine

The following diagram shows three call levels as an example.

- Call level (turquoise) uses parameters but has no local variables.

- Call level (blue) does not use any parameters, but has local variables.

- Call level (light blue) uses parameters as well as local variables and return values.

| During the subroutine call | After the subroutine has returned | After accepting the return values |

|---|---|---|

|

|

|

Concurrency and parallelism

A call stack can only ever represent an unbranched sequence of subroutine calls. When using processes and threads , a separate call stack must be set up for each process and thread so that return addresses and local variables do not overwrite each other. Modern approaches to concurrency sometimes also require the replacement of the call stack with more flexible mechanisms (see Activation Records ).

Stack overflow, recursion, and tail recursion

The memory for the call stack is of course not arbitrarily large. This becomes a problem especially when methods call each other or themselves very often ( recursion ). If a recursive problem is too big, the stack reaches its memory limit and no further stack frames can be reserved: The program crashes. It is called a stack overflow ( English stack overflow ). The problem can only be avoided completely if the programmer takes precautions to prevent recursion that is too deep. In addition, compilers can help optimize so-called end recursion . The compiler translates recursive calls into loops without changing the semantic meaning of the code. The resulting loop works exclusively in a single stack frame.

Segmented call stacks

A segmented call stack offers a further possibility to avoid the stack overflowing. If a subroutine is called, it checks whether there is enough memory space for its stack frame. If this is not the case, it calls another subprogram that a new so-called Stacklet ( English Stäpelchen allocated) and stores them in a list. This newly allocated block is then used for the following stack frames. Previous blocks can be found using the list as soon as the stack is reduced again. However, a segmented call stack leads to a problem that the Go community calls the “hot-split problem”. If a program constantly creates a new block for the call stack in a loop and releases it again immediately, this leads to a drop in performance. Because of this, the Rust and Go programming languages , which both used segmented call stacks in previous versions, have now replaced them with alternative solutions.

Stack trace

If an error occurs in a program that was not expected and handled by the programmer and does not allow the program to continue running, the program crashes. In doing so, information is usually collected that is intended to narrow down the cause of the crash and to simplify troubleshooting. This often includes what is known as a stack trace , which reflects the hierarchy of the call stack at the time of the error. This makes it possible to track in which subroutine the error occurred and how the program got there (since a subroutine could possibly be called from many places). In the case of a terminal recursive function, however, a considerable part of the stack trace is missing, which can be solved using a shadow stack .

Safety considerations

The proximity of local variables to the return address can allow the software to be compromised by buffer overflows.

If malware succeeds in overwriting the return address stored on the call stack in a defined manner in the subroutine that has just been called, it can influence which code is executed after the return. If your own malicious code was previously stored in the main memory, this procedure can be used to transfer control to the malicious code.

Is z. If, for example, a buffer is under the local variables and if the external malware succeeds in exploiting programming errors to ensure that this buffer is written beyond its defined end, the storage location of the return address can be reached. Since the call stack is typically written from higher to lower addresses (but the buffer from lower to higher addresses), older contents of the call stack can be changed by overwriting the buffer (see also buffer overflow ).

Shadow stack

The security risk with respect to the overwriting of return addresses, by a so-called shadow stack ( English shadow stack are mitigated). The return addresses are collected in an additional memory area that is separate from the actual stack and is thus separated from user data. Modified return addresses can thus be recognized and program execution terminated in good time. This makes typical stack-based attacks more difficult, but does not prevent them entirely, as an attacker may also gain access to the shadow stack. Shadow stacks are also used to add incomplete stack traces during debugging of programs with final recursion . For this purpose, all subroutine calls, including those that actually no longer take place due to the optimization of final recursion, are additionally stored in a shadow stack in order to facilitate troubleshooting.

interpreter

When implementing an interpreter , a new runtime environment is modeled that can have its own call stack. Some implementations design subroutine calls using a procedure callthat is called recursively during recursion, which leads to a coupling of the two call stacks. Since the size of the call stack is often limited in a machine program, the maximum recursion depth of the subroutine executed by the interpreter is also limited. One solution to this problem is to callembed in the main loop of the interpreter, which is equivalent to transforming the recursion into an iteration.

Alternatives

Register stack

Depending on the calling convention , parameters are transferred to subroutines in registers . However, since subroutines themselves require registers and can call further subroutines with different parameters, the contents of these registers often have to be stored on the call stack in order to reconstruct them later. A register stack engine prevents these cost-intensive memory accesses by simulating a stack of registers and only relocating them to the call stack when it becomes too large. If register content is to be placed on the stack and new content is to be written to the register, the register is instead renamed and a new register is used instead of the original one. Renaming is reversed to restore the contents of the register.

Activation Records

Alternatively, the stack frame (also called activation record) can be allocated on a heap . A partial heap allocation is necessary for closures , a full allocation for coroutines that keep their state between two calls. For this reason, the status cannot be managed on a stack, since this status would be overwritten if the program were paused.

Continuation-Passing Style

If the stack frame is firmly embedded in the machine program, the call stack is omitted, but this initially prevents the use of recursion. In the continuation-passing style , the return address is not even saved because a subroutine only calls new subroutines and never returns, which means that recursion is available again.

History

McCarthy reports the use of call stacks in a LISP implementation from 1958 onwards.

supporting documents

- ↑ Intel Corporation: Intel® 64 and IA-32 Architectures Software Developer's Manual (§6.1). Intel Corporation, May 2019, accessed June 18, 2019 .

- ↑ Intel Corporation: Intel® 64 and IA-32 Architectures Software Developer's Manual (§3.4.1). Intel Corporation, May 2019, accessed June 18, 2019 .

- ↑ __cdecl. In: C ++ Language Reference. Microsoft, September 10, 2018, accessed June 18, 2019 .

- ↑ Itanium C ++ ABI. Retrieved June 18, 2019 .

- ↑ Harold Abelson, Gerald Jay Sussman, Julie Sussman: Structure and Interpretation of Computer Programs . 2nd Edition. Cambridge, Massachusetts February 2, 2016, p. 45 f . ( [1] [PDF; accessed June 26, 2019]).

- ↑ Segmented Stacks in LLVM. In: LLVM Compiler Infrastructure. LLVM Project, June 26, 2019, accessed June 26, 2019 .

- ^ Contiguous stacks. In: Go Design Documents. Retrieved June 26, 2019 .

- ^ Brian Anderson: Abandoning segmented stacks in Rust. In: mail.mozilla.org Mailing Lists. November 4, 2013, accessed June 26, 2019 .

- ↑ Saravanan Sinnadurai, Qin Zhao, Weng-Fai Wong: Transparent Runtime Shadow Stack: Protection against malicious return address modifications . Singapore 2006 ( psu.edu [PDF; accessed June 26, 2019]).

- ↑ ShadowChicken.h. In: Webkit Code Base. Retrieved June 26, 2019 .

- ↑ Intel® Itanium® Architecture Software Developer's Manual. In: intel.com. Intel Corporation, May 2010, accessed June 26, 2019 .

- ^ J. McCarthy: History of Lisp. In: RL Wexelblat: History of Programming Languages. Academic Press, New York 1981, pp. 173-185.