Process (computer science)

A process (called a task in some operating systems) is a computer program in execution. More precisely, a process is the concrete instantiation of a program for execution within a computer system, supplemented by further (administrative) information and resource allocations that the operating system maintains for this execution.

A process is the runtime environment for a program on a computer system, together with the program's binary code embedded in it during execution. The operating system controls a process dynamically through actions that move it between corresponding states. The entire state information of a running program is also referred to as a process. In contrast, a program is the (static) procedural specification for processing on a computer system.

Processes are managed by the operating system's process scheduler. The scheduler can either let a process compute until it ends or blocks (non-interrupting, or non-preemptive, scheduler), or ensure that the currently running process is interrupted after a short period of time so that the scheduler can switch back and forth between the active processes (interrupting, or preemptive, scheduler). This creates the impression of simultaneity even though a processor core never executes more than one process at any point in time. The latter is the predominant scheduling strategy in today's operating systems.

A concurrent execution unit within a process is called a thread. In modern operating systems, each process has at least one thread that executes the program code. Often it is no longer the processes themselves that are executed concurrently, but the threads within them.

Differentiation between program and process

A program is a sequence of instructions (consisting of declarations and statements) that complies with the rules of a particular programming language and is used to carry out or solve certain functions, tasks, or problems with the aid of a computer. A program does nothing until it has been started. When a program is started, or more precisely when a copy of the program (held in persistent storage) is loaded into main memory, this instance becomes an (operating system) process, which must be assigned to a processor core in order to run.

Andrew S. Tanenbaum metaphorically illustrates the difference between a program and a process using the process of baking a cake:

- The recipe for the cake is the program (i.e. an algorithm written in a suitable notation), the baker is the processor core, and the ingredients for the cake are the input data. The process is the activity that consists of the baker reading the recipe, fetching the ingredients, and baking the cake. Now suppose the baker's son comes running into the bakery screaming at the top of his lungs. In this case, the baker makes a note of where he is in the recipe (the state of the current process is saved) and switches to a process with a higher priority, namely "providing first aid". To do this, he pulls out a first aid manual and follows the instructions there. The two processes "baking" and "providing first aid" are each based on a different program: the cookbook and the first aid manual. After the baker's son has received medical attention, the baker can return to "baking" and continue from the point at which he was interrupted. The example shows that several processes can share one processor core. A scheduling strategy decides when work on one process is interrupted and another process is served.

A process provides the runtime environment for a program on a computer system and is the instantiation of a program. As a running program, a process contains the program's instructions; it is a dynamic sequence of actions that bring about changes of state. The entire state information of a running program is also referred to as a process.

In order for a program to run, certain resources must be allocated to it. These include, among other things, a sufficient amount of main memory in which to run the corresponding process. In general, a compiler translates a program into an executable file that the operating system can load into the address space of a process. A program can only become a process once the executable has been loaded into main memory. There are two common ways in which a user can instruct the operating system to load and run an executable file: by double-clicking an icon that represents the executable file, or by entering the file name on the command line. In addition, running programs can themselves start further processes without the user being involved.

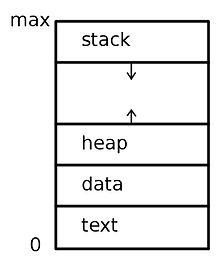

In particular, a process includes

- the value of the program counter (instruction counter),

- the contents of the processor registers belonging to the process,

- the program segment that contains the program's executable code,

- the stack segment, which contains temporary data such as return addresses and local variables,

- the data segment containing global variables, and

- possibly a heap that contains dynamically requested memory that can also be released again.

Even if two processes belong to the same program, they are still considered to be two different execution units. For example, different users can run different copies of the same mail program, or a single user can activate different copies of a browser program. Each of these is a separate process. Even if the program segment is the same for different instances of a program, the data, heap and stack segments differ.

A process can in turn be an execution environment for other program code. One example of this is the Java runtime environment. It usually includes the Java Virtual Machine (JVM), which is responsible for executing Java applications. With it, Java programs are executed largely independently of the underlying operating system. For example, a compiled Java program Program.class can be executed with the command-line command java Program. The java command creates an ordinary process for the JVM, which in turn executes the Java program Program in the virtual machine.

The memory areas that a running program can use as a process are referred to as the process address space. A process can only work on data that has previously been loaded into its address space; data that is currently being used by a machine instruction must also be in (physical) main memory. The address space of a process is generally virtual: virtual memory denotes the address space that the operating system makes available to a process independently of the main memory that is actually present. The memory management unit (MMU) manages access to main memory. It converts a virtual address into a physical address (see also memory management and paging).

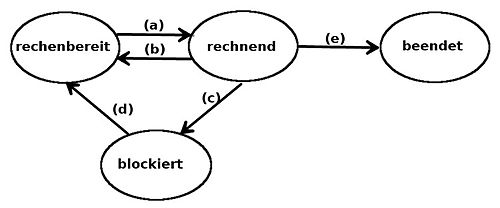

Process states

A distinction is made between

- the execution state of a process (the process context): which data are in the registers, how much memory it occupies at which addresses, the current execution point in the program (instruction counter), and so on, and

- the process state from the point of view of the operating system: for example "currently computing", "blocked", "waiting", "finished".

A processor (core) can only execute one process at a time. In the first computers, processes were therefore always executed one after the other in their entirety; only one process could run at a time (exclusively). In order to make use of waiting times, for example when accessing slow peripheral devices, the possibility was created to execute processes only partially, to interrupt them, and to continue them later ("resume"). In contrast to single-program operation (single processing), the term multiprogramming (also multi-program operation or multiprocessing) describes the possibility of "simultaneous" or "quasi-simultaneous" execution of programs or processes in an operating system. Most modern operating systems, such as Windows or Unix, support multi-process operation, in which the number of concurrent processes is usually much higher than the number of available processor cores.

A process thus goes through various states during its lifetime. If the operating system decides to assign the processor to another process for a certain period of time, the currently computing process is first stopped and switched to the ready state. The other process can then be switched to the computing state. It is also possible that a process is blocked because it cannot continue working. Typically this happens because it is waiting for input data that is not yet available. In simplified terms, four states can be distinguished:

- Computing (running, also active): The process is currently being executed on the CPU, i.e. its program instructions are being processed. The CPU can also be withdrawn from a process in the computing state; the process is then set to the ready state.

- Ready (ready to compute): Processes in the ready state have been stopped so that another process can run. In principle they could continue immediately and are only waiting for the CPU to be reassigned to them. A newly created process also first enters the ready state.

- Blocked: Processes in the blocked state are waiting for certain events that are necessary for them to continue. For example, I/O devices are very slow components compared to the CPU; if a process has asked the operating system to communicate with an I/O device, it must wait until the operating system has completed the request.

- Terminated: The process has finished its execution; the operating system still needs to "clean up".

This can be modeled as a state machine of a process with four states. Among others, there are the following state transitions:

- (a) From ready to computing: The processes that are in the ready state are managed in a ready queue. Transition (a) is performed by a part of the operating system called the process scheduler. Usually several processes are ready to compute at the same time and compete for the CPU. The scheduler chooses which process runs next.

- (b) From computing to ready: This transition takes place when the computing time allocated to the process by the scheduler has expired and another process is to receive computing time, or when the active process voluntarily gives up its computing time early, for example with a system call.

- (c) From computing to blocked: If a process in the computing state cannot continue at the moment, mostly because it requires an operating system service or because a required resource is not (yet) available, the operating system places it in the blocked state. For example, a process is blocked if it reads from a pipe or a special file (e.g. a command line) and no data is available there yet.

- (d) From blocked to ready: If the cause of the blocking no longer exists, for example because the operating system has provided the service, a resource has become available, or an external event has occurred that the blocked process was waiting for (e.g. a key press or mouse click), the process is set back to the ready state.

- (e) From computing to terminated: When a process has done its job, it terminates, i.e. it reports to the operating system that it is ending; the operating system puts it in the terminated state. Other reasons for termination are that the process has caused a fatal error and the operating system has to abort it, or that the user explicitly requests termination.

The operating system now has time to free up resources, close open files, and perform similar cleanups.

The state machines of modern operating systems are somewhat more complex, but in principle they are designed in a similar way. The names of the process states also vary between operating systems. The basic principle is that only one process per processor (core) can be in the computing state, whereas many processes can be ready or blocked at the same time.
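The simplified four-state model and its transitions (a) to (e) can be written down as a small table of allowed state changes. The following is only a minimal sketch: the names proc_state, transition_allowed, and change_state are made up for illustration, and the sketch assumes, as the simplified model does, that a process terminates only from the computing state.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* The four simplified process states from the text. */
enum proc_state { READY, COMPUTING, BLOCKED, TERMINATED };

/* Returns true if the simplified model allows moving from `from` to `to`.
   The letters refer to transitions (a) to (e) described in the text. */
bool transition_allowed(enum proc_state from, enum proc_state to)
{
    switch (from) {
    case READY:
        return to == COMPUTING;      /* (a) chosen by the scheduler */
    case COMPUTING:
        return to == READY           /* (b) time slice expired or CPU given up */
            || to == BLOCKED         /* (c) waiting for a service or resource */
            || to == TERMINATED;     /* (e) normal end or abort */
    case BLOCKED:
        return to == READY;          /* (d) awaited event has occurred */
    case TERMINATED:
        return false;                /* final state: no way back */
    }
    return false;
}

/* Applies a state change if the model allows it; returns true on success
   and leaves the state untouched otherwise. */
bool change_state(enum proc_state *state, enum proc_state to)
{
    assert(state != NULL);
    if (!transition_allowed(*state, to))
        return false;
    *state = to;
    return true;
}
```

A blocked process cannot be dispatched directly in this model: change_state refuses the step from BLOCKED to COMPUTING, so the process has to pass through READY first.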

Process context

All of the information that is important for running and managing processes is called the process context.

The process context includes, among other things, the information that the operating system manages for a process and the contents of all processor registers belonging to the process (e.g. general-purpose registers, instruction counter, status register, and MMU registers). The register contents are an essential part of the so-called hardware context. If a computing process loses the CPU, a so-called context switch takes place: first, the hardware context of the active process is saved; then the context of the scheduler is established and the scheduler is executed; it now decides whether another process should run next and, if so, which one. The scheduler then saves its own context, loads the hardware context of the process to be activated into the processor core, and starts that process.

Process management

Process control block

Each process of an operating system is represented by a process control block (PCB, also task control block). The process control block contains a large amount of information related to a particular process. All important information about a process is saved when it changes from the computing state to the ready or blocked state: the hardware context of the process to be suspended is saved in its PCB, and the hardware context of the newly activated process is loaded from its PCB into the processor. This allows the blocked or ready process to continue at a later point in time exactly as it was before the interruption (with the exception of the change it had been blocked waiting for, if applicable).

The information that is stored in the PCB about a process includes:

- The process identifier (PID for short, also process number or process ID): This is a unique key that is used to identify processes. The PID does not change during the runtime of the process. The operating system ensures that no number is assigned twice at the same time anywhere in the system.

- The process state: ready, computing, blocked, or terminated.

- The contents of the program counter, which contains the address of the next instruction to be executed.

- The contents of all other CPU registers that are available to or associated with the process.

- Scheduling information: This includes the priority of the process, pointers to the scheduling queues and other scheduling parameters.

- Information for memory management: This information can contain, for example, the values of the base and limit registers and pointers to the code segment, data segment and stack segment.

- Accounting: The operating system also keeps a record of how long a process has been calculating, how much memory it occupies, etc.

- I/O status information: This includes a list of the I/O devices (or resources) that are connected to or occupied by the process, for example open files.

- Parent process, process group, CPU time of child processes, etc.

- Access and user rights
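The list above can be pictured as a C structure. The following is a minimal sketch, not the layout of any real kernel: all type names, field names, and sizes (NUM_REGS, MAX_OPEN_FILES, hw_context, pcb) are assumptions chosen for illustration. The two helper functions show how the hardware context is stored in the PCB when a process is suspended and loaded from it when the process is activated again.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/types.h>    /* pid_t, uid_t, gid_t */

#define NUM_REGS 16       /* assumption: 16 general-purpose registers */
#define MAX_OPEN_FILES 8  /* assumption: small fixed-size file table */

enum proc_state { READY, COMPUTING, BLOCKED, TERMINATED };

/* Hypothetical hardware context: what must survive a context switch. */
struct hw_context {
    uintptr_t program_counter;   /* address of the next instruction */
    uintptr_t stack_pointer;
    uintptr_t regs[NUM_REGS];    /* remaining CPU registers */
};

/* Hypothetical process control block mirroring the list in the text. */
struct pcb {
    pid_t pid;                        /* process identifier */
    enum proc_state state;            /* process state */
    struct hw_context ctx;            /* saved program counter and registers */
    int priority;                     /* scheduling information */
    uintptr_t mem_base, mem_limit;    /* memory management information */
    unsigned long cpu_ticks;          /* accounting */
    int open_files[MAX_OPEN_FILES];   /* I/O status information */
    pid_t parent_pid;                 /* parent process */
    uid_t uid;                        /* access and user rights */
    gid_t gid;
};

/* Saves the CPU contents in the PCB of the process that is suspended
   and records its new state (ready or blocked). */
void pcb_save_context(struct pcb *p, const struct hw_context *cpu,
                      enum proc_state next_state)
{
    assert(p != NULL && cpu != NULL);
    p->ctx = *cpu;
    p->state = next_state;
}

/* Loads the saved context of the process that is activated. */
void pcb_restore_context(struct pcb *p, struct hw_context *cpu)
{
    assert(p != NULL && cpu != NULL);
    *cpu = p->ctx;
    p->state = COMPUTING;
}
```

In a real kernel, saving and restoring registers is done in architecture-specific assembly code; copying a structure only models the bookkeeping.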

Process table

The operating system maintains a table of the current processes in a kernel data structure, the so-called process table. When a new process is created, a process control block is created as a new entry. Many accesses to a process are based on looking up the associated PCB in the process table via the PID. Since PCBs contain information about the resources in use, they must be directly accessible in memory. Access that is as efficient as possible is therefore an essential criterion for the data structure in which the operating system stores the table.
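One way to make the lookup by PID efficient is a hash table. The sketch below is an illustration under stated assumptions, not the data structure of a particular operating system: the table has a fixed size, PID 0 marks a free slot, collisions are resolved by linear probing, and entries are never removed (removal would need extra bookkeeping). The names process_table, table_insert, and table_lookup are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>   /* pid_t */

#define TABLE_SIZE 64    /* assumption: fixed number of slots */

/* Heavily reduced PCB; pid == 0 marks a free slot in this sketch. */
struct pcb {
    pid_t pid;
    int priority;
};

static struct pcb process_table[TABLE_SIZE];

/* Slot examined on the given probe step: modulo hash plus linear probing. */
static size_t slot_for(pid_t pid, size_t probe)
{
    return ((size_t)pid + probe) % TABLE_SIZE;
}

/* Creates the table entry for a new process.
   Returns NULL if the table is full. */
struct pcb *table_insert(pid_t pid, int priority)
{
    assert(pid > 0);
    for (size_t i = 0; i < TABLE_SIZE; i++) {
        struct pcb *p = &process_table[slot_for(pid, i)];
        if (p->pid == 0) {
            p->pid = pid;
            p->priority = priority;
            return p;
        }
    }
    return NULL;
}

/* Finds the PCB that belongs to a PID; returns NULL if there is none. */
struct pcb *table_lookup(pid_t pid)
{
    assert(pid > 0);
    for (size_t i = 0; i < TABLE_SIZE; i++) {
        struct pcb *p = &process_table[slot_for(pid, i)];
        if (p->pid == pid)
            return p;
        if (p->pid == 0)
            return NULL;   /* reached a free slot: PID is not in the table */
    }
    return NULL;
}
```

As long as the table is sparsely filled, a lookup touches only a few slots regardless of how many processes exist.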

Operations on processes

Process creation

Processes that interact with (human) users must "run in the foreground", that is, they must allocate and use input and output resources. Background processes, on the other hand, perform functions that do not require user interaction; they can usually be attributed to the operating system as a whole rather than to a specific user. Processes that remain in the background are called "daemons" on Unix-based operating systems and "services" on Windows-based operating systems. When the computer starts up, many processes are usually started that the user does not notice. For example, a background process for receiving e-mail could be started, which remains in the blocked state until an e-mail arrives. In Unix-like systems, the running processes are displayed with the ps command; under Windows, the Task Manager is used for this.

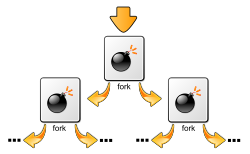

A running process can create other processes (or have them created) by means of a system call. One example of such a system call is the fork() function in Unix-like systems: it creates an additional, new process (child process) from an existing process (parent process). The child process is created as a copy of the parent process, but it receives its own process identifier (PID) and is subsequently executed as an independent instance of a program, independently of the parent process. In Windows, a process can be started using the CreateProcess() function.

In some operating systems, a certain relationship continues to exist between parent and child processes. If the child process creates further processes, a process hierarchy arises. In Unix, a process and its descendants form a process family (process group). For example, if a user sends a signal using keyboard input, it is forwarded to all processes in the process family that are currently connected to the keyboard. Each process can then decide for itself how to handle the signal.

In Windows, however, there is no concept of a process hierarchy. All processes are equal. There is only a special token (called a handle) that allows a parent process to control its child process.

Process termination

A process normally ends when it declares itself finished by means of a system call at the end of its program sequence. Screen-oriented programs such as word processors and web browsers provide an icon or menu item that the user can click to instruct the process to terminate voluntarily. A process should close all open files and return all resources before it ends. The terminating system call is exit under Unix and ExitProcess under Windows.

A process can also be terminated (killed) by another process. The system call that instructs the operating system to terminate another process is called kill under Unix and TerminateProcess under Windows.
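On a POSIX system, terminating another process might look like the following sketch. The function name kill_child_and_check is made up for illustration, and the choice of SIGKILL (which cannot be caught or ignored) rather than the more polite SIGTERM is an assumption made to keep the example deterministic.

```c
#include <assert.h>
#include <signal.h>
#include <stdbool.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that only waits, terminates it with SIGKILL, and reports
   whether the child really ended because of that signal.
   Returns false if any step fails. */
bool kill_child_and_check(void)
{
    pid_t pid = fork();
    if (pid < 0)
        return false;            /* fork failed */

    if (pid == 0) {
        for (;;)
            pause();             /* child: sleep until a signal arrives */
    }

    assert(pid > 0);             /* only the parent gets here */

    /* Parent: ask the operating system to terminate the child. */
    if (kill(pid, SIGKILL) != 0)
        return false;

    /* Wait for the child and inspect how it ended. */
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return false;

    return WIFSIGNALED(status) && WTERMSIG(status) == SIGKILL;
}
```

A process may only signal processes it has permission to signal, typically its own; the child created here belongs to the same user, so the call succeeds.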

Another reason for termination is a fatal error caused by the process, which often results from programming errors. This happens, for example, if the process tries to access resources that are not (or no longer) allocated to it, such as writing to an already closed file or reading from a memory area that has already been returned to the operating system. Likewise, the operating system aborts a process that tries to carry out illegal actions, e.g. executing bit patterns that the CPU does not recognize as instructions, or accessing hardware directly in ways that only the operating system is permitted to.

If a process has been terminated in a Unix-like operating system, it can still be listed in the process table, occupy allocated resources, and have attributes. A process in this state is called a zombie process. When a child process has terminated, the parent process can ask the operating system how it terminated: successfully, with an error, crashed, aborted, and so on. To make this query possible, a process remains in the process table after it has terminated until the parent process performs the query, regardless of whether the information is needed or not. Until then, the child process is in the zombie state.
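The query described here corresponds to the wait family of calls on POSIX systems. The following minimal sketch uses a hypothetical helper, run_child_and_reap, that lets a child exit with a given code and then collects that code in the parent, which removes the zombie entry.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that exits immediately with `code`, then reaps it.
   Returns the exit status seen by the parent, or -1 on error or if the
   child did not exit normally. */
int run_child_and_reap(int code)
{
    assert(code >= 0 && code <= 255);

    pid_t pid = fork();
    if (pid < 0)
        return -1;               /* fork failed */

    if (pid == 0)
        _exit(code);             /* child: terminate right away */

    /* Once the child has exited, it stays in the process table as a
       zombie until the parent collects its status here. */
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;

    if (WIFEXITED(status))
        return WEXITSTATUS(status);
    return -1;                   /* e.g. terminated by a signal */
}
```

For example, run_child_and_reap(7) returns 7 in the parent. If the parent never called waitpid, the child's entry would remain in the process table as a zombie for as long as the parent keeps running.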

Process owner and process rights

One aspect of IT security requires users to authenticate themselves to the computer system. Otherwise the operating system cannot judge which files or other resources a user is allowed to access. The most common form of authentication asks the user to enter a login name and password. Each user receives a unique user ID (UID). Users can in turn be organized into groups, each of which is assigned a group ID (GID).

Access to certain data should be restricted and controlled so that only authorized users or programs can access the information. For example, an important security mechanism in Unix systems is based on this user concept: each process carries the user ID and group ID of its caller. The login process tells the operating system under which user ID a process is to be started. A process is said to belong to the user who started it, so the user is the owner of the process. If, for example, a file is created, it receives the UID and GID of the creating process. The file therefore also belongs to the user in whose name (with whose UID) it was created. When a process accesses a file, the operating system uses the UID of the file to check whether it belongs to the owner of the process and then decides whether access is permitted.

A special case is the superuser (or root user), who has the most extensive access rights. In Unix systems this user account has the UID 0. Processes with the UID 0 are also allowed to make a small number of protected system calls that are blocked for normal users. The setuid bit enables an extended protection mechanism. If the setuid bit is set on an executable file, the effective UID of a process running it is set to the owner of the executable instead of the user who called it. This approach gives unprivileged users and processes controlled access to privileged resources.

Process switching

In the case of a process switch (context switch), the processor is assigned to another process. In the case of non-interruptible processes, a process switch takes place at the start and end of a process execution. In the case of interruptible processes, a switch is possible whenever the processor allows it. One speaks of the suspension of one process by another when a process with a higher priority receives the CPU.

Process scheduling

A process scheduler regulates the timing of several processes. The strategy it uses to switch between processes is called a scheduling strategy. It should enable the "best possible" allocation of the CPU to the processes, although different goals can be pursued depending on the system. In interactive systems, for example, a short response time is desired, i.e. the shortest possible reaction time of the system to a user's input. For example, when a user types in a text editor, the text should appear immediately. When there are several users, a certain fairness should also be guaranteed: no (user) process should have to wait disproportionately long while another is preferred.

Single-processor systems manage exactly one processor (core). There is therefore always only one process in the computing state; all other ready processes must wait until the scheduler assigns the CPU to them for a certain time. Even in multiprocessor systems, the number of processes is usually greater than the number of processor cores, and the processes compete for the scarce resource of CPU time. Another general goal of a scheduling strategy is to keep all parts of the system as busy as possible: if the CPU and all input/output devices can be kept working all the time, more work is done per second than if some components of the computer system are idle.

Scheduling strategies can be roughly divided into two categories. A non-interrupting (non-preemptive) scheduling strategy selects a process and lets it run until it blocks (e.g. because of input/output or because it is waiting for another process) or until it voluntarily gives up the CPU. In contrast, an interrupting (preemptive) scheduling strategy selects a process and does not let it run longer than a specified time. If the process is still computing after this time interval, it is interrupted (not terminated) and returned to the ready state, and the scheduler selects another process from those that are ready to run. Interrupting scheduling requires a timer interrupt that returns control of the CPU to the scheduler at the end of the time interval.
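The difference between the two categories can be made concrete with a small simulation. The sketch below models round-robin scheduling, one common preemptive strategy that the text does not single out, under simplifying assumptions: all processes are ready at time 0, none of them ever blocks, and a context switch costs no time. The function name round_robin and its parameters are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Simulates preemptive round-robin scheduling on a single CPU.
   burst[i]  : CPU time process i still needs (used up by the simulation)
   finish[i] : receives the time at which process i completes
   quantum   : longest time a process may run before it is preempted */
void round_robin(unsigned burst[], unsigned finish[], size_t n,
                 unsigned quantum)
{
    assert(quantum > 0);

    unsigned clock = 0;
    size_t remaining = 0;
    for (size_t i = 0; i < n; i++) {
        finish[i] = 0;
        if (burst[i] > 0)
            remaining++;
    }

    /* Because no process blocks, cycling through the array in index
       order matches a FIFO ready queue. */
    while (remaining > 0) {
        for (size_t i = 0; i < n; i++) {
            if (burst[i] == 0)
                continue;                    /* already finished */
            unsigned slice = burst[i] < quantum ? burst[i] : quantum;
            clock += slice;                  /* process i computes */
            burst[i] -= slice;
            if (burst[i] == 0) {
                finish[i] = clock;           /* process i terminates */
                remaining--;
            }
            /* otherwise the timer interrupt fires and process i
               goes back to the ready state */
        }
    }
}
```

With CPU demands of 5, 3, and 1 time units and a quantum of 2, the processes finish at times 9, 8, and 5: the short job is done early. With a quantum larger than every demand, the same function behaves like a non-preemptive first-come, first-served scheduler and the finish times become 5, 8, and 9.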

Interruptions

The hardware or software can temporarily interrupt a process in order to carry out another, usually short but time-critical, piece of processing (see interrupt). Since interruptions are frequent events in a system, it must be ensured that an interrupted process can be continued later without losing work that has already been done. The interrupt handling is inserted into the execution of the interrupted process and is carried out in the operating system.

The triggering event is called an interrupt request (IRQ). After this request, the processor executes an interrupt routine (also called interrupt handler, interrupt service routine, or ISR for short). The interrupted process is then continued from where it was interrupted.

Synchronous interruptions

Synchronous interruptions occur in the case of internal events that always occur at the same point in the program, especially with identical framework conditions (program execution with the same data). The point of interruption in the program is therefore predictable.

The term synchronous indicates that these interruptions are linked to the execution of an instruction in the processor core itself. The processor core recognizes exceptions (also called traps) as part of its processing, for example a division by zero. It must therefore react immediately to such internal interrupt requests and initiate the handling of the (error) situation in the interrupt handler. They are therefore not deferred.

Special terms have become established for some interrupt requests according to their typical meaning, for example:

- A command alarm occurs when a user process tries to execute a privileged instruction.

- A page fault occurs in virtual memory management with paging when a program accesses a memory area that is not currently in main memory but has been swapped to the hard disk, for example.

- An arithmetic alarm occurs when an arithmetic operation cannot be performed, such as when dividing by zero.

Asynchronous interruptions

Asynchronous interruptions (also called (asynchronous) interrupts) are interruptions that are not linked to the process currently being executed. They are caused by external events and are not related to what the CPU is processing. Accordingly, such interruptions are unpredictable and not reproducible.

Often an asynchronous interrupt is triggered by an input/output device. Such a device usually consists of two parts: a controller and the device itself. The controller is a chip (or several chips) that controls the device at the hardware level. It receives commands from the operating system, such as reading data from the device, and executes them. As soon as the input/output device has finished its task, it generates an interrupt. For this purpose, a signal is placed on the bus and is recognized by the corresponding interrupt controller, which then generates an interrupt request at the CPU. This request has to be handled by a suitable section of program code (usually an interrupt service routine, ISR for short).

Examples of devices generating an interrupt request are:

- Network card: when data has been received and is available in the buffer

- Hard disk: when the previously requested data has been read and is ready to be retrieved (reading from the hard disk takes a relatively long time)

- Graphics card: when the current picture has been drawn

- Sound card: when more sound data is needed for playback before the buffer runs empty

The interrupt service routines are usually addressed via interrupt vectors that are stored in an interrupt vector table. An interrupt vector is an entry in this table that contains the memory address of an interrupt service routine.
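In C, such a table can be modeled as an array of function pointers indexed by the interrupt number. The following is only a software model under stated assumptions: on real hardware the table layout is dictated by the processor architecture, and the names vector_table, register_isr, dispatch_interrupt, and the two example routines are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_VECTORS 8          /* assumption: tiny table for illustration */

typedef void (*isr_t)(void);   /* type of an interrupt service routine */

/* The interrupt vector table: entry i holds the address of the ISR
   for interrupt number i, or NULL if none is installed. */
static isr_t vector_table[NUM_VECTORS];

/* Hypothetical devices: each ISR only counts how often it has run. */
static int keyboard_events, disk_events;
static void keyboard_isr(void) { keyboard_events++; }
static void disk_isr(void)     { disk_events++; }

/* Stores the address of an ISR in the table entry for `irq`. */
void register_isr(unsigned irq, isr_t handler)
{
    assert(irq < NUM_VECTORS);
    vector_table[irq] = handler;
}

/* Looks up the vector for `irq` and calls the routine it points to.
   Returns 0 if a routine ran and -1 if none is installed. */
int dispatch_interrupt(unsigned irq)
{
    if (irq >= NUM_VECTORS || vector_table[irq] == NULL)
        return -1;
    vector_table[irq]();
    return 0;
}
```

After register_isr(1, keyboard_isr), every call to dispatch_interrupt(1) runs the keyboard routine, while an interrupt number without an installed routine is reported with -1.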

At the end of an interrupt processing routine, the ISR sends an acknowledgment to the interrupt controller. The old processor status is then restored and the interrupted process can continue working at the point where it was interrupted. A corresponding scheduling decision can also enable a process with a higher priority to receive the CPU first, before the interrupted process has its turn. This depends on the scheduling strategy of the operating system.

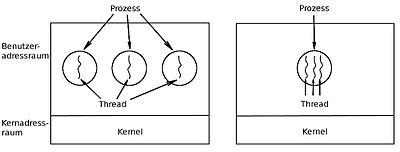

Threads

Since the management of processes is relatively complex, modern operating systems also support a more resource-efficient concept, so-called threads (threads of execution). A thread represents a concurrent execution unit within a process. In contrast to heavyweight processes, threads are characterized as lightweight processes (LWP for short). They are easier to create and to destroy again: in many systems, creating a thread is 10 to 100 times faster than creating a process. This property is particularly advantageous when the number of threads required changes dynamically and quickly.

Threads exist within processes and share their resources. A process can contain several threads or, if the program does not provide for parallel processing, only a single thread. An essential difference between a process and a thread is that each process has its own address space, whereas no new address space has to be set up for a newly started thread; instead, the threads can access the shared memory of the process. Within a process, threads also share other operating-system-dependent resources such as processors, files, and network connections. Because of this, the administrative effort for threads is usually lower than for processes. Threads have two significant efficiency advantages. First, in contrast to processes, switching between threads does not require a complete change of the process context, since all threads of a process share part of that context. Second, communication and data exchange between threads are simple and fast. However, the threads of a process are not protected from one another and must therefore coordinate (synchronize) when accessing the shared process resources.

The implementation of threads depends on the particular operating system. It can be done at the kernel level or at the user level. In Windows operating systems, threads are implemented at the kernel level; in Unix, thread implementations are possible both at the kernel level and at the user level. In the case of user-level threads, the corresponding thread library performs the scheduling and the switching between threads. Each process manages a private thread control block (analogous to the PCB), and the kernel has no knowledge of whether a process is using multiple threads or not. With kernel threads, the threads are managed in kernel mode. The application programmer does not need a special thread library. The kernel is involved in creating threads and switching between them.

A thread package at user level (left) and a thread package managed by the operating system kernel (right)

Interprocess communication

The term interprocess communication (IPC for short) refers to various methods of exchanging information between the processes of a system. In the "shared memory" variant, communication takes place through several processes accessing a common data store, for example shared areas of main memory. With a message queue, on the other hand, one process appends "messages" (data packets) to a list (the "message queue"), from which another process can pick them up. The third variant is the "pipe", a (byte) data stream between two processes that works on the FIFO principle. In order to transfer longer data packets efficiently, a pipe is usually supplemented by a send and/or receive buffer.
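Of the three variants, the pipe is the easiest to show in a few lines on a POSIX system. In the following minimal sketch a child process writes a message into a pipe and the parent reads it; the function name send_through_pipe and its parameters are hypothetical, and error handling is reduced to the essentials.

```c
#include <assert.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Sends `msg` from a child process to its parent through a pipe.
   Stores what the parent received in `out` (NUL-terminated) and
   returns the number of bytes read, or -1 if setup fails. */
ssize_t send_through_pipe(const char *msg, char *out, size_t out_size)
{
    assert(msg != NULL && out != NULL && out_size > 0);

    int fd[2];                      /* fd[0]: read end, fd[1]: write end */
    if (pipe(fd) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }

    if (pid == 0) {                 /* child: the writer */
        close(fd[0]);               /* does not read */
        size_t len = strlen(msg);
        ssize_t written = write(fd[1], msg, len);
        close(fd[1]);
        _exit(written == (ssize_t)len ? 0 : 1);
    }

    /* parent: the reader */
    close(fd[1]);                   /* so that read() can see end-of-file */
    size_t total = 0;
    ssize_t n;
    while (total < out_size - 1 &&
           (n = read(fd[0], out + total, out_size - 1 - total)) > 0)
        total += (size_t)n;         /* bytes arrive in FIFO order */
    out[total] = '\0';
    close(fd[0]);

    waitpid(pid, NULL, 0);          /* reap the child */
    return (ssize_t)total;
}
```

The parent blocks in read() until the child has written data or closed its end of the pipe, which is exactly the blocking behavior described for reading from an empty pipe.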

Communication between processes should take place in a well-structured manner. In order to avoid race conditions, the critical sections in which shared resources are accessed should be protected from (quasi-)simultaneous access. This can be implemented using various mechanisms such as semaphores or monitors. A deadlock arises when a process waits for a resource to be released or a message to be sent by another process, while that other process in turn waits for the first one to release another resource or send a message. More than two processes can also block each other in this way.

Classic problems of interprocess communication are the producer-consumer problem, the dining philosophers problem and the readers-writers problem.

Improvement of the CPU utilization

Multiprogramming can improve CPU utilization. If a process spends a fraction $p$ of its run time waiting for I/O to complete, then the probability that $n$ such processes are all waiting for I/O at the same time is $p^n$. This corresponds to the probability that the CPU is idle. CPU utilization can therefore be expressed as a function of $n$, which is called the degree of multiprogramming:

- CPU utilization = $1 - p^n$

It is quite common for an interactive process to spend 80% or more of its time waiting for I/O. This value is also realistic on servers that do a lot of disk input/output. Assuming that processes spend 80% of their time in the blocked state, about 10 processes must be running to bring the share of wasted CPU time down to roughly 10%, since the probability that all 10 are blocked at once is 0.8^10 ≈ 0.107.

Of course, this probabilistic model is only an approximation. It assumes that all processes are independent. On a single CPU, however, several processes cannot run simultaneously: a process that is ready to run has to wait while the CPU is executing another process. An exact model can be constructed using queueing theory. Nevertheless, the model illustrates the improvement in CPU utilization: with multiprogramming, processes can use CPU time that would otherwise be idle. At least rough predictions about CPU performance can be made in this way.

Program examples

Creating a child process with the fork() call

With the fork function, a process creates an almost identical copy of itself. The name refers to a fork in the road: the calling process reaches a point at which the parent and the child process go their separate ways.

The following C program declares a counter variable counter and first assigns it the value 0. A call to fork() then creates a child process that is an (almost) identical copy of the parent process. If fork() succeeds, the system call returns the PID of the newly created child to the parent process. In the child, by contrast, the function returns 0. This return value makes it possible to tell whether the code is running in the parent or in the child process and to continue accordingly in an if-else branch. A process obtains its own PID with getpid().

After the call to fork(), two processes run quasi-parallel, each incrementing its own copy of the variable counter from 0 to 1000. The programmer has no influence on which process runs at which point in time. Accordingly, the output on the console can vary from one run to the next.

    #include <stdio.h>
    #include <unistd.h>

    int main(void)
    {
        printf("PROGRAM START\n");
        // Flush stdout so that the buffered line is not printed a second
        // time by the child when the output is redirected
        fflush(stdout);

        int counter = 0;
        pid_t pid = fork();

        if (pid == 0)
        {
            // We are in the child process
            for (int i = 0; i < 1000; ++i)
            {
                printf(" PID: %d; ", (int)getpid());
                printf("Child process: counter=%d\n", ++counter);
            }
        }
        else if (pid > 0)
        {
            // We are in the parent process
            for (int j = 0; j < 1000; ++j)
            {
                printf("PID: %d; ", (int)getpid());
                printf("Parent process: counter=%d\n", ++counter);
            }
        }
        else
        {
            // fork() failed
            printf("fork() failed!\n");
            return 1;
        }

        printf("PROGRAM END\n");
        return 0;
    }

Fork bomb

The following sample program creates a fork bomb. It starts a process that, in an endless loop, repeatedly uses fork() to create child processes that behave in the same way as the parent process. This uses up the available system resources (process table entries, CPU, etc.). A fork bomb thus carries out a denial-of-service attack, but it can also "go off" unintentionally if the fork call is used carelessly.

    #include <unistd.h>

    int main(void)
    {
        // Warning: every pass through the loop doubles the number of processes
        while(1)
        {
            fork();
        }

        return 0; // never reached
    }

The concrete effect of a fork bomb depends primarily on the configuration of the operating system. For example, PAM on Unix allows the number of processes and the maximum memory consumption per user to be limited. If a fork bomb "explodes" on a system that uses these limits, the attempts to start new copies of the fork bomb fail at some point and its growth is curbed.
