Paging

When paging (see. Engl. Page " Memory page ") refers to the method of storage management by page addressing by operating systems . The German term tile management is rarely used.

Paging enables virtual memory management . Virtual memory describes the address space that is independent of the actual physical main memory and that is made available to a process by the operating system . Since there are usually more virtual addresses than can be implemented in the physical main memory, some memory areas are temporarily transferred to the hard disk .

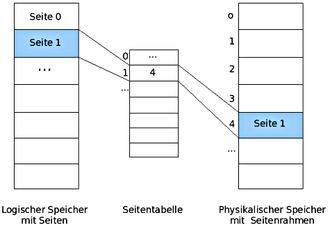

When paging, the virtual address space is divided into equal pieces, which one (Engl. As pages pages called). The physical address space is also subdivided in this way. The corresponding units in the physical memory are called page frames or page frames . The pages are in the so-called page table (Engl. Pagetable ) manages that contains information about where a (virtual) side of the corresponding (real) page frame in memory is actually to find, and usually additional information as to validity, write access or whether (and possibly where) the page has been outsourced.

If a process addresses a virtual address that is not assigned to a physical memory address, a system call is triggered. This call will be side error (Engl. Page fault ) called. The immediate consequence of the page fault is a synchronous process interruption ( trap ). The operating system then checks whether an associated page frame exists and has just been swapped out - then it selects a rarely used page frame, writes its contents back to the hard drive, loads the required page frame into the free memory area, changes the allocation table and sets the interrupted process continue with the failed command.

If there is no swapped out page frame for the requested virtual address, the operating system can either assign an empty real page frame (possibly previously “scooped up”) and continue the process, or cancel the process with the message “Page fault”. Operating systems that are currently in use abort a process in this case.

functionality

Virtual address space

The physical address space is given by the actually available working memory (main memory). A physical address is a real address of a memory cell in the main memory. In most cases, however , processes no longer use physical, but only virtual (logical) addresses. The principle of virtual memory management enables all active processes to occupy more memory than is actually available in the physical address space. The virtual addresses form the virtual address space. Now they do not go directly to the memory bus, but to the Memory Management Unit (MMU, dt. Memory Management Unit ) that maps the virtual addresses to physical addresses.

From the point of view of the programmer who works with virtual addresses, the address space appears (almost) unlimited. He does not need to take into account whether these addresses really exist in the physical main memory that actually exists. The operating system solves this task by temporarily relocating storage areas to a mass storage device , usually the hard disk . In memory management , data is swapped in and out of the main memory on the hard disk.

Often times, a system has much more virtual memory than physical memory. Specifically, the installed RAM determines the size of the physical memory, while the virtual address space depends on the architecture of the instruction set. With a 32-bit processor you can address a maximum of bytes (i.e. 4 GiB ) of memory, with a 64-bit system you can address bytes (16 EiB ), even if, for example, only 512 MiB RAM are actually installed.

The concept of virtual memory management is usually the basis for multitasking , since each task can have its own address space, which supports the shielding of the tasks from one another; In addition, the size of the task address space is no longer dependent on how much main memory other tasks occupy.

To organize the virtual memory, there is on the one hand the segment-oriented approach (see segmentation ), in which the memory is divided into units of different sizes, and on the other hand the page-oriented approach (paging), in which all memory units are the same length.

Paging

When paging, the virtual address space is divided into equal pieces, which you as pages (English, pages called). The physical address space is also subdivided in this way. The corresponding units in the physical memory are called page frames or page frames . Pages and page frames are usually the same size, for example 4 KiB .

Example : The physical size of the main memory is 64 KiB. A program requires a total of 200 KiB of memory. In order to still be able to execute the larger program on the smaller main memory, the address space can be divided into pages, for example four pages of 64 KiB, whereby the last page is only partially filled. One of the four pages is then located in the physical main memory, the other three are swapped out to the hard disk.

The part of the hard drive that is used for the paged pages is called the paging area or shadow memory. There are many different strategies for storing and removing pages. Basically, an attempt is always made to keep the pages in the main memory that will also be used in the near future in order to perform paging as rarely as possible.

As long as the memory accesses concern pages that are in the main memory, the system works normally. However, if a page is addressed in the swapped memory, the requested page must be swapped in (and possibly another page swapped out). In addition, an address mapping must be activated. The whole process is called paging.

Side tables

Address mapping

In multiprogramming mode , the memory manager provides each process with its own virtual address space, i. H. a set of addresses that a process can use to address memory. The virtual address space of a process is now broken down into units during paging, the so-called pages. These are the so-called page table (page table) managed that contains information about where the respective side frames are actually found in the memory for a page. Mathematically, the table can be understood as a function that takes the virtual page number as an argument and returns the page frame number (page frame number) as the result. This allows a virtual address to be mapped onto a physical address.

A virtual address is divided into two parts: a virtual page number (more significant bits) and an offset (less significant bits). The virtual page number is used as an index for the entry in the page table. The offset represents the relative distance between an address and a base address. It is therefore the distance that specifies the exact byte address within a page. With the help of the virtual page number as an index, the associated entry can be found in the page table. This contains a reference to the page frame. The page frame number is added to the physical address and the physical address is obtained together with the distance (offset). The size of the offset should therefore be chosen so that every address within a page can be addressed.

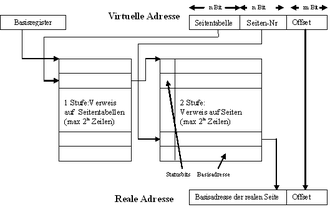

The following graphic illustrates how a physical address (here: real address) is calculated from a virtual address:

The virtual address consists of two binary numbers , the n-bit long page number and the m-bit long offset. The higher-value part of the physical address (here: base address of the real page) is taken from the page table, with the page number as the index. If this is concatenated with the offset , a new number results which is exactly the physical memory address. Such calculations are carried out by the memory management unit.

The addresses on the hard disk where the swapped-out pages are located are not saved in the page table. This only contains information that the hardware needs to convert a virtual address into a physical one. When handling page faults , separate tables in the operating system are used.

Structure of a page table entry

An entry in the page table can be addressed using the page number as an index. The exact structure of such an entry is very dependent on the machine. However, the information it contains is roughly the same from machine to machine. According to Andrew S. Tanenbaum (2009), a typical page table entry looks like this:

The individual entries have the following meanings:

- Page Frame Number: A physical address in memory to which the entry refers (see section above).

- Present / Absent bit: This indicates whether the page is currently in the main memory (bit set to 1) or not (bit set to 0). The latter creates a page fault.

- Protection bits (also known as protection bits): These regulate access to the page. In the simplest case, the field contains only 1 bit, with 0 for read and write access and 1 for write access. A more sophisticated method uses three bits, one each for read permission, one for write permission, and one for permission to execute the page.

- M bit (modified bit, also dirty bit): The M bit is set when a program writes to a page. This is what the operating system uses when a page is swapped out: If the page has been changed, the page frame must be written back to the hard disk; if not, it can simply be overwritten because the copy of the page on the hard disk is still up-to-date.

- R-Bit (Referenced-Bit): The R-Bit is set each time the page is accessed, regardless of whether it is read or written. It helps the operating system decide which page to swap out in the event of a page fault. Pages that have not been used are better candidates than those that are constantly accessed.

- Bit for switching off caching : With this bit the caching can be switched off. This is particularly relevant for pages that are mapped to device registers rather than memory. Computers that have a separate I / O address space and do not use memory-mapped input / output do not need this bit.

Page fault

If a process addresses an address that is not loaded in the main memory, the MMU generates a so-called page fault , which is handled by the operating system . There are two general causes of a page fault:

- The actually associated page frame is only temporarily not available in the main memory, for example because it has just been swapped out or the process is accessing this page for the first time.

- The page is invalid or the process has accessed a virtual address that is not permitted. A “segmentation fault” or “general protection fault” is triggered.

The immediate consequence of a page fault is a synchronous process interruption ( trap ): a so-called page fault interrupt is triggered. In kernel mode, the operating system jumps to a special interrupt routine for processing the page error and tries to load the page into a page frame, taking into account the page replacement strategy and the allocation strategy. The interrupted process then receives the processor again either immediately or later (see process states ). In particular, the following steps are performed by the operating system:

- It is checked whether the request is allowed.

- A free page frame is searched for in the main memory. If no free page frame can be found, space must first be created by relocating the contents of a page frame to the hard disk.

- The required information is searched for on the hard disk and copied into the page frame found.

- The associated entry in the page table is adjusted, i.e. H. the address of the page is entered and the corresponding present / absent bit is set.

To find a free page frame, a freelist can be managed, for example. A bit vector that contains one bit for each page frame is suitable for this . If the bit is set, it is used, otherwise it is free and can be assigned. As soon as a page frame is occupied or released, the freelist must of course be adjusted accordingly.

Translation lookaside buffer

Translation lookaside buffers (TLB for short) are used to accelerate the translation of virtual into physical addresses.

If only the page table were kept in memory, the execution speed would be significantly reduced. For example, for a 1-byte instruction that copies one register to another, only one memory access is required to fetch the instruction from memory without paging. At least one additional memory access to the page table is required with paging. So if two memory accesses per instruction are required, the performance of the CPU would be roughly halved.

Therefore, the last determined values for the address of the physical memory page are cached in the Translation Lookaside Buffer (TLB) , so that new accesses to addresses on this page do not have to be determined again, but can be taken from this list. The TLB can hold a limited number of these references and can thus significantly accelerate the execution of memory accesses. This is implemented using associative order registers that allow parallel access. The TLB is usually part of the MMU. The fields of the TLB are normally taken one-to-one from the page table, with the exception of the virtual page number, which is not required in the page table. Another field also indicates whether the entry is currently being used.

The number of fields in a TLB depends on the computer architecture and is often between 8 and 256. IA-64 architectures , for example, have 128 entries in the TLB. Due to the high locality of many programs, a considerable increase in performance can be achieved with just eight entries.

When a virtual address is sent to the MMU for translation, it first checks whether there is a corresponding entry in the TLB by comparing the virtual page number with all entries simultaneously (in parallel). If a suitable entry is found, the page number from the TLB can be used. Otherwise a TLB error occurs. The MMU fetches the entry accordingly from the page table and also writes it to the TLB.

Other properties

Page size

The size of the page frames has a significant influence on memory utilization. For large pages you get more hits per page view, i.e. H. fewer storages are necessary. In addition, a smaller page table is sufficient. However, fewer pages can then be in the main memory at the same time and there is greater internal fragmentation , which results from the fact that a process is allocated a larger memory area than it actually needs. Specifically, internal fragmentation consists of part of the last page of a process. If, on the other hand, the page frames are too small, you need very long page tables. The optimal page size is a balance between these effects.

A default page size is usually dictated by the hardware. Page size tends to grow with memory, but not linearly. Most of the time, when memory quadruples, the page size doesn't even double.

The Pentium 4 and all other IA-32 processors provide paging with a page size of 4 KiB. From the Pentium Pro , the page size can optionally be set to 4 MiB. The AMD64 architecture supports physical page sizes of 4 KiB, 2 MiB, 4 MiB, and 1 GiB. Some processors also support multiple page sizes, some of which can be used at the same time. For example, a large page size can be provided for the operating system and graphics memory, e.g. B. 8 MiB.

Even if the hardware specifies certain page sizes, the operating system can influence them. For example, if the hardware was designed for 4 KiB pages, the operating system can treat page frames 0 and 1, 2 and 3, 4 and 5, etc. as 8 KiB pages by having two consecutive page frames in the event of a page fault reloads.

The following table shows page sizes under Windows depending on the processor architecture:

| Processor architecture | Small page size | Large page size |

|---|---|---|

| x86 | 4 KiB | 4 MiB |

| AMD64 | 4 KiB | 2 MiB |

| Intel 64 | 8 KiB | 16 MiB |

Side tables for large memory areas

Since the number of entries in a (single-level) page table depends on the size of the virtual address space and the selected page size, a problem arises if the virtual address space is too large and / or the selected page size is too small. On a 64-bit computer with an address space of bytes and 4 Kibibyte ( ) pages, the page table would have entries. For example, with 8 bytes per entry (52 bits for addressing, 7 status bits according to the above example ) the page table would be over 33 million gibibytes (32 pebibytes), in contrast to a size of only 4 mebibytes with the same page size on a 32-bit -Computer with e.g. 4-byte page table entries (20 bits for addressing, 7 status bits according to the same example above). Other solutions are therefore necessary for virtual 64-bit address spaces with paging. Therefore, the concepts of the multilevel page table and the inverted page table were developed.

Multi-level page table

The address conversion with the aid of a k -step page table is done by dividing a virtual address into k * n more significant bits as page table references and m less significant bits as offset. With the k th reference in the virtual address, the base address of the page table of level k + 1 is read from the k th page table . The last stage then contains the actual reference to the real base address. The base address of the real memory page read from the last level of the page table together with the unchanged offset result in the real address.

The advantage of this approach over the single-level page table is that it is not always necessary to keep all of the page tables in memory. In particular, the tables that are not required should not be kept in memory for no use. The page tables themselves can therefore also be swapped out; they are also subject to paging.

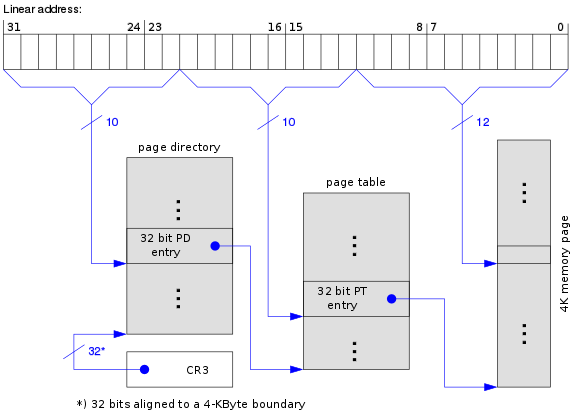

For the IA-32 processors, for example, it was decided to use a two-stage page management system in which a central page directory refers to up to 1024 page tables . The virtual address was divided into three parts for implementation:

- The most significant 10 bits are used to select one of the max. 1024 entries in the page directory that contains a reference to the page table.

- Bits 12-21 form the page number in the corresponding table.

- The least significant 12 bits form the offset.

With x64 Windows, the page table even has four levels. A 48-bit wide virtual address is divided into 4 indices of 9 bits each and an offset of 12 bits.

Inverted page table

With the inverted page table method, an entry is no longer created in the page table for each virtual page, but only one entry for each physical page frame. If the virtual address space is much larger than the physical memory, this saves a lot of space in the table.

Each entry in the table saves the associated pair (process number, page number). However, access to the page table now requires a search process: If a process with the process number n wants to access the virtual page p, the hardware must search the entire table for the entry (n, p). This makes memory access much more complex. This can be remedied by adding a hash table with the virtual addresses as hash values. All pages that have the same hash value are chained.

In addition, memory access is accelerated by a translation lookaside buffer (TLB). If all frequently used pages fit into the TLB, the address conversion is just as fast as with conventional methods. In the event of a TLB error, however, the inverted page table must be searched by the software.

Demand paging and prepaging

With so-called demand paging (storage on demand), storage only takes place on request, i.e. when the data is actually needed. In the purest form of demand paging, a process does not start with a single page in memory. As soon as the CPU tries to load the first instruction, there is a page fault and the operating system stores the corresponding page. More page faults follow until the process has "compiled" the most important pages and can run with relatively few page faults.

With so-called prepaging, on the other hand, pages that have not yet been requested can also be fetched into main memory. The spatial property of locality is used . This means that after access to an address range, there is a high probability that the next access to an address in the immediate vicinity will take place.

Combinations of demand paging and prepaging are also used in practice: for example, when a requested page is fetched, the neighboring pages or other pages can be loaded into the main memory according to a specific algorithm.

Thrashing and working set model

If too many page faults occur in a computer system in too short a time, the system is mainly busy with reloading and swapping out pages. The available computing power is significantly reduced. This condition is called thrashing (literally: thrashing ) or side flutter.

For example, thrashing occurs when there are too many processes in memory. Due to the additional load with processes, on the one hand the number of pages available per process in the main memory decreases and on the other hand the number of page exchange activities increases. Processes no longer run without encountering non-existent pages after a short time. However, only pages that are required again shortly afterwards can be displaced from memory. Above a certain limit, the available computing power is used almost entirely to store and remove memory contents and to search for processes that are ready to run.

The number of pages that a process uses at a certain point in time is known as the working set . If the entire work area of a process is in the main memory, it runs with very few page faults. The working set model therefore tries to reduce the page fault rate by having the operating system memorize the work area of a process when it is swapped out and ensure that it is swapped in again before it is executed again.

To avoid thrashing, the following points should be observed:

- The main memory should be large enough to be able to accommodate all working sets of the processes to be executed simultaneously, even in an extreme load case with a maximum of many simultaneous processes.

- At any given point in time, at least one of the processes that the system has to process should be able to be processed by the processor, i.e. its work area is located in the main memory. In this operating state, the processor does not wait for pages to be reloaded, but works on a process while pages are being reloaded for other processes in parallel.

- Programs should access their data as locally as possible and avoid random access to constantly changing memory pages.

The following sizes are decisive:

- t: The time the processor needs to access a memory location

- T: The time it takes to reload a page

- s: The proportion of pages that are in the main memory in relation to the total number of all pages required for program execution (0 <s ≤ 1)

- p (s): The probability of a page fault, depending on s

To avoid thrashing, p (s) ≤ t / T must be. The minimum proportion w of pages that must be in the working memory (i.e. the work area) is determined by the equation

- p (w) = t / T.

Since memory accesses usually accumulate locally, the probability is very high that the next program step and the next required data element are on the same page as the step just processed and the element just processed. On the other hand, the T / t ratio is typically very large: RAM is around 100,000 times as fast as hard disk space.

Experimental measurements and calculations which were carried out as early as the 1960s give a value of not significantly less than 0.5 for w under these conditions. This means that the swap memory hardly has to be larger than the main memory. In practice, for. For example, under UNIX operating systems, a size of the swap memory of two to three times the main memory is recommended for most applications (depending on whether the respective system manages the swap memory in addition to the main memory or the main memory as a real subset of the swap memory).

Page replacement strategies

When a page fault occurs, it may be necessary to displace a page in memory to make room for the new page. The performance of a paging system depends essentially on which pages in the main memory are displaced when reloading new pages. If you suppress a heavily used page, then you create effort for additional reloading processes. If possible, pages should be outsourced that are rarely used.

The field of page replacement algorithms has been extensively researched both theoretically and experimentally. The problem is that the operating system cannot know when which page will be accessed next.

Not-Recently-Used-Algorithm (NRU)

This paging strategy preferably outsources pages that have not been used (referenced) or modified within a time interval. For this purpose, the pages are marked as unread and unchanged at regular intervals. If a page has to be swapped out, a check is made to determine which pages have not changed these markings.

The operating system uses the two status bits to keep statistics on the use of pages:

- The M bit is set when a program writes to the page.

- The R bit is set for both read and write access.

The operating system now sets the R bits to 0 at regular intervals (e.g. with each timer interrupt). This means that the R bit is only set for the pages that have recently been used. In the event of a page fault, the operating system divides the pages into four categories according to the status of the R and M bits, which are swapped out with the corresponding priority:

- R = 0, M = 0 (not referenced, not modified)

- R = 0, M = 1 (not referenced, modified)

- R = 1, M = 0 (referenced, not modified)

- R = 1, M = 1 (referenced, modified)

The performance of the NRU algorithm is not optimal, but sufficient in many cases.

First-in-first-out algorithm (FIFO)

With this method, the elements that were saved first are also taken from memory first. Applied to page replacement, this means that the oldest page is replaced. The operating system keeps a list of all pages in memory, with the most recent entry at the entrance and the oldest entry at the end. If there is a page fault, the end is removed and a new page is appended to the entrance. This strategy is inefficient because the oldest page can easily be a page with very frequent accesses.

Second chance algorithm and clock algorithm

The second chance algorithm is an improvement on the first-in-first-out algorithm. The aim is to prevent the oldest page from being swapped out even though it is used frequently. Therefore the R bit is queried first. There are now two cases:

- The R bit is not set: the page is both old and unused. It will be replaced immediately.

- The R bit is set: The R bit is deleted and the page is moved to the list input. The search continues.

The second chance algorithm is inefficient, however, because it constantly moves pages in the list. The clock algorithm is an improvement: The pages are kept in a ring-shaped list and a pointer points to the oldest page, comparable to a clock hand. If the R bit is now 0, the page is swapped out (and the new page is swapped in at the same location), otherwise the R bit is set to 0 and the pointer advances one page.

Least Recently Used Algorithm (LRU)

This strategy removes the page that was last referenced the longest. It is therefore assumed that pages that have not been used for a long time will not be used in the future and that pages that have been used in the recent past will also be used frequently in the future. The LRU algorithm is a good approximation of the optimal algorithm, but it is rather difficult to implement. A lot of effort has to be made in order to have quick access to the longest unused page.

One possibility is to keep a list sorted by time of use. With each access, the currently used page is attached to the list input. However, finding, moving and deleting a page in the list are very laborious operations.

Another option is to implement LRU with special hardware. The LRU hardware leads, for example for a machine with n page frames one - matrix . Initially, all entries are set to 0. If a page frame k is accessed, the hardware sets all bits of row k to 1 and then all bits of column k to 0. At any point in time, the longest unused page is the one with the lowest binary value in the corresponding row of the matrix.

Due to the high complexity, so-called pseudo-LRU algorithms that use the R and M bits are preferred in practice. This also includes the second chance algorithm and the clock algorithm.

Working set algorithm

The working set algorithm is based on the idea that if a page fault occurs, a page is swapped out that no longer belongs to the work area of a process. In addition to the R bit, the page table also has an entry that contains the (approximate) time of the last access. The algorithm works as follows:

- The hardware sets the R bit for read and write access.

- The R bits are cleared at regular intervals (periodic timer interrupt).

- In the event of a page fault, the page table is searched for a page that should be swapped out:

- If R = 1 (R bit set) for an entry, the page was used in the current timer interval and obviously belongs to the work area. It is then out of the question for outsourcing. The new virtual time is now entered in the field for the time of the last access.

- If R = 0 (R bit deleted) for an entry, the page is a candidate for swapping. Their age is calculated (running time of the process minus time of last access) and compared with a value t. If the age is greater than t, the page no longer belongs to the work area and can be outsourced.

- The rest of the table is still run through to bring the access times up to date.

The working set algorithm has the disadvantage that with every page fault the entire page table has to be run through until a suitable candidate is found.

WSClock algorithm

The WSClock algorithm is on the one hand an improvement of the clock algorithm, but on the other hand it also uses information about the work area. As with the clock algorithm, the page entries are kept in a ring-shaped data structure. Each entry contains an R-bit, an M-bit and a field for the time of the last access (comparable to the working set algorithm). In the event of a page fault, as with the clock algorithm, the page to which the pointer points is examined first. The following cases are decisive:

- The R bit is set: the page is therefore not a suitable candidate for swapping. The R bit is set to 0 and the pointer advances.

- The R bit is not set and the age is greater than t:

- If M = 0, the page has not been modified and it can simply be deleted and replaced because the copy on disk is still up-to-date.

- If M = 1, the copy on the hard disk is no longer current. The page is reserved, but not yet swapped out immediately because there may still be a “clean” page further down the list that can simply be deleted.

Once a pointer has traversed the entire list, there are the following cases:

- At least one page has been reserved: The first reserved page that the pointer hits is updated on the hard disk and swapped out.

- No page has been reserved: all pages belong to the work area. Without additional information, the simplest strategy is to outsource any page and replace it with a new one.

Because of its good performance and ease of implementation, the WSClock algorithm is widely used in real systems.

Examples

Simple fictional example

The address length in this example is 16 bits, with the upper 8 bits being the page number and the lower 8 bits being the offset . (The address of a byte is thus = page frame * 256 + offset.)

Side table entry Valid Side frame 0 No - 1 Yes 0x17 2 Yes 0x20 3 Yes 0x08 4th No - 5 Yes 0x10

Addresses to be translated:

| virtual address | physical address | comment |

|---|---|---|

| 0x083A | invalid | because the page table only contains entries up to page 5. |

| 0x01FF | 0x17FF | Page 0x01 is in page frame 0x17, so the upper 8 bits of the virtual address are replaced by 0x17 |

| 0x0505 | 0x1005 | Page 0x05 is in page frame 0x10, so the upper 8 bits of the virtual address are replaced by 0x10 |

| 0x043A | invalid | because page 0x04 was marked as invalid. |

Real example: IA32 architecture

32-bit paging

Most architectures use multilevel paging to keep the page tables small. The IA32 architecture originally used two-stage paging. The 32 bits of the linear address are divided as follows:

| Bits | Assignment |

|---|---|

| 31… 22 | Index in the page directory (PD for short) |

| 21… 12 | Index in the page table (short: PT) |

| 11… 0 | Offset in the memory page |

The page directory and each page table consist of 1024 entries of 4 bytes each and thus each occupy exactly one memory page. Each entry has the following structure:

32-bit entry in the Page directory entry Bits: 31… 12 11… 9 8th 7th 6th 5 4th 3 2 1 0 Content: Bit 31… 12 of the base address ign. G PS D. A. PCD PHE U / S R / W P

- Meanings

- P - present . Page is available in RAM if bit is set to 1

- R / W - read / write . Write access is only permitted if the bit is set to 1

- U / S - user / supervisor . Ring 3 code is only allowed to access the page if the bit is set to 1

- PWT and PCD - used for cache control

- A - accessed . Is set automatically by the CPU as soon as the page is accessed

- D - dirty . Is set automatically by the CPU as soon as the page has been written

- PS - page size . If this bit is set, this directory entry refers directly to a 4 MiB page instead of a page table (see #Page Size Extension )

- G - global . Identifies a global memory page

Page size extension

To optimize large, contiguous memory areas (e.g. frame buffers for graphics cards, etc.) and to reduce the administrative effort in the memory management of the operating system, CPUs from the Pentium (officially documented from Pentium Pro) also support 4 MiB pages. This feature is called Page-Size Extension (PSE). A special bit in the associated page directory entry marks that the second level in paging should be bypassed for this address range. Bits 21 to 0 of the logical address thus directly indicate the offset in the 4 MiB memory page.

To activate the page size extension, the operating system must set bit 4 in control register CR4.

Since the 4 MiB pages can only begin on "even" addresses, bits 12 to 20 in the page directory entry must always be 0. This was done away with with the PSE-36 extension by giving these bits a new meaning:

| Bits: | 31… 22 | 21st | 20… 17 | 16… 13 | 12 | 11… 9 | 8th | 7th | 6th | 5 | 4th | 3 | 2 | 1 | 0 | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PSE (from Pentium) : | Bit 31… 22 of the base address | 0 | PAT | ign. | G | PS | D. | A. | PCD | PHE | U / S | R / W | P | |||||||||||||||||||

| PSE-36 (from Pentium II Xeon) | Bit 31… 22 of the base address | 0 | Bit 35 ... 32 | PAT | ign. | G | PS | D. | A. | PCD | PHE | U / S | R / W | P | ||||||||||||||||||

| PSE-36 (from AMD K8) | Bit 31… 22 of the base address | 0 | Bit 39 ... 32 | PAT | ign. | G | PS | D. | A. | PCD | PHE | U / S | R / W | P | ||||||||||||||||||

The PSE-36 extension made it possible to access more than 4 GiB physical main memory without major changes to the operating system kernel. However, main memory beyond the 4 GiB limit can only be addressed via 4 MiB pages.

PSE-36 was only used by Windows NT 4.0, new Windows versions and Linux rely exclusively on PAE .

Physical address extension

From the Pentium Pro it is possible to address up to 2 36 bytes (= 64 GiB) of physical memory. This technique is called physical address extension . To this end, paging is being expanded to include a third level. The top two bits of the linear address now select one of 4 entries in the so-called page directory pointer table (PDPT for short).

The entries in the tables have been expanded to 8 bytes, but each of the tables only contains 512 entries, so that the total size of the table is again 4 KiB:

64-bit entry in page table entry Bits: 63 62… 52 51… 32 Content: NX reserved Bit 51… 32 of the base address Bits: 31… 12 11… 9 8th 7th 6th 5 4th 3 2 1 0 Content: Bit 31… 12 of the base address ign. G PAT D. A. PCD PHE U / S R / W P

With PAE it is also possible to deactivate the last address translation stage of paging. Bits 20 to 0 of the logical address then directly form the offset of a 2 MiB memory page.

| Bits | Assignment | |

|---|---|---|

| 4 KiB Page | 2 MiB Page | |

| 31… 30 | Index in the PDPT | |

| 29… 21 | Index in the page directory (PD for short) | |

| 20… 12 | Index in the page table (short: PT) | Offset in the memory page |

| 11… 0 | Offset in the memory page | |

64-bit mode

With the introduction of 64-bit mode in the AMD Athlon 64, this principle was applied again. The paging has been extended by a fourth level (Page Map Level 4, PML4 for short) and the PDPT has been enlarged from 4 to 512 entries, so that it is the same size as the following page tables.

In addition to the 4 KiB and 2 MiB pages already available in 32-bit mode, 1 GiB pages are also possible in 64-bit mode. The lower 22 bits of an entry in the Page Directory Pointer Table refer directly to the start address of a 1 GiB page:

| Bits | Assignment | ||

|---|---|---|---|

| 4 KiB Page | 2 MiB Page | 1 GiB page | |

| 63 ... 48 | Copy of bit 47, as a sign extension | ||

| 47 ... 39 | Index in the PML4 table ( page mapping layer 4 ) | ||

| 38… 30 | Index in the PDPT | ||

| 29… 21 | Index in the page directory (PD for short) | 30-bit offset | |

| 20… 12 | Page table (. English page table , in short, PT) | 21-bit offset | |

| 11… 0 | 12-bit offset in the memory page | ||

5-level paging

The concept was further expanded with a fifth level, which expands the address area by 9 bits and thus to 128 PiB. The new table is called the PML5 table, is structured in the same way as the PML4 and also contains 512 entries. In order to be able to use this mode, the software must first determine with a new flag whether 5-level paging is supported or whether only 4-level paging is possible.

| Bits | Assignment | ||

|---|---|---|---|

| 4 KiB Page | 2 MiB Page | 1 GiB page | |

| 63… 57 | Copy of bit 56, as a sign extension | ||

| 56 ... 48 | Index in the PML5 table ( page mapping layer 5 ) | ||

| 47 ... 39 | Index in the PML4 table ( page mapping layer 4 ) | ||

| 38… 30 | Index in the PDPT | ||

| 29… 21 | Index in the page directory (PD for short) | 30-bit offset | |

| 20… 12 | Page table (. English page table , in short, PT) | 21-bit offset | |

| 11… 0 | 12-bit offset in the memory page | ||

literature

- Albert Achilles: Operating Systems. A compact introduction to Linux. Springer: Berlin, Heidelberg, 2006.

- Uwe Baumgarten, Hans-Jürgen Siegert: Operating systems. An introduction. 6th, revised, updated and expanded edition, Oldenbourg Verlag: Munich, Vienna, 2007.

- Mario Dal Cin: Computer Architecture. Basics of the structure and organization of computer hardware. Teubner: Stuttgart, 1996.

- Roland Hellmann: Computer architecture. Introduction to the structure of modern computers. 2nd edition, de Gruyter: Berlin, Boston, 2016

- Peter Mandl: Basic course operating systems. Architectures, resource management, synchronization, process communication, virtualization. 4th edition, Springer Vieweg: Wiesbaden, 2014.

- Andrew S. Tanenbaum : Modern Operating Systems. 3rd, updated edition. Pearson studies, Munich a. a., 2009, ISBN 978-3-8273-7342-7 .

- Klaus Wüst: Microprocessor technology. Basics, architectures, circuit technology and operation of microprocessors and microcontrollers. 4th edition, Vieweg + Teubner: Wiesbaden, 2011.

Individual evidence

-

↑

Use of the term "tile management":

- Karlsruhe Institute of Technology, Examination ( page no longer available , search in web archives ) Info: The link was automatically marked as defective. Please check the link according to the instructions and then remove this notice. , accessed September 1, 2014

- Ulm University, network driver project , accessed on September 1, 2014

- RabbitMQ 2.0.0 supports the current AMQP standard - article at Heise Developer , dated August 26, 2010

- ↑ a b c Tanenbaum: Modern operating systems. 2009, p. 243.

- ^ Wüst: microprocessor technology. 2011, p. 173.

- ^ Wüst: microprocessor technology. 2011, p. 174; Tanenbaum: Modern Operating Systems. 2009, p. 243.

- ^ Wüst: microprocessor technology. 2011, p. 173.

- ^ Mandl: Basic course operating systems. 2014, p. 223.

- ^ Wüst: microprocessor technology. 2011, p. 173.

- ↑ Note: Normally, each process has its own address space, which is independent of the address spaces of the other processes, with the exception of special circumstances when processes want to share their address spaces.

- ^ Mandl: Basic course operating systems. 2014, p. 225; Tanenbaum: Modern Operating Systems. 2009, pp. 243-244.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 246.

- ^ Achilles: Operating Systems. 2006, p. 57; Tanenbaum: Modern Operating Systems. 2009, p. 246; Mandl: Basic course operating systems. 2014, p. 225.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 247.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 246.

- ↑ Tanenbaum: Modern Operating Systems. 2009, pp. 246-247.

- ↑ Michael Wen: Finite Programming in C ++. iUniverse: New York u. a., 2005, p. 69.

- ^ Achilles: Operating Systems. 2006, p. 59, also Mandl: Basic course operating systems. 2014, p. 227.

- ^ Achilles: Operating Systems. 2006, p. 59.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 249.

- ^ Mandl: Basic course operating systems. 2014, p. 226.

- ^ Mario Dal Cin: Computer architecture. Basics of the structure and organization of computer hardware. Teubner: Stuttgart, 1996, p. 136; Wolfram Schiffmann: Technische Informatik 2. Fundamentals of computer technology. 5th edition, Springer: Berlin, Heidelberg, 2005, p. 310.

- ↑ To calculate an optimal utilization of the working memory by minimizing the memory cross-section, see Mario Dal Cin: Computer architecture. 1996, p. 137.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 275.

- ^ Mandl: Basic course operating systems. 2014, p. 195.

- ↑ AMD: AMD64 Technology , chap. 5.1 Page Translation Overview , p. 120: " The following physical-page sizes are supported: 4 Kbytes, 2 Mbytes, 4 Mbytes, and 1 Gbytes. "

- ^ Roland Hellmann: Computer architecture. Introduction to the structure of modern computers. 2nd edition, de Gruyter: Berlin, Boston, 2016.

- ↑ Tanenbaum: Modern Operating Systems. P. 273.

- ^ Mandl: Basic course operating systems. 2014, p. 252, with reference to Tanenbaum: Modern Operating Systems. 2009.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 253.

- ↑ Tanenbaum: Modern Operating Systems. 2009, pp. 251-253.

- ^ Wüst: microprocessor technology. 2011, pp. 195-196.

- ^ Mandl: Basic course operating systems. 2014, p. 251.

- ↑ Tanenbaum: Modern Operating Systems. Pp. 254-255.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 263.

- ^ Mandl: Basic course operating systems. 2014, p. 223.

- ^ Peter J. Denning: The Working Set Model for Program Behavior. In: Commun. of the ACM 11, 1968, pp. 323-333. ( Online )

- ^ Achilles: Operating Systems. 2006, p. 60.

- ^ Peter J. Denning: Working Sets Pasts and Present. In: IEEE Trans. On Software Engineering. Vol. SE-6, No.1, January 1980, pp. 64-84. ( Online )

- ^ Peter J. Denning: Virtual Memory. In: Computing Surveys Vol. 2, Sept. 1970, S: 153-189; Tanenbaum: Modern Operating Systems. 2009, pp. 263-264.

- ^ Per Brinch Hansen: Operating System Principles. Prentice Hall: Englewood Cliifs (NJ), 1973, pp. 185-191.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 255.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 257.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 258.

- ↑ Tanenbaum: Modern Operating Systems. 2009, pp. 258-259.

- ^ Mandl: Basic course operating systems. 2014, pp. 241–242.

- ↑ Tanenbaum: Modern Operating Systems. 2009, p. 260.

- ^ Mandl: Basic course operating systems. 2014, p. 242.

- ↑ Tanenbaum: Modern Operating Systems. 2009, pp. 265-266.

- ^ Richard W. Carr, John L. Hennessy: WSClock - A Simple and Effective Algorithm for Virtual Memory Management. In: Proc. Eighth Symp. On Operating Systems Principles ACM, 1981, pp. 87-95. ( Online )

- ↑ Tanenbaum: Modern Operating Systems. 2009, pp. 267-268.

- ↑ support.amd.com (PDF)

- ↑ 5-level paging and 5-level EPT. Intel, 2017, accessed February 12, 2020 .