Random access memory

The main memory or working memory (also core, main store, primary memory, or RAM for random access memory) of a computer is the store that holds the programs or program parts currently being executed, together with the data they need. The main memory is a component of the computer's central unit. Since the processor accesses the main memory directly, its performance and size have a significant impact on the performance of the entire computer system.

Main memory is characterized by its access time or access speed and, associated with this, its data transfer rate, as well as by its storage capacity. The access time is the time that elapses until a requested datum can be read. The data transfer rate indicates the amount of data that can be read per unit of time. Separate figures may be given for writing and reading. There are two notations for stating the size of the main memory, which differ in the number base used. The size is given either in base 10 (with a decimal prefix; 1 kByte or kB = 10^3 bytes = 1000 bytes, SI notation) or in base 2 (with a binary prefix; 1 KiB = 2^10 bytes = 1024 bytes, IEC notation). Because main memories are structured and addressed on a binary basis (byte-addressed in 8-bit units, word-addressed in 16-bit units, double-word-addressed in 32-bit units, and so on), the latter variant is the more common form and also avoids fractional values.
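The difference between the two notations can be illustrated with a minimal Python sketch. The function name `to_bytes` and the unit tables are illustrative assumptions, not part of any standard library.

```python
# Decimal (SI) and binary (IEC) prefixes for byte quantities.
# The table names and to_bytes() are hypothetical helpers for illustration.
SI_FACTORS = {"kB": 10**3, "MB": 10**6, "GB": 10**9}
IEC_FACTORS = {"KiB": 2**10, "MiB": 2**20, "GiB": 2**30}


def to_bytes(value, unit):
    """Convert a value given with an SI or IEC unit into a number of bytes."""
    factors = {**SI_FACTORS, **IEC_FACTORS}
    return value * factors[unit]


if __name__ == "__main__":
    # A module labelled "8 GiB" holds more bytes than 8 GB in SI notation.
    print(to_bytes(8, "GiB"), "bytes in 8 GiB")
    print(to_bytes(8, "GB"), "bytes in 8 GB")
```

The gap between the two notations grows with the prefix: about 2.4 percent at the kilo level and about 7.4 percent at the giga level.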

If main memory is addressed via the address bus of the processor or is integrated directly in the processor, it is referred to as physical memory. More modern processors and operating systems can use virtual memory management to provide more main memory than is physically available by moving parts of the address space to other storage media (e.g. a swap file, pagefile or swap space). This additional memory is called virtual memory. To accelerate memory access, whether physical or virtual, additional buffer memories are used today.

Basics

The main memory of the computer is an area structured by addresses (in table form) which can hold binary words of a fixed size. Due to the binary addressing, the main memory practically always has a 'binary' size (based on powers of 2), since otherwise areas would remain unused.

The main memory of modern computers is volatile: all data is lost once the power supply is switched off. The main reason for this lies in the technology of DRAMs. Available alternatives such as MRAM, however, are still too slow for use as main memory. That is why computers also contain permanent storage in the form of hard disks or SSDs, on which the operating system, application programs and files are retained when the system is switched off.

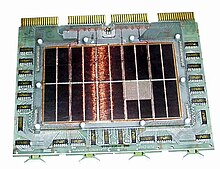

The most common design for use in computers is the memory module. A distinction must be made between different types of RAM. While memory in the form of ZIP, SIPP or DIP modules was still common in the 1980s, SIMMs with FPM or EDO RAM were predominantly used in the 1990s. Today, computers primarily use DIMMs with, for example, SDRAM, DDR SDRAM, DDR2 SDRAM, DDR3 SDRAM or DDR4 SDRAM.

History

The first computers had no main memory, just a few registers that were built using the same technology as the arithmetic unit, i.e. tubes or relays. Programs were hard-wired ("plugged in") or stored on other media, such as punched tape or punched cards, and were executed immediately after being read.

"In computer systems of the 2nd generation, drum storage was used as the main storage" (Dworatschek). In addition, more exotic approaches were tried in the early days, for example delay-line memories in mercury baths or in glass-rod spirals (fed with ultrasonic waves). Magnetic core memories, which stored the information in small ferrite cores, were introduced later. The cores were strung in a matrix, with one address line and one word line crossing in the middle of each ferrite core. The memory was non-volatile, but the information was lost on reading and was then immediately written back by the control logic. As long as the memory was neither written to nor read, no current flowed. Core memory is several orders of magnitude bulkier and more expensive to manufacture than modern semiconductor memories.

In the mid-1960s, typical mainframes were equipped with main memories of 32 to 64 kilobytes (for example the IBM 360-20 or 360-30), and at the end of the 1970s (for example the Telefunken TR 440) with 192,000 words of 52 bits each (48 bits net), i.e. with over 1 megabyte.

The core memory as a whole offered enough space to hold, in addition to the operating system, the currently executing program loaded from an external medium and all of its data. In this model, programs and data are located in the same memory from the processor's point of view; this introduced the Von Neumann architecture, which is the most widely used today.

With the introduction of microelectronics, main memory was increasingly implemented with integrated circuits (chips). Each bit was stored in a bistable circuit (flip-flop), which requires at least two transistors, and up to six with control logic, and takes up a relatively large amount of chip area. Such memories draw current continuously. Typical sizes were integrated circuits (ICs) with 1 Kibit, with eight ICs addressed together. The access times were a few hundred nanoseconds, which was faster than the processors, which were clocked at around one megahertz. On the one hand, this made it possible to introduce processors with very few registers, such as the MOS Technology 6502 or the TMS9900 from Texas Instruments, which performed most of their calculations in main memory. On the other hand, it made it possible to build home computers whose video logic used part of the main memory as screen memory and could access it in parallel with the processor.

At the end of the 1970s, dynamic RAMs were developed that store the information in a capacitor and require only one additional field-effect transistor per memory bit. They can be built very small and require very little power. However, the capacitor slowly loses its charge, so the information has to be rewritten again and again at intervals of a few milliseconds. This is done by external logic that periodically reads out the memory and writes it back (refresh). Due to the higher level of integration in the 1980s, this refresh logic could be built inexpensively and integrated into the processor. Typical sizes in the mid-1980s were 64 kbit per IC, with eight chips being addressed together.

The access times of dynamic RAMs were also a few hundred nanoseconds in inexpensive designs and have changed little since then, but capacities have grown to a few Gbit per chip. Processors are no longer clocked in the megahertz range but in the gigahertz range. Therefore, to reduce the average access time, caches are used, and both the clock rate and the width of the connection between main memory and processor are increased (see Front Side Bus).

In June 2012, it was announced that a new, smaller and more powerful memory design, the Hybrid Memory Cube (HMC for short), was to be developed, in which a stack of several dies is to be used. The Hybrid Memory Cube Consortium was founded especially for this purpose, and ARM, Hewlett-Packard and Hynix, among others, have joined.

Physical and virtual memory

In order to expand the physical memory, modern operating systems can allocate additional virtual memory on mass storage devices. This memory is also called swap memory.

In order to implement this expansion transparently, the operating system uses a virtual memory space in which both the physical and the virtual memory are available. Parts of this virtual memory space, namely one or more memory pages, are mapped either into the physically available RAM or into the swap space. The usage rate of the individual pages determines which memory pages are swapped out and exist only on mass storage, and which are kept in fast RAM. These functions are supported by today's CPUs, and the total amount of memory supported has increased significantly in the course of development.
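How page usage can decide what stays in RAM can be sketched with a toy model. The sketch below assumes a least-recently-used (LRU) policy, which is only one possible interpretation of "usage rate"; real operating systems use more elaborate approximations. The class `PagedMemory` and its attributes are hypothetical names for illustration.

```python
from collections import OrderedDict


class PagedMemory:
    """Toy model: a few RAM frames backed by an unlimited swap area.

    Assumption: the least recently used page is swapped out first (LRU).
    """

    def __init__(self, ram_frames):
        self.ram_frames = ram_frames
        self.ram = OrderedDict()  # page number -> contents, oldest use first
        self.swap = {}            # page number -> contents on mass storage
        self.page_faults = 0

    def access(self, page):
        if page in self.ram:
            # Page is resident: mark it as most recently used.
            self.ram.move_to_end(page)
            return self.ram[page]
        # Page fault: bring the page in from swap (or create it on first use).
        self.page_faults += 1
        contents = self.swap.pop(page, f"page-{page}")
        if len(self.ram) >= self.ram_frames:
            # RAM is full: swap out the least recently used page.
            victim, victim_contents = self.ram.popitem(last=False)
            self.swap[victim] = victim_contents
        self.ram[page] = contents
        return contents


if __name__ == "__main__":
    mem = PagedMemory(ram_frames=2)
    for page in (1, 2, 1, 3):
        mem.access(page)
    print("resident pages:", list(mem.ram), "swapped out:", list(mem.swap))
```

In this access sequence, page 2 is swapped out because page 1 was touched more recently when page 3 needed a frame.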

Swap memory is a very inexpensive extension of the physical main memory, but one with extremely poor performance. A mismatch between the two types of memory shows itself in frequent "swapping", i.e. the movement of data between mass storage and physical main memory. Compared to main memory, a hard disk is very slow, taking several milliseconds to provide the data. The access time of main memory, by contrast, is only a few nanoseconds, roughly a millionth of that of the hard disk.

Cache

Access to the main memory by the main processor is mostly optimized via one or more buffer memories or cache RAMs ("cache" for short). The computer holds the most frequently addressed memory areas in the cache, where they stand in for the original main memory areas. The cache is very fast compared to other memories because it is connected as directly as possible to the processor (in modern processors it is located directly on the die). However, it is usually only a few megabytes in size.

With low memory requirements, programs or parts of them can run almost exclusively in the cache without having to address the main memory.

The cache is designed as associative memory, so it can decide whether the data at an address is already stored in the cache or still needs to be fetched from the main memory. In the latter case, another part of the cache is given up. The cache is always filled with several consecutive words at a time, for example always with at least 256 bits (so-called burst mode), since it is very likely that data located shortly before or after the currently required data will soon be read as well.
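The lookup and line-fill behavior described here can be sketched as a small simulation. It assumes a fully associative cache with 256-bit (32-byte) lines and LRU eviction; the eviction policy and the names `AssociativeCache` and `LINE_BYTES` are illustrative assumptions, since the text does not specify them.

```python
from collections import OrderedDict

LINE_BYTES = 32  # 256 bits per cache line, as in the example above


class AssociativeCache:
    """Toy fully associative cache that tracks hits and misses only."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = OrderedDict()  # line tag -> True, oldest use first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        """Return True on a cache hit, False on a miss (line gets filled)."""
        tag = address // LINE_BYTES  # all bytes of one line share a tag
        if tag in self.lines:
            self.hits += 1
            self.lines.move_to_end(tag)
            return True
        self.misses += 1
        if len(self.lines) >= self.num_lines:
            # Give up the least recently used line (assumed policy).
            self.lines.popitem(last=False)
        self.lines[tag] = True  # the whole line is fetched in one burst
        return False


if __name__ == "__main__":
    cache = AssociativeCache(num_lines=4)
    for address in range(0, 64, 4):  # sequential 4-byte reads
        cache.read(address)
    print("hits:", cache.hits, "misses:", cache.misses)
```

Sequential reads benefit from the line fill: only the first access to each 32-byte line misses, and the neighboring words are then served from the cache.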

Performance of memory modules

The performance of memory modules (clock rate and switching-time behavior, i.e. timing) is measured primarily by the absolute latency. The theoretical bandwidth is only relevant for burst transfers.

A common misconception is that numerically higher timings always result in poorer performance. However, this only holds at the same clock rate, since the absolute latency is the product of the clock period (determined by the effective clock rate) and the timing in clock cycles.

| Designation | CAS latency (CL) | tRCD | tRP | tRAS |
|---|---|---|---|---|
| DDR400 CL2-2-2-5 | 10 ns | 10 ns | 10 ns | 25 ns |
| DDR500 CL3-3-2-8 | 12 ns | 12 ns | 8 ns | 32 ns |
| DDR2-667 CL5-5-5-15 | 15 ns | 15 ns | 15 ns | 45 ns |
| DDR2-800 CL4-4-4-12 | 10 ns | 10 ns | 10 ns | 30 ns |
| DDR2-800 CL5-5-5-15 | 12.5 ns | 12.5 ns | 12.5 ns | 37.5 ns |
| DDR2-1066 CL4-4-4-12 | 7.5 ns | 7.5 ns | 7.5 ns | 22.5 ns |
| DDR2-1066 CL5-5-5-15 | 9.38 ns | 9.38 ns | 9.38 ns | 28.13 ns |
| DDR3-1333 CL7-7-7-24 | 10.5 ns | 10.5 ns | 10.5 ns | 36 ns |
| DDR3-1333 CL8-8-8 | 12 ns | 12 ns | 12 ns | |
| DDR3-1600 CL7-7-7 | 8.75 ns | 8.75 ns | 8.75 ns | |
| DDR3-1600 CL9-9-9 | 11.25 ns | 11.25 ns | 11.25 ns | |

Calculation

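Formula (reconstructed from the values in the table above):

$$t\,[\text{ns}] = \frac{2000 \cdot \text{timing in clock cycles}}{\text{effective data rate}\,[\text{MT/s}]}$$

Example:

- DDR3-1333 CL8-8-8: 8 × 2000 / 1333 ≈ 12 ns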

As a consequence, DDR2, DDR3 and DDR4 SDRAM, although they have numerically higher timings than DDR SDRAM, can be faster and provide a higher bandwidth.
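The values in the table can be reproduced with a short sketch. It assumes the relation latency = cycles × 2000 / effective data rate (in MT/s), which is inferred from the table values; the factor 2000 reflects that the real clock of DDR memory is half the effective data rate. The function names are illustrative, and the nominal data rates (667, 1333) are used, so results can differ from the table in the second decimal place.

```python
def latency_ns(cycles, data_rate_mts):
    """Absolute latency in nanoseconds for a timing given in clock cycles.

    Assumption: real clock in MHz = effective data rate / 2 (double data rate),
    so one clock cycle lasts 2000 / data_rate_mts nanoseconds.
    """
    return cycles * 2000 / data_rate_mts


def timings_ns(data_rate_mts, timings):
    """Convert a timing tuple such as (4, 4, 4, 12) to nanoseconds."""
    return [round(latency_ns(c, data_rate_mts), 2) for c in timings]


if __name__ == "__main__":
    # Numerically higher timings at a higher clock give the same latency.
    print("DDR400   CL2-2-2-5 :", timings_ns(400, (2, 2, 2, 5)))
    print("DDR2-800 CL4-4-4-12:", timings_ns(800, (4, 4, 4, 12)))
```

DDR400 with CL2 and DDR2-800 with CL4 both come out at 10 ns CAS latency, which is why timings can only be compared directly at the same clock rate.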

Some memory manufacturers do not adhere to the official JEDEC specifications and offer modules with higher clock rates or better timings. While DDR3-1600 CL9-9-9 is covered by an official specification, DDR2-1066 CL4-4-4-12 modules do not comply with the standard. These faster memories are often marketed as memory modules for overclockers.

- CAS (column address strobe) latency (CL): indicates how many clock cycles the memory needs to provide data. Lower values mean higher memory performance.

- RAS to CAS delay (tRCD): a specific memory cell is located via the "column" and "row" strobe signals, after which its content can be processed (read or written). There is a defined delay between the "row" request and the "column" request. Lower values mean higher memory performance.

- RAS (row address strobe) precharge delay (tRP): describes the time the memory needs to reach the required voltage state. The RAS signal can only be sent after the required charge level has been reached. Lower values mean higher memory performance.

- Row active time (tRAS): the number of clock cycles after which a new access is permitted; it is calculated as CAS + tRP + a safety margin.

- Command rate: the latency required when selecting the individual memory chips, more precisely the address and command decode latency. It indicates how long a memory bank addressing signal is present before the rows and columns of the memory matrix are activated. Typical values for DDR and DDR2 memory are 1T to 2T; 2T is used most often.

Practice

In practice, Intel's FSB1333 processors could receive a maximum of about 10.6 GB/s of data over their front side bus. This is already saturated by DDR2-667 (about 10.6 GB/s) in the usual dual-channel operation with two memory modules.
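The two figures can be checked with a rough calculation. The sketch assumes a 64-bit wide bus per channel and reports decimal gigabytes per second (10^9 bytes/s); the function name and parameters are illustrative.

```python
def peak_bandwidth_gb_s(data_rate_mts, bus_width_bits=64, channels=1):
    """Theoretical peak bandwidth in GB/s (decimal, 10**9 bytes per second).

    Assumption: every transfer moves bus_width_bits on each channel.
    """
    bytes_per_transfer = bus_width_bits // 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


if __name__ == "__main__":
    print("FSB1333            :", peak_bandwidth_gb_s(1333))
    print("DDR2-667, 2 channel:", peak_bandwidth_gb_s(667, channels=2))
```

Both values come out at roughly 10.7 GB/s, so faster memory behind such a front side bus cannot deliver additional throughput to the processor.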

Current processors are no longer subject to this restriction, as the memory controller is no longer located in the Northbridge, as it was with Socket 775 and its predecessors, but directly on the CPU.

In addition to dual channel, it also matters whether the memory supports dual rank. Dual rank means that the memory module is populated on both sides with twice as many memory chips, each only half as large. CPUs with an integrated GPU, such as the AMD Kaveri architecture, can particularly benefit from this form of memory interleaving.

Connection of the main memory

The classic connection of physical memory takes place via one memory bus (with the Von Neumann architecture) or several (with the Harvard architecture or Super Harvard architecture, which is no longer used in the PC area today). Memory buses transmit control information, address information and the actual user data. One of the many possibilities is to use separate lines for these different kinds of information and to use the data bus both for reading and for writing user data.

The data bus then takes over the actual data transfer. Current PC processors use between two and four 64-bit memory buses; since around the year 2000 these have no longer been generic memory buses but directly speak the protocols of the memory chips used. The address bus is used to select the requested memory cells; the maximum number of addressable memory words depends on its width (in bits). In today's systems, 64 bits are usually stored at each address (see 64-bit architecture); previously 32 bits (Intel 80386), 16 bits (Intel 8086) and 8 bits (Intel 8080) were also used. Many, but not all, processors support finer-grained access, mostly at the byte level, both at the software level through the way they interpret addresses (endianness, "bit spacing" of addresses, misaligned access) and through the hardware interface (byte-enable signals, number of the least significant address line).

Example: Intel 80486

- Address bus: A31 to A2

- Data bus: D31 to D0

- Byte enable: BE3 to BE0

- Endianness: Little Endian

- Support of misaligned access: yes

- Addressable memory: 4 Gi × 8 bits as 1 Gi × 32 bits

One of the main differences between the two processor generations currently used in PCs, "32-bit" and "64-bit", is the maximum addressable main memory already mentioned, which can, however, be extended somewhat beyond the usual limit with the help of Physical Address Extension. However, the bit count of a processor generation generally refers to the width of the data bus, which does not necessarily correspond to the width of the address bus. The width of the address bus alone determines the size of the address space. For this reason, the "16-bit" processor 8086, for example, could address not just 64 KiB (as a 16-bit address bus would allow) but 1 MiB (actual 20-bit address bus).
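The relation between address bus width and address space can be made concrete with a short sketch. The helper names are illustrative; the 80486 case assumes that the 30 address lines A31 to A2 each select one 32-bit word, with the byte-enable signals choosing bytes within it.

```python
def addressable_bytes(address_lines, bytes_per_address=1):
    """Size of the address space: 2**address_lines addressable units."""
    return (2 ** address_lines) * bytes_per_address


def format_binary(n_bytes):
    """Format a byte count with IEC binary prefixes."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if n_bytes < 1024 or unit == "TiB":
            return f"{n_bytes:g} {unit}"
        n_bytes /= 1024


if __name__ == "__main__":
    print("16 address lines       :", format_binary(addressable_bytes(16)))
    print("8086, 20 address lines :", format_binary(addressable_bytes(20)))
    print("80486, 30 lines x 4 B  :", format_binary(addressable_bytes(30, 4)))
```

Each additional address line doubles the address space, which is why the four extra lines of the 8086 raise the limit from 64 KiB to 1 MiB.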

The bus from the cache to the main memory in modern computers is designed to be fast, i.e. with a high clock rate and data transmission on both the rising and the falling clock edge (DDR: double data rate). It is synchronous and has a large word length, for example 64 bits per address. If several memory slots are used on the main board of a PC, consecutive addresses are stored in different slots. This enables overlapping (interleaved) access during burst accesses.

Entire rows are held in shift registers within the memory chips. For example, a 1 Mibit chip can have 1024 rows of 1024 bits each. On the first access, a fast internal 1024-bit register is filled with the data of one row. In the case of burst accesses, the data of the following addresses is then already in the shift register and can be read from it with a very short access time.

It makes sense to transfer not only the requested bit to the processor, but an entire so-called "cache line", which today is 512 bits (see processor cache).

Manufacturers

The largest memory chip manufacturers are:

These manufacturers together hold a 97 percent market share. Suppliers of memory modules such as Corsair, Kingston Technology, MDT, OCZ, A-Data, etc. (so-called third-party manufacturers) buy chips from the manufacturers mentioned and solder them onto their own boards, for which they design their own layout. In addition, they program the SPD timings according to their own specifications, which may be set tighter than those of the original manufacturer.

For dual-channel or triple-channel operation, modules that are as nearly identical as possible should be used, so that the firmware (on PCs, the BIOS or UEFI) does not refuse parallel operation because of unforeseeable incompatibilities and the system does not become unstable as a result. It is common practice for a manufacturer to solder different chips onto its modules for the same product in the course of production or, conversely, for different manufacturers to use the same chips. Since this information is inaccessible in almost all cases, buying memory kits is the safe choice, although dual- or triple-channel mode usually also works with different modules.

As intermediaries between the large memory chip and module manufacturers on the one hand and retailers and consumers on the other, providers such as CompuStocx, CompuRAM, MemoryXXL and Kingston have established themselves, offering memory modules specified for the most common systems. This is necessary because some systems, owing to artificial restrictions imposed by the manufacturer, only work with memory that meets proprietary specifications.

See also

- Semiconductor memory

- Memory alignment

- Standby mode, hibernation mode: energy-saving techniques that affect how main memory is handled

- Memory protection, storage medium, storage

Web links

- More RAM can work wonders. In: Heise Video. May 3, 2014, accessed May 17, 2014.

References

- Duden Informatik, a subject dictionary for study and practice. ISBN 3-411-05232-5, p. 296.

- Sebastian Dworatschek: Basics of data processing. p. 263.

- Hybrid Memory Cube: ARM, HP and Hynix support the memory cube. In: Golem.de. June 28, 2012, accessed June 29, 2012.

- cf. Memory and the swap file under Windows. In: onsome.de (with a simple flow chart for memory access).

- http://www.computerbase.de/2014-01/amd-kaveri-arbeitsspeicher/

- Antonio Funes: Adviser RAM - You should know that before you buy RAM. In: PC Games. November 28, 2010, accessed January 1, 2015: "When you buy RAM, you should use two or four bars that are as identical as possible - the bars do not have to be identical, but it reduces the risk of incompatibility."