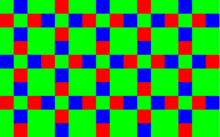

Bayer sensor

A Bayer sensor is a photo sensor that is covered with a color filter array arranged like a chessboard, usually consisting of 50% green and 25% each of red and blue. Green is favored in the area allocation (and thus in resolution) because green (or the green component in shades of gray) makes the greatest contribution to the perception of brightness in the human eye, and thus also to the perception of contrast and sharpness: 72% of the perceived brightness and contrast of gray tones is due to their green component, whereas red contributes only 21% and blue only 7%. In addition, green, as the middle color of the visible spectrum, is the color for which lenses usually deliver their highest imaging performance (sharpness, resolution).
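The brightness shares quoted above can be read as weights in a weighted sum. The following is a minimal sketch, assuming linear channel values between 0 and 1; the names `BRIGHTNESS_SHARE` and `perceived_brightness` are illustrative and not taken from any library.

```python
# Shares of perceived brightness per channel, as quoted in the text.
BRIGHTNESS_SHARE = {"R": 0.21, "G": 0.72, "B": 0.07}


def perceived_brightness(r, g, b):
    """Weighted sum of the three channels (hypothetical helper)."""
    return (BRIGHTNESS_SHARE["R"] * r
            + BRIGHTNESS_SHARE["G"] * g
            + BRIGHTNESS_SHARE["B"] * b)


# A mid gray, and the same gray with only its green component left.
gray = perceived_brightness(0.5, 0.5, 0.5)
green_only = perceived_brightness(0.0, 0.5, 0.0)

# Fraction of the gray tone's brightness carried by green alone.
green_fraction = green_only / gray
```

With these weights the green component alone carries about 72% of the brightness of a gray tone, which is the motivation for giving green half of the sensor area.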

Almost all common image sensors in digital still and motion-picture cameras work according to this concept of the Bayer matrix (also called the Bayer pattern). This type of sensor contrasts with the Foveon X3 direct image sensor. Compare also the Super CCD sensor.

The "Bayer matrix" or "Bayer filter" is named after its inventor, Bryce Bayer, who filed the patent in the United States on March 5, 1975, on behalf of the Eastman Kodak Company (Patent US3971065: Color imaging array).

Function and structure

The light-sensitive cells on the semiconductor can only record brightness values. To obtain color information, a tiny color filter in one of the three primary colors red, green, or blue is applied in front of each individual cell. The filters are applied, for example, in the sequence green-red in the odd rows and blue-green in the even rows. Each pixel therefore supplies information for only a single color component at its position, so that for a complete image with the same dimensions, the neighboring pixels of the same color must be used for color interpolation. For green, 50% of the pixels must be calculated; for blue and red, 75% of the area must be filled in by calculation (50% of the pixels in one row and 100% in the next). Color interpolation assumes that there are only slight color differences between two neighboring pixels of the same color, and that the gray values of the pixels are therefore not stochastically independent of one another. Of course, this does not hold for every subject. Strictly speaking, a Bayer sensor therefore has only a quarter of its apparent resolution when an artifact-free image is considered.
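The filter layout and the resulting percentages can be checked with a short sketch. It assumes the example layout from the text (green-red in the first row, blue-green in the second); the function name `bayer_pattern` is illustrative.

```python
from collections import Counter


def bayer_pattern(height, width):
    """Build the example layout: G R G R ... / B G B G ... (hypothetical helper)."""
    rows = []
    for r in range(height):
        if r % 2 == 0:
            # Odd lines in the text's 1-based counting: green-red sequence.
            rows.append(["G" if c % 2 == 0 else "R" for c in range(width)])
        else:
            # Even lines in the text's 1-based counting: blue-green sequence.
            rows.append(["B" if c % 2 == 0 else "G" for c in range(width)])
    return rows


pattern = bayer_pattern(4, 4)
counts = Counter(color for row in pattern for color in row)
total = sum(counts.values())

# Share of photosites that measure each color directly ...
measured = {color: counts[color] / total for color in "RGB"}
# ... and share of positions where that color has to be interpolated.
missing = {color: 1 - measured[color] for color in "RGB"}

for row in pattern:
    print(" ".join(row))
```

For this layout, `measured` is 25% / 50% / 25% for red, green, and blue, and `missing` is 75% / 50% / 75%, matching the figures in the paragraph above.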

Such sensors almost always have additional pixels at the edge of the sensor surface, which are usually blackened. They make it possible to measure the temperature-dependent background noise of the sensor during operation under exposure and to account for it computationally, for example by calculating a compensation value ("offset") for the evaluation of the other pixels. These pixels can also be used, for example, to detect extreme overexposure caused by too long an integration time (= exposure time) of the sensor elements. For the normal camera user they are of no importance, since the calibration runs automatically and, depending on the model, may already be implemented directly on the sensor.
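How such an offset might be applied can be sketched as follows. This is only an illustration under simple assumptions: the offset is taken as the plain mean of the blackened pixels, and all sample values are invented. Real cameras may use more elaborate, model-specific calibration.

```python
from statistics import mean


def subtract_black_level(active, masked):
    """Estimate the offset from blackened pixels and remove it (hypothetical helper)."""
    offset = mean(masked)
    # Clamp at zero so that noise below the offset does not become negative.
    corrected = [[max(0, value - offset) for value in row] for row in active]
    return offset, corrected


# Invented raw readings: blackened edge pixels and a tiny active area.
masked_pixels = [64, 66, 63, 65, 62]
active_pixels = [[70, 200], [64, 1023]]

offset, corrected = subtract_black_level(active_pixels, masked_pixels)
```

In this made-up example the offset is 64, so a reading of 70 becomes 6 and a reading equal to the offset becomes 0.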

Interpolation

The interpolation mentioned above (demosaicing) can be carried out in different ways. Simple methods interpolate the color value from the neighboring pixels of the same color. Since this approach is particularly problematic perpendicular to edges, other methods try to interpolate along edges rather than across them. Still other algorithms are based on the assumption that the hue of an area in the image is relatively constant even under changing lighting conditions, so that the color channels correlate strongly with one another. They therefore interpolate the green channel first and then interpolate the red and blue channels so that the color ratios red-green and blue-green remain constant. Further methods make other assumptions about the image content and use them to calculate the missing color values. With 5 × 5 matrix filters, for example, a smoothed image is generated that is then sharpened again.
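The simplest of these approaches, averaging the neighboring pixels of the same color, can be sketched as follows. The sketch assumes the green-red / blue-green layout used as an example earlier and a 3 × 3 neighborhood clipped at the image border; the function names are illustrative, and the edge-directed and color-ratio methods are deliberately not covered.

```python
def cfa_channel(row, col):
    """Channel index (0=R, 1=G, 2=B) of the filter at a photosite, G R / B G layout."""
    if row % 2 == 0:
        return 1 if col % 2 == 0 else 0
    return 2 if col % 2 == 0 else 1


def demosaic_neighbor_average(raw):
    """Fill each missing channel with the mean of same-color neighbors (sketch)."""
    height, width = len(raw), len(raw[0])
    out = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for r in range(height):
        for c in range(width):
            for ch in range(3):
                if cfa_channel(r, c) == ch:
                    # This channel was measured directly at this photosite.
                    out[r][c][ch] = float(raw[r][c])
                    continue
                # Collect same-color samples from the clipped 3 x 3 window.
                samples = [raw[rr][cc]
                           for rr in range(max(0, r - 1), min(height, r + 2))
                           for cc in range(max(0, c - 1), min(width, c + 2))
                           if cfa_channel(rr, cc) == ch]
                out[r][c][ch] = sum(samples) / len(samples)
    return out


# A uniformly lit raw frame should come back as a uniform gray image.
flat_raw = [[10] * 4 for _ in range(4)]
rgb = demosaic_neighbor_average(flat_raw)
```

The sketch requires a raw frame of at least 2 × 2 pixels, so that every window contains all three filter colors. On images with sharp edges this method blurs across the edge, which is the weakness that the edge-aware methods described above try to avoid.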

Problems (image errors, often called interpolation artifacts) can arise if the assumptions made by the algorithm are violated in a particular image. For example, the assumption mentioned above, used by many more advanced algorithms, that the color planes correlate no longer holds if the color planes are shifted relative to one another toward the image borders because of the chromatic aberration of commercially available lenses.

Another problem is stripe patterns whose stripe width roughly corresponds to that of a single pixel, for example a picket fence at a suitable distance. Since the Bayer raw image pattern produced by such a subject could have been produced by either horizontal or vertical picket fences (of different colors), the algorithm must decide whether the structure is horizontal or vertical and how plausible each color combination is. Since the algorithm lacks a human's knowledge of real-world subjects, it often decides at random and therefore wrongly. Such a picket fence can then be rendered incorrectly as a random mixture of horizontal and vertical sections, resembling a labyrinth.

Consider, for example, an image region in which all red pixels light up and, of the green pixels, only those in the red columns. The following subjects would match this raw pattern:

- a vertical picket fence with white pickets against a black background

- a vertical picket fence with yellow pickets against a black background

- a vertical picket fence with white pickets against a red background

- a vertical picket fence with yellow pickets against a red background

- a horizontal picket fence with red pickets against a green background

- a horizontal picket fence with red pickets against a yellow background

- a horizontal picket fence with purple pickets against a green background

- a horizontal picket fence with purple pickets against a yellow background

- as well as all conceivable picket fence or grid subjects that are any mixture of the above options.

In this simple theoretical example, an algorithm could, for example, prefer the variant with the least overall colorfulness and thus assume vertical white pickets against a black background. In practice, however, the structures are hardly ever aligned exactly with the Bayer grid, so that for such a picket fence subject there is no black-and-white option to choose; instead, several alternatives of similar color plausibility compete, and the decision becomes random.
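The ambiguity of the picket fence example can be reproduced in a few lines. The sketch below assumes the green-red / blue-green layout, idealized colors with channel values 0 or 1, and pickets exactly one pixel wide and aligned with the grid; all function names are illustrative.

```python
def cfa_channel(row, col):
    """Channel index (0=R, 1=G, 2=B) of the filter at a photosite, G R / B G layout."""
    if row % 2 == 0:
        return 1 if col % 2 == 0 else 0
    return 2 if col % 2 == 0 else 1


def mosaic(scene):
    """Keep only the channel that the filter at each photosite lets through."""
    return [[pixel[cfa_channel(r, c)] for c, pixel in enumerate(row)]
            for r, row in enumerate(scene)]


BLACK, WHITE = (0, 0, 0), (1, 1, 1)
RED, GREEN, YELLOW = (1, 0, 0), (0, 1, 0), (1, 1, 0)


def vertical_fence(picket, background, size=4):
    # Pickets fall on the columns that contain red photosites.
    return [[picket if c % 2 == 1 else background for c in range(size)]
            for _ in range(size)]


def horizontal_fence(picket, background, size=4):
    # Pickets fall on the rows that contain red photosites.
    return [[picket if r % 2 == 0 else background for _ in range(size)]
            for r in range(size)]


raw_white_on_black = mosaic(vertical_fence(WHITE, BLACK))
raw_yellow_on_black = mosaic(vertical_fence(YELLOW, BLACK))
raw_red_on_green = mosaic(horizontal_fence(RED, GREEN))
```

All three scenes yield the same raw data, in which every red photosite and every green photosite in a red column reads 1 and everything else reads 0. From the raw values alone, a demosaicing algorithm cannot tell these subjects apart.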

These problems are mitigated, however, as the resolution of modern sensors reaches or even exceeds the resolution of the lenses, especially with zoom lenses or lenses in the lower price range. Since the resolution limit of lenses (in contrast to that of sensors) is not fixed but defined as a contrast limit, such lenses can reproduce finely detailed, artifact-prone parts of the subject on the sensor only with very low contrast. Interpolation artifacts in such details therefore also have very low contrast and are less disturbing.

Processing example

An example of a Bayer image reconstruction with software. For the sake of illustration, the images are enlarged by a factor of 10.

Alternative developments

Kodak has experimented with various pixel arrangements with additional "white" pixels. Sony also installed "white" pixels in some models and, in 2003, used an image sensor with two shades of green in the Sony DSC-F 828 (RGEB = red / green / emerald / blue).

In addition, a Bayer variant was developed in which the two green pixels of a 2 × 2 block each had different color filters (for slightly different shades of green). This variant was used for example in the Canon EOS 7D and by other manufacturers.

Fujifilm introduced a different approach with its Fujifilm X-Pro1 camera, launched in 2012: the RGB pixels are distributed on the sensor in a different ratio (22% / 56% / 22% instead of 25% / 50% / 25%) and in a different arrangement (X-Trans). The unit cell (after which the pattern repeats) grows from 2 × 2 to 6 × 6 pixels, and every pixel color appears in every row and every column. Since red and blue pixels are now no longer exactly 2 but on average 2.23 units of length from their nearest neighbors of the same color, the resolution of the red and blue planes is reduced by around ten percent. Paradoxically, the green resolution is reduced as well, because every green pixel within a green 2 × 2 square still has exactly four green neighbors, as in the Bayer pattern, but they are now unevenly distributed: two closer and two further away.
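The stated ratio and the row and column property can be checked against a 6 × 6 unit cell. The cell below is a commonly published rendering of the X-Trans layout and is an assumption here, since the text does not give the arrangement itself; the variable names are illustrative.

```python
from collections import Counter

# Assumed 6 x 6 unit cell (one row per string); not given in the text above.
XTRANS_CELL = [
    "GBGGRG",
    "RGRBGB",
    "GBGGRG",
    "GRGGBG",
    "BGBRGR",
    "GRGGBG",
]

counts = Counter("".join(XTRANS_CELL))
# Percentage of the 36 photosites covered by each filter color.
shares = {color: round(100 * counts[color] / 36, 1) for color in "RGB"}

# Every color should occur in every row and in every column.
rows_ok = all(set(row) == set("RGB") for row in XTRANS_CELL)
cols_ok = all(set(col) == set("RGB") for col in zip(*XTRANS_CELL))
```

For this cell the counts are 8 red, 20 green, and 8 blue photosites, which corresponds to the rounded 22% / 56% / 22% ratio, and both checks hold.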

Since mathematical research on color pattern interpolation algorithms regularly starts from the classic Bayer pattern, such alternative color patterns often cannot benefit sufficiently from more recent algorithmic approaches and are consequently at a disadvantage in the quality of conversion to a full-color image by raw conversion software.

Web links

- Color processing with Bayer mosaic sensors, calculation algorithms for color interpolation in a Bayer matrix (PDF; 341 kB)

- Demosaicing: Color Filter Array Interpolation in Single-Chip Digital Cameras, interpolation algorithms (PDF, English; 328 kB)

- Markus Bautsch: Color Filter Arrays , in: Wikibooks Digital Imaging Processes - Light Conversion

References

- Uwe Furtner: Color processing with Bayer Mosaic sensors (PDF; 350 kB). Matrix Vision GmbH. August 31, 2001. Retrieved December 27, 2010.

- Sony digital camera DSC-F 828 with four-color chip - better than the others, test.de (March 2003), accessed online on October 2, 2012.