Probabilistic classification

In probabilistic classification, a classifier predicts a probability distribution over a set of classes for a given observation, rather than only returning the most likely class. The predicted probabilities can be used directly or combined in an ensemble with the predictions of other models.
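The text does not say how probabilities from several models are combined. One common choice, assumed here, is to average the per-class probabilities (soft voting). The function name `average_probabilities` and the three model outputs are hypothetical; this is a sketch, not a prescribed method.

```python
from typing import List, Sequence


def average_probabilities(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Soft voting: average several models' per-class probability vectors.

    Each inner sequence is one model's predicted distribution over the same,
    identically ordered set of classes. The average of distributions is again
    a distribution (non-negative, sums to 1).
    """
    if not predictions:
        raise ValueError("need at least one model's prediction")
    n_classes = len(predictions[0])
    if any(len(p) != n_classes for p in predictions):
        raise ValueError("all models must score the same classes")
    n_models = len(predictions)
    return [sum(p[k] for p in predictions) / n_models for k in range(n_classes)]


# Hypothetical outputs of three models for one observation, classes (a, b, c)
model_outputs = [
    [0.7, 0.2, 0.1],
    [0.5, 0.3, 0.2],
    [0.6, 0.1, 0.3],
]
ensemble = average_probabilities(model_outputs)  # approximately [0.6, 0.2, 0.2]
```

Weighted averages or averaging log-probabilities are equally plausible combination rules; unweighted averaging is shown only because it is the simplest.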

Definition

An ordinary, non-probabilistic classifier can be viewed as a function $h$ that assigns a class label $\hat{y}$ to an observation $x$:

$$\hat{y} = h(x).$$

The observation $x$ comes from a set $X$ of all possible observations (e.g. documents or images). The possible labels form a finite set $Y$, so that $h \colon X \to Y$.

In contrast, probabilistic classifiers describe a conditional probability $\Pr(y \mid x)$: for an observation $x \in X$, they assign a probability to each possible label $y \in Y$, and these probabilities sum to 1. If necessary, a single class can be selected from this distribution to obtain a non-probabilistic classifier. If the predicted probabilities are accurate, choosing the class with the highest probability minimizes the expected misclassification rate:

$$\hat{y} = \arg\max_{y \in Y} \Pr(y \mid x).$$
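A minimal sketch of this arg max rule, assuming the predicted distribution for one observation is given as a Python dictionary from label to probability. The function `predict_label` and the example labels are hypothetical.

```python
from typing import Dict


def predict_label(probabilities: Dict[str, float]) -> str:
    """Collapse a predicted distribution Pr(y | x) to one label via arg max."""
    total = sum(probabilities.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError(f"probabilities must sum to 1, got {total}")
    # max() over the labels, ranked by their predicted probability
    return max(probabilities, key=lambda label: probabilities[label])


# Hypothetical predicted distribution for one observation x
distribution = {"cat": 0.15, "dog": 0.80, "bird": 0.05}
label = predict_label(distribution)  # "dog"
```

The full distribution is discarded in this step, which is why a probabilistic classifier carries strictly more information than the label it produces.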

Probability calibration

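Some classification models produce probabilistic outputs by design, for example naive Bayes, logistic regression, and neural networks with a softmax output layer. However, these probabilities are not necessarily accurate, because various effects can distort them; naive Bayes, for instance, tends to push predicted probabilities toward 0 and 1.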
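A network with a softmax output turns a vector of real-valued scores (logits) into a probability distribution. The following sketch shows that transformation; the logits are hypothetical example values.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Map real-valued scores (logits) to a probability distribution."""
    # Subtracting the maximum avoids overflow in exp(); it cancels out in the ratio.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits of a three-class network for one observation
probs = softmax([2.0, 1.0, 0.1])
```

The outputs are non-negative and sum to 1 by construction, but that alone says nothing about whether they are calibrated.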

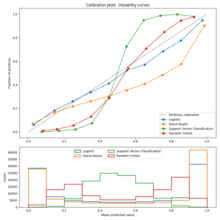

For a well-calibrated classifier, the predicted probability of a class can be interpreted directly: among all observations to which the classifier assigns a probability of 80 percent for a certain class, about 80 percent should actually belong to that class. Calibration can be examined with a calibration curve (reliability diagram), which groups the predictions into bins and plots the observed fraction of positive cases against the mean predicted probability in each bin. A perfectly calibrated classifier lies on the diagonal; larger deviations from it indicate poorer calibration.
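To make the calibration curve concrete, here is a sketch for the binary case that groups predicted probabilities of the positive class into equal-width bins on [0, 1] and returns, for each non-empty bin, the mean predicted probability (x-axis) and the observed fraction of positives (y-axis). The function name `reliability_points`, the equal-width binning, and the default of five bins are assumptions; scikit-learn's `sklearn.calibration.calibration_curve` offers a comparable computation.

```python
from typing import List, Sequence, Tuple


def reliability_points(y_true: Sequence[int], y_prob: Sequence[float],
                       n_bins: int = 5) -> List[Tuple[float, float]]:
    """Return (mean predicted probability, observed fraction of positives)
    for each non-empty equal-width bin on [0, 1]."""
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    sums_pred = [0.0] * n_bins
    sums_true = [0.0] * n_bins
    counts = [0] * n_bins
    for label, p in zip(y_true, y_prob):
        # A probability of exactly 1.0 would map to bin n_bins; clamp it into the last bin.
        b = min(int(p * n_bins), n_bins - 1)
        sums_pred[b] += p
        sums_true[b] += label
        counts[b] += 1
    return [
        (sums_pred[b] / counts[b], sums_true[b] / counts[b])
        for b in range(n_bins)
        if counts[b] > 0
    ]


# Hypothetical predictions: five at 0.2 (one positive), five at 0.8 (four positive)
y_prob = [0.2] * 5 + [0.8] * 5
y_true = [1, 0, 0, 0, 0] + [1, 1, 1, 1, 0]
points = reliability_points(y_true, y_prob)
```

In this toy data each bin's observed fraction matches its mean prediction, so both points lie on the diagonal.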

Various techniques can improve the calibration of a poorly calibrated model. In the binary case, these include Platt scaling, which fits a logistic regression model to the classifier's scores, and isotonic regression, which fits a non-decreasing step function. Isotonic regression outperforms Platt scaling when sufficient calibration data are available, but is more prone to overfitting on small data sets.
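A sketch of Platt scaling for the binary case, assuming the uncalibrated classifier outputs a real-valued score `s` per observation and that a held-out set of scores with 0/1 labels is available. It fits p(y = 1 | s) = sigmoid(a*s + b) by plain gradient descent on the log loss; Platt's paper uses a different optimizer, a sign-flipped parameterization, and smoothed target values, all omitted here. The names `fit_platt` and `platt_predict`, the learning rate, and the iteration count are hypothetical.

```python
import math
from typing import Sequence, Tuple


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in exp for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_platt(scores: Sequence[float], labels: Sequence[int],
              lr: float = 0.1, n_iter: int = 5000) -> Tuple[float, float]:
    """Fit p(y = 1 | s) = sigmoid(a * s + b) by gradient descent on the mean log loss."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            # d(log loss)/d(a*s + b) = p - y for the logistic model
            err = _sigmoid(a * s + b) - y
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def platt_predict(a: float, b: float, score: float) -> float:
    """Calibrated probability of the positive class for a raw classifier score."""
    return _sigmoid(a * score + b)


# Hypothetical held-out scores from an uncalibrated classifier, with true labels
a, b = fit_platt([-2.0, -1.0, -0.5, 0.5, 1.0, 2.0], [0, 0, 1, 0, 1, 1])
```

Because only two parameters are learned, this mapping needs little calibration data, which matches the trade-off against isotonic regression described above.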
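Isotonic regression can be fit with the pool adjacent violators (PAV) algorithm: sort the calibration examples by score and repeatedly merge neighboring blocks whose mean label would otherwise decrease. This sketch assumes binary 0/1 labels and real-valued scores, pools tied scores into one block, and predicts with a plain step function. The names `fit_isotonic` and `isotonic_predict` are hypothetical, and library implementations (e.g. scikit-learn's `IsotonicRegression`) may interpolate between steps instead.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def fit_isotonic(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """Pool Adjacent Violators: fit a non-decreasing step function score -> Pr(y = 1).

    Returns (upper score bound, fitted probability) pairs in increasing score order.
    """
    pairs = sorted(zip(scores, labels))
    blocks = []  # each block: [sum of labels, count, largest score in the block]
    for s, group in groupby(pairs, key=lambda pair: pair[0]):
        ys = [y for _, y in group]  # tied scores are pooled into a single block
        blocks.append([float(sum(ys)), len(ys), s])
        # Merge backwards while the previous block's mean exceeds the newest block's mean.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count, upper = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = upper
    return [(upper, total / count) for total, count, upper in blocks]


def isotonic_predict(model: List[Tuple[float, float]], score: float) -> float:
    """Return the fitted value of the first block whose upper bound is >= score.

    Scores beyond the largest training score get the last block's value.
    """
    for upper, value in model:
        if score <= upper:
            return value
    return model[-1][1]


# Hypothetical held-out scores and true labels
model = fit_isotonic([0.1, 0.2, 0.3, 0.4, 0.5, 0.6], [0, 1, 0, 0, 1, 1])
```

Every block can take its own value, so the fit follows the data more closely than Platt scaling's two-parameter sigmoid; with few examples that flexibility is also what makes it overfit.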

References

- Christopher M. Bishop: Pattern Recognition and Machine Learning. Springer Science+Business Media, 2006, ISBN 978-0-387-31073-2.

- Comparison of Calibration of Classifiers. In: scikit-learn. Retrieved July 28, 2020.

- John C. Platt: Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods. In: Advances in Large Margin Classifiers. Vol. 10, no. 3, 1999, pp. 61–74.

- Bianca Zadrozny, Charles Elkan: Transforming classifier scores into accurate multiclass probability estimates. In: Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2002, pp. 694–699.

- Alexandru Niculescu-Mizil, Rich Caruana: Predicting good probabilities with supervised learning. In: Proceedings of the 22nd International Conference on Machine Learning. 2005, pp. 625–632.