Logistic regression

Logistic regression, or the logit model, refers to regression analyses for modeling (usually with several regressors) the distribution of discrete dependent variables. Unless a logistic regression is explicitly described as multinomial or ordered, the term usually means binomial logistic regression for dichotomous (binary) dependent variables. The independent variables can have any scale level; discrete variables with more than two categories are broken down into a series of binary dummy variables.

In the binomial case, there are observations of the type $(y_i, x_i)$, $i = 1, \dots, n$, where $y_i$ is a binary dependent variable (the so-called regressand) that occurs together with $x_i$, a known and fixed covariate vector of regressors. $n$ denotes the number of observations. The logit model results from the assumption that the error terms are independent and identically Gumbel-distributed. Ordinal logistic regression is an extension of logistic regression; one variant of it is the cumulative logit model.

Motivation

Classical linear regression analysis is not suited to investigating influences on discrete variables, because essential requirements, in particular normally distributed residuals and homoscedasticity, are not met. Furthermore, a linear regression model can produce inadmissible predictions for such a variable: if the two values of the dependent variable are coded as 0 and 1, the prediction of a linear regression model can be read as a prediction of the probability that the dependent variable takes the value 1, formally $P(Y_i = 1)$, but values outside the interval from 0 to 1 can be predicted. Logistic regression solves this problem through a suitable transformation of the expected value of the dependent variable.
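
The problem of inadmissible predictions can be illustrated with a small sketch. The data below are invented for illustration, and the single-regressor least-squares formulas are used only to keep the example short; it is not meant as a recommended analysis.

```python
# Toy data (hypothetical): one regressor x and a binary outcome y coded 0/1.
x = [0, 1, 2, 3, 4, 5, 6, 7]
y = [0, 0, 0, 0, 1, 1, 1, 1]

n = len(x)
mean_x = sum(x) / n
mean_y = sum(y) / n

# Ordinary least squares for a single regressor.
sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
sxx = sum((xi - mean_x) ** 2 for xi in x)
slope = sxy / sxx
intercept = mean_y - slope * mean_x


def linear_prediction(value):
    """Fitted value of the linear model, read as a 'probability'."""
    return intercept + slope * value


lowest = linear_prediction(0)   # falls below 0 for these data
highest = linear_prediction(7)  # rises above 1 for these data
```

For these data the fitted line leaves the admissible range at both ends, which is exactly the situation the logistic transformation avoids.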

The relevance of the logit model is also underlined by the fact that Daniel McFadden received the Nobel Memorial Prize in Economic Sciences in 2000, shared with James Heckman, for his development of theory and methods for analyzing discrete choice, in which logit models play a central role.

Application requirements

In addition to the nature of the variables, as presented in the introduction, there are a number of application requirements. For example, the regressors should not exhibit high multicollinearity.

Model specification

The (binomial) logistic regression model is

$$P(Y_i = 1 \mid x_i) = \frac{\exp(x_i^\top \beta)}{1 + \exp(x_i^\top \beta)},$$

where $\beta$ is the unknown vector of regression coefficients and the product $x_i^\top \beta = \eta_i$ is the linear predictor.
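
As a minimal sketch of the model equation, the following computes the response probability from a linear predictor. The function names and the coefficient values are hypothetical, and the covariate vector is assumed to carry a leading 1 for the intercept.

```python
import math


def linear_predictor(x, beta):
    """eta = x' beta; x is assumed to start with a 1 for the intercept."""
    return sum(xj * bj for xj, bj in zip(x, beta))


def response_probability(x, beta):
    """P(Y = 1 | x) = exp(eta) / (1 + exp(eta)), evaluated without overflow."""
    eta = linear_predictor(x, beta)
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    z = math.exp(eta)
    return z / (1.0 + z)


beta = [-1.0, 0.5]                          # hypothetical (intercept, slope)
p = response_probability([1.0, 2.0], beta)  # eta = -1 + 0.5 * 2 = 0
```

Whatever the value of the linear predictor, the result stays between 0 and 1; a linear predictor of 0 corresponds to a probability of one half.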

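The model starts from the idea of odds, i.e. the ratio of the probability $P(Y = 1)$ to the complementary probability $1 - P(Y = 1)$, which equals $P(Y = 0)$ when the alternative category is coded as 0:

$$\text{odds} = \frac{P(Y = 1)}{1 - P(Y = 1)} = \frac{P(Y = 1)}{P(Y = 0)}$$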

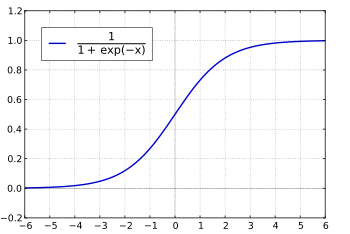

Odds can take values greater than 1, but their range is bounded below (it approaches 0 asymptotically). An unbounded range is obtained by transforming the odds into so-called logits,

$$\operatorname{logit}(p) = \ln\left(\frac{p}{1 - p}\right), \quad p = P(Y_i = 1),$$

which can take any value between minus and plus infinity. The logits serve as a kind of link function between the probability and the linear predictor. Logistic regression then estimates the regression equation

$$\operatorname{logit}\bigl(P(Y_i = 1)\bigr) = \beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik};$$

that is, regression weights are determined from which the estimated logits can be calculated for a given matrix of independent variables $X$. The accompanying graphic shows how the logits (ordinate) relate to the underlying probabilities (abscissa).

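The regression coefficients of logistic regression are not easy to interpret directly. They are therefore often exponentiated, so that the regression equation refers to the odds:

$$\frac{P(Y_i = 1)}{1 - P(Y_i = 1)} = \exp(\beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik}) = e^{\beta_0} \cdot e^{\beta_1 x_{i1}} \cdots e^{\beta_k x_{ik}}$$

The exponentiated coefficients $e^{\beta_j}$ are often referred to as effect coefficients. Coefficients smaller than 1 indicate a negative influence on the odds; the influence is positive if $e^{\beta_j} > 1$.

By means of a further transformation, the influences in logistic regression can also be expressed as influences on the probabilities:

$$P(Y_i = 1) = \frac{\exp(\beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik})}{1 + \exp(\beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik})} = \frac{1}{1 + \exp\bigl(-(\beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik})\bigr)}$$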
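
The relationship between probabilities, odds, logits and effect coefficients can be checked numerically. The sketch below assumes a model with one regressor and invented coefficients `beta0` and `beta1`; all names are illustrative.

```python
import math


def odds(p):
    """Odds of an event with probability p."""
    return p / (1.0 - p)


def logit(p):
    """Log-odds; maps (0, 1) onto the whole real line."""
    return math.log(odds(p))


def inverse_logit(eta):
    """Maps a logit back to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


beta0, beta1 = -2.0, 0.7  # hypothetical coefficients


def probability(x):
    return inverse_logit(beta0 + beta1 * x)


# Raising x by one unit multiplies the odds by exp(beta1), whatever x is.
odds_ratio = odds(probability(3.0)) / odds(probability(2.0))
effect_coefficient = math.exp(beta1)

# The change in probability, by contrast, depends on the starting point.
change_low = probability(1.0) - probability(0.0)
change_high = probability(3.0) - probability(2.0)
```

This is why the effect on the odds can be summarized by a single number per regressor, while the effect on the probability has to be reported for specific values of the regressors.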

Estimation method

In contrast to linear regression analysis, the best-fitting regression curve cannot be calculated directly in closed form. For this reason, a maximum likelihood solution is usually found using an iterative algorithm.
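
One possible iterative scheme is Newton-Raphson, sketched below for a model with an intercept and a single regressor. The text does not say which algorithm is meant, so this choice is an assumption; the function name `fit_logistic` and the toy data are hypothetical. The sketch also assumes the data are not perfectly separable, since otherwise no finite maximum likelihood estimate exists.

```python
import math


def fit_logistic(x, y, iterations=25, tol=1e-10):
    """Newton-Raphson for a logit model with intercept b0 and one slope b1."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = 0.0           # score vector (gradient of the log-likelihood)
        h00 = h01 = h11 = 0.0   # information matrix (negative Hessian)
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        # Newton step: solve the 2x2 system (information matrix) * step = score.
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1


# Toy data (hypothetical); the classes overlap, so the estimate is finite.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [0, 0, 1, 0, 1, 0, 1, 1]
b0, b1 = fit_logistic(x, y)
```

At the solution the score equations hold, that is, the residuals `y - p` sum to zero both unweighted and weighted by `x`. Statistical software typically uses a closely related scheme for several regressors.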

Model diagnosis

The regression parameters are estimated by the maximum likelihood method. Inferential statistical methods are available both for the individual regression coefficients and for the overall model (see Wald test and likelihood-ratio test). In analogy to the linear regression model, methods of regression diagnostics have also been developed that identify individual cases with an excessive influence on the result of the model estimation. Finally, there are several proposals for a quantity that, in analogy to the coefficient of determination of linear regression, estimates the “explained variation”; these are known as pseudo-coefficients of determination (pseudo-R²). The Akaike information criterion and the Bayesian information criterion are also occasionally used in this context.
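
Several of these quantities follow directly from the log-likelihood. The sketch below compares a model against the intercept-only model; the outcomes and fitted probabilities are invented and merely assumed to come from a fitted one-regressor logit model. McFadden's measure is used as one example of a pseudo-R², which is a choice made here and not one named in the text.

```python
import math


def log_likelihood(y, p):
    """Bernoulli log-likelihood of outcomes y under probabilities p."""
    return sum(
        yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi)
        for yi, pi in zip(y, p)
    )


# Hypothetical outcomes and fitted probabilities (illustration only).
y = [0, 0, 1, 0, 1, 0, 1, 1]
p_model = [0.10, 0.20, 0.30, 0.40, 0.60, 0.70, 0.80, 0.90]
k = 2        # estimated parameters: intercept and one slope
n = len(y)

ll_model = log_likelihood(y, p_model)

# Intercept-only model: every case gets the sample proportion of ones.
p_null = sum(y) / n
ll_null = log_likelihood(y, [p_null] * n)

# Likelihood-ratio statistic; with one extra parameter it is compared
# with a chi-square distribution with 1 degree of freedom.
lr_statistic = 2.0 * (ll_model - ll_null)
p_value = math.erfc(math.sqrt(max(lr_statistic, 0.0) / 2.0))

mcfadden_r2 = 1.0 - ll_model / ll_null   # one of several pseudo-R² measures
aic = 2 * k - 2.0 * ll_model             # Akaike information criterion
bic = k * math.log(n) - 2.0 * ll_model   # Bayesian information criterion
```

The tail probability uses the identity that a chi-square variable with one degree of freedom is a squared standard normal variable; for more degrees of freedom a statistics library would be needed.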

The Hosmer-Lemeshow test is often used to assess goodness of fit, particularly in models for risk adjustment. This test compares the predicted and observed event rates in subgroups of the population, often the deciles, ordered by predicted probability of occurrence. The test statistic is calculated as follows:

$$H = \sum_{g=1}^{G} \frac{(O_g - E_g)^2}{N_g \pi_g (1 - \pi_g)}$$

Here $O_g$ denotes the observed events, $E_g$ the expected events, $N_g$ the number of observations and $\pi_g$ the probability of occurrence in the $g$-th quantile group. The number of groups is $G$.
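
A minimal sketch of this statistic follows. It assumes that the cases are split into consecutive, nearly equal-sized groups after sorting by predicted probability, and that the expected events of a group are the sum of its predicted probabilities; the function name and the data are hypothetical.

```python
def hosmer_lemeshow(y, p, groups=10):
    """Sum over groups of (O_g - E_g)^2 / (N_g * pi_g * (1 - pi_g))."""
    pairs = sorted(zip(p, y))   # order cases by predicted probability
    n = len(pairs)
    statistic = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        if not chunk:
            continue            # can happen if there are fewer cases than groups
        n_g = len(chunk)
        observed = sum(yi for _, yi in chunk)   # O_g
        expected = sum(pi for pi, _ in chunk)   # E_g
        pi_g = expected / n_g                   # mean predicted probability
        # Assumes 0 < pi_g < 1; otherwise the denominator is zero.
        statistic += (observed - expected) ** 2 / (n_g * pi_g * (1.0 - pi_g))
    return statistic


# Hypothetical predictions and outcomes, split into four groups of two.
p = [0.1, 0.1, 0.3, 0.3, 0.6, 0.6, 0.9, 0.9]
y = [0, 0, 0, 1, 1, 0, 1, 1]
h = hosmer_lemeshow(y, p, groups=4)
```

Large values of the statistic indicate that observed and predicted event rates diverge within the groups. In practice far more cases per group are needed than in this toy example.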

The ROC curve is also used to assess the predictive power of logistic regressions, with the area under the ROC curve (AUROC for short) serving as a quality criterion.
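
The AUROC can be computed without drawing the curve, because it equals the probability that a randomly chosen case with the event receives a higher predicted score than a randomly chosen case without it. The sketch below uses this pairwise formulation; the function name and the data are hypothetical.

```python
def auroc(y, scores):
    """Share of (event, non-event) pairs ranked correctly; ties count one half."""
    positives = [s for s, yi in zip(scores, y) if yi == 1]
    negatives = [s for s, yi in zip(scores, y) if yi == 0]
    wins = 0.0
    for sp in positives:
        for sn in negatives:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical outcomes and predicted probabilities.
y = [0, 0, 1, 0, 1, 1]
scores = [0.10, 0.40, 0.35, 0.80, 0.70, 0.90]
auc = auroc(y, scores)
```

A value of 0.5 corresponds to a ranking no better than chance and 1.0 to a perfect separation of the two groups. The double loop is quadratic in the number of cases, so it only suits small data sets.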

Alternatives and extensions

As an (essentially equivalent) alternative, the probit model can be used, which is based on a normal distribution.
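
How close the two models are can be seen by comparing the logistic distribution function with the standard normal one after rescaling the argument. The constant 1.702 used below is a commonly quoted rescaling factor and an assumption of this sketch; it does not come from the text.

```python
import math


def logistic_cdf(x):
    """Distribution function behind the logit model."""
    return 1.0 / (1.0 + math.exp(-x))


def normal_cdf(x):
    """Standard normal distribution function behind the probit model."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


SCALE = 1.702  # assumed rescaling constant, not taken from the text

# Largest gap between the two curves on a grid from -6 to 6.
grid = [i / 100.0 for i in range(-600, 601)]
max_gap = max(abs(normal_cdf(x) - logistic_cdf(SCALE * x)) for x in grid)
```

On this grid the two curves differ by less than one percentage point, which is why logit and probit estimates usually lead to very similar predicted probabilities even though the coefficients are on different scales.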

Logistic regression (and the probit model) can be extended to a dependent variable with more than two discrete categories (see multinomial logistic regression or ordered logistic regression).

Literature

- Hans-Jürgen Andreß, J.-A. Hagenaars, Steffen Kühnel: Analyse von Tabellen und kategorialen Daten. Springer, Berlin 1997, ISBN 3-540-62515-1.

- Dieter Urban: Logit-Analyse. Lucius & Lucius, Stuttgart 1998, ISBN 3-8282-4306-1.

- David Hosmer, Stanley Lemeshow: Applied Logistic Regression. 2nd Edition. Wiley, New York 2000, ISBN 0-471-35632-8.

- Alan Agresti: Categorical Data Analysis. 2nd Edition. Wiley, New York 2002, ISBN 0-471-36093-7.

- J. Scott Long: Regression Models for Categorical and Limited Dependent Variables. Sage 1997, ISBN 0-8039-7374-8.

References

- Paul David Allison: Logistic Regression Using the SAS System: Theory and Application. SAS Institute, Cary NC 1999, p. 48.