Quality engineering

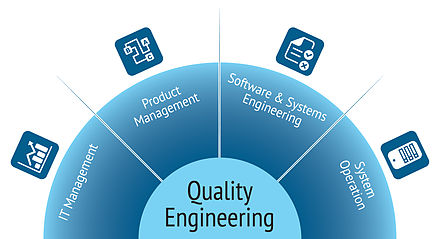

Quality engineering describes the management, development, operation and evolution of IT systems and enterprise architectures to a high quality standard.

Description

IT services are increasingly embedded in workflows that cross the boundaries of platforms, devices and organizations, for example when integrating cloud services, cyber-physical systems or business-to-business workflows. In such contexts, a comprehensive treatment of quality properties, in the form of quality engineering, has become necessary.

This requires an "end-to-end" consideration of quality, from management through to operation. Quality engineering integrates methods and tools from enterprise architecture management, software product management, IT service management, software engineering and systems engineering, as well as software quality management and IT security management. It therefore goes beyond the classic disciplines of software engineering, IT management or software product management, because it combines management aspects (e.g. strategy, risk management, business process view, knowledge and information management, operational performance management), design aspects (e.g. the software development process, requirements analysis, software testing) and operational aspects (e.g. configuration, monitoring, IT security management). In many application domains, quality engineering is closely tied to compliance with legal and business requirements, contractual obligations and standards. Among the quality characteristics, the reliability and safety of IT services play a dominant role.

In quality engineering, quality goals are achieved through a cooperative process. This process requires the interaction of largely independent actors whose knowledge is based on different sources of information.

Quality goals

Quality goals describe basic requirements for the quality of the software. In quality engineering, they often address the quality characteristics of availability, security, safety, reliability, performance and usability. With the help of quality models such as ISO/IEC 25000 and methods such as the Goal Question Metric approach, measurable key figures can be assigned to the quality goals. This makes it possible to measure the degree to which quality goals are achieved, which is a central component of the quality engineering process and a prerequisite for its continuous control and management. To measure quality goals effectively and efficiently, it is advocated that manually determined key figures (e.g. from expert assessments or reviews) and automatically determined metrics (e.g. from static analysis of source code or automated regression tests) be integrated as a basis for decision-making.
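The assignment of key figures to quality goals can be illustrated with a minimal sketch in the spirit of the Goal Question Metric approach. The class names, the normalization of all values to the range 0 to 1, the weighted mean and the example figures are assumptions made for illustration only; they are not prescribed by ISO/IEC 25000 or by the approach itself.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """A key figure normalized to [0, 1], where 1 is best (assumed convention)."""
    name: str
    value: float
    source: str          # "manual" (e.g. expert review) or "automatic" (e.g. tests)
    weight: float = 1.0


@dataclass
class Question:
    """A question that refines a goal and is answered by one or more metrics."""
    text: str
    metrics: list[Metric] = field(default_factory=list)

    def score(self) -> float:
        # Weighted mean of all metrics, manual and automatic alike.
        total_weight = sum(m.weight for m in self.metrics)
        if total_weight <= 0:
            raise ValueError(f"no weighted metrics for question: {self.text!r}")
        return sum(m.value * m.weight for m in self.metrics) / total_weight


@dataclass
class Goal:
    """A quality goal with a target degree of achievement in [0, 1]."""
    name: str
    target: float
    questions: list[Question] = field(default_factory=list)

    def achievement(self) -> float:
        # Unweighted mean over the questions (an assumption of this sketch).
        if not self.questions:
            raise ValueError(f"no questions defined for goal: {self.name!r}")
        return sum(q.score() for q in self.questions) / len(self.questions)

    def is_met(self) -> bool:
        return self.achievement() >= self.target


# Hypothetical example data: two automatic metrics and one manual rating.
reliability = Goal(
    name="reliability",
    target=0.6,
    questions=[
        Question(
            "Does the service behave correctly after changes?",
            [
                Metric("regression_pass_rate", 1.0, "automatic"),
                Metric("static_analysis_score", 0.5, "automatic"),
            ],
        ),
        Question(
            "Do reviewers consider the error handling adequate?",
            [Metric("expert_review_rating", 0.5, "manual")],
        ),
    ],
)

print(reliability.achievement(), reliability.is_met())
```

In practice the aggregation rule would itself be a decision for the actors involved; the mean used here is only the simplest choice that lets manual and automatic figures enter the same calculation.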

Actors

The end-to-end approach to quality management involves many actors who differ in their responsibilities and tasks, their expertise and their position within the organization.

The following roles are involved in quality engineering:

- Business architect

- IT architect

- Security officer

- Requirements engineer

- Software quality manager

- Test manager

- Project manager

- Product manager

- Security architect

Typically, these roles are distributed across geographical and organizational boundaries. Quality engineering therefore requires measures that coordinate the heterogeneous tasks of these roles, consolidate and synchronize the data and information needed to perform them, and make this information accessible to each actor in a suitable form.

Knowledge management

Knowledge management is an important task within quality engineering. The quality engineering knowledge base comprises a wide variety of structured and unstructured data, ranging from code repositories, requirements specifications, standards, test reports and enterprise architecture models to system configurations and runtime logs. Software and system models play a major role in representing this knowledge. The data in the knowledge base is generated, processed and made accessible both manually and (semi-)automatically with the aid of tools, in a geographically, organizationally and technically distributed context. The primary goal is that quality assurance tasks can be carried out as effectively as possible, that risks are recognized at an early stage and that cooperation between the actors is suitably supported.

This results in the following requirements for a quality engineering knowledge base:

- The knowledge is available in the required quality. Important quality criteria are consistency and timeliness, but also completeness and adequate granularity, based on the tasks of the assigned actors.

- The knowledge is linked and traceable to support stakeholder interaction and data analysis. Traceability refers both to the linking of data across abstraction levels (e.g. linking requirements to the services that realize them) and to traceability over time, which requires suitable versioning concepts. The data can be linked either manually or (semi-)automatically.

- The information must be available in a form that matches the domain knowledge of the assigned actors. The knowledge base must therefore provide suitable mechanisms for information transformation (e.g. aggregation) and visualization. The RACI concept, for example, is a suitable model for assigning actors to information in a quality engineering knowledge base.

- In contexts in which actors from different organizations or levels interact with one another, the quality engineering knowledge base must provide mechanisms that ensure confidentiality and integrity.

- Quality engineering knowledge bases offer a broad field for analysis and information retrieval in support of the actors' quality assurance tasks.
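Two of the requirements above, traceability (across abstraction levels and over time) and the assignment of actors to information via RACI, can be sketched with a small in-memory structure. Everything in this sketch is a hypothetical illustration: the class and method names, the artifact identifiers and the role assignments are assumptions, and a real knowledge base would additionally need persistence and access control.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    id: str
    kind: str        # e.g. "requirement", "service", "test_report"
    version: int
    summary: str


class KnowledgeBase:
    def __init__(self) -> None:
        self.history: dict[str, list[Artifact]] = {}   # all versions per artifact id
        self.links: set[tuple[str, str]] = set()       # (source id, target id)
        self.raci: dict[tuple[str, str], str] = {}     # (role, artifact id) -> R/A/C/I

    def store(self, artifact: Artifact) -> None:
        # Keep every version so that changes remain traceable over time.
        self.history.setdefault(artifact.id, []).append(artifact)

    def latest(self, artifact_id: str) -> Artifact:
        return max(self.history[artifact_id], key=lambda a: a.version)

    def link(self, source_id: str, target_id: str) -> None:
        # Only link artifacts that are actually known to the knowledge base.
        for artifact_id in (source_id, target_id):
            if artifact_id not in self.history:
                raise KeyError(f"unknown artifact: {artifact_id}")
        self.links.add((source_id, target_id))

    def realized_by(self, requirement_id: str) -> list[str]:
        # Follow trace links one abstraction level down.
        return sorted(t for s, t in self.links if s == requirement_id)

    def assign(self, role: str, artifact_id: str, letter: str) -> None:
        if letter not in {"R", "A", "C", "I"}:
            raise ValueError(f"not a RACI letter: {letter!r}")
        self.raci[(role, artifact_id)] = letter

    def view_for(self, role: str) -> dict[str, str]:
        # Artifact id -> RACI letter for everything assigned to this role.
        return {aid: letter
                for (r, aid), letter in sorted(self.raci.items())
                if r == role}


# Hypothetical example data.
kb = KnowledgeBase()
kb.store(Artifact("REQ-1", "requirement", 1, "Login completes within 2 s"))
kb.store(Artifact("REQ-1", "requirement", 2, "Login completes within 1 s"))
kb.store(Artifact("SVC-auth", "service", 1, "Authentication service"))
kb.link("REQ-1", "SVC-auth")
kb.assign("Requirements engineer", "REQ-1", "R")
kb.assign("Test manager", "REQ-1", "I")
kb.assign("IT architect", "SVC-auth", "A")

print(kb.latest("REQ-1").summary)
print(kb.realized_by("REQ-1"))
print(kb.view_for("Test manager"))
```

The role-specific view is the hook for the transformation and visualization mechanisms mentioned above: each actor sees only the artifacts assigned to that role, together with the kind of involvement expected.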

Cooperative processes

The quality engineering process includes all manual and (semi-)automated tasks for the elicitation, fulfillment and measurement of quality properties in the selected context. This process is highly cooperative in the sense that it requires the interaction of largely independent actors.

The quality engineering process typically has to integrate existing sub-processes, which can include strongly structured processes such as IT service management as well as weakly structured processes such as agile software development. Another important aspect is a change-driven approach, in which change events such as changed requirements are handled in the local context of the information and actors affected by the change. Methods and tools that support change propagation and change handling are a prerequisite for this.
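Change propagation of this kind can be sketched as a traversal of dependency links: starting from the changed item, all dependent artifacts are collected and grouped by the actor responsible for them. The function names, the dependency data and the ownership mapping below are hypothetical and serve only to illustrate the idea.

```python
from collections import deque


def affected_artifacts(changed_id: str, dependents: dict[str, list[str]]) -> list[str]:
    """Return all artifacts reachable from the changed one, in breadth-first order."""
    seen = {changed_id}
    queue = deque([changed_id])
    affected: list[str] = []
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:      # guard against cycles and duplicates
                seen.add(dependent)
                affected.append(dependent)
                queue.append(dependent)
    return affected


def tasks_per_actor(affected: list[str], owners: dict[str, str]) -> dict[str, list[str]]:
    """Group affected artifacts by responsible actor so each one gets a local task list."""
    tasks: dict[str, list[str]] = {}
    for artifact_id in affected:
        actor = owners.get(artifact_id, "unassigned")
        tasks.setdefault(actor, []).append(artifact_id)
    return tasks


# Hypothetical example data: artifact -> artifacts that depend on it.
dependents = {
    "REQ-1": ["SVC-auth"],
    "SVC-auth": ["TEST-auth", "CFG-gateway"],
    "REQ-2": ["SVC-billing"],
}
owners = {
    "SVC-auth": "IT architect",
    "TEST-auth": "Test manager",
    "CFG-gateway": "Security officer",
}

affected = affected_artifacts("REQ-1", dependents)
tasks = tasks_per_actor(affected, owners)
print(affected)
print(tasks)
```

A change to `REQ-1` touches only the authentication service and the artifacts depending on it; `SVC-billing` and its owner are not involved, which is what handling a change in its local context means here.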

The aim of an efficient quality engineering process is to coordinate automated and manual quality assurance tasks. Examples of manual tasks are code reviews and the elicitation of quality goals; examples of tasks that can be automated are regression tests and the collection of code metrics. The quality engineering process (or its sub-processes) can also be supported by tools, e.g. ticket systems or security management tools.

Web links

- Txture is a tool for IT architecture documentation and analysis.

- mbeddr is a set of integrated and extensible languages for embedded software engineering, together with an integrated development environment (IDE).

References

- Ruth Breu, Annie Kuntzmann-Combelles, Michael Felderer: New Perspectives on Software Quality. IEEE Computer Society. Pp. 32-38. January-February 2014. Retrieved April 2, 2014.

- Ruth Breu, Berthold Agreiter, Matthias Farwick, Michael Felderer, Michael Hafner, Frank Innerhofer-Oberperfler: Living Models - Ten Principles for Change-Driven Software Engineering. ISCAS. Pp. 267-290. 2011. Retrieved April 16, 2014.

- Michael Felderer, Christian Haisjackl, Ruth Breu, Johannes Motz: Integrating Manual and Automatic Risk Assessment for Risk-Based Testing. Springer Berlin Heidelberg. Pp. 159-180. 2012. Retrieved April 16, 2014.

- Michael Kläs, Frank Elberzhager, Jürgen Münch, Klaus Hartjes, Olaf von Graevemeyer: Transparent combination of expert and measurement data for defect prediction: an industrial case study. ACM New York, USA. Pp. 119-128. 2. Retrieved April 8, 2014.

- Jacek Czerwonka, Nachiappan Nagappan, Wolfram Schulte, Brendan Murphy: CODEMINE: Building a Software Development Data Analytics Platform at Microsoft. IEEE Computer Society. Pp. 64-71. July-August 2013. Retrieved April 7, 2014.