Transinformation

Transinformation, or mutual information, is a quantity from information theory that indicates the strength of the statistical dependence between two random variables. It is also called synentropy. In contrast to the synentropy of a first-order Markov source, which expresses the redundancy of the source and should therefore be minimal, the synentropy of a channel represents the average information content that passes from sender to receiver and should therefore be maximal.

The term relative entropy is occasionally used for it, but that term properly refers to the Kullback-Leibler divergence.

The transinformation is closely related to entropy and conditional entropy. It can be calculated in the following equivalent ways:

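Definition as the difference between source entropy and equivocation, or between receiver entropy and misinformation:

$$I(X;Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X)$$

Definition via probabilities:

$$I(X;Y) = \sum_{x} \sum_{y} p(x,y) \, \log_2 \frac{p(x,y)}{p(x)\,p(y)}$$

Definition via the Kullback-Leibler divergence:

$$I(X;Y) = D_{\mathrm{KL}}\bigl(p(x,y) \,\|\, p(x)\,p(y)\bigr)$$

Definition via the expected value:

$$I(X;Y) = \operatorname{E}\left[\log_2 \frac{p(X,Y)}{p(X)\,p(Y)}\right]$$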
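The equivalence of these definitions can be checked numerically. The following is a minimal sketch, not a reference implementation: the function names and the example joint distribution are assumptions chosen for illustration. It computes the transinformation once from the joint and marginal probabilities and once as source entropy minus equivocation.

```python
from math import log2

def marginals(joint):
    """Return the marginal distributions p(x) and p(y) of a joint table."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py

def entropy(dist):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * log2(p) for p in dist if p > 0)

def mutual_information(joint):
    """I(X;Y) in bits, computed from the joint probabilities p(x, y)."""
    px, py = marginals(joint)
    return sum(
        pxy * log2(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

def equivocation(joint):
    """Conditional entropy H(X|Y) = H(X,Y) - H(Y) in bits."""
    _, py = marginals(joint)
    flat = [p for row in joint for p in row]
    return entropy(flat) - entropy(py)

# Hypothetical joint distribution: rows index x, columns index y.
joint = [[0.4, 0.1],
         [0.1, 0.4]]

px, py = marginals(joint)
i_direct = mutual_information(joint)            # definition via probabilities
i_entropy = entropy(px) - equivocation(joint)   # H(X) - H(X|Y)
print(round(i_direct, 4), round(i_entropy, 4))
```

For this table both routes give roughly 0.278 bits, which is well below the 1 bit of source entropy because the two variables are only partially dependent.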

If the transinformation vanishes, the two random variables are statistically independent. The transinformation is maximal when one random variable can be computed completely from the other.
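Both extreme cases can be illustrated with small joint distributions. The sketch below is illustrative only; the helper name `mutual_information` and the two example tables are assumptions, not part of the original text. The first table is the product of its marginals (independence), the second describes a variable Y that is an exact copy of X.

```python
from math import log2

def mutual_information(joint):
    """I(X;Y) in bits from a joint probability table (rows: x, columns: y)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(
        pxy * log2(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

# Independent case: p(x, y) = p(x) * p(y) for every cell.
p_x = [0.3, 0.7]
p_y = [0.6, 0.4]
independent = [[a * b for b in p_y] for a in p_x]

# Deterministic case: Y always equals X, with X uniform on two values.
deterministic = [[0.5, 0.0],
                 [0.0, 0.5]]

mi_independent = mutual_information(independent)
mi_deterministic = mutual_information(deterministic)
print(mi_independent, mi_deterministic)
```

In the independent case the result is zero up to floating-point rounding. In the deterministic case it equals the entropy of X, here 1 bit, which is the largest value the transinformation can take for this source.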

Transinformation builds on the definition of information that Claude Shannon introduced with the help of entropy (uncertainty, average information content). As the transinformation increases, the uncertainty about one random variable decreases, provided that the other is known. When the transinformation is at its maximum, that uncertainty vanishes. As the formal definition shows, knowing one random variable reduces the uncertainty about the other, and the transinformation expresses the size of this reduction.

Transinformation plays a role in data transmission, for example. It can be used to determine the capacity of a channel, which is the maximum of the transinformation over all input distributions.
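As an illustration of how channel capacity follows from transinformation, the sketch below treats a binary symmetric channel, a standard textbook model that the text above does not name explicitly. The crossover probability `eps`, the grid search, and all function names are assumptions made for this example. The code maximizes I(X;Y) = H(Y) - H(Y|X) over the input probability and compares the result with the known closed form 1 - H_b(eps).

```python
from math import log2

def binary_entropy(p):
    """Entropy in bits of a binary variable with P(1) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)

def bsc_mutual_information(q, eps):
    """I(X;Y) for input P(X=1) = q over a binary symmetric channel.

    Uses I(X;Y) = H(Y) - H(Y|X); for this channel H(Y|X) = H_b(eps).
    """
    p_y1 = q * (1 - eps) + (1 - q) * eps
    return binary_entropy(p_y1) - binary_entropy(eps)

def bsc_capacity_grid(eps, steps=1000):
    """Approximate the capacity by a grid search over the input probability."""
    return max(bsc_mutual_information(k / steps, eps) for k in range(steps + 1))

eps = 0.1  # hypothetical crossover probability
capacity = bsc_capacity_grid(eps)
closed_form = 1 - binary_entropy(eps)
print(round(capacity, 4), round(closed_form, 4))
```

The grid search finds its maximum at the uniform input distribution, where it agrees with the closed form. A grid search is used here only because it is easy to follow; for general channels an iterative method such as the Blahut-Arimoto algorithm would be the usual choice.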

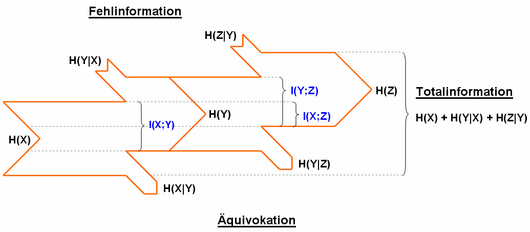

Correspondingly, an entropy H(Z) can also depend on two different entropies that are in turn dependent on each other:

The specialist literature uses various terms. The equivocation is also referred to as "loss entropy" and the misinformation as "irrelevance". Transinformation is also referred to as "transmission" or "average transinformation content".

Literature

- Martin Werner: Information and Coding. Basics and Applications. 2nd edition. Vieweg + Teubner Verlag, Wiesbaden 2008, ISBN 978-3-8348-0232-3.

- Herbert Schneider-Obermann: Basic knowledge of electrical, digital and information technology. 1st edition. Friedrich Vieweg & Sohn Verlag / GWV Fachverlage GmbH, Wiesbaden 2006, ISBN 978-3-528-03979-0.

- D. Krönig, M. Lang (Eds.): Physics and Computer Science - Computer Science and Physics. Springer Verlag, Berlin / Heidelberg 1991, ISBN 978-3-540-55298-7.

References

- R. Lopez De Mantaras: A Distance-Based Attribute Selection Measure for Decision Tree Induction. In: Machine Learning. Vol. 6, no. 1, January 1, 1991, ISSN 0885-6125, pp. 81-92, doi:10.1023/A:1022694001379 (springer.com [accessed May 14, 2016]).