Explainable Artificial Intelligence

Explainable Artificial Intelligence (XAI; also explainable machine learning) is a neologism that has been used in research and discussion about machine learning since around 2004.

XAI is intended to make clear how dynamic and non-linearly programmed systems, e.g. artificial neural networks, deep learning systems (reinforcement learning) and genetic algorithms, arrive at their results. XAI is a technical discipline that develops and provides operational methods for explaining AI systems, for example as part of the implementation of the General Data Protection Regulation (GDPR) of the European Union.

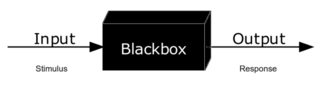

Without XAI, machine learning is a black box process in which introspection of the dynamic system is unknown or impossible and the user has no means of checking how the software arrives at its solution to a problem.

Definition

There is currently no generally accepted definition of XAI.

The XAI program of the Defense Advanced Research Projects Agency (DARPA), whose approach can be shown schematically, defines its goals with the following requirements:

- Produce more explainable models while maintaining high learning performance (predictive accuracy).

- Enable human users to understand the emerging generation of artificially intelligent partners, to trust them appropriately, and to deal with them effectively.

History

While the term “XAI” is still relatively new - the concept was mentioned as early as 2004 - the deliberate effort to fully understand how machine learning systems proceed has a longer history. Researchers have been interested in deriving rules from trained neural networks since the 1990s, and scientists working on clinical expert systems that give medical professionals neural-network-based decision support have tried to develop dynamic explanatory systems that make these technologies more trustworthy in practice.

Lately, however, the focus has shifted to explaining machine learning and AI, and making them understandable, to decision-makers rather than to the designers or direct users of decision-making systems. Since DARPA introduced its program in 2016, new initiatives have attempted to address the problem of algorithmic accountability and to create transparency (a glass box process) about how technologies in this area work:

- April 25, 2017: Nvidia published Explaining How a Deep Neural Network Trained with End-to-End Learning Steers a Car.

- July 13, 2017: Accenture recommended Responsible AI: Why we need Explainable AI.

Methods

Different methods are used for XAI:

- Layer-wise relevance propagation (LRP) was first described in 2015 and is a technique for determining which features of a given input vector contribute most to the output of a neural network.

- Counterfactual method: After a result has been obtained, the input data (text, images, diagrams, etc.) are deliberately changed, and one observes how this changes the output.

- Local interpretable model-agnostic explanations (LIME)

- Generalized additive model (GAM)

- Rationalization: Especially with AI-based robots, the machine is enabled to “verbally explain” its own actions.
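The counterfactual method from the list above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `black_box_score` is a hypothetical stand-in for an opaque trained model, and the feature names, weights and perturbation sizes are invented. The sketch changes one input at a time and records how far the output moves from the baseline.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]


def black_box_score(x: Features) -> float:
    """Hypothetical opaque model returning a score in [0, 1].

    It stands in for any trained system whose internals are not inspected;
    the formula is invented purely for this illustration.
    """
    raw = (
        0.5 * x["income"] / 100_000
        + 0.4 * (1.0 - x["debt_ratio"])
        - 0.1 * x["late_payments"]
    )
    return max(0.0, min(1.0, raw))


def counterfactual_effects(
    model: Callable[[Features], float],
    x: Features,
    deltas: Features,
) -> List[Tuple[str, float]]:
    """Change one input at a time and measure the change in the output.

    Returns (feature name, output change) pairs, largest absolute change first.
    The original input `x` is left unmodified.
    """
    baseline = model(x)
    effects = []
    for name, delta in deltas.items():
        perturbed = dict(x)       # copy, so only one feature differs
        perturbed[name] += delta  # the deliberate, targeted change
        effects.append((name, model(perturbed) - baseline))
    effects.sort(key=lambda item: abs(item[1]), reverse=True)
    return effects


if __name__ == "__main__":
    applicant = {"income": 40_000.0, "debt_ratio": 0.5, "late_payments": 2.0}
    changes = {"income": 10_000.0, "debt_ratio": -0.1, "late_payments": -1.0}
    for feature, change in counterfactual_effects(black_box_score, applicant, changes):
        print(f"{feature}: output changes by {change:+.3f}")
```

The ranking only describes the model's behavior around this one input and for these particular perturbations; a different starting point or step size may yield a different order.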

Application examples

Certain industries and service sectors are particularly affected by XAI requirements, because the ever more intensive use of AI systems there increasingly shifts ("delegates") accountability to the software and its sometimes surprising results.

The following areas are particularly in focus (alphabetical listing):

- Antenna design

- High-frequency trading (algorithmic trading)

- Medical diagnostics

- Neural network imaging

- Self-driving motor vehicles

- Training of military strategies

International exchange on the topic and demands

As regulators, agencies and general users come to depend on dynamic AI-based systems, clearer accountability for decision-making will be needed to ensure trust and transparency.

The Workshop on Explainable Artificial Intelligence (XAI) at the International Joint Conference on Artificial Intelligence in 2017 is evidence that this legitimate demand is gaining momentum.

With regard to AI systems, the Australian writer and researcher Kate Crawford and her colleague Meredith Whittaker (AI Now Institute) demand that "core public agencies, such as those responsible for criminal justice, healthcare, welfare, and education [...] should no longer use 'black box' AI and algorithmic systems". Beyond the purely technical measures and methods for explaining such systems, they also call for binding ethical standards, such as those used in the pharmaceutical industry and clinical research.

Web links

- Explainable AI: Making machines understandable for humans. Retrieved November 2, 2017.

- Explaining How End-to-End Deep Learning Steers a Self-Driving Car. May 23, 2017. Retrieved November 2, 2017.

- New isn't on its way. We're applying it right now. October 25, 2016. Retrieved November 2, 2017.

- David Alvarez-Melis and Tommi S. Jaakkola: A causal framework for explaining the predictions of black-box sequence-to-sequence models, arXiv:1707.01943, July 6, 2017.

- TEDx Talk by Peter Haas on the need for transparent AI systems: The Real Reason to be Afraid of Artificial Intelligence (in English)
