Collective operations

Collective operations are basic building blocks for interaction patterns that are often used in SPMD algorithms and parallel programming . This creates the need to perform these operations efficiently.

The Message Passing Interface (MPI) provides a realization of the collective operations.

Definitions

In asymptotic runtime analysis , let the latency , the communication time per word , the number of processor units and the size of the input per node . For operations that start with messages on different processor units, we assume that all local messages have the same size. To denote individual processor units, we use .

An upper limit can be determined from the specified transit times in the event that the initial messages have different sizes. Processor unit got a message of size . Then you sit down .

The model of a distributed memory is assumed. The concepts presented are similar in the shared memory model. With shared memory, however, there is the possibility that the hardware supports operations such as broadcast directly. This support opens up additional possibilities in the development of algorithms.

Broadcast

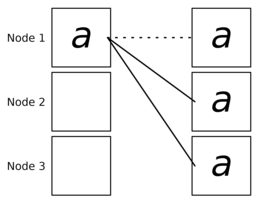

The broadcast pattern is used to distribute data from one processor unit to all other processor units. One use case of broadcast is to distribute inputs and global variables in SPMD parallel programs. The broadcast can be viewed as an inverse reduction . At the beginning, the root for a fixed contains the message . Here we assume to make the explanation simpler. During the broadcast, it is sent to the remaining processor units so that it is ultimately available on all processor units.

Since the trivial implementation, which is transmitted in iterations directly from to , does not perform well enough, an approach that uses the ' divide-and-conquer ' principle is used. If there is a power of two, a binomial tree can be used as the underlying structure. Assume processor unit is responsible for forwarding the message to processor units . Then send the message to with . The responsibility for the transmission of to processor units with indices is transferred to , in the following it is only responsible for the transmission of to the processor units with indices . The performance of the binomial tree broadcast is not good for long messages, since a processor unit that receives can only forward the message when it has been completely received. Pipelining is used to compensate for this. It is broken down into an array of packages of the size . The packets are then distributed one after the other by broadcast, which allows better utilization of the communication network.

Broadcast with pipelining on a balanced binary tree is possible in runtime .

reduction

The reduction pattern is used to collect data or partial results from various processor units and combine them into a global result. Reduction can be understood as an inverse operation to broadcast ( #Broadcast ). Let be an associative operator , the processing unit on which the result should be stored. Then the reduction calculates the result and stores it on the processor unit . Some algorithms require that there is also commutative . Common operators are .

Since reduction can be viewed as an inverse broadcast, the same boundary conditions apply for an implementation. In order to enable pipelining it is important that the message can be represented as a vector of smaller objects so that a component-wise reduction is possible.

Reduction with pipelining on a balanced binary tree is possible in time .

All-reduce

The All-reduce pattern is used when the result of a reduction ( #reduction ) is to be made available to all processor units. At the beginning, the message is on the processor unit . After the all-reduce, the result is available for all . Conceptually, all-reduce corresponds to a reduction with a subsequent broadcast ( #Broadcast ). All-reduce must also be associative.

The same boundary conditions play a role for long messages. For short messages, the latency can be improved by using a hypercube topology, provided it is a power of two.

We see that all-reduce in is possible, since reduction and broadcast are both possible in.

Prefix Sum / Scan

The prefix sum or scan pattern is used to collect data or partial results from several processor units and to calculate intermediate results using an operator . The intermediate results are stored on the individual processor units. The prefix sum can be understood as a generalization of the pattern reduction ( #reduction ). As in Reduction and All-reduce ( # All-reduce ), the operator requires at least associativity, although some algorithms also require commutativity. Common surgeries are .

After completing the prefix sum, the processor unit contains the message . In the special case of the exclusive prefix sum, it is calculated instead . Some algorithms also require that, in addition to the prefix sum, the complete sum is also stored on each processor unit, i.e. that the prefix sum and all-reduce are combined.

For short messages, an optimal implementation can be achieved using a hypercube topology. The hypercube is not effective for long messages, since all processor units are active in every step and pipelining cannot therefore be used. For long messages, a binary tree in combination with pipelining is better suited instead . The prefix sum is divided into an upward and a downward phase. The reduction takes place in the upward phase. The downward phase is similar to broadcast ( #Broadcast ). The prefix sum is calculated by sending the nodes different data to their left and right nodes. Pipelining is used as with reduction and broadcast.

Prefix sum in time is possible on a binary tree .

barrier

The barrier is a generalization of the concept of the barrier on distributed computing. When a processing unit calls the barrier, it waits until all other processing units have also called the barrier before continuing in the program. The barrier is therefore a possibility of global synchronization .

One way to implement the barrier is to call All-reduce ( # All-reduce ) with an empty operand. This reduces the message size to a constant factor and only the latency term remains in the runtime analysis. Since the running time for all-reduce is, the running time of the barrier is in .

Gather

The Gather pattern is used to collect data from all processor units and combine them on a single processor unit. Is located at the beginning of the message to processor unit , it shall on the root after Gather the message is stored. Conceptually, gather corresponds to reduction ( #reduction ) where the operator is the concatenation of the messages. Concatenation is associative and thus fulfills the requirement of reduction.

By using the binomial tree algorithm of reduction, a running time of . The running time is similar to the running time of the reduction, except for an additional factor that was multiplied by the term . This factor comes from the fact that the size of the messages increases with each step. This is due to the concatenation as an operator and is in contrast to operators such as , which require a constant message size over all steps.

All-gather

The all-gather pattern is used to collect data from all processor units on all processor units. Given a message on the processor unit , the message should be transferred to all processor units.

All-gather can be viewed in different ways. On the one hand, it corresponds to the pattern all-reduce with the operation concatenation, just as Gather can be seen as reduce with concatenation. On the other hand, it corresponds to the pattern gather with subsequent broadcast of the aggregated message with size . We see that all-gather can be done in runtime .

Scatter

The scatter pattern is used to distribute data from a processor unit to all processor units. It differs from broadcast in that not all processor units receive the same message. Instead, each processor unit receives a cutout. The message on the root should therefore be distributed in such a way that the message is then available on the processor unit . Scatter can be seen as an inverted gather ( #Gather ).

The same considerations can be made for scatters as for gathers. The result is a runtime in .

All-to-all

The all-to-all pattern represents the most general communication pattern. For is the message that is present on the processor unit at the beginning and is on the processor unit after the operation . So each processor unit has individual messages for all other processor units. All other patterns that do not require surgery can be expressed using all-to-all. For example, broadcast can be emulated in which the root distributes the message by setting and blank message for .

If the network can be seen as a complete graph , a runtime in is possible. All-to-all is implemented through rounds of message exchanges in pairs. If a power of two, may in this round of nodes with the node , to communicate.

If the message size is small and the latency dominates the runtime, a runtime in can be achieved using a hypercube .

Term overview

This table gives an overview of the best possible asymptotic runtimes, provided the network topology is free to choose .

Example topologies for an optimal runtime are binary tree , binomial tree and hypercube , depending on the algorithm .

In practice, the algorithms have to be adapted to the actually available topologies, e.g. fat tree , grid, dragonfly.

For some operations, the choice of the optimal algorithm can depend on the input size. For example, broadcast is optimal for short messages on a binomial tree, while for long messages, communication using pipelining is optimal on a binary tree.

The name of the respective pattern is in the Name column of the table . The # Sender column lists the number of processor units that initially have a message to be distributed. # Receiver lists the number of nodes that have received a message. # Messages shows the total number of messages to be delivered. Calculation lists whether there is a calculation in addition to communication. Runtime complexity lists the asymptotic runtime of an optimal implementation with a free choice of topology.

| Surname | # Channel | # Receiver | # News | calculation | Runtime complexity |

|---|---|---|---|---|---|

| Broadcast | No | ||||

| reduction | Yes | ||||

| All-reduce | Yes | ||||

| Prefix Sum / Scan | Yes | ||||

| barrier | No | ||||

| Gather | No | ||||

| All-gather | No | ||||

| Scatter | No | ||||

| All-to-all | No | or |

literature

- Peter Sanders, Kurt Mehlhorn, Martin Dietzfelbinger, Roman Dementiev: Sequential and Parallel Algorithms and Data Structures - The Basic Toolbox . Springer Nature Switzerland AG, 2019, ISBN 978-3-030-25208-3 .

Individual evidence

- ^ Intercommunicator Collective Operations . The Message Passing Interface (MPI) standard, chapter 7.3.1. Mathematics and Computer Science Division, Argonne National Laboratory .

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, p. 395

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 396–401

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 402–403

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 403–404

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 404-406

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, p. 408

- ↑ a b Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 412–413

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, p. 413

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, pp. 413-418

- ↑ Sanders, Mehlhorn, Dietzfelbinger, Dementiev 2019, p. 394