Message Passing Interface

| MPI: basic data | |
|---|---|
| Developer | MPI Forum |
| Current version | 3.1 (June 4, 2015) |
| Operating system | Linux, Unix, Microsoft Windows NT, macOS |
| Category | API |
| German-language | No |

Message Passing Interface (MPI) is a standard that describes the exchange of messages in parallel computations on distributed computer systems. It defines a collection of operations and their semantics, i.e. a programming interface, but no specific protocol and no implementation.

An MPI application usually consists of several communicating processes, all of which are started in parallel at the beginning of program execution. These processes then work together on a problem and exchange data through messages that are explicitly sent from one process to another. One advantage of this principle is that the exchange of messages also works across computer boundaries. Parallel MPI programs can therefore be executed both on PC clusters (where messages are exchanged, e.g., via TCP) and on dedicated parallel computers (where messages are exchanged via a high-speed network such as InfiniBand or Myrinet, or via shared main memory).

History

Development of the MPI 1.0 standard began in 1992 with a series of drafts (November 1992, February 1993, November 1993). It built on older communication libraries such as PVM, PARMACS, P4, Chameleon and Zipcode. The standard appeared on May 5, 1994 and covered:

- Point-to-point communication

- Collective (global) communication

- Groups, contexts and communicators

- Environment management

- Profiling interface

- Language bindings for C and Fortran 77

In June 1995 errors were corrected with MPI 1.1.

On July 18, 1997, the stable version MPI 1.2 was published, which, in addition to further bug fixes, allows version identification. It is also known as MPI-1.

On May 30, 2008 MPI 1.3 was released with further bug fixes and clarifications.

At the same time as version 1.2, the MPI 2.0 standard was adopted on July 18, 1997. This is also known as MPI-2 and includes the following extensions:

- parallel file input / output

- dynamic process management

- access to the memory of other processes

- additional language bindings for C++ and Fortran 90

On June 23, 2008, the previously separate parts MPI-1 and MPI-2 were combined into a common document and published as MPI 2.1. MPI Standard Version 2.2 is from September 4th, 2009 and contains further improvements and minor extensions.

On September 21, 2012 the MPI Forum published MPI-3, which adds new functionality such as non-blocking collectives, an improved one-sided communication model (RMA, Remote Memory Access), a new Fortran interface, topology-aware communication and non-blocking parallel input and output.

One of the main developers is Bill Gropp.

Point-to-point communication

The most basic type of communication takes place between two processes: a sending process transfers information to a receiving process. In MPI, this information is packed into so-called messages, which are described by the parameters buffer, count and datatype. A matching receive operation must exist for every send operation. Since the mere order in which operations are processed is not always sufficient to pair them up in parallel applications, MPI also offers the tag parameter: a send and a receive operation match only if this value is identical for both.

Blocking sending and receiving

The simplest operations for point-to-point communication are send and receive:

int MPI_Send (void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

- buf: pointer to the send buffer

- count: number of elements in the send buffer

- datatype: data type of the elements in the send buffer

- dest: rank of the target process

- tag: tag of the message

- comm: communicator of the process group

int MPI_Recv (void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status* status)

- buf: pointer to a receive buffer of sufficient size

- count: number of elements in the receive buffer

- datatype: data type of the elements in the receive buffer

- source: rank of the source process (with source=MPI_ANY_SOURCE, a message from any process is received)

- tag: expected tag of the message (with tag=MPI_ANY_TAG, a message with any tag is received)

- comm: communicator of the process group

- status: pointer to a status structure in which information about the received message is to be stored
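The matching rule between a posted receive and an incoming message can be sketched in plain C. This is only a model of the rule described above, not MPI code: the constants ANY_SOURCE and ANY_TAG are stand-ins for MPI_ANY_SOURCE and MPI_ANY_TAG (whose real values are implementation-defined), the struct and function names are hypothetical, and the communicator, which must also agree, is left out for brevity.

```c
#include <stdbool.h>

/* Stand-ins for MPI_ANY_SOURCE and MPI_ANY_TAG. The real values are
   implementation-defined; these are assumptions for this sketch only. */
enum { ANY_SOURCE = -1, ANY_TAG = -1 };

/* Envelope of a message: who sent it and with which tag. */
struct envelope {
    int source;
    int tag;
};

/* Returns true if a receive posted with (want_source, want_tag) may
   accept a message that carries the envelope msg. */
bool envelope_matches(int want_source, int want_tag, struct envelope msg)
{
    bool source_ok = (want_source == ANY_SOURCE) || (want_source == msg.source);
    bool tag_ok = (want_tag == ANY_TAG) || (want_tag == msg.tag);
    return source_ok && tag_ok;
}
```

A receive posted for source 1 and tag 42 accepts a message sent by rank 1 with tag 42, but not one with tag 7; with the wildcard for the tag it accepts both.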

The two operations are blocking and asynchronous. That means:

- MPI_Recv can be executed before the associated MPI_Send has started

- MPI_Recv blocks until the message has been received completely

The following applies analogously:

- MPI_Send can be executed before the associated MPI_Recv has started

- MPI_Send blocks until the send buffer can be reused (i.e. the message has been completely transmitted or buffered)

Sample program

The use of MPI_Send and MPI_Recv is illustrated in the following C example for 2 MPI processes:

#include "mpi.h"
#include <stdio.h>
#include <string.h>

int main(int argc, char *argv[])
{
    int myrank, message_size = 50, tag = 42;
    char message[message_size];
    MPI_Status status;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

    if (myrank == 0) {
        /* rank 0 waits for a message from rank 1 */
        MPI_Recv(message, message_size, MPI_CHAR, 1, tag, MPI_COMM_WORLD, &status);
        printf("received \"%s\"\n", message);
    }
    else {
        /* the other process sends the string including its terminating '\0' */
        strcpy(message, "Hello, there");
        MPI_Send(message, strlen(message) + 1, MPI_CHAR, 0, tag, MPI_COMM_WORLD);
    }

    MPI_Finalize();
    return 0;
}

Non-blocking communication

The efficiency of a parallel application can often be increased by overlapping communication with computation and/or by avoiding synchronization-related waiting times. For this purpose, the MPI standard defines so-called non-blocking communication, in which the communication operation is only initiated. A separate function must then be called to complete such an operation. In contrast to the blocking variant, starting the operation creates a request object that can be used to check for or wait for the completion of this operation.

int MPI_Isend (void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm, MPI_Request* request)

- ...

- request: address of the data structure that contains information about the operation

int MPI_Irecv (void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Request* request)

- ...

Query progress

To know the progress of one of these operations, the following operation is used:

int MPI_Test (MPI_Request* request, int* flag, MPI_Status* status)

Here flag is set to 1 or 0, depending on whether the operation has completed or is still in progress.

Blocking wait

The following operation is used to wait for an MPI_Isend or MPI_Irecv operation in a blocking manner:

int MPI_Wait (MPI_Request* request, MPI_Status* status)

Synchronizing sending

The synchronous variants MPI_Ssend and MPI_Issend are also defined for the send operations. In this mode, sending does not complete until the associated receive operation has started.

Buffering variants

...

Groups and communicators

Processes can be combined into groups, with each process being assigned a unique number, the so-called rank. A communicator is required to access a group. If a global communication operation is to be restricted to a group, the communicator belonging to that group must be specified. The communicator for the set of all processes is called MPI_COMM_WORLD.

The group belonging to the communicator comm is obtained with

int MPI_Comm_group (MPI_Comm comm, MPI_Group* group)

The usual set operations are available for process groups.

Union

Two groups group1 and group2 can be combined into a new group new_group:

int MPI_Group_union (MPI_Group group1, MPI_Group group2, MPI_Group* new_group)

The processes from group1 keep their original numbering. The processes from group2 that are not already contained in the first group are numbered consecutively after them.
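The numbering rule for the union can be modeled in plain C. This is a sketch of the ordering only, not a call into MPI: groups are represented as arrays of process identifiers (an assumption made here for illustration), the array index plays the role of the rank within the group, and the function names are hypothetical.

```c
#include <stdbool.h>

/* Returns true if process id p occurs in group g of size n. */
static bool contains(const int *g, int n, int p)
{
    for (int i = 0; i < n; i++)
        if (g[i] == p)
            return true;
    return false;
}

/* Writes the union of g1 and g2 to out (capacity n1 + n2) and returns
   its size. Members of g1 come first and keep their positions; members
   of g2 that are not in g1 follow in the order they have in g2. */
int group_union(const int *g1, int n1, const int *g2, int n2, int *out)
{
    int n = 0;
    for (int i = 0; i < n1; i++)
        out[n++] = g1[i];
    for (int i = 0; i < n2; i++)
        if (!contains(g1, n1, g2[i]))
            out[n++] = g2[i];
    return n;
}
```

For g1 = {4, 2, 7} and g2 = {2, 9, 4, 1} the model yields {4, 2, 7, 9, 1}: processes 4, 2 and 7 keep ranks 0 to 2, and the newcomers 9 and 1 receive ranks 3 and 4.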

Intersection

The intersection of two groups is obtained with

int MPI_Group_intersection (MPI_Group group1, MPI_Group group2, MPI_Group* new_group)

Difference

The difference between two groups is obtained with

int MPI_Group_difference (MPI_Group group1, MPI_Group group2, MPI_Group* new_group)
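Intersection and difference can be modeled in the same spirit. The sketch below is plain C and makes no MPI calls; groups are again assumed to be arrays of process identifiers, and all function names are hypothetical. In both results the surviving members keep the relative order they had in the first group.

```c
#include <stdbool.h>

/* Returns true if process id p occurs in group g of size n. */
static bool is_member(const int *g, int n, int p)
{
    for (int i = 0; i < n; i++)
        if (g[i] == p)
            return true;
    return false;
}

/* Members of g1 that also occur in g2, in the order of g1.
   out needs capacity n1; the return value is the size of the result. */
int group_intersection(const int *g1, int n1, const int *g2, int n2, int *out)
{
    int n = 0;
    for (int i = 0; i < n1; i++)
        if (is_member(g2, n2, g1[i]))
            out[n++] = g1[i];
    return n;
}

/* Members of g1 that do not occur in g2, in the order of g1.
   out needs capacity n1; the return value is the size of the result. */
int group_difference(const int *g1, int n1, const int *g2, int n2, int *out)
{
    int n = 0;
    for (int i = 0; i < n1; i++)
        if (!is_member(g2, n2, g1[i]))
            out[n++] = g1[i];
    return n;
}
```

Note that the difference is not symmetric: swapping the two arguments generally gives a different group.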

Global communication

In parallel applications one often encounters special communication patterns in which several or even all MPI processes are involved at the same time. The MPI standard has therefore defined its own operations for the most important patterns. These are roughly divided into three types: synchronization (barrier), communication (e.g. broadcast, gather, all-to-all) and communication coupled with computation (e.g. reduce or scan). Some of these operations use a selected MPI process that has a special role and is typically referred to as root. In addition to the regular communication operations, there are also vector-based variants (e.g. Scatterv) that allow different arguments per process where this makes sense.

Broadcast

With the broadcast operation, a selected MPI process root sends the same data to all other processes in its group comm. The function defined for this is identical for all processes involved:

int MPI_Bcast (void *buffer, int count, MPI_Datatype type, int root, MPI_Comm comm)

The MPI process root provides its data in buffer, while the other processes pass the address of their receive buffer here. The remaining parameters must be the same (or equivalent) in all processes. After the function returns, all buffers contain the data that were originally available only at root.

Gather

With the gather operation, the MPI process root collects the data from all processes involved. The data from all send buffers are stored one after the other in the receive buffer (sorted by rank):

int MPI_Gather (void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)

Vector-based variant

The vector-based variant of the gather operation allows a process-dependent number of elements:

int MPI_Gatherv (void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int *recvcounts, int *displs, MPI_Datatype recvtype, int root, MPI_Comm comm)

- recvcounts: array that contains the number of elements received from each process (only relevant for root)

- displs: array whose entry i specifies the displacement in the receive buffer at which the data from process i should be stored (also only relevant for root)

For these arrays, note that gaps are allowed in the receive buffer, but overlaps are not. The displacements are counted in elements of recvtype, not in bytes. If, for example, 1, 2 and 3 elements of type int are to be received from 3 processes, then recvcounts = {1, 2, 3} and displs = {0, 1, 3} must be set.
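A common way to obtain a gap-free layout is to derive displs from recvcounts as a running sum. The following plain C sketch shows that computation together with a serial model of what root would find in its receive buffer afterwards. It makes no MPI calls; the function names are hypothetical, and displacements are counted in elements of the receive type, as MPI_Gatherv expects.

```c
#include <string.h>

/* Fills displs so that the blocks lie back to back in the receive
   buffer and returns the total number of elements to be received. */
int packed_displs(const int *recvcounts, int nprocs, int *displs)
{
    int offset = 0;
    for (int i = 0; i < nprocs; i++) {
        displs[i] = offset;
        offset += recvcounts[i];
    }
    return offset;
}

/* Serial model of the result at root for int data: the contribution of
   process i (recvcounts[i] elements) is copied to recvbuf + displs[i]. */
void gatherv_model(const int *const *sendbufs, const int *recvcounts,
                   const int *displs, int nprocs, int *recvbuf)
{
    for (int i = 0; i < nprocs; i++)
        memcpy(recvbuf + displs[i], sendbufs[i],
               (size_t)recvcounts[i] * sizeof(int));
}
```

With recvcounts = {1, 2, 3} this yields displs = {0, 1, 3} and a receive buffer of 6 elements. Larger displacements would leave gaps, which is permitted; smaller ones would make blocks overlap, which is not.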

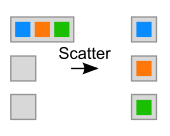

Scatter

With a scatter operation, the MPI process root sends each participating process a different but equally large data element:

int MPI_Scatter (void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)

Vector-based variant

int MPI_Scatterv (void *sendbuf, int *sendcounts, int *displs, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
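The vector-based variant is typically needed when the number of elements is not divisible by the number of processes. One possible way to fill sendcounts and displs for that case is sketched below in plain C. The function name is hypothetical, and giving the surplus elements to the lowest ranks is a design choice of this sketch, not something the standard prescribes.

```c
/* Splits n elements over nprocs processes as evenly as possible.
   The first n % nprocs processes receive one extra element.
   Counts and displacements are in elements, not bytes. */
void block_partition(int n, int nprocs, int *sendcounts, int *displs)
{
    int base = n / nprocs;
    int extra = n % nprocs;
    int offset = 0;
    for (int r = 0; r < nprocs; r++) {
        sendcounts[r] = base + (r < extra ? 1 : 0);
        displs[r] = offset;
        offset += sendcounts[r];
    }
}
```

For 10 elements and 4 processes this gives sendcounts = {3, 3, 2, 2} and displs = {0, 3, 6, 8}. Each process would pass its own entry of sendcounts as recvcount.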

Accumulation

Accumulation is a special form of the gather operation. The data of all processes involved are again collected, but are additionally reduced to a single datum using a specified reduction operation. For example, let $v_i$ be the value in the process with rank $i$ and $n$ the number of processes; then Reduce(+) delivers the sum of all values: $v_0 + v_1 + \dots + v_{n-1}$.

int MPI_Reduce (void *sendbuf, void *recvbuf, int count, MPI_Datatype type, MPI_Op op, int root, MPI_Comm comm)

The following predefined reduction operations exist for the parameter op:

Logical operations

- MPI_LAND: logical AND

- MPI_BAND: bitwise AND

- MPI_LOR: logical OR

- MPI_BOR: bitwise OR

- MPI_LXOR: logical exclusive OR

- MPI_BXOR: bitwise exclusive OR

Arithmetic operations

- MPI_MAX: maximum

- MPI_MIN: minimum

- MPI_SUM: sum

- MPI_PROD: product

- MPI_MINLOC: minimum with process

- MPI_MAXLOC: maximum with process

The operations MPI_MINLOC and MPI_MAXLOC additionally return the rank of the MPI process that determined the result.
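How a minimum is reduced together with its location can be modeled in plain C. The sketch below is a serial model, not MPI code: the struct mirrors the value/index pair used with MPI_MINLOC (for instance with the pair type MPI_DOUBLE_INT), the function names are hypothetical, and it is assumed that each process supplies its own rank as the index.

```c
/* A value together with the rank that contributed it. */
struct val_rank {
    double value;
    int rank;
};

/* Combines two pairs the way MPI_MINLOC does: the smaller value wins,
   and for equal values the smaller rank is kept. */
struct val_rank minloc(struct val_rank a, struct val_rank b)
{
    if (a.value < b.value)
        return a;
    if (b.value < a.value)
        return b;
    return (a.rank < b.rank) ? a : b;
}

/* Folds the contributions of all processes into the single pair that
   the root process would receive. */
struct val_rank reduce_minloc(const struct val_rank *contrib, int nprocs)
{
    struct val_rank acc = contrib[0];
    for (int r = 1; r < nprocs; r++)
        acc = minloc(acc, contrib[r]);
    return acc;
}
```

If ranks 0 to 3 contribute the values 3.5, 1.0, 2.0 and 1.0, the result is the pair (1.0, 1): the minimum occurs twice and the lower rank is reported.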

Custom operations

In addition to the predefined reduction operations, user-defined reduction operations can also be used. For this purpose, a freely programmable binary operation, which must be associative and may optionally be commutative, is registered with MPI:

int MPI_Op_create (MPI_User_function *function, int commute, MPI_Op *op)

The associated user function calculates an output value from two input values and does this - for reasons of optimization - not just once with scalars, but element by element on vectors of any length:

typedef void MPI_User_function (void *invec, void *inoutvec, int *len, MPI_Datatype *datatype)
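A user function of this shape might look as follows. This is a sketch under stated assumptions: the operation (maximum of absolute values) and the name absmax_op are chosen purely for illustration, only double data is handled, and the last parameter is declared as void * instead of MPI_Datatype * so that the block compiles without mpi.h. In a real MPI program the parameter would need its proper type before the function is passed to MPI_Op_create.

```c
/* Element-wise maximum of absolute values:
   inoutvec[i] = max(|invec[i]|, |inoutvec[i]|) for i = 0 .. *len - 1.
   The operation is associative and commutative. */
void absmax_op(void *invec, void *inoutvec, int *len, void *datatype)
{
    const double *in = invec;
    double *inout = inoutvec;

    (void)datatype; /* unused: this sketch assumes double elements */

    for (int i = 0; i < *len; i++) {
        double a = in[i] < 0 ? -in[i] : in[i];
        double b = inout[i] < 0 ? -inout[i] : inout[i];
        inout[i] = (a > b) ? a : b;
    }
}
```

The result is written back into the second vector, so the function combines two partial results in place. Because the operation is commutative, it could be registered with commute set to 1.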

Prefix Reduction

In addition to the above-mentioned accumulation, there is also an Allreduce variant, which makes the same result available to all MPI processes and not just to one root process. The so-called prefix reduction extends this option by not calculating the same result for all processes, but instead calculating a process-specific partial result. For example, let $v_i$ again be the value in the process with rank $i$; then Scan(+) delivers to the process with rank $i$ the partial sum of the values of ranks $0$ to $i$: $v_0 + v_1 + \dots + v_i$.

int MPI_Scan (void *sendbuf, void *recvbuf, int count, MPI_Datatype type, MPI_Op op, MPI_Comm comm)

If a process's own value is not to be included in the calculation, the exclusive scan function MPI_Exscan can be used.
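The difference between the inclusive and the exclusive prefix reduction can be seen in a serial model. The sketch below is plain C without MPI calls; values[r] stands for the contribution of rank r, result[r] for what rank r would receive, and both function names are hypothetical. Addition is used as the reduction operation.

```c
/* Inclusive prefix sum: rank r receives values[0] + ... + values[r]. */
void scan_sum(const int *values, int nprocs, int *result)
{
    int running = 0;
    for (int r = 0; r < nprocs; r++) {
        running += values[r];
        result[r] = running;
    }
}

/* Exclusive prefix sum: rank r receives values[0] + ... + values[r-1].
   result[0] is left untouched, since the receive buffer of rank 0 is
   undefined after MPI_Exscan. */
void exscan_sum(const int *values, int nprocs, int *result)
{
    int running = 0;
    for (int r = 0; r < nprocs; r++) {
        if (r > 0)
            result[r] = running;
        running += values[r];
    }
}
```

For the contributions {3, 1, 4, 1} the inclusive variant yields {3, 4, 8, 9}, while the exclusive variant yields 3, 4 and 8 for ranks 1 to 3. A typical use of the exclusive form is computing each process's starting offset in a shared output from the local element counts.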

Allgather

In the Allgather operation, every process sends the same data to every other process. It is therefore a multi-broadcast operation in which no process has a distinguished root role.

int MPI_Allgather (void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, MPI_Comm comm)

All-to-all (total exchange)

With all-to-all communication, as with Allgather communication, data is exchanged between all processes. However, only the i-th part of the send buffer is sent to the i-th process. Data that come from the process with rank j are accordingly stored at the j-th position in the receive buffer.

int MPI_Alltoall (void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, MPI_Comm comm)
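Seen across all processes, the total exchange behaves like a transposition of a matrix of blocks. The following serial model in plain C illustrates this for one int per destination (sendcount = recvcount = 1). It makes no MPI calls; the function name and the flattened two-dimensional layout of the buffers are assumptions of this sketch.

```c
/* send[i * nprocs + j] is the element that process i addresses to
   process j. recv[j * nprocs + i] is the slot in which process j
   stores the element it received from process i. */
void alltoall_model(const int *send, int nprocs, int *recv)
{
    for (int i = 0; i < nprocs; i++)
        for (int j = 0; j < nprocs; j++)
            recv[j * nprocs + i] = send[i * nprocs + j];
}
```

With three processes whose send buffers are {0, 1, 2}, {10, 11, 12} and {20, 21, 22}, the receive buffers become {0, 10, 20}, {1, 11, 21} and {2, 12, 22}.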

There is also the synchronizing MPI_Barrier operation. This function only returns after all MPI processes in the specified group have reached this part of the program.

MPI-2

A second version of the MPI standard has been available since 1997, adding some extensions to the still existing MPI-1.1 standard. These extensions include, among others

- dynamic process management, i.e. processes can now be created and terminated at runtime

- parallel access to the file system

- one-sided communication

- specification of additional language bindings (C++, Fortran 90); the C++ bindings have been marked as deprecated since MPI 2.2

Example: reading an n × (n + 1) matrix with parallel file input using size processes numbered rank = 0 … size-1. Column n + 1 contains the right-hand side of the system of equations A * x = b, so that the file holds the augmented matrix [A, b]. The rows of the matrix are distributed evenly over the processors. The distribution is cyclic (each processor gets one row at a time; after size rows, rank = 0 is served again) rather than blockwise (each processor receiving a contiguous block of n / size rows):

ndims = 1;                    /* dimensions */
aosi[0] = size * (n + 1);     /* array of sizes */
aoss[0] = n + 1;              /* array of subsizes */
aost[0] = rank * (n + 1);     /* array of starts */
order = MPI_ORDER_C;          /* row or column order */
MPI_Type_create_subarray(ndims, aosi, aoss, aost, order, MPI_DOUBLE, &ft);
MPI_Type_commit(&ft);

MPI_File_open(MPI_COMM_WORLD, fn, MPI_MODE_RDONLY, MPI_INFO_NULL, &fh);
MPI_File_set_view(fh, sizeof(int), MPI_DOUBLE, ft, "native", MPI_INFO_NULL);

for (i = rank; i < n; i += size)
{
    MPI_File_read(fh, rdbuffer, n + 1, MPI_DOUBLE, &status);
    for (j = 0; j < n + 1; j++)
    {
        A[i / size][j] = rdbuffer[j];   /* only the rows assigned to this process */
    }
}

MPI_File_close(&fh);

The interface follows the POSIX 1003.1 standard with slight changes due to the parallelism. The file is opened for shared reading with MPI_File_open. The views for the individual processes are set with MPI_File_set_view. This requires the previously defined variable ft (filetype), which picks one row of n + 1 doubles out of a block of size * (n + 1) doubles, starting at position rank * (n + 1). As a result, exactly one row of each block is assigned to each process in turn. This type is defined with MPI_Type_create_subarray and made known to the MPI system with MPI_Type_commit. Each process reads "its" rows with the numbers i = rank, rank + size, rank + 2 * size, ... with MPI_File_read until the entire matrix has been read. The sizeof(int) argument accounts for the size n of the matrix, which is stored as an int at the beginning of the file.

Benefit: a matrix that would no longer fit into the memory of a single processor can be stored in distributed form across size processors. This also justifies the convention of stating the sum of the memory of the individual cores and nodes as the memory of a parallel system.

File format:

n
row 0: (n+1) numbers for process rank 0
row 1: (n+1) numbers for process rank 1
…
row r: (n+1) numbers for process rank r
…
row size-1: (n+1) numbers for process rank size-1

row size: (n+1) numbers for process rank 0
row size+1: (n+1) numbers for process rank 1
…
row size+r: (n+1) numbers for process rank r
…
row 2*size-1: (n+1) numbers for process rank size-1

row 2*size: (n+1) numbers for process rank 0
…
… followed by as many such blocks as the number of rows of the matrix requires

The actual reading is done with MPI_File_read. Each process sequentially reads only the rows assigned to it. The point of the collective operation is that the MPI library can optimize and parallelize the reading. When reading is finished, the file must be closed as usual; this is done with MPI_File_close. MPI has its own data types for these operations, here MPI_Datatype ft and MPI_File fh. The filetype is described with ordinary C variables: int ndims; int aosi[1]; int aoss[1]; int aost[1]; int order;
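The bookkeeping behind the cyclic distribution in this example can be written down as a few small helper functions. They are plain C without MPI calls, and their names are hypothetical. The byte offset assumes the file layout described above, namely one int holding n followed by rows of n + 1 doubles in native representation without padding.

```c
#include <stddef.h>

/* Rank that owns global row i under the cyclic distribution. */
int row_owner(int i, int size)
{
    return i % size;
}

/* Index of global row i within the local array A of its owner. */
int row_local_index(int i, int size)
{
    return i / size;
}

/* Number of rows of an n-row matrix that end up on the given rank. */
int rows_on_rank(int n, int size, int rank)
{
    return n / size + (rank < n % size ? 1 : 0);
}

/* Byte offset of global row i in the file: an int header followed by
   rows of n + 1 doubles each. */
size_t row_file_offset(int i, int n)
{
    return sizeof(int) + (size_t)i * (size_t)(n + 1) * sizeof(double);
}
```

For n = 10 and size = 4, row 6 belongs to rank 2 and is stored there as local row 1, and ranks 0 to 3 hold 3, 3, 2 and 2 rows. The value of rows_on_rank is also the number of rows for which each process needs to allocate space in A.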


Implementations

C++, C and Fortran

The first implementation of the MPI-1.x standard was MPICH from Argonne National Laboratory and Mississippi State University. MPICH2, which implements the MPI-2.1 standard, is now available. LAM/MPI from the Ohio Supercomputing Center was another free implementation, whose development has since been discontinued in favor of Open MPI.

Since version 1.35 of the Boost libraries there is Boost.MPI, a C++-friendly interface to various MPI implementations. Other projects, such as TPO++, also offer this and are able to send and receive STL containers.

C#

- MPI.NET (MPI1)

Python

- MPI for Python (MPI-1 / MPI-2)

- pyMPI

- Boost: MPI Python Bindings (development discontinued)

Java

- MPJ Express

- mpiJava (MPI-1; development discontinued)

Perl

R

Haskell

See also

Literature

- Heiko Bauke, Stephan Mertens: Cluster Computing. Springer, 2006, ISBN 3-540-42299-4.

- William Gropp, Ewing Lusk, Anthony Skjellum: MPI - An Introduction - Portable Parallel Programming with the Message-Passing Interface. Munich 2007, ISBN 978-3-486-58068-6.

- M. Firuziaan, O. Nommensen: Parallel Processing via MPI & OpenMP. Linux Enterprise, 10/2002.

- Marc Snir, Steve Otto, Steven Huss-Lederman, David Walker, Jack Dongarra: MPI - The Complete Reference, Vol. 1: The MPI Core. 2nd edition. MIT Press, 1998.

- William Gropp, Steven Huss-Lederman, Andrew Lumsdaine, Ewing Lusk, Bill Nitzberg, William Saphir, Marc Snir: MPI - The Complete Reference, Vol. 2: The MPI-2 Extensions. MIT Press, 1998.

Web links

- MPI

- MPI forum

- EuroMPI - MPI Users' Group Meeting (2010 edition)

- Open MPI - freely available MPI implementation

- MPICH - freely available MPI implementation

- MPICH2 - freely available MPI implementation

- MVAPICH - MPI implementation with VAPI

- Boost

- TPO ++
