Transmission Control Protocol

| TCP (Transmission Control Protocol) | |
|---|---|
| Family: | Internet protocol family |
| Operation area: | Reliable bidirectional data transport |
| Standards: | RFC 793 (1981), RFC 7323 (2014) |

The Transmission Control Protocol (TCP) is a network protocol that defines how data is exchanged between network components. Almost all current operating systems support TCP and use it to exchange data with other computers. The protocol is a reliable, connection-oriented, packet-switched transport protocol in computer networks. It is part of the Internet protocol family, the foundation of the Internet.

TCP was developed by Robert E. Kahn and Vinton G. Cerf. Their research, which began in 1973, lasted several years; TCP was first standardized in 1981 as RFC 793. Many enhancements have followed since and continue to be specified in new RFCs, a series of technical and organizational documents on the Internet.

Unlike the connectionless UDP (User Datagram Protocol), TCP establishes a connection between two endpoints of a network connection (sockets). Data can be transmitted in both directions on this connection. In most cases TCP runs on top of IP (Internet Protocol), which is why the term "TCP/IP protocol" is often (and often incorrectly) used. In layered models such as the OSI model, TCP and IP are not located on the same layer. TCP is an implementation of the transport layer.

Due to its many positive properties (data loss is detected and automatically corrected, data can be transmitted in both directions, network overload is prevented, etc.), TCP is a very widely used protocol for data transmission. For example, TCP is used almost exclusively as the transport protocol for the WWW, e-mail and many other popular network services.

General

In principle, a TCP connection is a full-duplex end-to-end connection that allows information to be transmitted in both directions, similar to a telephone conversation. This connection can also be viewed as two half-duplex connections, in each of which information can flow in both directions, but not at the same time. Data travelling in the opposite direction can carry additional control information. Managing the connection and transferring the data are handled by the TCP software, which is usually part of the operating system's network protocol stack. Application programs access it through an interface, mostly sockets. Depending on the operating system, this interface is provided by program libraries, as in Microsoft Windows ("winsock.dll" or "wsock32.dll"), or by a socket layer in the operating system kernel that is accessed via system calls, as in Linux and many other Unix-like operating systems. Applications that frequently use TCP include web browsers and web servers.

Each TCP connection is uniquely identified by two endpoints. An endpoint is an ordered pair consisting of an IP address and a port . Such a pair forms a bidirectional software interface and is also known as a socket . A TCP connection is thus identified by four values (a quadruple):

(local host, port x, remote host, port y)

What matters is the entire quadruple. For example, two different processes on the same computer can use the same local port and even communicate with the same remote computer, provided that the processes involved use different ports on the remote side. In such a case there are two different connections whose quadruples differ in only one of the four values: the port on the remote side.

Connection 1: (local host, port x, remote host, port y)

Connection 2: (local host, port x, remote host, port z)

For example, a server process creates a socket ( socket , bind ) on port 80, marks it for incoming connections ( listen ) and requests the next pending connection from the operating system ( accept ). This request initially blocks the server process because there is no connection yet. If the first connection request arrives from a client, it is accepted by the operating system so that the connection is established. From now on this connection is identified by the quadruple described above.

Finally, the server process is woken up and given a handle for this connection. It then usually starts a child process to which it delegates the handling of the connection, and continues its own work with another accept request to the operating system. This makes it possible for a web server to accept multiple connections from different computers. Multiple listening sockets on the same port are not possible. The program on the client side usually does not choose its port itself but lets the operating system assign one.
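The socket, bind, listen and accept sequence described above can be sketched in Python as follows. This is only an illustration under stated assumptions: port 0 (assigned by the operating system) is used instead of port 80 so that no privileges are needed, a worker thread stands in for the child process, and the helper names `handle` and `serve` as well as the echo behaviour are hypothetical.

```python
import socket
import threading

def handle(conn: socket.socket) -> None:
    """Worker for one connection: echo everything received, then close."""
    with conn:
        while data := conn.recv(4096):
            conn.sendall(data)

def serve(listener: socket.socket, max_connections: int) -> None:
    """Accept connections and delegate each one to a worker thread."""
    for _ in range(max_connections):
        conn, _peer = listener.accept()   # blocks until a client connects
        threading.Thread(target=handle, args=(conn,), daemon=True).start()

# socket + bind + listen in one call; port 0 lets the OS pick a free port
listener = socket.create_server(("127.0.0.1", 0))
address = listener.getsockname()
threading.Thread(target=serve, args=(listener, 2), daemon=True).start()

# Two clients connect to the same server port. The OS assigns each client
# a different local port, so the two quadruples differ.
c1 = socket.create_connection(address)
c2 = socket.create_connection(address)
quad1 = c1.getsockname() + c1.getpeername()
quad2 = c2.getsockname() + c2.getpeername()

c1.sendall(b"hello")
reply = c1.recv(4096)
c1.close()
c2.close()
```

Both connections share the remote address and port; only the local port chosen by the operating system distinguishes them.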

Ports are 16-bit numbers (port numbers) ranging from 0 to 65535. Ports 0 to 1023 are reserved and are assigned by the IANA; for example, port 80 is reserved for HTTP, which is used in the WWW. Use of the predefined ports is not binding: an administrator can, for example, run an FTP server (usually port 21) on any other port.

Connection establishment and termination

A server that offers its service creates an end point (socket) with the port number and its IP address. This is known as passive open or also as listen .

If a client wants to establish a connection, it creates its own socket from its computer address and a free port number of its own. With the server's address and a known port, a connection can then be established. A TCP connection is uniquely identified by the following four values:

- Source IP address

- Source port

- Destination IP address

- Destination port

The client's connection request is known as an active open. During the data transfer phase, the roles of client and server are (from a TCP point of view) completely symmetrical. In particular, each of the two computers involved can initiate connection termination.

Half-closed connections

The connection can be terminated in two ways: on both sides or on one side in steps. The latter variant is called a half-closed connection (not to be confused with half-open connections, see below). It allows the other side to transmit data after the one-sided separation.

Half-closed connections are a legacy of the Unix operating system, in whose environment TCP was created. In keeping with the principle that everything is a file, Unix supports an interaction between two processes over TCP connections that is completely analogous to pipes: for a program it should be entirely irrelevant whether it reads from a TCP connection or from a file. A Unix program typically reads to the end of standard input and then writes the result of its processing to standard output. The standard data streams are connected to files before the program is executed.

The forward and return channels of a TCP connection are connected to standard input and standard output and are thus each represented logically as a file. To the reading process, a closed connection appears as the end of the file. The typical Unix processing scheme described above assumes that the return direction is still available for writing after the end of the file has been read, which is why half-closed connections are needed.
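A half-close can be sketched with Python's socket API, where `shutdown(socket.SHUT_WR)` closes only the sending direction. The loopback setup and the "sort the input" task are illustrative assumptions; both ends run in a single thread, which works here only because the amounts of data are tiny.

```python
import socket

listener = socket.create_server(("127.0.0.1", 0))   # OS-assigned port
client = socket.create_connection(listener.getsockname())
server, _ = listener.accept()

client.sendall(b"3 1 2")
client.shutdown(socket.SHUT_WR)   # half-close: FIN is sent, receiving stays open

# The server reads until end of file (recv returns b"" once the FIN arrives) ...
request = b""
while chunk := server.recv(4096):
    request += chunk

# ... and can still write its result on the return channel.
server.sendall(b" ".join(sorted(request.split())))
server.close()

response = b""
while chunk := client.recv(4096):
    response += chunk

client.close()
listener.close()
```

The server sees end of file exactly as a Unix filter would on standard input, yet its reply still reaches the client.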

Half-open connections

A connection is half-open when one side crashes without the other side learning of it. This has the undesirable effect that operating system resources are not released. Half-open connections can arise because, as far as the protocol is concerned, a TCP connection exists until it is torn down. Applications therefore often take their own precautions.

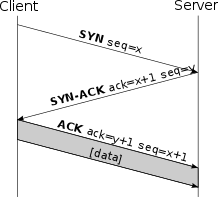

Connection establishment

The client that wants to establish a connection sends the server a SYN packet (from synchronize) with a sequence number x, that is, a packet whose SYN bit is set in the packet header (see TCP header). Sequence numbers are needed to ensure complete transmission in the correct order and without duplicates. The initial sequence number can be any number; how it is generated depends on the TCP implementation. It should, however, be as random as possible to avoid security risks.

The server (see sketch) receives the packet. If the port is closed, it replies with a TCP RST to signal that no connection can be established. If the port is open, it confirms receipt of the SYN packet and agrees to establish the connection by sending back a SYN/ACK packet (ACK from acknowledgment). The ACK flag set in the TCP header marks this packet, whose acknowledgment number field contains x + 1, the sequence number of the SYN packet plus one. In return, the server also sends its own initial sequence number y, which is likewise arbitrary and independent of the client's initial sequence number.

Finally, the client confirms receipt of the SYN/ACK packet by sending its own ACK packet with the sequence number x + 1. This process is also known as "forward acknowledgment". For security reasons, the client sends back the value y + 1 (the server's sequence number plus one) in the acknowledgment number field of the ACK segment. The connection is now established. The following example shows the process in abstract form:

| Step | Client state | | Segment | | Server state |
|---|---|---|---|---|---|
| 1. | SYN-SENT | → | <SEQ = 100> <CTL = SYN> | → | SYN-RECEIVED |
| 2. | SYN / ACK-RECEIVED | ← | <SEQ = 300> <ACK = 101> <CTL = SYN, ACK> | ← | SYN / ACK-SENT |
| 3. | ACK-SENT | → | <SEQ = 101> <ACK = 301> <CTL = ACK> | → | ESTABLISHED |

Once established, the connection has equal rights for both communication partners; it is not possible to see who is the server and who is the client of an existing connection at TCP level. Therefore, a distinction between these two roles is no longer relevant in further consideration.
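The sequence and acknowledgment numbers of the three handshake segments can be reproduced with a small model. This is a sketch of the arithmetic only, not of a real TCP stack; the `Segment` type and the function name are hypothetical, and the initial sequence numbers 100 and 300 are taken from the example above.

```python
from typing import NamedTuple, Optional

class Segment(NamedTuple):
    sender: str
    seq: int
    ack: Optional[int]   # None when the ACK flag is not set
    flags: tuple

def three_way_handshake(client_isn: int, server_isn: int) -> list:
    """Return the three segments exchanged when a connection is opened."""
    mod = 2 ** 32   # sequence numbers are 32-bit values and wrap around
    syn = Segment("client", client_isn, None, ("SYN",))
    syn_ack = Segment("server", server_isn, (syn.seq + 1) % mod, ("SYN", "ACK"))
    ack = Segment("client", syn_ack.ack, (syn_ack.seq + 1) % mod, ("ACK",))
    return [syn, syn_ack, ack]

segments = three_way_handshake(client_isn=100, server_isn=300)
for s in segments:
    print(s)
```

Each side acknowledges the other's initial sequence number plus one, because the SYN flag itself occupies one sequence number.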

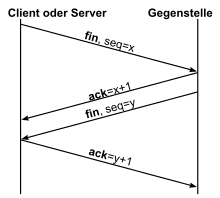

Disconnection

Orderly connection termination works in a similar way. Instead of the SYN bit, the FIN bit (from finish) is used, which indicates that no more data will come from the sender. Receipt of the packet is again confirmed with an ACK. The recipient of the FIN packet finally sends a FIN packet of its own, which is likewise acknowledged.

In addition, a shortened procedure is possible in which FIN and ACK are carried in the same packet, just as when establishing a connection. The maximum segment lifetime (MSL) is the maximum time a segment can spend in the network before it is discarded. After sending the last ACK, the client enters a wait state lasting two MSL, in which all late segments are discarded. This ensures that late segments cannot be misinterpreted as part of a new connection. It also ensures correct connection termination: if the ACK y + 1 is lost, the server's timer expires and the server, which is in the LAST_ACK state, retransmits its FIN segment.

Buffers

Two buffers are used when sending data over TCP. On the sender side, the application hands the data to be sent to TCP, which buffers it so that several small transmissions can be sent more efficiently as a single large one. After the data has been transmitted, it ends up in the recipient's buffer, which serves a similar purpose: if TCP has received several individual packets, it is better to pass them on to the application together.

Three-way handshake

Both when establishing and when terminating a connection, the responses to the first SYN or FIN packet are typically combined into a single packet (SYN/ACK or FIN/ACK); in theory, two separate packets could be sent instead. Since only three packets then have to be sent, this is often called the three-way handshake. Combining the FIN packet and the ACK packet is problematic, however, because sending a FIN packet means "no more data will follow", whereas the sender of the FIN packet may still be able (or want) to receive data; this is a half-closed connection (the receiving direction is still open while the sending direction is closed). It would be conceivable, for example, to send the beginning of an HTTP request directly in the SYN packet, to send further data as soon as the connection has been established, and to close the sending direction of the connection with a FIN in the last packet of the HTTP request. This procedure is not used in practice, however: if the browser closed the connection immediately in this way, the server might also close the connection instead of answering the request completely.

Structure of the TCP header

General

The TCP segment always consists of two parts: the header and the payload. The payload contains the data to be transmitted, which in turn can be protocol information of the application layer, such as HTTP or FTP. The header contains the data required for communication as well as information describing the data format. The schematic structure of the TCP header can be seen in Figure 5. Since the options field is usually not used, a typical header is 20 bytes long. The values are given in big-endian byte order.

Explanation

- Source Port (2 bytes)

- Specifies the port number on the sender side.

- Destination Port (2 bytes)

- Specifies the port number on the receiving end.

- Sequence Number (4 bytes)

- Sequence number of the first data octet ( byte ) of this TCP packet or the initialization sequence number if the SYN flag is set. After the data transmission, it is used to sort the TCP segments, as these can arrive at the recipient in different order.

- Acknowledgment Number (4 bytes)

- It indicates the sequence number that the sender of this TCP segment expects next. It is only valid if the ACK flag is set.

- Data offset (4 bit)

- Length of the TCP header in 32-bit blocks - without the user data ( payload ). This shows the start address of the user data.

- Reserved (4 bit)

- The Reserved field is reserved for future use. All bits must be zero.

- Control flags (8 bit)

- Two-valued variables with the possible states set and not set, which are needed to mark certain states that are important for communication and for further processing of the data. The flags of the TCP header and the actions to be carried out depending on their state are described below.

- CWR and ECE

- are two flags that are required for Explicit Congestion Notification (ECN). If the ECE bit (ECN echo) is set, the receiver informs the transmitter that the network is overloaded and that the transmission rate must be reduced. If the sender has done this, it informs the receiver of this by setting the CWR bit ( Congestion Window Reduced ).

- URG

- If the urgent flag is set, the data following the header is processed immediately by the application. The application interrupts the processing of the data of the current TCP segment and reads all bytes after the header up to the byte to which the urgent pointer field points. This procedure is closely related to a software interrupt. The flag can be used, for example, to abort an application on the receiver. The procedure is used only very rarely; one example is the preferential handling of CTRL-C (abort) in a terminal connection via rlogin or telnet.

- This flag is usually not evaluated.

- ACK

- In conjunction with the acknowledgment number, the acknowledgment flag has the task of confirming receipt of TCP segments during data transfer. The acknowledgment number is only valid if the flag is set.

- PSH

- RFC 1122 and RFC 793 specify the push flag such that, when it is set, both the outgoing and the incoming buffer are bypassed. Since TCP sends a data stream rather than datagrams, the PSH flag helps to process the stream more efficiently: the receiving application can be woken up in a more targeted manner and does not have to discover, for every incoming data segment, that parts of the data it needs in order to continue have not yet arrived.

- This is helpful if, for example, you want to send a command to the recipient during a Telnet session. If this command were to be temporarily stored in the buffer, it would be processed with a (strong) delay.

- The behavior of the PSH flag can differ from the above explanation, depending on the TCP implementation.

- RST

- The reset flag is used when a connection is to be aborted. This happens, for example, in the event of technical problems or to reject unwanted connections (for example to ports that are not open; unlike with UDP, no ICMP "Port Unreachable" packet is sent in this case).

- SYN

- Packets with the SYN flag set initiate a connection and serve to synchronize sequence numbers when the connection is established (hence the name SYN). The server usually responds with SYN + ACK if it is ready to accept the connection, and otherwise with RST.

- FIN

- This final flag (finish) is used to release the connection and indicates that no more data will come from the sender. The FIN and SYN flags each occupy a sequence number so that they are processed in the correct order.

- (Receive) Window (2 bytes)

- Is the number of data octets ( bytes ), starting with the data octet indicated by the acknowledgment field , that the sender of this TCP packet is ready to receive.

- Checksum (2 bytes)

- The checksum is used to detect transmission errors and is calculated over the TCP header, the data and a pseudo header. The pseudo header consists of the destination IP, the source IP, the TCP protocol identifier (0x0006) and the length of the TCP header including user data (in bytes).

- Urgent pointer (2 bytes)

- Together with the sequence number, this value indicates the position of the first byte after the urgent data in the data stream. The urgent data starts immediately after the header. The value is only valid if the URG flag is set.

- Options (0-40 bytes)

- The option field varies in size and contains additional information. The options must be a multiple of 32 bits long. If they are not, they must be filled with zero bits ( padding ). This field enables connection data to be negotiated that are not contained in the TCP header, such as the maximum size of the user data field.
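The field layout listed above can be illustrated by decoding the fixed 20-byte part of a header with Python's `struct` module. This is a minimal sketch, not a full parser: the `TcpHeader` type and `parse_tcp_header` are hypothetical names, options are returned as raw bytes, and the checksum is not verified.

```python
import struct
from typing import NamedTuple

# Flag names from the most significant bit (0x80) to the least (0x01)
FLAG_NAMES = ("CWR", "ECE", "URG", "ACK", "PSH", "RST", "SYN", "FIN")

class TcpHeader(NamedTuple):
    src_port: int
    dst_port: int
    seq: int
    ack: int
    header_len: int   # in bytes (data offset * 4)
    flags: tuple
    window: int
    checksum: int
    urgent: int
    options: bytes

def parse_tcp_header(segment: bytes) -> TcpHeader:
    if len(segment) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    # "!" selects network (big-endian) byte order
    src, dst, seq, ack, off_flags, window, checksum, urgent = struct.unpack(
        "!HHIIHHHH", segment[:20])
    header_len = (off_flags >> 12) * 4   # data offset counts 32-bit words
    if header_len < 20 or header_len > len(segment):
        raise ValueError("invalid data offset")
    flags = tuple(name for i, name in enumerate(FLAG_NAMES)
                  if off_flags & (0x80 >> i))
    return TcpHeader(src, dst, seq, ack, header_len, flags, window,
                     checksum, urgent, segment[20:header_len])

# Example: a SYN/ACK without options (data offset 5, flags ACK=0x10, SYN=0x02)
raw = struct.pack("!HHIIHHHH", 80, 49152, 300, 101, (5 << 12) | 0x12, 65535, 0, 0)
header = parse_tcp_header(raw)
print(header)
```

The data offset and the control flags share one 16-bit word, which is why they are unpacked together and separated with shifts and masks.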

Data transfer

TCP / IP segment size

A TCP segment typically has a maximum size of 1500 bytes. However, a TCP segment must fit into the underlying network layer, the Internet Protocol (IP); see also Maximum Transmission Unit (MTU).

IP packets, for their part, are specified with a theoretical size of up to 65,535 bytes (64 KiB), but are usually transmitted over Ethernet, where the (Layer 3) payload of a frame is between 46 bytes (with padding if necessary) and 1500 bytes, jumbo frames aside. The TCP and IP protocols each define a header of 20 bytes. This leaves 1460 bytes for the (application) user data in a TCP/IP packet (= 1500 bytes Ethernet payload - 20 bytes TCP header - 20 bytes IP header). Since many Internet connections use DSL, the Point-to-Point Protocol (PPP) is also used between IP and Ethernet, which takes a further 8 bytes for the PPP frame. The user data is thus reduced to an MSS (maximum segment size) of 1500 - 20 - 20 - 8 = 1452 bytes. This corresponds to a maximum user data share of 96.8%.
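The arithmetic above can be written out as a short calculation. The function name `mss` and its parameters are illustrative; the header sizes assume IPv4 and TCP headers without options, as in the text.

```python
IP_HEADER = 20    # bytes, IPv4 header without options
TCP_HEADER = 20   # bytes, TCP header without options

def mss(mtu: int, extra_overhead: int = 0) -> int:
    """Payload bytes left in one packet after all headers are subtracted."""
    return mtu - extra_overhead - IP_HEADER - TCP_HEADER

ethernet_mss = mss(1500)                    # plain Ethernet
pppoe_mss = mss(1500, extra_overhead=8)     # additional 8-byte PPP frame
efficiency = pppoe_mss / 1500

print(ethernet_mss, pppoe_mss, f"{efficiency:.1%}")
```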

Distribution of the application data on TCP / IP segments

Before the data exchange, receiver and sender agree on the size of the MSS via the options field. The application that wants to send data, such as a web server, places, for example, a 7-kilobyte block of data in the buffer. To send 7 kilobytes of data with a 1460-byte user data field, the TCP software divides the data into several pieces, adds a TCP header to each and sends the resulting TCP segments. This process is called segmentation. The data block in the buffer is divided into five segments (see Fig. 6). The TCP segments are sent one after the other. They do not necessarily arrive at the recipient in the order in which they were sent, as each TCP segment may take a different route through the Internet. Each segment is numbered so that the TCP software in the receiver can put the segments back in order; the sequence number is used for this purpose.

The recipient's TCP software acknowledges those TCP segments that have arrived correctly (i.e. with the correct checksum). Segments that are not acknowledged are sent again.
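Segmentation and reordering by sequence number can be sketched as follows. The functions `segment` and `reassemble` are hypothetical helpers; the sketch numbers bytes starting at 1 as in the example that follows in the text and ignores sequence number wraparound, headers and loss.

```python
def segment(data: bytes, mss: int, initial_seq: int = 1) -> list:
    """Split data into (sequence number, payload) pairs of at most mss bytes."""
    return [(initial_seq + offset, data[offset:offset + mss])
            for offset in range(0, len(data), mss)]

def reassemble(segments) -> bytes:
    """Sort segments by sequence number and concatenate their payloads."""
    return b"".join(payload for _, payload in sorted(segments, key=lambda s: s[0]))

block = bytes(i % 251 for i in range(7300))   # a block of about 7 kilobytes
segments = segment(block, mss=1460)

# Segments may arrive in any order; the sequence numbers restore the original.
arrived = [segments[3], segments[0], segments[4], segments[2], segments[1]]
restored = reassemble(arrived)

print([seq for seq, _ in segments])
```

With an MSS of 1460 bytes, the 7300-byte block yields exactly five segments.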

Example of a TCP / IP data transmission

The sender sends its first TCP segment with sequence number SEQ = 1 (the actual value varies) and a payload length of 1460 bytes to the receiver. The receiver acknowledges it with a TCP header without data and ACK = 1461, thereby requesting the second TCP segment, starting at byte 1461. The sender then sends it in a TCP segment with SEQ = 1461, which the receiver in turn acknowledges with ACK = 2921, and so on. The receiver does not need to acknowledge every TCP segment if the segments are contiguous: if it receives TCP segments 1–5, it only needs to acknowledge the last one. If, for example, TCP segment 3 is missing because it was lost, the receiver can acknowledge only segments 1 and 2, but not yet 4 and 5. Since the sender receives no acknowledgment for segment 3, its timer expires and it sends segment 3 again. When segment 3 arrives, the receiver acknowledges all five TCP segments, provided that both sides support the TCP option SACK (Selective ACK). The sender starts a retransmission timer for each TCP segment that it sends.
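The cumulative acknowledgment numbers in this example can be traced with a small receiver model. It is a sketch under simplifying assumptions: the `Receiver` class is hypothetical, it buffers segments that arrive out of order, and it does not handle overlapping segments or sequence number wraparound.

```python
class Receiver:
    """Tracks the next expected byte and buffers out-of-order segments."""

    def __init__(self, initial_seq: int = 1):
        self.next_expected = initial_seq
        self.out_of_order = {}   # sequence number -> payload length

    def receive(self, seq: int, length: int) -> int:
        """Accept one segment and return the ACK number to send back."""
        if seq >= self.next_expected:
            self.out_of_order[seq] = length
        # Advance over every buffered segment that is now contiguous.
        while self.next_expected in self.out_of_order:
            self.next_expected += self.out_of_order.pop(self.next_expected)
        return self.next_expected

r = Receiver()
acks = [r.receive(seq, 1460) for seq in (1, 1461, 4381, 5841)]  # segment 3 lost
ack_after_retransmit = r.receive(2921, 1460)                    # segment 3 resent

print(acks, ack_after_retransmit)
```

While segment 3 is missing, the ACK number stays at 2921; once it arrives, a single ACK of 7301 covers all five segments.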

Retransmission timer

To determine when a packet has been lost in the network, the sender uses a timeout by which the ACK from the other side must have arrived. If the timeout is too short, packets that actually arrived correctly are retransmitted; if it is too long, the packet to be repeated is sent unnecessarily late when a loss has actually occurred. Because the transit times of the underlying IP packets vary, only a timer that adapts dynamically to the connection makes sense. The details are set out in RFC 6298 as follows:

- The timeout (RTO = retransmission timeout) is calculated from two status variables carried by the transmitter:

- the estimated round trip time (SRTT = Smoothed RTT)

- as well as their variance (RTTVAR).

- Initially, RTO = 1 s is assumed (for compatibility with the older version of the document, values greater than 1 s are also possible).

- After measuring the RTT of the first packet sent, the following is set:

- SRTT := RTT

- RTTVAR := 0.5 * RTT

- RTO := RTT + 4 * RTTVAR (if 4 * RTTVAR is less than the measurement accuracy of the timer, the latter is added instead)

- With every further measurement RTT', the values are updated (RTTVAR must be calculated before SRTT):

- RTTVAR := (1 - β) * RTTVAR + β * |SRTT - RTT'| (The variance is also smoothed, with a factor β. Since the variance indicates an average deviation, which is always positive, the absolute value of the deviation between the estimated and the measured RTT' is used here, not the plain difference. The recommended value is β = 1/4.)

- SRTT := (1 - α) * SRTT + α * RTT' (The new RTT' is not adopted directly but smoothed with a factor α. The recommended value is α = 1/8.)

- RTO := SRTT + 4 * RTTVAR (If 4 * RTTVAR is less than the measurement accuracy of the timer, the latter is added instead. Regardless of the calculation, a minimum value of 1 s applies to the RTO; a maximum value may also be imposed, provided it is at least 60 s.)

By choosing powers of 2 (4 or 1/2, 1/4 etc.) as factors, the calculations in the implementation can be carried out using simple shift operations .

Karn's algorithm, by Phil Karn, must be used to measure the RTT; that is, only those packets are used for the measurement whose acknowledgment arrives without the packet having been retransmitted in the meantime. The reason is that after a retransmission it would not be clear which of the repeatedly sent packets was actually acknowledged, so no reliable statement about the RTT is possible.

If a packet was not confirmed within the timeout, the RTO is doubled (provided that it has not yet reached the optional upper limit). In this case, the values found for SRTT and RTTVAR may (also optionally) be reset to their initial value, as they could possibly interfere with the recalculation of the RTO.
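The update rules above translate almost line by line into code. The following is a sketch, not a reference implementation of RFC 6298: the class name `RtoEstimator`, the clock granularity of 1 ms and the choice of exactly 60 s as upper limit are assumptions made for illustration. The caller is expected to follow Karn's algorithm and pass in only RTT samples of packets that were not retransmitted.

```python
class RtoEstimator:
    ALPHA = 1 / 8   # smoothing factor for SRTT
    BETA = 1 / 4    # smoothing factor for RTTVAR

    def __init__(self, granularity: float = 0.001,
                 min_rto: float = 1.0, max_rto: float = 60.0):
        self.granularity = granularity   # timer accuracy in seconds (assumed)
        self.min_rto = min_rto
        self.max_rto = max_rto
        self.srtt = None
        self.rttvar = None
        self.rto = 1.0                   # initial estimate before any sample

    def on_measurement(self, rtt: float) -> float:
        """Update SRTT, RTTVAR and RTO from one valid RTT sample (seconds)."""
        if self.srtt is None:            # first measurement
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:                            # RTTVAR must be updated before SRTT
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt
        rto = self.srtt + max(self.granularity, 4 * self.rttvar)
        self.rto = min(max(rto, self.min_rto), self.max_rto)
        return self.rto

    def on_timeout(self) -> float:
        """Double the RTO after a retransmission timeout, up to the upper limit."""
        self.rto = min(self.rto * 2, self.max_rto)
        return self.rto

est = RtoEstimator()
first = est.on_measurement(0.5)    # SRTT = 0.5, RTTVAR = 0.25
second = est.on_measurement(0.5)   # RTTVAR shrinks because the sample matches SRTT
backed_off = est.on_timeout()
print(first, second, backed_off)
```

A stable RTT makes RTTVAR decay and the RTO move closer to SRTT, while each timeout doubles the RTO again.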

Relationship between flow control and congestion control

The following two sections explain the TCP concepts of flow control and congestion control (also called overload control), and introduce the sliding window and the congestion window. The sender selects the minimum of the two windows as the actual transmission window size. So-called ARQ protocols (Automatic Repeat reQuest) are used to ensure reliable data transmission through retransmissions.

Flow control

As the application reads data from the buffer, the fill level of the buffer changes constantly. The data flow must therefore be controlled according to the fill level. This is done with the sliding window and its size. As shown in Fig. 8, the transmitter's buffer is extended to 10 segments. In Fig. 8a, segments 1–5 are currently being transmitted; the transmission is comparable to Fig. 7. Although the receiver's buffer in Fig. 7 is full at the end, the receiver requests the next data, starting at byte 7301, from the sender with ACK = 7301. As a consequence, the receiver can no longer process the next TCP segment. TCP segments with the URG flag set are an exception. With the window field, the receiver can tell the sender not to send any more data, by entering the value zero (zero window). The value zero corresponds to the free space in the buffer.

The receiver application now reads segments 1–5 from the buffer, which frees 7300 bytes of memory again. The receiver can then request the remaining segments 6–10 from the sender with a TCP header containing the values SEQ = 1, ACK = 7301 and Window = 7300. The sender now knows that it can send at most five TCP segments and moves the window five segments to the right (see Fig. 8b). Segments 6–10 are then all sent together as a burst. If all TCP segments arrive at the receiver, it acknowledges them with SEQ = 1 and ACK = 14601 and requests the next data.

- Silly Window Syndrome

- The receiver sends a zero window to the sender because its buffer is full. The application at the receiver, however, reads only two bytes from the buffer. The receiver sends a TCP header with Window = 2 (window update) to the sender and thereby requests two bytes. The sender complies and sends the two bytes in a 42-byte packet (including IP header and TCP header). The receiver's buffer is then full again, and it sends another zero window. The application now reads, say, a hundred bytes from the buffer, and the receiver again sends a TCP header with a small window value. This game repeats over and over and wastes bandwidth, since only very small packets are sent. Clark's solution (Dave Clark, 1982) is for the receiver to send a zero window and to delay the window update until the application has read at least one maximum segment size (1460 bytes in the previous examples) from the buffer or the buffer is half empty, whichever comes first. The sender, too, can send packets that are too small and thereby waste bandwidth. This is remedied by the Nagle algorithm, which therefore complements Clark's solution.
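Clark's rule for the receiver can be expressed as a single decision. This is a minimal sketch of the rule as stated above; the function `advertised_window` and its parameters are hypothetical names, and a real implementation would also track what it has already advertised.

```python
def advertised_window(free_bytes: int, buffer_size: int, mss: int) -> int:
    """Window value to advertise: zero until enough buffer space is free.

    The window is opened only when at least one MSS or half of the buffer
    is free, whichever threshold is reached first (i.e. the smaller one).
    """
    threshold = min(mss, buffer_size // 2)
    return free_bytes if free_bytes >= threshold else 0

# Buffer of 7300 bytes and MSS of 1460 bytes, as in the previous examples
print(advertised_window(2, 7300, 1460))      # application read only 2 bytes
print(advertised_window(100, 7300, 1460))    # still below one MSS
print(advertised_window(1460, 7300, 1460))   # one full MSS is free
```

For small receive buffers the half-empty condition is reached before a full MSS is free, which is why the rule takes the smaller of the two thresholds.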

Overload control / congestion control

On the Internet, where many networks with different properties are connected, the loss of individual packets is quite normal. If a connection is heavily loaded, more and more packets are discarded and have to be retransmitted. The retransmissions in turn increase the load; without suitable measures, congestion results.

The loss rate on an IP network is monitored continuously. Depending on the loss rate, suitable algorithms adjust the transmission rate: normally, a TCP/IP connection starts slowly (slow start), and the transmission rate is gradually increased until data is lost. Data loss reduces the transmission rate; without loss it is increased again. Overall, the data rate first approaches the maximum available and then remains roughly at that level. Overload is avoided.

Overload control algorithm

If packets are lost at a certain window size, this can be detected when the sender does not receive an acknowledgment (ACK) within a certain time (timeout). It must be assumed that the packet was discarded by a router in the network because the network load was too high. In other words, a router's buffer is full; there is, so to speak, a traffic jam in the network. To resolve the congestion, all participating senders must reduce their network load. For this purpose, four algorithms are defined in RFC 2581: slow start, congestion avoidance, fast retransmit and fast recovery. Slow start and congestion avoidance are used together. Fast retransmit and fast recovery are likewise used together and are an extension of the slow start and congestion avoidance algorithms.

Slow Start and Congestion Avoidance

At the beginning of a data transmission, the slow-start algorithm is used to determine the congestion window in order to prevent a possible overload situation. The aim is to avoid congestion, and since the current load on the network is not known, transmission starts with small amounts of data. The algorithm starts with a small window of one MSS (maximum segment size), in which data packets are transmitted from the sender to the receiver.

The receiver now sends an acknowledgment (ACK) back to the sender. For each ACK received, the size of the congestion window is increased by one MSS. Since an ACK is sent for each packet that is transferred successfully, the congestion window doubles within one round trip time. There is thus exponential growth at this stage. If, for example, the window permits two packets to be sent, the sender receives two ACKs and therefore increases the window by 2 to 4. This exponential growth continues until the so-called slow-start threshold is reached. The phase of exponential growth is also called the slow start phase.

After that, the congestion window is only increased by one MSS if all packets have been successfully transmitted from the window. So it only grows by one MSS per round trip time, i.e. only linearly. This phase is known as the Congestion Avoidance Phase . The growth stops when the receiving window specified by the receiver has been reached (see flow control).

If a timeout occurs, the congestion window is reset to 1 and the slow-start threshold is reduced to half the flight size (flight size is the number of packets that have been sent but not yet acknowledged). The phase of exponential growth is thus shortened, so that the window only grows slowly in the event of frequent packet losses.
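The growth of the congestion window per round trip can be sketched as follows, with all sizes counted in units of one MSS. The function names are hypothetical. Two simplifications are assumptions of this sketch rather than statements of the text: the doubling in slow start is capped at the threshold, and the threshold never drops below 2 MSS after a timeout (a lower bound used by the RFCs on congestion control).

```python
def next_cwnd(cwnd: int, ssthresh: int, rwnd: int) -> int:
    """Congestion window (in MSS) after one round trip without loss."""
    if cwnd < ssthresh:
        grown = min(cwnd * 2, ssthresh)   # slow start: exponential growth
    else:
        grown = cwnd + 1                  # congestion avoidance: linear growth
    return min(grown, rwnd)               # never beyond the receive window

def on_timeout(flight_size: int) -> tuple:
    """Return (new cwnd, new ssthresh) in MSS after a retransmission timeout."""
    return 1, max(flight_size // 2, 2)

cwnd, ssthresh, rwnd = 1, 8, 11
trace = [cwnd]
for _ in range(7):
    cwnd = next_cwnd(cwnd, ssthresh, rwnd)
    trace.append(cwnd)

print(trace)
print(on_timeout(flight_size=10))
```

The trace shows the doubling up to the threshold, the linear phase after it, and the stop at the receive window.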

Fast retransmit and fast recovery

Fast retransmit and fast recovery are used to react more quickly to the congestion situation after a packet has been lost. To this end, the receiver informs the sender when packets arrive out of sequence and a packet has therefore been lost in between: for each additional packet that arrives out of sequence, the receiver acknowledges the last correctly received packet again. These are called dup ACKs (duplicate acknowledgments), i.e. several consecutive messages that acknowledge the same data segment. The sender notices the duplicate acknowledgments, and after the third duplicate it immediately retransmits the lost packet, before the timer expires. Because there is no need to wait for the timer to expire, the principle is called fast retransmit. The dup ACKs also indicate that, although one packet was lost, the following packets did arrive. The transmission window is therefore only halved after the error and not restarted with slow start as after a timeout. In addition, the transmission window can be increased by the number of dup ACKs, because each one stands for a further packet that has reached the receiver, albeit out of sequence. Since full transmission capacity can thus be reached again more quickly after the error, the principle is called fast recovery.
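The sender-side detection of the third duplicate acknowledgment can be sketched with a counter. The class `DupAckDetector` is a hypothetical name, and the ACK numbers reuse the five-segment example from the data transfer section; window adjustments are left out.

```python
class DupAckDetector:
    """Counts consecutive duplicate ACKs and signals a fast retransmit."""

    THRESHOLD = 3   # retransmit on the third duplicate

    def __init__(self):
        self.last_ack = None
        self.dup_count = 0

    def on_ack(self, ack: int) -> bool:
        """Return True if this ACK should trigger a fast retransmit."""
        if ack == self.last_ack:
            self.dup_count += 1
            return self.dup_count == self.THRESHOLD
        self.last_ack = ack      # a new ACK resets the counter
        self.dup_count = 0
        return False

detector = DupAckDetector()
# The segment starting at byte 2921 is lost; three later segments each
# cause the receiver to repeat ACK = 2921.
incoming = [1461, 2921, 2921, 2921, 2921, 7301]
triggers = [detector.on_ack(a) for a in incoming]
print(triggers)
```

The first ACK with the value 2921 is the regular one; the retransmission is triggered by the third repetition after it.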

Selective ACKs (SACK)

Selective ACKs are used to send more detailed control information about the data flow back from the receiver to the sender. After a packet loss, the receiver adds an additional option to the TCP options field, from which the sender can see exactly which packets have already arrived and which are missing (in contrast to TCP's standard cumulative ACKs, see above). The packets still count as acknowledged only once the receiver has sent the sender an ACK for them.
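How a receiver might derive the cumulative ACK and the selectively acknowledged blocks from the data it holds can be sketched like this. The function `ack_and_sack` and the representation of received data as `(start, end)` byte ranges with an exclusive end are hypothetical; a real SACK option can carry only a few blocks and sequence numbers wrap around, both of which this sketch ignores.

```python
def ack_and_sack(next_expected, received):
    """Return (cumulative_ack, sack_blocks) for the received byte ranges.

    next_expected: first byte not yet covered by the cumulative ACK
    received: list of (start, end) ranges, end exclusive, in any order
    """
    # Merge adjacent or overlapping ranges into contiguous blocks.
    blocks = []
    for start, end in sorted(received):
        if blocks and start <= blocks[-1][1]:
            blocks[-1][1] = max(blocks[-1][1], end)
        else:
            blocks.append([start, end])

    ack = next_expected
    sack = []
    for start, end in blocks:
        if start <= ack:
            ack = max(ack, end)        # no gap: the cumulative ACK advances
        else:
            sack.append((start, end))  # data beyond a gap: report selectively
    return ack, sack


if __name__ == "__main__":
    # Bytes 2000-2999 and 5000-5999 are missing.
    print(ack_and_sack(1000, [(1000, 2000), (3000, 4000), (4000, 5000), (6000, 7000)]))
```

The cumulative ACK stops at the first gap, while the blocks beyond it tell the sender which data need not be retransmitted.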

TCP-Tahoe and TCP-Reno

The TCP congestion control variants Tahoe and Reno, named after places in Nevada, are two different methods by which TCP reacts to an overload event in the form of a timeout or dup ACKs.

TCP Tahoe, which is no longer in use, reduces the congestion window for the next transmission unit to 1 as soon as a timeout occurs. The TCP slow-start process then starts again (with a reduced threshold, see below) until a new timeout or dup-ACK event occurs or the threshold for the transition to the congestion avoidance phase is reached. After the overload event, this threshold is set to half the size of the congestion window at that time. The disadvantages of this procedure are, first, that detecting a packet loss by timeout can take a long time and, second, that the congestion window is cut drastically to 1.

TCP Reno is the further development of Tahoe. It distinguishes between timeout and dup-ACK events: while TCP Reno proceeds in the same way as TCP Tahoe when a timeout occurs, it determines the subsequent congestion window differently when three duplicate ACKs arrive. The basic idea is that the loss of a segment on the way to the receiver can be recognized not only by a timeout, but also by the receiver repeatedly sending back the same ACK for the segment immediately before the lost one (once for each further segment it receives after the "gap"). In that case the next congestion window is set to half the value of the congestion window at the time of the overload event, and TCP returns to the congestion avoidance phase. As described above, this behavior is called fast recovery.
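The difference between the two variants can be condensed into a small decision function. This is an illustrative sketch only: `react_to_loss` and the event names are hypothetical, the window is counted in whole MSS units, the threshold is bounded below by 2 MSS, and Tahoe is assumed to treat a dup-ACK event exactly like a timeout.

```python
def react_to_loss(variant, event, cwnd):
    """Return (new_cwnd, new_ssthresh) in MSS after an overload event.

    variant: "tahoe" or "reno"
    event: "timeout" or "dup_acks" (three duplicate ACKs)
    cwnd: congestion window at the time of the event, in MSS
    """
    if variant not in ("tahoe", "reno"):
        raise ValueError(f"unknown variant: {variant}")
    if event not in ("timeout", "dup_acks"):
        raise ValueError(f"unknown event: {event}")

    ssthresh = max(cwnd // 2, 2)  # half the window at the time of the event
    if event == "dup_acks" and variant == "reno":
        # Fast recovery: continue in congestion avoidance with half the window.
        return ssthresh, ssthresh
    # Timeout (both variants) or any loss under Tahoe: restart with slow start.
    return 1, ssthresh


if __name__ == "__main__":
    for variant in ("tahoe", "reno"):
        for event in ("timeout", "dup_acks"):
            print(variant, event, react_to_loss(variant, event, 16))
```

With a window of 16 MSS, only Reno's reaction to duplicate ACKs keeps the window at 8; all other cases fall back to 1.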

Congestion control as a research field

The exact design of TCP congestion control was and is an extremely active field of research with numerous scientific publications. Even today, many researchers around the world are working on improving TCP congestion control or adapting it to particular external conditions. Especially noteworthy in this context are the special conditions of the various wireless transmission technologies, which often lead to long or strongly fluctuating propagation delays or to high packet loss. When a packet is lost, TCP assumes by default that the transmission path is congested at some point. This is usually true in wired networks, where packets are rarely lost on the line; packets that fail to arrive were almost always discarded by an overloaded router. The correct reaction to such congestion is therefore to reduce the transmission rate. In wireless networks, however, this assumption no longer holds. Because the transmission medium is much less reliable, packets are often lost without any router being overloaded. In this scenario, reducing the sending rate does not make sense. On the contrary, increasing the transmission rate, for example by sending packets multiple times, could increase the reliability of the connection.

Often these changes or extensions to congestion control are based on complex mathematical or control-theoretic foundations. Designing such improvements is anything but easy, since it is generally required that TCP connections using older congestion control mechanisms must not be significantly disadvantaged by the new methods, for example when several TCP connections compete for bandwidth on a shared medium. For all these reasons, the TCP congestion control used in practice is considerably more complicated than described earlier in this article.

As a result of the extensive research on TCP congestion control, various congestion control mechanisms have become established as quasi-standards over time. TCP Reno, TCP Tahoe and TCP Vegas deserve particular mention here.

In the following, some newer or more experimental approaches are roughly outlined by way of example. One approach is RCF (Router Congestion Feedback): the routers along the path send more extensive information to the TCP senders or receivers so that these can better coordinate their end-to-end congestion control. This has been shown to enable considerable increases in throughput. Examples can be found in the literature under the keywords XCP (explicit control protocol), EWA (explicit window adaptation), FEWA (fuzzy EWA), FXCP (fuzzy XCP) and ETCP (enhanced TCP) (as of mid-2004). Explicit Congestion Notification (ECN) is also an implementation of RCF. Put simply, these methods emulate ATM-style congestion control.

Other approaches split the control loop of a TCP connection logically into two or more control loops at the crucial points in the network (e.g. so-called split TCP). There is also the method of logically bundling several TCP connections in one TCP sender so that these connections can share their information about the current state of the network and react more quickly. The EFCM (Ensemble Flow Congestion Management) method deserves particular mention here. All of these methods can be summarized under the term network information sharing.

TCP checksum and TCP pseudo headers

The pseudo header is a combination of parts of the header of a TCP segment and parts of the header of the encapsulating IP packet. It is a model with which the calculation of the TCP checksum can be described clearly.

If TCP is used over IP, it is desirable to include the header of the IP packet in the protection provided by TCP, so that the reliability of its transmission is ensured as well. This is why the IP pseudo header is created. It consists of the IP sender and recipient addresses, a zero byte, a byte that specifies the protocol to which the payload of the IP packet belongs, and the length of the TCP segment including the TCP header. Since the pseudo header always concerns IP packets that transport TCP segments, the protocol byte is set to the value 6. For the calculation of the checksum, the pseudo header is placed in front of the TCP header. The checksum is then calculated, stored in the "checksum" field, and the segment is sent. The pseudo header itself is never transmitted.

| Bit offset | Fields (bit positions within the 32-bit word) |
|---|---|
| 0 | IP sender address (0-31) |
| 32 | Recipient IP address (0-31) |
| 64 | 00000000 (0-7), 6 (= TCP) (8-15), TCP length (16-31) |
| 96 | Source port (0-15), Destination port (16-31) |
| 128 | Sequence number (0-31) |
| 160 | ACK number (0-31) |
| 192 | Data offset (0-3), Reserved (4-7), Flags (8-15), Window (16-31) |
| 224 | Checksum (0-15), Urgent pointer (16-31) |
| 256 | Options (optional) |
| 256/288 + | Data |

The calculation of the checksum for IPv4 is defined in RFC 793:

The checksum is the 16-bit one's complement of the one's complement sum of all 16-bit words in the header and the payload. If a segment contains an odd number of bytes, a zero padding byte is appended for the calculation. The padding is not transmitted. During the calculation of the checksum, the checksum field itself is filled with zeros.
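The rule above, together with the IPv4 pseudo header, can be expressed in Python as follows. This is a minimal sketch for illustration: the function names `ones_complement_sum` and `tcp_checksum_ipv4` are hypothetical, the addresses and header values in the usage part are made-up example values, and the caller is expected to pass a segment whose checksum field is already zero.

```python
import socket
import struct


def ones_complement_sum(data: bytes) -> int:
    """Return the 16-bit one's complement sum of all 16-bit words in data."""
    if len(data) % 2:
        data += b"\x00"  # zero padding byte, used for the calculation only
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return total


def tcp_checksum_ipv4(src_ip: str, dst_ip: str, segment: bytes) -> int:
    """Checksum over the IPv4 pseudo header plus TCP header and payload."""
    pseudo_header = struct.pack(
        "!4s4sBBH",
        socket.inet_aton(src_ip),   # IP sender address
        socket.inet_aton(dst_ip),   # recipient IP address
        0,                          # zero byte
        6,                          # protocol number 6 = TCP
        len(segment),               # TCP length (header + data)
    )
    return ~ones_complement_sum(pseudo_header + segment) & 0xFFFF


if __name__ == "__main__":
    # 20-byte TCP header with the checksum field set to zero, plus 3 data bytes.
    header = struct.pack("!HHIIBBHHH", 12345, 80, 1, 0, 5 << 4, 0x18, 65535, 0, 0)
    print(hex(tcp_checksum_ipv4("192.0.2.1", "198.51.100.2", header + b"hi!")))
```

Folding the carry back in after every addition keeps the running total within 16 bits; summing first and folding once at the end would give the same result.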

In contrast to this, the pseudo header for IPv6 according to RFC 2460 looks as follows:

“Any transport or other upper-layer protocol that includes the addresses from the IP header in its checksum computation must be modified for use over IPv6, to include the 128-bit IPv6 addresses instead of 32-bit IPv4 addresses.”

| Bit offset | Fields (bit positions within the 32-bit word) |
|---|---|
| 0 | Source address (128 bits, continues through the words at offsets 32, 64 and 96) |
| 128 | Destination address (128 bits, continues through the words at offsets 160, 192 and 224) |
| 256 | TCP length (0-31) |
| 288 | Zero values (0-23), Next header (24-31) |
| 320 | Source port (0-15), Destination port (16-31) |
| 352 | Sequence number (0-31) |
| 384 | ACK number (0-31) |
| 416 | Data offset (0-3), Reserved (4-7), Flags (8-15), Window (16-31) |
| 448 | Checksum (0-15), Urgent pointer (16-31) |
| 480 | Options (optional) |
| 480/512 + | Data |
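For comparison with the IPv4 case, the 40-byte IPv6 pseudo header from the table can be assembled as shown here. The function name `ipv6_pseudo_header` is hypothetical and the addresses in the usage part are documentation example addresses, not values from this article.

```python
import ipaddress
import struct


def ipv6_pseudo_header(src: str, dst: str, tcp_length: int, next_header: int = 6) -> bytes:
    """Build the IPv6 pseudo header that precedes the TCP header for the checksum.

    tcp_length: length of the TCP header plus data in bytes
    next_header: 6 identifies TCP
    """
    return (
        ipaddress.IPv6Address(src).packed        # 128-bit source address
        + ipaddress.IPv6Address(dst).packed      # 128-bit destination address
        # 32-bit length, 24 zero bits ("3x" = three pad bytes), 8-bit next header
        + struct.pack("!I3xB", tcp_length, next_header)
    )


if __name__ == "__main__":
    pseudo = ipv6_pseudo_header("2001:db8::1", "2001:db8::2", 23)
    print(len(pseudo), pseudo.hex())
```

The checksum itself is then computed in the same way as for IPv4, over this pseudo header followed by the TCP header and data.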

The receiver also creates the pseudo header and then performs the same calculation, but without setting the checksum field to zero. The result should then be FFFF (hexadecimal). If it is not, the TCP segment is silently discarded. As a result, the retransmission timer expires at the sender and the TCP segment is sent again.

The reason for this complicated procedure is that parts of the IP header change during routing in the IP network. The TTL field is decremented by one at each IP hop. If the TTL field were included in the checksum calculation, IP would invalidate the protection of the transport provided by TCP. Therefore only part of the IP header is included in the checksum calculation. Because of its length of only 16 bits and its simple calculation rule, the checksum is prone to undetected errors. Better methods such as CRC-32 were considered too costly at the time the protocol was defined.

Data integrity and reliability

In contrast to the connectionless UDP, TCP implements a bidirectional, byte-oriented, reliable data stream between two endpoints. The underlying protocol (IP) is packet-oriented: data packets can be lost, arrive in the wrong order, or even be received twice. TCP was designed to cope with the unreliability of the underlying layers. It therefore checks the integrity of the data using the checksum in the packet header and ensures the correct order using sequence numbers. The sender retransmits packets if no acknowledgment is received within a certain period of time (timeout). At the receiver, the data in the packets are assembled in a buffer in the correct order to form a data stream, and duplicate packets are discarded.

The data transfer can of course be disturbed, delayed or completely interrupted at any time after a connection has been established. The transmission then runs into a timeout. The connection establishment carried out in advance therefore does not guarantee that the subsequent transmission will remain reliable.
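The receiver-side reassembly can be illustrated with a small buffer keyed by sequence number. This is a simplified sketch: the function `reassemble` is hypothetical, segments are assumed never to overlap partially, and sequence number wrap-around is ignored.

```python
def reassemble(segments, initial_seq):
    """Rebuild the byte stream from segments that arrive in arbitrary order.

    segments: iterable of (sequence_number, payload) in arrival order
    initial_seq: sequence number of the first expected byte
    Returns (stream, next_expected_sequence_number).
    """
    buffer = {}              # out-of-order segments, keyed by sequence number
    next_seq = initial_seq   # first byte that has not been delivered yet
    stream = bytearray()
    for seq, payload in segments:
        if seq < next_seq or seq in buffer:
            continue         # duplicate: already delivered or already buffered
        buffer[seq] = payload
        # Deliver everything that is now contiguous with the stream.
        while next_seq in buffer:
            data = buffer.pop(next_seq)
            stream += data
            next_seq += len(data)
    return bytes(stream), next_seq


if __name__ == "__main__":
    arrivals = [(100, b"Hel"), (106, b"wor"), (103, b"lo "), (100, b"Hel"), (109, b"ld")]
    print(reassemble(arrivals, initial_seq=100))
```

The second return value is the next expected sequence number, i.e. the point up to which the stream has no gap.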

Acknowledgments

The length of the buffer up to which there is no gap in the data stream is acknowledged (windowing). This allows the network bandwidth to be used even over long distances. On an overseas or satellite connection, the first ACK sometimes takes several hundred milliseconds to arrive for technical reasons; during this time, several hundred packets can be sent. The sender can fill the receiver's buffer before the first acknowledgment arrives. All packets in the buffer can be acknowledged together. If the receiver also has data ready for the sender, acknowledgments can be carried along in the TCP header of the data flowing in the opposite direction (piggybacking).
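The order of magnitude mentioned for long-distance links can be checked with a short calculation of how many full-sized segments fit into one round-trip time. The function `segments_in_flight` is hypothetical, and the link rate of 10 Mbit/s, the round-trip time of 500 ms and the segment size of 1460 bytes are assumed example values, not figures from this article.

```python
def segments_in_flight(bandwidth_bps: float, rtt_s: float, mss_bytes: int = 1460) -> int:
    """Number of full-sized segments that can be sent during one round-trip time."""
    bytes_per_rtt = bandwidth_bps * rtt_s / 8  # bandwidth-delay product in bytes
    return int(bytes_per_rtt // mss_bytes)


if __name__ == "__main__":
    # Assumed example: 10 Mbit/s link with a 500 ms round-trip time.
    print(segments_in_flight(10_000_000, 0.5))
```

To keep such a link busy, the receiver's buffer has to hold at least this amount of data.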

See also

- CYCLADES - The model for TCP with its CIGALE datagram.

- List of standardized ports

- Stream Control Transmission Protocol (SCTP)

Literature

- Douglas Comer: Internetworking with TCP/IP. Principles, Protocols, and Architectures. Prentice Hall, 2000, ISBN 0-13-018380-6.

- Craig Hunt: TCP/IP Network Administration. O'Reilly, Beijing 2003, ISBN 3-89721-179-3.

- Richard Stevens: TCP/IP Illustrated. Volume 1. The Protocols. Addison-Wesley, Boston 1994, 2004, ISBN 0-201-63346-9.

- Richard Stevens: TCP/IP Illustrated. Volume 2. The Implementation. Addison-Wesley, Boston 1994, ISBN 0-201-63354-X.

- Andrew S. Tanenbaum: Computer Networks. 4th edition. Pearson Studium, Munich 2003, ISBN 978-3-8273-7046-4, p. 580 ff.

- James F. Kurose, Keith W. Ross: Computer Networks. A top-down approach with a focus on the Internet. Bafög issue. Pearson Studium, Munich 2004, ISBN 3-8273-7150-3.

- Michael Tischer, Bruno Jennrich: Internet Intern. Technology & programming. Data-Becker, Düsseldorf 1997, ISBN 3-8158-1160-0.

Web links

RFCs

- RFC 793 (Transmission Control Protocol)

- RFC 1071 (calculating the checksum for IP, UDP and TCP)

- RFC 1122 (bug fixes for TCP)

- RFC 1323 (extensions to TCP)

- RFC 2018 (TCP SACK - Selective Acknowledgment Options)

- RFC 3168 (Explicit Congestion Notification)

- RFC 5482 (TCP User Timeout Option)

- RFC 5681 (TCP Congestion Control)

- RFC 7414 (overview of TCP RFCs)

Others

- Congestion Avoidance and Control (TCP milestone 1988; PDF; 220 kB)

- Warriors of the net (film about TCP)

- Introduction to TCP/IP - Online introduction to the TCP/IP protocols. Heiko Holtkamp, AG Computer Networks and Distributed Systems, Technical Faculty, Bielefeld University.

Individual evidence

- ↑ Not to be confused with packet-switching. The task of TCP is not to transmit packets, but the bytes of a data stream. Packet switching is provided by the Internet Protocol (IP). IP is therefore packet-switching, whereas TCP is merely packet-switched, i.e. carried over a packet-switched service.

- ↑ Port numbers. Internet Assigned Numbers Authority (IANA), June 18, 2010, accessed August 7, 2014.

- ↑ RFC 793. Internet Engineering Task Force (IETF), 1981. "A passive OPEN request means that the process wants to accept incoming connection requests rather than attempting to initiate a connection."

- ↑ RFC 793. Internet Engineering Task Force (IETF), 1981. "Briefly the meanings of the states are: LISTEN - represents waiting for a connection request from any remote TCP and port."

- ↑ W. Richard Stevens: TCP/IP Illustrated, Vol. 1: The Protocols. Addison-Wesley, chapter 18.

- ↑ Steven M. Bellovin: RFC 1948 - Defending Against Sequence Number Attacks. Internet Engineering Task Force (IETF), 1996.

- ↑ RFC 6298 - Computing TCP's Retransmission Timer

- ↑ Stevens, W. Richard, Allman, Mark, Paxson, Vern: TCP Congestion Control. Retrieved February 9, 2017.