As Ränderung (ger .: Bordering Method ) a method of improving the solution properties are called linear equations . The method is used in linear algebra and numerics .

A system of linear equations

with a singular or poorly conditioned system matrix

, by adding rows and columns to and correspondingly enlarging and assigning an extended system of linear equations in which the system matrix is well conditioned (i.e. also regular). The simple examples in the next two sections should clarify this. The system matrix supplemented by rows and columns is also known as a rimmed matrix .

Example (regularization)

The system of equations is given

with the system matrix , the ones vector of the unknowns and the right hand side . This can be

regularized by changing the system matrix with the column and the row :

The entries added to the original system are overlined. The rimmed system has the unique solution with . Of this, the solution assigned to the original problem by the change is . The size of expresses how strong the regularizing influence of the change is.

Example (improvement of fitness)

In this example, a measured value vector with 10% errors with respect to the Euclidean norm is assumed from the using the equation

the sizes are to be determined. The

solution results

for For the right side deviating by approx. 10% (i.e. within the error tolerance)

, the completely different solution is determined .

A measure of how strongly relative errors in the measurement affect the relative error in the calculation result is the condition of the system matrix

For the special choice of from this example results . Due to the poor condition of the matrix, relative errors in the measurement data can be reflected in the relative error of the values calculated from these data .

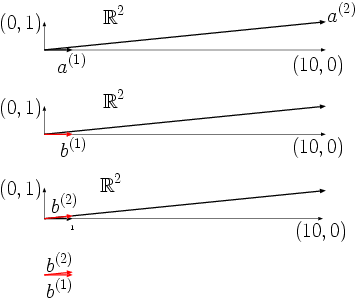

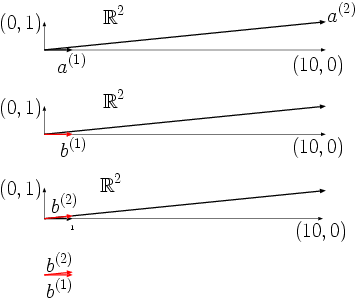

This effect is graphically illustrated in the following image for the right-hand sides specified above. From the upper part of the image can be seen that the two column vectors and of are almost linearly dependent on each other.

As a result, the two right-hand sides and , shown in red in the lower part of the image , which are very close to one another, fall into the image spaces of different columns from and the coefficients in

differ from each other when switching from too much.

Effect of a change:

The addition of an additional line to corresponds to the expansion of the value range of by one dimension from to and with the addition of a column a new column vector is added. A clever choice of the additional components and the additional column vector ensures

that the column vectors of the bordered matrix are separated from one another much better. More precisely, one chooses the new degrees of freedom so that the columns of the bordered matrix are perpendicular to each other and have the same length.

In the example, this is approximately achieved with the change

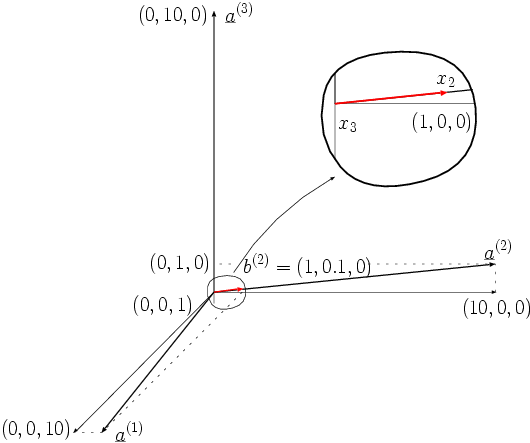

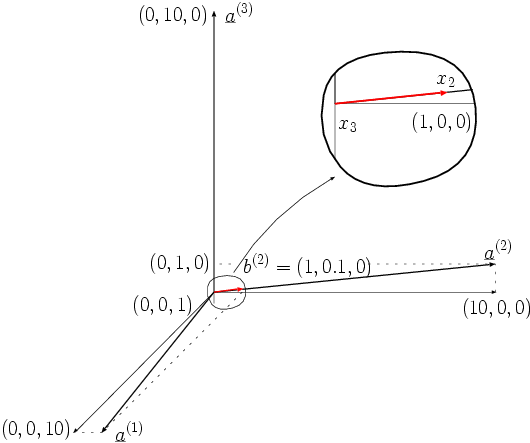

The position of the column vectors in the bordered matrix is shown in the following figure.

For the modified system

result with the rimmed right-hand sides and the solutions and respectively . The components essential for the measurement task and therefore do not change at all due to the 10% disruption of .

This is illustrated again in the following two images.

The condition number of the modified matrix has been reduced to. Calculating from the measured values will only worsen the relative error by a maximum of approx. 15%.

In this motivating example, the margins have been kept very simple. Through a skilful choice of Ränderung be reached that the relative error by calculating from no longer deteriorating, so that applies.

Regularization

Let be a real matrix and a matching -dimensional column vector. A rimmed matrix

with matching dies and is regular if the line from a base of the core form and the column of a minimal system of columns is that, together with the columns of the spans. In this case, the modified system

solve uniquely and is the dimension of the (square) bordered matrix (here is the defect of ).

Depending on the choice of matrices and you can solve different tasks. If, for example, the rows of span the kernel of and the columns of the orthogonal complement of the image of , then the solution of the modified system of equations with the pseudo inverse of . The kernel of can be calculated with the help of the Gaussian elimination method and the orthogonal complement of the image of is conveniently calculated as the kernel of .

Optimal margins

The idea from the last section is taken up here and any matrix is modified in such a way that the columns of the expanded matrix are all perpendicular to one another and have the same Euclidean norm . The extended matrix is then the product of the largest singular value of with an orthogonal matrix and thus has the minimum possible condition number one. The representation used here is based on.

Singular value decomposition as a tool for changing margins

The structure of the system matrix can be using one of the singular value decomposition of coordinate transformation gained , simplify. The new system matrix then has a large number of zero entries. Only the elements have non-zero elements, namely the singular values . Where is the rank of the matrix .

The transformation and are orthogonal and standard-sustaining , . From this it follows that the transformed system matrix has the same condition as the original system matrix .

An extension

the matrix to matrix blocks (of mating dimensions) corresponds to a Ränderung the matrix when the transformation matrices and suitably by parts of the unit matrix adds:

The extended transformation matrices and are again orthogonal, so that the condition of the extended system matrix matches that of the matrix .

In the following, only matrices need to be examined that have a system matrix with the structure (known from singular value decomposition)

with an integer .

Completion of a rectangular matrix to a square one

Here only the modification of matrices with more rows than columns is described ( ). The statements can be transferred to matrices with more columns than rows by transposition. With the statements from the previous section it is clear that we are dealing with the study of matrices of form

where is a positive semidefinite diagonal matrix and a null matrix with the same number of columns as . First, it is assumed that there is no zero matrix. The maximum diagonal element of is therefore greater than zero. Then it is beneficial to supplement the missing columns with the columns belonging to these column positions in the unit matrix scaled with:

If is regular, this also applies to the rimmed matrix . So the matrix has been regularized in this case.

After you have completed the rectangular matrix to a square one, you can use the margins described in the next section to better condition the matrix (or, if it is singular, to regularize it). There it will turn out that the choice of as a scaling factor is favorable, since one then no longer has to artificially enlarge the norm of these columns by changing the margin.

Optimal modification of a square matrix

In the previous section it was described how a rectangular matrix can be conveniently expanded to a square one by changing the edges. Here it is discussed how the condition of a square matrix can be improved or, in the singular case, the matrix can be regularized.

The subsection on singular value decomposition shows that one can restrict oneself to changing diagonal matrices .

The column vectors of the matrix are already perpendicular to one another. You just have to lengthen them appropriately so that they conform to the norm. One starts with the last column vector, the component of which has the smallest value of the diagonal elements of . First you add a line , which corresponds to an extension of the column vector space by one dimension (the point stands here as a substitute for the column index). In this additional dimension, the last column vector of the expanded matrix is extended so far that it has the same Euclidean length as the first (longest) column vector of (i.e. the length ):

The column vectors of the expanded matrix

thus remain orthogonal to one another. To get a square matrix again, one more column has to be added. To do this, one conveniently chooses a column with

The column has the desired norm and is in turn perpendicular to all other columns of the expanded matrix

-

.

.

By adding additional rows and columns in the same way, the norm of all column vectors of the extended matrix can be gradually adjusted. As desired, the result is a system matrix, the columns of which are all orthogonal to one another and have the same norm.

Web links

Individual evidence

-

↑ Uwe Schnabel: Minimizing the condition number by changing matrices. Preprint IOKOMO-05-2001, TU Dresden. 2001.