Remez algorithm

The Remez algorithm in approximation theory is a minimax approximation algorithm. As such, it minimizes the maximum absolute difference between the polynomial sought (given maximum degree ) and the given (in an interval) continuous function . It is named after the Soviet mathematician Yevgeny Jakowlewitsch Remes , who introduced it in 1934.

The algorithm relies on the alternant theorem by Pafnuti Lwowitsch Tschebyschow , by iteratively evaluating the mentioned difference at support points in the interval and calculating new support points from this .

algorithm

| Given: | An interval and a continuous function ; |

| also a maximum polynomial degree . | |

| Searched: | A polynomial of degree at most which |

| minimized. |

From the alternant theorem it follows that the polynomial in the Limes is uniquely determined and that there are points for which applies

with the deviation ( supremum norm ).

The polynomial we are looking for is with

designated. So it applies to the unknown

to determine.

preparation

One begins with the extreme places of the Chebyshev polynomial of the first kind of degree

- with .

Such a set of points is called a "reference". It is

- .

iteration

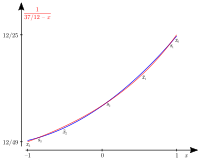

Compute an approximation on the reference

We are looking for the polynomial of the degree whose error function alternates on the reference obtained in the previous step . I.e. are sought

- and .

With

- for .

This task has only the reference as input and only the values of the function . It can also be formulated as an optimization task, namely as the best approximation on the reference, and can be clearly solved.

The system of equations to be solved in matrix representation :

This gives you new coefficients

for the polynomial and a new "reference deviation" .

Replace the reference with extreme places

Starting from the polynomial , extreme points of the error function apply

to find. If it is differentiable , extreme points can often be obtained by setting the derivative function to zero.

In any case, the interval can be divided into the sub-intervals that can be specified by the current error function as follows. Since with is also continuous, there are, according to the intermediate value theorem, for all zeros of the error function (in the figure, interfaces of the red function with the blue polynomial ) in the interval , since the sign of the error function alternates at the places ( ). Comes in two adjacent intervals and only one zero respectively , then the error function in the interval is only non-negative, or only non-positive. (But even if there are several zeros, the other applies - the extremes may just not be so pronounced.) Because of the continuity of the error function, according to the principle of minimum and maximum, there are (local) extreme points in each sub-interval . The result is the first extremum, the following extremes alternate between maximum and minimum up to the last extremum .

In addition, the (absolute) quality of the approximation occurs in the form of . If it is unsatisfactory, you can iterate with the newly acquired reference . It can also be interesting to increase the degree of approximation by inserting intermediate points in the reference. The Example 2 in the article alternant theorem shows how the quality of the local best approximation depends on the degree of the polynomial.

Convergence speed

Under suitable conditions regarding the function , the method converges locally quadratically.

literature

- Leçons sur l'approximation des fonctions d'une variable réelle Paris, Gauthier-Villars, 1919, 1952

- C. de Boor, A. Pinkus: Proof of the conjectures of Bernstein and Erdös concerning the optimal nodes for polynomial interpolation . In: Journal of Approximation Theory . 24, 1978, pp. 289-303. doi : 10.1016 / 0021-9045 (78) 90014-X .

References and comments

-

↑ E. Ya. Remez, "Sur la détermination des polynômes d'approximation de degré donnée", Comm. Soc. Math. Kharkov 10 , 41 (1934);

"Sur un procédé convergent d'approximations successives pour déterminer les polynômes d'approximation", Compt. Rend. Acad. Sc. 198: 2063 (1934);

"Sur le calcul effectiv des polynômes d'approximation de Chebyshev", Compt. Rend. Acad. Sc. 199: 337-340 (1934). - ↑ René Grothmann Lecture Notes Approximation Theory 1.69. definition

-

↑ The given matrix has Vandermonde matrices as sub-matrices.

The solution of the equation system costs many standard packages , but it can also be done in (see polynomial interpolation # Newton's algorithm ). - ↑ René Grothmann Lecture Notes Approximation Theory 1.71. comment

![{\ displaystyle [a, b] \ subset \ mathbb {R}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a659536067aaaac2db1c44613a09a715f0cf7246)

![{\ displaystyle f \ colon [a, b] \ to \ mathbb {R}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c5ab61178bf5349838758ffe3d96135406ed0245)

![{\ displaystyle p (x) \ in \ mathbb {R} [x]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/58e2ccc8eb59bd0fa227eba1cd54ac8212d2edce)

![[from]](https://wikimedia.org/api/rest_v1/media/math/render/svg/9c4b788fc5c637e26ee98b45f89a5c08c85f7935)

![{\ displaystyle [s_ {i}, s_ {i + 1}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/077d6051cf55ff07f9a03e37b85b790ad6886972)

![[x _ {{i-1}}, x_ {i}]](https://wikimedia.org/api/rest_v1/media/math/render/svg/09cb12a889d47020c8ce7046a2eb60785e00c0b6)

![[x_i, x_ {i + 1}]](https://wikimedia.org/api/rest_v1/media/math/render/svg/7138606cdb8eb7dddaba59b5aefe1ade6bc05a1b)

![{\ displaystyle s_ {i} \ in [x_ {i-1}, x_ {i}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/03421563e3f859ffce61e174ab72a91c13843491)

![{\ displaystyle s_ {i + 1} \ in [x_ {i}, x_ {i + 1}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/12af66d28cc0993519205358430af08223e7490b)