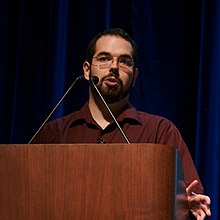

Eliezer Yudkowsky

Eliezer Shlomo Yudkowsky (born September 11, 1979) is an American researcher and author. He works on decision theory and on the long-term social and philosophical consequences of the development of artificial intelligence. He lives in Redwood City, California.

Life

Yudkowsky finished school at the age of 12. He has been self-taught since then and has no formal training in the field of artificial intelligence. In 2000, together with Brian and Sabine Atkins, he founded the non-profit Singularity Institute for Artificial Intelligence (SIAI), which has been called the Machine Intelligence Research Institute (MIRI) since the end of January 2013. He is still employed there as a researcher. He married Brienne Yudkowsky in 2013 and lives with her in an open marriage.

Work

In his research, Yudkowsky tries to develop a theory that makes it possible to create an artificial intelligence with a reflexive self-understanding that can modify and recursively improve itself without changing its original preferences, especially its morality and ethics (see Seed AI, Friendly AI, and especially Coherent Extrapolated Volition). The term "Friendly AI", as he uses it, describes an artificial intelligence that represents human values and does not bring about negative effects for humanity, let alone its destruction. In addition to his theoretical research, Yudkowsky has also written introductions to philosophical topics, such as the text "An Intuitive Explanation of Bayes' Theorem".

OvercomingBias and LessWrong

Alongside Robin Hanson, Yudkowsky was one of the key contributors to the OvercomingBias blog, which was sponsored by the Future of Humanity Institute at Oxford University. In spring 2009 he helped found the community blog LessWrong, which aims to increase human rationality. Yudkowsky also wrote the so-called "Sequences" on "LessWrong", which consist of more than 600 blog posts and deal with topics such as epistemology, artificial intelligence, errors in human rationality and metaethics.

Further publications

He contributed two chapters to the anthology "Global Catastrophic Risks", edited by Nick Bostrom and Milan M. Ćirković.

Yudkowsky is also the author of the following publications: "Creating Friendly AI" (2001), "Levels of Organization in General Intelligence" (2002), "Coherent Extrapolated Volition" (2004) and "Timeless Decision Theory" (2010).

Yudkowsky has also written some works of fiction. His Harry Potter fan fiction "Harry Potter and the Methods of Rationality" is currently the most popular Harry Potter story on fanfiction.net. It covers topics in cognitive science, rationality, and philosophy in general, and has been reviewed positively by science fiction author David Brin and programmer Eric S. Raymond, among others.

Yudkowsky published his latest book, Inadequate Equilibria: Where and How Civilizations Get Stuck, in 2017. In the book, he discusses the circumstances that lead societies to make better or worse decisions in order to achieve widely recognized goals.

Literature

- Our Molecular Future: How Nanotechnology, Robotics, Genetics and Artificial Intelligence Will Transform Our World by Douglas Mulhall, 2002, p. 321.

- The Spike: How Our Lives Are Being Transformed By Rapidly Advancing Technologies by Damien Broderick, 2001, pp. 236, 265-272, 289, 321, 324, 326, 337-339, 345, 353, 370.

- Inadequate Equilibria: Where and How Civilizations Get Stuck

Web links

- Personal website

- Less Wrong - "A community blog devoted to refining the art of human rationality", founded by Yudkowsky.

- Biography page at KurzweilAI.net

- Predicting The Future :: Eliezer Yudkowsky, NYTA Keynote Address - Feb 2003

- "Harry Potter and the Methods of Rationality" on Fanfiction.net

References

- Machine Intelligence Research Institute: Our Team. Retrieved November 22, 2015.

- Eliezer Yudkowsky: About.

- "GDay World #238: Eliezer Yudkowsky". The Podcast Network. Retrieved July 26, 2009 (archived from the original on April 28, 2012, in the Internet Archive).

- "We are now the 'Machine Intelligence Research Institute' (MIRI)". Singularity Institute Blog (archived on February 6, 2013, in the Internet Archive).

- Kurzweil, Ray (2005). The Singularity Is Near. New York, US: Viking Penguin. p. 599.

- Eliezer S. Yudkowsky. In: yudkowsky.net. Retrieved February 10, 2018.

- Coherent Extrapolated Volition, May 2004 (archived from the original on August 15, 2010, in the Internet Archive).

- "Overcoming Bias: About". Overcoming Bias. Retrieved July 26, 2009.

- Nick Bostrom, Milan M. Ćirković (eds.): Global Catastrophic Risks. Oxford University Press, Oxford (UK) 2008, pp. 91-119, 308-345.

- Creating Friendly AI 1.0 (archived on June 16, 2012, in the Internet Archive).

- Archive link (archived from the original on October 25, 2011, in the Internet Archive).

- "Eliezer Yudkowsky Profile". Accelerating Future (archived from the original on November 19, 2011, in the Internet Archive).

- http://www.davidbrin.blogspot.com/2010/06/secret-of-college-life-plus.html

- http://esr.ibiblio.org/?p=2100

- Inadequate Equilibria by Eliezer Yudkowsky. Retrieved May 20, 2018 (American English).

| Personal data | |
|---|---|
| NAME | Yudkowsky, Eliezer |
| ALTERNATIVE NAMES | Yudkowsky, Eliezer Shlomo |
| BRIEF DESCRIPTION | American researcher and author |
| DATE OF BIRTH | September 11, 1979 |