Bayes' theorem

Bayes' theorem is a result in probability theory that describes how to calculate conditional probabilities. It is named after the English mathematician Thomas Bayes, who first described a special case of it in the treatise An Essay Towards Solving a Problem in the Doctrine of Chances, published posthumously in 1763. It is also called Bayes' formula or Bayes' rule.

Formula

For two events $A$ and $B$ with $P(B) > 0$, the probability of $A$ given that $B$ has occurred can be calculated from the probability of $B$ given that $A$ has occurred:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}.$$

Here

- $P(A \mid B)$ is the (conditional) probability of the event $A$ given that $B$ has occurred,

- $P(B \mid A)$ is the (conditional) probability of the event $B$ given that $A$ has occurred,

- $P(A)$ is the a priori probability of the event $A$ and

- $P(B)$ is the a priori probability of the event $B$.

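For finitely many events, Bayes' theorem reads:

If $A_i$, $i = 1, \dots, N$, is a partition of the sample space into disjoint events, then the posterior probability $P(A_i \mid B)$ satisfies

$$P(A_i \mid B) = \frac{P(B \mid A_i)\, P(A_i)}{P(B)} = \frac{P(B \mid A_i)\, P(A_i)}{\sum_{j=1}^{N} P(B \mid A_j)\, P(A_j)}.$$

The last step of this transformation is also known as marginalization.

Since an event $A$ and its complement $A^c$ always form a partition of the sample space, it follows in particular that

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B \mid A)\, P(A) + P(B \mid A^c)\, P(A^c)}.$$

Furthermore, the theorem also holds for a partition of the sample space $\Omega$ into countably many pairwise disjoint events.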
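The partition form of the theorem can be sketched in a few lines of Python. This is only an illustration: the function name `posterior` and the three-event numbers are assumptions made for the example, not part of the theorem's statement. Exact fractions are used so that no rounding obscures the result.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Return P(A_i | B) for every event A_i of a partition.

    priors[i]      -- P(A_i); the values are expected to sum to 1
    likelihoods[i] -- P(B | A_i)
    """
    if len(priors) != len(likelihoods):
        raise ValueError("priors and likelihoods must have the same length")
    # Joint probabilities P(A_i and B) = P(B | A_i) * P(A_i)
    joint = [lik * pri for pri, lik in zip(priors, likelihoods)]
    # Law of total probability: P(B) = sum_j P(B | A_j) * P(A_j)
    evidence = sum(joint)
    if evidence == 0:
        raise ValueError("P(B) is zero, so the posterior is undefined")
    return [j / evidence for j in joint]


# Hypothetical numbers: three disjoint events and how often each leads to B.
example = posterior(
    [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)],
    [Fraction(1, 10), Fraction(1, 2), Fraction(1, 4)],
)
```

With these assumed inputs the denominator is 1/4, and the posterior probabilities again sum to 1, as they must for a partition.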

Proof

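The theorem follows directly from the definition of conditional probability:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{\frac{P(A \cap B)}{P(A)}\, P(A)}{P(B)} = \frac{P(B \mid A)\, P(A)}{P(B)}.$$

The relation

$$P(B) = \sum_{j=1}^{N} P(A_j \cap B) = \sum_{j=1}^{N} P(B \mid A_j)\, P(A_j)$$

is an application of the law of total probability.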
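The steps of the proof can be checked numerically on a small joint distribution. The four probabilities below are hypothetical values chosen only so that they sum to 1; the sketch derives both conditional probabilities from the definition and confirms that Bayes' formula and the law of total probability give the same numbers.

```python
from fractions import Fraction

# Hypothetical joint distribution over the four combinations of A and B.
p_a_and_b = Fraction(3, 20)
p_a_and_not_b = Fraction(1, 4)
p_not_a_and_b = Fraction(1, 5)
p_not_a_and_not_b = Fraction(2, 5)

# Marginal probabilities
p_a = p_a_and_b + p_a_and_not_b
p_not_a = p_not_a_and_b + p_not_a_and_not_b
p_b = p_a_and_b + p_not_a_and_b

# Definition of conditional probability
p_a_given_b = p_a_and_b / p_b
p_b_given_a = p_a_and_b / p_a
p_b_given_not_a = p_not_a_and_b / p_not_a

# Right-hand side of Bayes' theorem
bayes_rhs = p_b_given_a * p_a / p_b

# Law of total probability for the partition {A, complement of A}
total_probability = p_b_given_a * p_a + p_b_given_not_a * p_not_a
```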

Interpretation

In a certain sense, Bayes' theorem allows conclusions to be reversed: one starts with a known value $P(B \mid A)$, but is actually interested in the value $P(A \mid B)$. For example, one may want to know how likely it is that someone has a certain disease if a rapid test developed for it shows a positive result. The probability that the test gives a positive result in a person who has the disease is usually known from empirical studies. The desired inversion is possible only if the prevalence of the disease is known, i.e. the (absolute) probability with which the disease occurs in the overall population (see calculation example 2).

A decision tree or a four-field table (2×2 contingency table) can aid understanding. The process is also known as backward induction.

Sometimes one encounters the fallacy of inferring $P(A \mid B)$ directly from $P(B \mid A)$ without taking the a priori probability $P(A)$ into account, for example by assuming that the two conditional probabilities must be approximately equal (see prevalence error). As Bayes' theorem shows, this is the case only if $P(A)$ and $P(B)$ are approximately equal.
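A short sketch makes the size of this error visible. The probabilities are hypothetical and chosen only for illustration: the same value $P(B \mid A) = 0.9$ is inverted once with $P(A) = P(B)$ and once with $A$ much rarer than $B$.

```python
from fractions import Fraction


def invert_conditional(p_b_given_a, p_a, p_b):
    """Bayes' theorem: P(A | B) = P(B | A) * P(A) / P(B)."""
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return p_b_given_a * p_a / p_b


P_B_GIVEN_A = Fraction(9, 10)  # hypothetical known value

# Case 1: P(A) equals P(B), so both conditional probabilities coincide.
similar = invert_conditional(P_B_GIVEN_A, Fraction(3, 10), Fraction(3, 10))

# Case 2: A is rare compared with B, so P(A | B) is far below P(B | A).
rare = invert_conditional(P_B_GIVEN_A, Fraction(1, 100), Fraction(3, 10))
```

In the second case the inverted probability is 3/100, a factor of 30 below $P(B \mid A)$, which is exactly the ratio $P(A) / P(B)$.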

It should also be noted that conditional probabilities alone are not suitable for demonstrating a specific causal relationship .

Application areas

- Statistics: all questions of learning from experience, in which an a priori probability assessment is revised on the basis of experience and converted into an a posteriori distribution (see Bayesian statistics).

- Data mining: Bayesian classifiers are theoretical decision rules with a provably minimal error rate.

- Spam detection: Bayesian filters infer from characteristic words in an e-mail (event A) that it is spam (event B).

- Artificial intelligence: Bayes' theorem is used to draw conclusions even in domains with "uncertain" knowledge. Such conclusions are not deductive and therefore not always correct; they are abductive in nature, but have proven quite useful for forming hypotheses and for learning in such systems.

- Quality management: assessing the significance of test series.

- Decision theory / information economics: determining the expected value of additional information.

- Basic model of traffic distribution.

- Bioinformatics: determining the functional similarity of sequences.

- Communication theory: solving detection and decoding problems.

- Econometrics: Bayesian econometrics.

- Neuroscience: models of perception and learning.

Calculation example 1

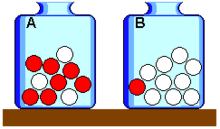

Urns $A$ and $B$ each contain ten balls. Urn $A$ holds seven red and three white balls; urn $B$ holds one red and nine white. A ball is now drawn from a randomly chosen urn. In other words, drawing from urn $A$ and drawing from urn $B$ are a priori equally likely. The result of the draw is: the ball is red. We are looking for the probability that this red ball comes from urn $A$.

Let

- $A$ be the event "The ball comes from urn $A$",

- $B$ be the event "The ball comes from urn $B$" and

- $R$ be the event "The ball is red".

Then:

$P(A) = P(B) = \frac{1}{2}$ (both urns are a priori equally probable)

$P(R \mid A) = \frac{7}{10}$ (urn $A$ contains 10 balls, 7 of them red)

$P(R \mid B) = \frac{1}{10}$ (urn $B$ contains 10 balls, 1 of them red)

$P(R) = P(R \mid A)\, P(A) + P(R \mid B)\, P(B) = \frac{7}{10} \cdot \frac{1}{2} + \frac{1}{10} \cdot \frac{1}{2} = \frac{2}{5}$ (total probability of drawing a red ball)

Hence

$$P(A \mid R) = \frac{P(R \mid A)\, P(A)}{P(R)} = \frac{\frac{7}{10} \cdot \frac{1}{2}}{\frac{2}{5}} = \frac{7}{8}.$$

The conditional probability that the drawn red ball came from urn $A$ is therefore $\frac{7}{8}$.

The result of Bayes' formula in this simple example is easy to see directly: since both urns are chosen a priori with the same probability and both contain the same number of balls, all 20 balls, and therefore all eight red balls, have the same chance of being drawn. If you repeatedly draw a ball from a random urn and put it back into the same urn, you will on average draw a red ball in eight out of 20 cases and a white ball in twelve out of 20 cases (hence the total probability of drawing a red ball is $\frac{8}{20} = \frac{2}{5}$). Of these eight red balls, on average seven come from urn $A$ and one from urn $B$. The probability that a drawn red ball came from urn $A$ is therefore $\frac{7}{8}$.
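The urn example can be reproduced both exactly and by simulating the repeated drawing described above. The following is a minimal sketch; the names `URNS`, `p_urn_given_red` and `simulate` are chosen for this illustration, and the seed is fixed only to make the run repeatable.

```python
import random
from fractions import Fraction

# Urn contents from the example: (red balls, white balls)
URNS = {"A": (7, 3), "B": (1, 9)}


def p_red(urn):
    """P(red | urn), read off the urn contents."""
    red, white = URNS[urn]
    return Fraction(red, red + white)


def p_urn_given_red(urn):
    """Exact P(urn | red) when all urns are equally likely a priori."""
    prior = Fraction(1, len(URNS))
    total_red = sum(p_red(name) * prior for name in URNS)
    return p_red(urn) * prior / total_red


def simulate(draws, seed=0):
    """Share of red draws that came from urn A in a repeated experiment."""
    rng = random.Random(seed)
    red_total = 0
    red_from_a = 0
    for _ in range(draws):
        urn = rng.choice(sorted(URNS))       # pick an urn at random
        if rng.random() < float(p_red(urn)):  # draw a ball with replacement
            red_total += 1
            if urn == "A":
                red_from_a += 1
    return red_from_a / red_total
```

Calling `p_urn_given_red("A")` yields the fraction 7/8, and `simulate(200_000)` should land close to 0.875, within ordinary sampling noise.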

Calculation example 2

A certain disease has a prevalence of 20 per 100,000 people. The event $K$ that a person carries this disease therefore has probability $P(K) = 0.0002$.

Is screening the general population, regardless of risk factors or symptoms, suitable for identifying carriers of the disease? The vast majority of those tested would belong to the complement $K^c$ of $K$, i.e. people who do not carry the disease: the probability that a person to be tested is not a carrier is $P(K^c) = 1 - P(K) = 0.9998$.

Let $T$ denote the event that a person's test comes out "positive", i.e. indicates the disease. Suppose it is known that the test detects $K$ with 95% probability (sensitivity $= P(T \mid K) = 0.95$), but sometimes also responds in healthy people, i.e. delivers a false positive result with probability $P(T \mid K^c) = 0.01$ (specificity $= 1 - P(T \mid K^c) = 0.99$).

Not only for the initial question, but in every individual case $T$, especially before the results of further examinations are available, the quantity of interest is the conditional probability $P(K \mid T)$ that a person who tested positive is a carrier of the disease, called the positive predictive value.

Calculation with Bayes' theorem

$$P(K \mid T) = \frac{P(T \mid K)\, P(K)}{P(T \mid K)\, P(K) + P(T \mid K^c)\, P(K^c)} = \frac{0.95 \cdot 0.0002}{0.95 \cdot 0.0002 + 0.01 \cdot 0.9998} = \frac{0.00019}{0.010188} \approx 0.0186.$$
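The same calculation as a small Python sketch. The inputs are the prevalence of 20 per 100,000 and the 95% sensitivity stated above, plus a false positive rate of 1%, which is the value implied by the roughly 1,000 false positives among 99,980 healthy people in the frequency calculation. The function name is chosen for this illustration.

```python
def positive_predictive_value(prevalence, sensitivity, false_positive_rate):
    """P(sick | positive test) via Bayes' theorem with a two-event partition."""
    true_positive = sensitivity * prevalence                 # P(T | K) * P(K)
    false_positive = false_positive_rate * (1 - prevalence)  # P(T | K^c) * P(K^c)
    return true_positive / (true_positive + false_positive)


ppv = positive_predictive_value(
    prevalence=0.0002,
    sensitivity=0.95,
    false_positive_rate=0.01,
)
```

Under these inputs `ppv` is about 0.0186, i.e. fewer than 2 in 100 positive results belong to actual carriers.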

Calculation using a tree diagram

Problems with few classes and simple distributions can be shown clearly in a tree diagram that splits up the frequencies. Moving from frequencies to relative frequencies or to (conditional) probabilities turns the tree diagram into an event tree, a special case of the decision tree.

According to the figures above, out of 100,000 people 20 are actually sick and 99,980 are healthy. The test correctly diagnoses the disease in 19 of the 20 sick people (95 percent sensitivity). In one case the test fails and does not indicate the disease that is present (false negative). In about 1,000 of the 99,980 healthy people, the test will falsely indicate disease. Of the total of 1,019 people who tested positive, only 19 are actually sick ($19/1019 \approx 0.0186$).
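The frequency calculation can be sketched as a four-field table of expected head counts. The 1% false positive rate is inferred from the 1,000 false positives among 99,980 healthy people; the function name and dictionary keys are chosen for this illustration, and counts are rounded to whole people as in the text.

```python
def four_field_table(population, prevalence, sensitivity, false_positive_rate):
    """Expected head counts for the four test outcomes, rounded to whole people."""
    sick = round(population * prevalence)
    healthy = population - sick
    true_positive = round(sick * sensitivity)
    false_positive = round(healthy * false_positive_rate)
    return {
        "true_positive": true_positive,
        "false_negative": sick - true_positive,
        "false_positive": false_positive,
        "true_negative": healthy - false_positive,
    }


table = four_field_table(100_000, 0.0002, 0.95, 0.01)
positives = table["true_positive"] + table["false_positive"]
share_sick = table["true_positive"] / positives  # positive predictive value
```

Working with counts rather than probabilities gives the same answer as Bayes' formula up to the rounding of the head counts.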

Meaning of the result

The price of finding 19 carriers of the disease, possibly in time for treatment or isolation, is not only the cost of 100,000 tests, but also the unnecessary anxiety and possibly treatment of 1,000 false positives. The initial question as to whether mass screening makes sense with these numerical values must therefore be answered in the negative.

The intuitive assumption that a sensitivity of 95%, impressive at first glance, means that a person who tested positive is very likely to be ill is wrong. This problem occurs whenever the actual rate at which a characteristic occurs in the population examined is small compared to the rate of false positive results.
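One way to make "small compared to the rate of false positive results" concrete is to ask at what prevalence a positive result becomes more likely right than wrong. The following worked step is a sketch under the numbers of this example: $K$ stands for "the person carries the disease", $T$ for "the test is positive", the sensitivity is $P(T \mid K) = 0.95$, and the false positive rate $P(T \mid K^c) = 0.01$ is the value implied by the roughly 1,000 false positives among 99,980 healthy people.

$$
\begin{aligned}
P(K \mid T) > \tfrac{1}{2}
&\iff P(T \mid K)\, P(K) > P(T \mid K^c)\, \bigl(1 - P(K)\bigr) \\
&\iff P(K) > \frac{P(T \mid K^c)}{P(T \mid K) + P(T \mid K^c)} = \frac{0.01}{0.96} \approx 0.0104.
\end{aligned}
$$

Under these assumptions the prevalence would have to exceed about 1%, roughly 50 times the 20 per 100,000 of the example, before a positive test indicated the disease with more than even odds.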

Without training in the interpretation of statistical statements, risks are often incorrectly assessed or communicated. The psychologist Gerd Gigerenzer speaks of innumeracy in dealing with uncertainty and calls for a broad educational campaign.

Bayesian statistics

Bayesian statistics uses Bayes' theorem in the context of inferential statistics to estimate parameters and test hypotheses.

Problem

The following situation is given: $\vartheta$ is an unknown state of nature (e.g. a parameter of a probability distribution) that is to be estimated on the basis of an observation $x$ of a random variable $X$. Furthermore, prior knowledge is given in the form of an a priori probability distribution of the unknown parameter $\vartheta$. This a priori distribution contains all the information about the state of nature that is available before the sample is observed.

Depending on the context and philosophical school, the a priori distribution is understood

- as a mathematical model of the subjective degree of belief (subjective concept of probability),

- as an adequate representation of general prior knowledge (where probabilities are understood as a natural extension of Aristotelian logic to uncertainty; see Cox's postulates),

- as the probability distribution of a genuinely random parameter, known from preliminary studies, or

- as a specifically chosen distribution that ideally represents ignorance of the parameter (objective a priori distributions, for example obtained with the maximum entropy method).

The conditional distribution of $X$ given that $\vartheta$ takes the value $\vartheta_0$ is denoted below by $P(X \mid \vartheta_0)$. This probability distribution can be determined after observing the sample and is also known as the likelihood of the parameter value $\vartheta_0$.

The posterior probability $P(\vartheta_0 \mid X = x)$ can be calculated using Bayes' theorem. In the special case of a discrete a priori distribution one obtains:

$$P(\vartheta_i \mid X = x) = \frac{P(X = x \mid \vartheta_i)\, P(\vartheta_i)}{\sum_{\vartheta \in \Theta} P(X = x \mid \vartheta)\, P(\vartheta)}.$$

If the set $\Theta$ of all possible states of nature is finite, the value of the a posteriori distribution at $\vartheta_i$ can be interpreted as the probability with which one expects the state of nature $\vartheta_i$ after observing the sample and taking the prior knowledge into account.

A follower of the subjectivist school of statistics usually uses the expected value of the a posteriori distribution, in some cases the mode, as the estimate.

Example

As in the example above, consider an urn filled with ten balls, but suppose it is now unknown how many of them are red. The number $\vartheta$ of red balls is the unknown state of nature here, and as its a priori distribution we assume that all possible values from zero to ten are equally probable, i.e. $P(\vartheta) = \frac{1}{11}$ for all $\vartheta \in \{0, \dots, 10\}$.

Now a ball is drawn from the urn five times with replacement, and $X$ denotes the random variable that counts how many of the drawn balls are red. Given $\vartheta$, $X$ is binomially distributed with parameters $n = 5$ and $p = \frac{\vartheta}{10}$, so

$$P(X = x \mid \vartheta) = \binom{5}{x} \left(\frac{\vartheta}{10}\right)^{x} \left(1 - \frac{\vartheta}{10}\right)^{5 - x}$$

for $x \in \{0, \dots, 5\}$.

For example, for $x = 2$, i.e. two of the five balls drawn were red, the following values result (rounded to three decimal places):

| $\vartheta$ | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $P(\vartheta)$ | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 | 0.091 |
| $P(\vartheta \mid X = 2)$ | 0.000 | 0.044 | 0.123 | 0.185 | 0.207 | 0.188 | 0.138 | 0.079 | 0.031 | 0.005 | 0.000 |

In contrast to the a priori distribution in the second row, in which all values of $\vartheta$ were assumed to be equally probable, the a posteriori distribution in the third row assigns the greatest probability to $\vartheta = 4$, i.e. the a posteriori mode is $\vartheta = 4$.

The expected value of the a posteriori distribution is:

$$E(\vartheta \mid X = 2) = \sum_{\vartheta = 0}^{10} \vartheta\, P(\vartheta \mid X = 2) \approx 4.287.$$
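The table and the two point estimates can be recomputed with a short Python sketch. It assumes exactly the setup of the example (ten balls, a uniform a priori distribution over 0 to 10 red balls, five draws with replacement); the function and constant names are chosen for this illustration.

```python
from fractions import Fraction
from math import comb

N_BALLS = 10  # balls in the urn
N_DRAWS = 5   # draws with replacement


def likelihood(x, theta):
    """Binomial probability of x red draws if the urn holds theta red balls."""
    p = Fraction(theta, N_BALLS)
    return comb(N_DRAWS, x) * p**x * (1 - p) ** (N_DRAWS - x)


def posterior_red_count(x):
    """A posteriori distribution of theta under a uniform a priori distribution."""
    prior = Fraction(1, N_BALLS + 1)
    joint = {t: likelihood(x, t) * prior for t in range(N_BALLS + 1)}
    evidence = sum(joint.values())  # P(X = x), law of total probability
    return {t: j / evidence for t, j in joint.items()}


post = posterior_red_count(2)
mode = max(post, key=post.get)                  # most probable theta
mean = sum(t * p for t, p in post.items())      # a posteriori expected value
rounded = [round(float(post[t]), 3) for t in range(N_BALLS + 1)]
```

Here `rounded` reproduces the third row of the table, `mode` is 4, and `float(mean)` is approximately 4.287.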


Literature

- Alan F. Chalmers: Ways of Science: An Introduction to the Philosophy of Science. 6th edition. Springer, Berlin [et al.] 2007, ISBN 3-540-49490-1, pp. 141–154, doi:10.1007/978-3-540-49491-1_13 (introduction from the perspective of the history of science).

- Sharon Bertsch McGrayne: The Theory That Didn't Want to Die. How the English pastor Thomas Bayes discovered a rule that, after 150 years of controversy, has become an indispensable part of science, technology and society. Springer, Berlin Heidelberg 2014, ISBN 978-3-642-37769-3, doi:10.1007/978-3-642-37770-9.

- F. Thomas Bruss: 250 years of 'An Essay towards solving a Problem in the Doctrine of Chance. By the late Rev. Mr. Bayes, communicated by Mr. Price, in a letter to John Canton, AMFRS'. In: Jahresbericht der Deutschen Mathematiker-Vereinigung, Springer Verlag, Vol. 115, Issue 3-4 (2013), pp. 129-133, doi:10.1365/s13291-013-0069-z.

- Wolfgang Tschirk: Statistics: Classic or Bayes. Two ways in comparison. Springer Spectrum, 2014, ISBN 978-3-642-54384-5, doi:10.1007/978-3-642-54385-2.

Web links

- Entry in Edward N. Zalta (Ed.): Stanford Encyclopedia of Philosophy.

- Rudolf Sponsel: Bayes' theorem

- Bayesian theorem of probability

- Ian Stewart: The Interrogator's Fallacy (An application example from criminology)

- Christoph Wassner, Stefan Krauss, Laura Martignon: Does Bayes' theorem have to be difficult to understand? (An article on mathematics education.)

- Calculated exercise examples

- Collection of thought traps and paradoxes by Timm Grams

- Ulrich Leuthäusser (2011): "Bayes and GAUs: Probability statements on future accidents in nuclear power plants after Fukushima, Chernobyl, Three Mile Island" (PDF; 85 kB)

References

- Gerd Gigerenzer: The basics of skepticism. Piper, Berlin 2014, ISBN 978-3-8270-7792-9 (review of the English original in NEJM).

- Bernhard Rüger (1988), p. 152 ff.