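| Binomial distribution | |
|---|---|
| Parameters | $n \in \mathbb{N}$, $p \in [0,1]$ |
| Support | $k \in \{0, 1, \dotsc, n\}$ |
| Probability function | $\binom{n}{k} p^k (1-p)^{n-k}$ |
| Distribution function | $I_{1-p}(n - \lfloor k \rfloor,\, 1 + \lfloor k \rfloor)$ |
| Expected value | $np$ |
| Median | in general no closed formula, see below |
| Mode | $\lfloor (n+1)p \rfloor$, or also $(n+1)p - 1$ if $(n+1)p$ is an integer, or $n$ if $p = 1$ |
| Variance | $np(1-p)$ |
| Skewness | $\dfrac{1-2p}{\sqrt{np(1-p)}}$ |
| Kurtosis | $3 + \dfrac{1 - 6p(1-p)}{np(1-p)}$ |
| Entropy | $\tfrac{1}{2} \log_2\bigl(2\pi e\, np(1-p)\bigr) + O\!\left(\tfrac{1}{n}\right)$ |
| Moment generating function | $\left(1 - p + p e^{t}\right)^n$ |
| Characteristic function | $\left(1 - p + p e^{it}\right)^n$ |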

The binomial distribution is one of the most important discrete probability distributions.

It describes the number of successes in a series of identical and independent trials, each of which has exactly two possible outcomes ("success" or "failure"). Such series of trials are also called Bernoulli processes.

If $p$ is the probability of success in a single trial and $n$ the number of trials, then $B(k \mid p, n)$ (also $B_{n;p}(k)$, $B(n; p; k)$ or $B(n, p, k)$) denotes the probability of achieving exactly $k$ successes (see the section Definition).

The binomial distribution and the Bernoulli experiment can be illustrated with the help of the Galton board. It is a mechanical device into which balls are dropped. Each ball then falls randomly into one of several compartments, and the distribution over the compartments corresponds to the binomial distribution. Depending on the construction, different parameters $n$ and $p$ are possible.

Although the binomial distribution was known long before, the term was first used in 1911 in a book by George Udny Yule.

Examples

The probability of rolling a number greater than 2 with a fair die is $p = \tfrac{2}{3}$; the probability that this is not the case is $q = 1 - p = \tfrac{1}{3}$. Suppose the die is rolled 10 times ($n = 10$). Then there is a small probability that a number greater than 2 is never rolled, or conversely that it is rolled every time. The probability of rolling such a number exactly $k$ times ($0 \leq k \leq 10$) is described by the binomial distribution $B(k \mid \tfrac{2}{3}, 10)$.

The process described by the binomial distribution is often illustrated by a so-called urn model. An urn contains, for example, 6 balls, 2 of them black and the others white. Reach into the urn 10 times; each time take out a ball, note its color and put the ball back. In one interpretation of this process, drawing a white ball is understood as a "positive event" with probability $p = \tfrac{2}{3}$, and drawing a non-white ball as a "negative event". The probabilities are distributed in the same way as in the previous example of rolling the die.
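The die example can be checked numerically. The following is a minimal sketch that assumes the standard binomial formula $\binom{n}{k} p^k (1-p)^{n-k}$; the helper name `binomial_pmf` is chosen here for illustration and does not come from the article.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 10, 2 / 3  # ten rolls; "success" means a number greater than 2

never = binomial_pmf(0, n, p)       # no roll shows a number greater than 2
every_time = binomial_pmf(n, n, p)  # all ten rolls show a number greater than 2

print(f"never:      {never:.6f}")
print(f"every time: {every_time:.6f}")

# Full distribution over k = 0, ..., 10
for k in range(n + 1):
    print(k, round(binomial_pmf(k, n, p), 4))
```

Both extreme outcomes are possible but unlikely; most of the probability mass sits near $np \approx 6.7$.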

Definition

Probability function, (cumulative) distribution function, properties

The discrete probability distribution with the probability function

$$B(k \mid p, n) = \binom{n}{k} p^k (1-p)^{n-k}, \qquad k = 0, 1, \dotsc, n,$$

is called the binomial distribution for the parameters $n$ (number of trials) and $p \in [0,1]$ (the success or hit probability).

The above formula can be understood as follows: among all $n$ trials we need exactly $k$ successes, each of probability $p$, and consequently exactly $n-k$ failures, each of probability $1-p$. However, the $k$ successes can occur in any of the $n$ trials, so we still have to multiply by the number $\binom{n}{k}$ of $k$-element subsets of an $n$-element set, because that is exactly the number of ways to choose the $k$ successful trials from all $n$ trials.

The probability of failure $1-p$, complementary to the probability of success, is often abbreviated as $q = 1-p$.

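As is necessary for a probability distribution, the probabilities for all possible values $k$ must add up to 1. This follows from the binomial theorem:

$$\sum_{k=0}^{n} \binom{n}{k} p^k (1-p)^{n-k} = \bigl(p + (1-p)\bigr)^n = 1^n = 1.$$

A random variable $X$ distributed according to $B(\cdot \mid p, n)$ is accordingly called binomially distributed with the parameters $n$ and $p$. Its distribution function is

$$F_X(x) = \operatorname{P}(X \leq x) = \sum_{k=0}^{\lfloor x \rfloor} \binom{n}{k} p^k (1-p)^{n-k},$$

where $\lfloor x \rfloor$ denotes the floor function.

Other common notations for the cumulative binomial distribution are $F(k \mid p, n)$, $F(n, p, k)$ and $F(n; p; k)$.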

Derivation as Laplace probability

Experimental setup: an urn contains $N$ balls, of which $M$ are black and $N-M$ are white. The probability of drawing a black ball is therefore $p = \tfrac{M}{N}$. One after the other, $n$ balls are drawn at random; each time the color is noted and the ball is put back.

We count the number of ways in which exactly $k$ black balls can be drawn, and from this obtain the so-called Laplace probability ("number of outcomes favorable for the event, divided by the total number of equally probable outcomes").

There are $N$ options for each of the $n$ draws, so overall $N^n$ options for selecting the balls. For exactly $k$ of these $n$ balls to be black, exactly $k$ of the $n$ draws must yield a black ball. There are $M$ possibilities for every black ball and $N-M$ possibilities for every white ball. The $k$ black balls can be distributed across the $n$ draws in $\binom{n}{k}$ ways, so there are

$$\binom{n}{k} M^k (N-M)^{n-k}$$

cases in which exactly $k$ black balls have been selected. The probability of finding exactly $k$ black balls among $n$ balls is therefore

$$\frac{\binom{n}{k} M^k (N-M)^{n-k}}{N^n} = \binom{n}{k} \left(\frac{M}{N}\right)^k \left(\frac{N-M}{N}\right)^{n-k} = \binom{n}{k} p^k (1-p)^{n-k}.$$
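The counting argument can be verified by brute force for a small urn. The sketch below enumerates all ordered draws with replacement and compares the count with $\binom{n}{k} M^k (N-M)^{n-k}$; the urn sizes and the function name `count_sequences` are illustrative assumptions.

```python
from itertools import product
from math import comb


def count_sequences(N: int, M: int, n: int, k: int) -> int:
    """Count ordered draws with replacement that contain exactly k black balls."""
    # Balls 0..M-1 are treated as black, balls M..N-1 as white (arbitrary labelling).
    return sum(
        1
        for draw in product(range(N), repeat=n)
        if sum(ball < M for ball in draw) == k
    )


N, M, n = 6, 2, 4  # hypothetical small urn so that full enumeration stays cheap

for k in range(n + 1):
    counted = count_sequences(N, M, n, k)
    formula = comb(n, k) * M**k * (N - M) ** (n - k)
    # Laplace probability: favorable outcomes divided by all N**n outcomes
    print(k, counted, formula, counted / N**n)
```

Enumeration grows like $N^n$, so this is only a check for tiny cases, not a way to compute the distribution.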

Properties

Symmetry

- The binomial distribution is symmetrical in the special cases $p = 0$, $p = \tfrac{1}{2}$ and $p = 1$, and asymmetrical otherwise.

- The binomial distribution has the property $B(k \mid p, n) = B(n-k \mid 1-p, n)$.

Expected value

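The binomial distribution has the expected value $\operatorname{E}(X) = np$.

Proof

The expected value is calculated directly from the definition and the binomial theorem, using $k \binom{n}{k} = n \binom{n-1}{k-1}$:

$$\operatorname{E}(X) = \sum_{k=0}^{n} k \binom{n}{k} p^k (1-p)^{n-k} = np \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1} (1-p)^{(n-1)-(k-1)} = np \bigl(p + (1-p)\bigr)^{n-1} = np.$$

Alternatively, one can use the fact that a $B(\cdot \mid p, n)$-distributed random variable $X$ can be written as a sum of $n$ independent Bernoulli-distributed random variables $X_i$ with $\operatorname{E}(X_i) = p$. By the linearity of the expected value it then follows that

$$\operatorname{E}(X) = \operatorname{E}(X_1 + \dotsb + X_n) = \operatorname{E}(X_1) + \dotsb + \operatorname{E}(X_n) = np.$$

A further proof also uses the binomial theorem. Differentiating both sides of the equation

$$(a + b)^n = \sum_{k=0}^{n} \binom{n}{k} a^k b^{n-k}$$

with respect to $a$ gives

$$n (a + b)^{n-1} = \sum_{k=0}^{n} k \binom{n}{k} a^{k-1} b^{n-k},$$

so

$$n a (a + b)^{n-1} = \sum_{k=0}^{n} k \binom{n}{k} a^{k} b^{n-k}.$$

The desired result follows with $a = p$ and $b = 1 - p$.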

Variance

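The binomial distribution has the variance $\operatorname{Var}(X) = npq$ with $q = 1 - p$.

Proof

Let $X$ be a $B(n, p)$-distributed random variable. The variance follows directly from the shift theorem $\operatorname{Var}(X) = \operatorname{E}(X^2) - (\operatorname{E}(X))^2$:

$$\operatorname{Var}(X) = \sum_{k=0}^{n} k^2 \binom{n}{k} p^k (1-p)^{n-k} - n^2 p^2 = n(n-1)p^2 + np - n^2 p^2 = np(1-p) = npq.$$

Alternatively, it follows from Bienaymé's equation for the variance of independent random variables, considering that the identical individual trials $X_i$ follow the Bernoulli distribution with $\operatorname{Var}(X_i) = p(1-p) = pq$:

$$\operatorname{Var}(X) = \operatorname{Var}(X_1 + \dotsb + X_n) = \operatorname{Var}(X_1) + \dotsb + \operatorname{Var}(X_n) = n \operatorname{Var}(X_1) = np(1-p) = npq.$$

The second equality holds because the individual trials are independent, so that the individual variables are uncorrelated.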
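As a numerical cross-check of the results $np$ and $np(1-p)$, the following sketch computes mean and variance directly from the probability function, using $\operatorname{Var}(X) = \operatorname{E}(X^2) - (\operatorname{E}(X))^2$. The parameter values and function names are arbitrary choices made for illustration.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def mean_and_variance(n: int, p: float) -> tuple[float, float]:
    """Mean and variance computed by summing over the probability function."""
    probs = [binomial_pmf(k, n, p) for k in range(n + 1)]
    mean = sum(k * w for k, w in enumerate(probs))
    second_moment = sum(k * k * w for k, w in enumerate(probs))
    return mean, second_moment - mean**2  # shift theorem


n, p = 12, 0.3  # hypothetical parameters
mean, variance = mean_and_variance(n, p)
print("mean:    ", mean, "expected", n * p)
print("variance:", variance, "expected", n * p * (1 - p))
```

The two columns agree up to floating-point rounding.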

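Coefficient of variation

From the expected value and the variance one obtains the coefficient of variation

$$\operatorname{VarK}(X) = \sqrt{\frac{1-p}{np}}.$$

Skewness

The skewness is

$$\operatorname{v}(X) = \frac{1 - 2p}{\sqrt{np(1-p)}}.$$

Kurtosis

The kurtosis can likewise be given in closed form as

$$\beta_2 = 3 + \frac{1 - 6pq}{npq}.$$

The excess is therefore

$$\gamma_2 = \frac{1 - 6pq}{npq}.$$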

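Mode

The mode, i.e. the value with the maximum probability, is $k = \lfloor np + p \rfloor$ for $p < 1$ and $k = n$ for $p = 1$. If $np + p$ is a natural number, $k = np + p - 1$ is also a mode. If the expected value is a natural number, the expected value is equal to the mode.

Proof

Let $0 < p < 1$ without loss of generality. We consider the quotient

$$\alpha_k := \frac{B(k+1 \mid p, n)}{B(k \mid p, n)} = \frac{\binom{n}{k+1} p^{k+1} (1-p)^{n-k-1}}{\binom{n}{k} p^{k} (1-p)^{n-k}} = \frac{n-k}{k+1} \cdot \frac{p}{1-p}.$$

Now $\alpha_k > 1$ if $k < np + p - 1$, and $\alpha_k < 1$ if $k > np + p - 1$. So:

$$k > (n+1)p - 1 \;\Rightarrow\; \alpha_k < 1 \;\Rightarrow\; B(k+1 \mid p, n) < B(k \mid p, n),$$

$$k = (n+1)p - 1 \;\Rightarrow\; \alpha_k = 1 \;\Rightarrow\; B(k+1 \mid p, n) = B(k \mid p, n),$$

$$k < (n+1)p - 1 \;\Rightarrow\; \alpha_k > 1 \;\Rightarrow\; B(k+1 \mid p, n) > B(k \mid p, n).$$

Only in the case $np + p - 1 \in \mathbb{N}$ does the quotient take the value 1, i.e. $B(np + p - 1 \mid p, n) = B(np + p \mid p, n)$.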
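The statement about the mode can be illustrated by simply locating the maximum of the probability function. This is a sketch under the assumption that a small relative tolerance is adequate for detecting ties; the function name `modes` is illustrative.

```python
from math import comb, floor


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def modes(n: int, p: float, rel_tol: float = 1e-12) -> list[int]:
    """All k whose probability equals the maximum (up to rounding)."""
    probs = [binomial_pmf(k, n, p) for k in range(n + 1)]
    top = max(probs)
    return [k for k, w in enumerate(probs) if abs(w - top) <= rel_tol * top]


# (n + 1) p = 3.3 is not an integer: a single mode at floor(3.3)
print(modes(10, 0.3), floor((10 + 1) * 0.3))

# (n + 1) p = 5 is an integer: two neighbouring modes, (n + 1) p - 1 and (n + 1) p
print(modes(9, 0.5))

# p = 1: all mass sits on k = n
print(modes(5, 1.0))
```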

Median

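It is not possible to give a general formula for the median of the binomial distribution. Instead, several cases have to be considered, each of which yields a suitable median $m$:

- If $np$ is a natural number, then the expected value, median and mode coincide and are equal to $np$.

- A median $m$ lies in the interval $\lfloor np \rfloor \leq m \leq \lceil np \rceil$. Here $\lfloor \cdot \rfloor$ denotes the floor function and $\lceil \cdot \rceil$ the ceiling function.

- A median $m$ cannot deviate too much from the expected value: $|m - np| \leq \min\{\ln 2, \max\{p, 1-p\}\}$.

- The median is unique and equal to $m = \operatorname{round}(np)$ if either $p \leq 1 - \ln 2$ or $p \geq \ln 2$ or $|m - np| \leq \min\{p, 1-p\}$ (unless $p = \tfrac{1}{2}$ and $n$ is odd).

- If $p = \tfrac{1}{2}$ and $n$ is odd, then every number $m$ in the interval $\tfrac{1}{2}(n-1) \leq m \leq \tfrac{1}{2}(n+1)$ is a median of the binomial distribution with parameters $n$ and $p$. If $p = \tfrac{1}{2}$ and $n$ is even, then $m = \tfrac{n}{2}$ is the unique median.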

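Cumulants

Analogously to the Bernoulli distribution, the cumulant generating function is

$$g_X(t) = n \ln\left(p e^{t} + q\right).$$

The first cumulants are therefore $\kappa_1 = np$ and $\kappa_2 = npq$, and the recursion

$$\kappa_{k+1} = pq \, \frac{d\kappa_k}{dp}$$

holds.

Characteristic function

The characteristic function has the form

$$\varphi_X(s) = \left((1-p) + p e^{is}\right)^n = \left(q + p e^{is}\right)^n.$$

Probability generating function

For the probability generating function one obtains

$$g_X(s) = \left(ps + (1-p)\right)^n.$$

Moment generating function

The moment generating function of the binomial distribution is

$$m_X(s) = \operatorname{E}\left(e^{sX}\right) = \sum_{k=0}^{n} e^{sk} \binom{n}{k} p^k (1-p)^{n-k} = \left(p e^{s} + (1-p)\right)^n.$$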

Sum of binomially distributed random variables

For the sum $Z = X + Y$ of two independent binomially distributed random variables $X$ and $Y$ with the parameters $n_1$, $p$ and $n_2$, $p$, the individual probabilities are obtained by applying the Vandermonde identity:

$$\begin{aligned} \operatorname{P}(Z = k) &= \sum_{i=0}^{k} \left[\binom{n_1}{i} p^{i} (1-p)^{n_1 - i}\right] \left[\binom{n_2}{k-i} p^{k-i} (1-p)^{n_2 - k + i}\right] \\ &= \binom{n_1 + n_2}{k} p^{k} (1-p)^{n_1 + n_2 - k} \qquad (k = 0, 1, \dotsc, n_1 + n_2), \end{aligned}$$

so $Z$ is again a binomial random variable, but with the parameters $n_1 + n_2$ and $p$. For the convolution this means

$$\operatorname{Bin}(n_1, p) * \operatorname{Bin}(n_2, p) = \operatorname{Bin}(n_1 + n_2, p).$$

The binomial distribution is therefore reproductive for fixed $p$; in other words, it forms a convolution semigroup.

If the sum $Z = X + Y$ is known, each of the random variables $X$ and $Y$ follows a hypergeometric distribution under this condition. To see this, one calculates the conditional probability:

$$\begin{aligned} \operatorname{P}(X = \ell \mid Z = k) &= \frac{\operatorname{P}(X = \ell \cap Y = k - \ell)}{\operatorname{P}(Z = k)} = \frac{\operatorname{P}(X = \ell)\, \operatorname{P}(Y = k - \ell)}{\operatorname{P}(Z = k)} \\ &= \frac{\binom{n_1}{\ell} p^{\ell} (1-p)^{n_1 - \ell} \, \binom{n_2}{k-\ell} p^{k-\ell} (1-p)^{n_2 - k + \ell}}{\binom{n_1 + n_2}{k} p^{k} (1-p)^{n_1 + n_2 - k}} = \frac{\binom{n_1}{\ell} \binom{n_2}{k-\ell}}{\binom{n_1 + n_2}{k}}. \end{aligned}$$

This is a hypergeometric distribution.

In general: if the $m$ random variables $X_i$ are stochastically independent and follow the binomial distributions $\operatorname{Bin}(n_i, p)$, then the sum $X_1 + X_2 + \dotsb + X_m$ is also binomially distributed, with the parameters $n_1 + n_2 + \dotsb + n_m$ and $p$. If one adds binomial random variables $X_1, X_2$ with $p_1 \neq p_2$, one obtains a generalized binomial distribution.
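The convolution property and the conditional hypergeometric distribution can both be checked numerically. The sketch below uses a plain double loop for the discrete convolution; the parameters $n_1 = 4$, $n_2 = 7$, $p = 0.35$ and all function names are illustrative assumptions.

```python
from math import comb


def pmf_list(n: int, p: float) -> list[float]:
    """Binomial probabilities for k = 0, ..., n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def convolve(a: list[float], b: list[float]) -> list[float]:
    """Distribution of the sum of two independent integer-valued variables."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


n1, n2, p = 4, 7, 0.35
px, py = pmf_list(n1, p), pmf_list(n2, p)

z = convolve(px, py)           # distribution of Z = X + Y
direct = pmf_list(n1 + n2, p)  # binomial with parameters n1 + n2 and p
max_diff = max(abs(u - v) for u, v in zip(z, direct))
print("largest deviation:", max_diff)

# Conditional distribution of X given Z = k versus the hypergeometric formula
k = 5
conditional = [px[l] * py[k - l] / z[k] for l in range(n1 + 1)]
hypergeometric = [comb(n1, l) * comb(n2, k - l) / comb(n1 + n2, k) for l in range(n1 + 1)]
print(conditional)
print(hypergeometric)
```

Note that the conditional probabilities no longer depend on $p$, as the cancellation in the formula above suggests.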

Relationship to other distributions

Relationship to the Bernoulli distribution

A special case of the binomial distribution for $n = 1$ is the Bernoulli distribution. The sum of independent and identically Bernoulli-distributed random variables therefore follows the binomial distribution.

Relationship to the generalized binomial distribution

The binomial distribution is a special case of the generalized binomial distribution with $p_i = p_j$ for all $i, j \in \{1, \dotsc, n\}$. More precisely, for a fixed expected value and a fixed order it is the generalized binomial distribution with maximum entropy.

Transition to normal distribution

According to the de Moivre-Laplace theorem, the binomial distribution converges to a normal distribution in the limit $n \to \infty$. That is, the normal distribution can be used as a useful approximation of the binomial distribution if the sample size is sufficiently large and the proportion of the outcome of interest is not too small. The Galton board lets one observe this approach to the normal distribution experimentally.

With $\mu = np$ and $\sigma^2 = npq$, inserting into the distribution function $\Phi$ of the standard normal distribution gives

$$B(k \mid p, n) \approx \Phi\left(\frac{k + 0.5 - np}{\sqrt{npq}}\right) - \Phi\left(\frac{k - 0.5 - np}{\sqrt{npq}}\right) \approx \frac{1}{\sqrt{npq}} \cdot \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{(k - np)^2}{2npq}\right).$$

As can be seen, the result is nothing other than the value of the normal density for $x = k$, $\mu = np$ and $\sigma^2 = npq$ (which can also be visualized as the area of the $k$-th strip of the histogram of the standardized binomial distribution, with $1/\sigma$ as its width and $\varphi\left((k - \mu)/\sigma\right)$ as its height). The normal approximation is used to determine quickly the probability over many values of the binomial distribution, especially when table values for them are not (or no longer) available.
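To get a feeling for the quality of the normal approximation, the following sketch compares the exact probability with both approximating expressions, the difference of two $\Phi$ values and the density value. It assumes $\Phi$ is evaluated through the error function, $\Phi(x) = \tfrac{1}{2}\bigl(1 + \operatorname{erf}(x/\sqrt{2})\bigr)$; the parameters are arbitrary.

```python
from math import comb, erf, exp, pi, sqrt


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def std_normal_cdf(x: float) -> float:
    """Distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def normal_approximations(k: int, n: int, p: float) -> tuple[float, float]:
    """Return (strip approximation, density approximation) for B(k | p, n)."""
    mu = n * p
    sigma = sqrt(n * p * (1 - p))
    strip = std_normal_cdf((k + 0.5 - mu) / sigma) - std_normal_cdf((k - 0.5 - mu) / sigma)
    density = exp(-((k - mu) ** 2) / (2 * sigma**2)) / (sigma * sqrt(2 * pi))
    return strip, density


n, p = 100, 0.4  # hypothetical parameters
for k in (30, 40, 50):
    exact = binomial_pmf(k, n, p)
    strip, density = normal_approximations(k, n, p)
    print(f"k={k}: exact={exact:.5f} strip={strip:.5f} density={density:.5f}")
```

Near the mean the three values agree closely; in the tails the relative error of the approximation grows.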

Transition to the Poisson distribution

An asymptotically asymmetrical binomial distribution whose expected value $np$ converges to a constant $\lambda$ for $n \to \infty$ and $p \to 0$ can be approximated by the Poisson distribution. The value $\lambda$ is then the expected value of all binomial distributions considered in the limit as well as of the resulting Poisson distribution. This approximation is also known as the Poisson approximation, Poisson's limit theorem or the law of rare events.

As a rule of thumb, this approximation is useful if $n \geq 50$ and $p \leq 0.05$.

The Poisson distribution is thus the limit distribution of the binomial distribution for large $n$ and small $p$; the convergence in question is convergence in distribution.

Relationship to the geometric distribution

The number of failures before the first success is described by the geometric distribution.

Relationship to the negative binomial distribution

The negative binomial distribution, on the other hand, describes the probability distribution of the number of trials required to achieve a specified number of successes in a Bernoulli process.

Relationship to the hypergeometric distribution

With the binomial distribution, the drawn samples are returned to the population and can therefore be drawn again later. If, in contrast, the samples are not returned to the population, the hypergeometric distribution applies. The two distributions merge into one another if the size $N$ of the population is large and the size $n$ of the sample is small. As a rule of thumb, in this case the binomial distribution can be used even without replacement instead of the mathematically more demanding hypergeometric distribution, since the two then give results that differ only insignificantly.

Relationship to the multinomial distribution

The binomial distribution is a special case of the multinomial distribution.

Relationship to the Rademacher distribution

If $Y$ is binomially distributed with the parameters $p = \tfrac{1}{2}$ and $n$, then $Y$ can be represented as a scaled sum of $n$ Rademacher-distributed random variables $X_1, \dotsc, X_n$:

$$Y = \frac{1}{2} \left(n + \sum_{i=1}^{n} X_i\right).$$

This is used in particular for the symmetric random walk on $\mathbb{Z}$.

Relationship to the Panjer distribution

The binomial distribution is a special case of the Panjer distribution, which combines the binomial distribution, the negative binomial distribution and the Poisson distribution in one class of distributions.

Relationship to beta distribution

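For many applications it is necessary to compute the distribution function

$$\sum_{i=0}^{k} B(i \mid p, n)$$

explicitly (for example in statistical tests or for confidence intervals).

The following relationship to the beta distribution helps here:

$$\sum_{i=0}^{k} \binom{n}{i} p^{i} (1-p)^{n-i} = \operatorname{Beta}(1-p;\, n-k,\, k+1).$$

For positive integer parameters $a$ and $b$:

$$\operatorname{Beta}(x;\, a,\, b) = \frac{(a+b-1)!}{(a-1)!\,(b-1)!} \int_{0}^{x} u^{a-1} (1-u)^{b-1} \, du.$$

To prove the equation

$$\sum_{i=0}^{k} \binom{n}{i} p^{i} (1-p)^{n-i} = \frac{n!}{(n-k-1)!\, k!} \int_{0}^{1-p} u^{n-k-1} (1-u)^{k} \, du,$$

one can proceed as follows:

- The left and right sides agree for $p = 0$ (both sides are equal to 1).

- The derivatives with respect to $p$ of the left and right sides agree; both are equal to $-\dfrac{n!}{(n-k-1)!\, k!}\, p^{k} (1-p)^{n-k-1}$.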
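The identity between the cumulative binomial sum and the beta integral can be checked numerically for $k < n$. The sketch below approximates the integral with a simple midpoint rule, which is an assumption made for brevity rather than a recommended quadrature method; function names and parameters are illustrative.

```python
from math import comb, factorial


def binomial_cdf(k: int, n: int, p: float) -> float:
    """Left-hand side: sum of the probability function for i = 0, ..., k."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def beta_side(k: int, n: int, p: float, steps: int = 20000) -> float:
    """Right-hand side: n!/((n-k-1)! k!) * integral_0^(1-p) u^(n-k-1) (1-u)^k du."""
    const = factorial(n) / (factorial(n - k - 1) * factorial(k))
    h = (1 - p) / steps  # width of each midpoint-rule panel
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h
        total += u ** (n - k - 1) * (1 - u) ** k
    return const * total * h


n, k, p = 10, 3, 0.3  # hypothetical values with k < n
left = binomial_cdf(k, n, p)
right = beta_side(k, n, p)
print(left, right)
```

In practice a library routine for the regularized incomplete beta function would be used instead of hand-written quadrature.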

Relationship to the beta binomial distribution

A binomial distribution whose parameter $p$ is beta-distributed is called a beta-binomial distribution. It is a mixture distribution.

Relationship to the Pólya distribution

The binomial distribution is a special case of the Pólya distribution (choose $c = 0$).

Examples

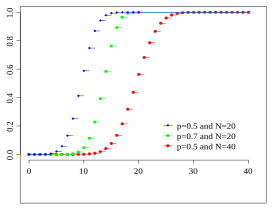

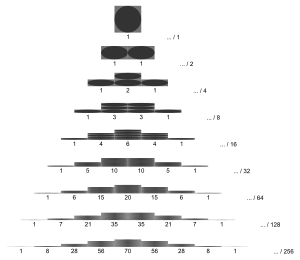

Symmetrical binomial distribution (p = 1/2)

p = 0.5 and n = 4, 16, 64

Scaling with standard deviation

This case occurs when a fair coin is tossed $n$ times (the probability of heads is the same as that of tails, i.e. equal to 1/2). The first figure shows the binomial distribution for $p = 0.5$ and for various values of $n$ as a function of $k$. These binomial distributions are mirror-symmetrical about the value $k = n/2$:

$$B(k \mid \tfrac{1}{2}; n) = B(n - k \mid \tfrac{1}{2}; n).$$

Binomial distributions with p = 0.5 (with shift by -n/2 and scaling) for n = 4, 6, 8, 12, 16, 23, 32, 46

The same data in semi-log plot

This is illustrated in the second figure. The width of the distribution grows in proportion to the standard deviation $\sigma = \tfrac{\sqrt{n}}{2}$. The function value at $k = n/2$, i.e. the maximum of the curve, decreases in proportion to $1/\sigma$.

Accordingly, binomial distributions with different $n$ can be scaled onto one another by dividing the abscissa $k - n/2$ by $\sigma$ and multiplying the ordinate by $\sigma$ (third figure above).

The adjacent graphic again shows rescaled binomial distributions, now for other values of $n$ and in a plot that makes it clearer that all function values converge towards a common curve as $n$ increases. By applying Stirling's formula to the binomial coefficients, one sees that this curve (solid black in the picture) is a Gaussian bell curve:

$$f(x) = \frac{1}{\sqrt{2\pi}} \, e^{-x^2/2}.$$

This is the probability density of the standard normal distribution $\mathcal{N}(0, 1)$. The central limit theorem generalizes this finding: sequences of other discrete probability distributions also converge to the normal distribution.

The second adjacent graphic shows the same data in a semi-logarithmic plot. This is recommended if one wants to check whether even rare events that deviate from the expected value by several standard deviations follow a binomial or normal distribution.

Drawing balls

There are 80 balls in a container, 16 of which are yellow. Five times a ball is drawn and then put back. Because of the replacement, the probability of drawing a yellow ball is the same for every draw, namely 16/80 = 1/5. The value $B(k \mid \tfrac{1}{5}; 5)$ gives the probability that exactly $k$ of the drawn balls are yellow. As an example we calculate $k = 3$:

$$B\left(3 \mid \tfrac{1}{5}; 5\right) = \binom{5}{3} \left(\frac{1}{5}\right)^{3} \left(\frac{4}{5}\right)^{2} = \frac{10 \cdot 16}{3125} = 0.0512.$$

So in about 5% of the cases exactly 3 yellow balls are drawn.

$B(k \mid 0.2;\, 5)$

| k | Probability in % |
|---|---|
| 0 | 32.768 |
| 1 | 40.96 |
| 2 | 20.48 |
| 3 | 5.12 |
| 4 | 0.64 |
| 5 | 0.032 |
| ∑ | 100 |
| Expected value | 1 |
| Variance | 0.8 |
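The values in this table can be reproduced with a few lines of code. This is a sketch using only the standard library; the variable names are illustrative.

```python
from math import comb

n, p = 5, 16 / 80  # five draws with replacement, 16 of 80 balls are yellow

# (k, probability in percent) for k = 0, ..., 5
rows = [(k, 100 * comb(n, k) * p**k * (1 - p) ** (n - k)) for k in range(n + 1)]

for k, percent in rows:
    print(f"{k}  {percent:7.3f}")

total = sum(percent for _, percent in rows)
mean = sum(k * percent for k, percent in rows) / 100
variance = sum(k * k * percent for k, percent in rows) / 100 - mean**2

print("sum:           ", round(total, 6))
print("expected value:", round(mean, 6))
print("variance:      ", round(variance, 6))
```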

Number of people with birthday on the weekend

The probability that a person's birthday falls on a weekend this year is taken to be 2/7 (for the sake of simplicity). There are 10 people in a room. The value $B(k \mid \tfrac{2}{7}; 10)$ gives (in this simplified model) the probability that exactly $k$ of those present have their birthday on a weekend this year.

$B(k \mid 2/7;\, 10)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 3.46 |
| 1 | 13.83 |
| 2 | 24.89 |
| 3 | 26.55 |
| 4 | 18.59 |
| 5 | 8.92 |
| 6 | 2.97 |
| 7 | 0.6797 |
| 8 | 0.1020 |
| 9 | 0.009063 |
| 10 | 0.0003625 |
| ∑ | 100 |
| Expected value | 2.86 |
| Variance | 2.04 |

Common birthday in the year

253 people have come together. The value $B(k \mid \tfrac{1}{365}; 253)$ gives the probability that exactly $k$ of those present have their birthday on a randomly chosen day (regardless of the year of birth).

$B(k \mid 1/365;\, 253)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 49.95 |
| 1 | 34.72 |
| 2 | 12.02 |
| 3 | 2.76 |
| 4 | 0.47 |

The probability that "anyone" among these 253 people, i.e. one or more of them, has a birthday on this day is therefore $1 - B(0 \mid \tfrac{1}{365}; 253) = 50.05\,\%$.

With 252 people the probability is $1 - B(0 \mid \tfrac{1}{365}; 252) = 49.91\,\%$. So 253 is the smallest number of people for which the probability that at least one of them has a birthday on a randomly chosen day exceeds 50% (see also the birthday paradox).

Calculating the binomial distribution directly can be difficult because of the large factorials. An approximation using the Poisson distribution is permissible here ($n > 50$, $p < 0.05$). With the parameter $\lambda = np = \tfrac{253}{365}$, the following values result:

$P_{253/365}(k)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 50 |
| 1 | 34.66 |
| 2 | 12.01 |
| 3 | 2.78 |
| 4 | 0.48 |
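The binomial values for the birthday example and their Poisson approximation can be computed side by side. In the sketch below the Poisson probability function $e^{-\lambda} \lambda^k / k!$ with $\lambda = np$ is assumed; Python's arbitrary-precision integers make the exact binomial coefficients unproblematic here.

```python
from math import comb, exp, factorial

n, p = 253, 1 / 365
lam = n * p  # Poisson parameter, equal to the binomial expected value


def binomial_percent(k: int) -> float:
    """Exact binomial probability of k birthdays on the chosen day, in percent."""
    return 100 * comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_percent(k: int) -> float:
    """Poisson approximation with parameter lam, in percent."""
    return 100 * exp(-lam) * lam**k / factorial(k)


for k in range(5):
    print(f"{k}  binomial {binomial_percent(k):6.2f}  poisson {poisson_percent(k):6.2f}")

at_least_one = 100 - binomial_percent(0)
print(f"at least one birthday: {at_least_one:.2f} %")
```

The two columns differ only in the second decimal place, which is consistent with the rule of thumb for large $n$ and small $p$.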

Confidence interval for a probability

In an opinion poll of $n$ people, $k$ people say they will vote for party A. Determine a 95% confidence interval for the unknown proportion of voters in the total electorate who vote for party A.

A solution to the problem without recourse to the normal distribution can be found in the article Confidence Interval for the Success Probability of the Binomial Distribution.

Utilization model

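The following formula gives the probability that $k$ of $n$ people simultaneously carry out an activity that takes on average $m$ minutes per hour:

$$\operatorname{P}(X = k) = \binom{n}{k} \left(\frac{m}{60}\right)^{k} \left(1 - \frac{m}{60}\right)^{n-k}.$$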
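A short sketch of the utilization model, assuming each person is busy independently with probability $m/60$ at any given moment. The scenario (20 people, 6 minutes per hour) and the function name are hypothetical.

```python
from math import comb


def simultaneous_probability(k: int, n: int, minutes_per_hour: float) -> float:
    """Probability that exactly k of n people are busy at the same moment."""
    p = minutes_per_hour / 60  # fraction of the hour each person is busy
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, m = 20, 6  # hypothetical: 20 people, each busy 6 minutes per hour

for k in range(6):
    print(k, round(simultaneous_probability(k, n, m), 4))

# Probability that more than 4 people are busy at once,
# e.g. to judge whether 4 shared devices are enough
overload = 1 - sum(simultaneous_probability(k, n, m) for k in range(5))
print("more than 4 at once:", round(overload, 4))
```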

Statistical error of the class frequency in histograms

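Representing $n$ independent measurement results in a histogram groups the measured values into classes.

The probability of $n_i$ entries in class $i$ is given by the binomial distribution

$$B_{n, p_i}(n_i) \quad \text{with} \quad n = \sum_i n_i \quad \text{and} \quad p_i = \frac{n_i}{n}.$$

The expected value and variance of $n_i$ are then

$$\operatorname{E}(n_i) = n p_i = n_i \quad \text{and} \quad \operatorname{V}(n_i) = n p_i (1 - p_i) = n_i \left(1 - \frac{n_i}{n}\right).$$

The statistical error of the number of entries in class $i$ is therefore

$$\sigma(n_i) = \sqrt{n_i \left(1 - \frac{n_i}{n}\right)}.$$

If the number of classes is large, $p_i$ becomes small and $\sigma(n_i) \approx \sqrt{n_i}$.

In this way, for example, the statistical accuracy of Monte Carlo simulations can be determined.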
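The error formula for histogram classes translates directly into code. The following sketch uses made-up class counts and compares the binomial error with the simpler $\sqrt{n_i}$ estimate.

```python
from math import sqrt


def class_errors(counts: list[int]) -> list[float]:
    """Binomial standard error sqrt(n_i * (1 - n_i / n)) for every class."""
    n = sum(counts)
    return [sqrt(c * (1 - c / n)) for c in counts]


counts = [4, 25, 50, 21]  # hypothetical histogram with n = 100 entries
errors = class_errors(counts)

for c, err in zip(counts, errors):
    # sqrt(c) is the approximation that holds when c / n is small
    print(f"n_i = {c:3d}   binomial error = {err:.3f}   sqrt(n_i) = {sqrt(c):.3f}")
```

For the small class the two estimates nearly coincide, while for the class holding half of all entries the binomial error is noticeably smaller.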

Random numbers

Random numbers for the binomial distribution are usually generated using the inversion method.

Alternatively, one can take advantage of the fact that the sum of Bernoulli-distributed random variables is binomially distributed. To do this, one generates $n$ Bernoulli-distributed random numbers and adds them up; the result is a binomially distributed random number.
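Both approaches can be sketched with Python's standard `random` module. The inversion variant below walks along the cumulative distribution until it exceeds a uniform random number; this is one simple way to realize the inversion method, not necessarily how a particular library implements it. Function names, the seed and the parameters are illustrative.

```python
import random
from math import comb


def sample_by_inversion(n: int, p: float, rng: random.Random) -> int:
    """Inversion method: smallest k whose cumulative probability exceeds u."""
    u = rng.random()
    cumulative = 0.0
    for k in range(n + 1):
        cumulative += comb(n, k) * p**k * (1 - p) ** (n - k)
        if u < cumulative:
            return k
    return n  # guard against rounding when u is extremely close to 1


def sample_by_bernoulli_sum(n: int, p: float, rng: random.Random) -> int:
    """Add up n Bernoulli(p) random numbers."""
    return sum(rng.random() < p for _ in range(n))


rng = random.Random(0)  # fixed seed so that runs are repeatable
n, p, draws = 10, 0.3, 20000

inversion = [sample_by_inversion(n, p, rng) for _ in range(draws)]
bernoulli = [sample_by_bernoulli_sum(n, p, rng) for _ in range(draws)]

print("mean (inversion):    ", sum(inversion) / draws)
print("mean (Bernoulli sum):", sum(bernoulli) / draws)
print("expected value n p:  ", n * p)
```

The Bernoulli sum needs $n$ uniform random numbers per draw, whereas inversion needs only one, at the cost of evaluating the probability function.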


References

- Peter Kissel: MAC08 Stochastics (Part 2). Studiengemeinschaft Darmstadt 2014, p. 12.

- Bigalke / Köhler: Mathematics 13.2 Basic and advanced course. Cornelsen, Berlin 2000, p. 130.

- George Udny Yule: An Introduction to the Theory of Statistics. Griffin, London 1911, p. 287.

- Peter Kissel: MAC08 Stochastics (Part 2). Studiengemeinschaft Darmstadt 2014, p. 23.

- Bigalke / Köhler: Mathematics 13.2 Basic and advanced course. Cornelsen, Berlin 2000, pp. 144 ff.

- P. Neumann: About the median of the binomial and Poisson distribution. In: Scientific journal of the Technical University of Dresden. 19, 1966, pp. 29-33.

- Nick Lord: Binomial averages when the mean is an integer. In: The Mathematical Gazette. 94, July 2010, pp. 331-332.

- R. Kaas, J. M. Buhrman: Mean, Median and Mode in Binomial Distributions. In: Statistica Neerlandica. 34, No. 1, 1980, pp. 13-18. doi:10.1111/j.1467-9574.1980.tb00681.x.

- K. Hamza: The smallest uniform upper bound on the distance between the mean and the median of the binomial and Poisson distributions. In: Statistics & Probability Letters. 23, 1995, pp. 21-25. doi:10.1016/0167-7152(94)00090-U.

- Peter Harremoës: Binomial and Poisson Distributions as Maximum Entropy Distributions. In: IEEE Information Theory Society (Ed.): IEEE Transactions on Information Theory. 47, 2001, pp. 2039-2041. doi:10.1109/18.930936.

- M. Brokate, N. Henze, F. Hettlich, A. Meister, G. Schranz-Kirlinger, Th. Sonar: Basic knowledge of mathematics studies: higher analysis, numerics and stochastics. Springer-Verlag, 2015, p. 890.

- Note: In the concrete case one has to calculate $\left(\tfrac{364}{365}\right)^{253}$ for the binomial distribution and $e^{-253/365}$ for the Poisson distribution. Both are easy with a calculator. When calculating with paper and pencil, one needs 8 or 9 terms of the exponential series for the value of the Poisson distribution, while for the binomial distribution one obtains the 256th power by repeated squaring and then divides by the third power.
