In probability theory, a characteristic function is a complex-valued function assigned to a finite measure on the real numbers or, more specifically, to a probability measure or to the distribution of a random variable. A finite measure is uniquely determined by its characteristic function and vice versa, so the assignment is bijective.

An essential benefit of characteristic functions is that many properties of a finite measure that are hard to access directly reappear as properties of its characteristic function, where they are easier to handle. For example, the convolution of probability measures reduces to the multiplication of the corresponding characteristic functions.

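Definition

Let $\mu$ be a finite measure on $(\mathbb{R}, \mathcal{B}(\mathbb{R}))$. Then the complex-valued function

$$\varphi_\mu \colon \mathbb{R} \to \mathbb{C}$$

defined by

$$\varphi_\mu(t) := \int_{\mathbb{R}} e^{itx} \, \mu(\mathrm{d}x)$$

is called the characteristic function of $\mu$. If $\mu = P$ is a probability measure, the definition is analogous. If a random variable $X$ with distribution $P_X$ is given, the characteristic function is given by

$$\varphi_X(t) := \operatorname{E}\left(e^{itX}\right)$$

with the expected value $\operatorname{E}$.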

This results in the following important special cases:

- If $X$ has a probability density function $f_X$ (with respect to the Riemann integral), then the characteristic function is given as

  $$\varphi_X(t) = \int_{-\infty}^{\infty} f_X(x) \, e^{itx} \, \mathrm{d}x.$$

- If $X$ has a probability function $p$ on the values $x_k$, then the characteristic function is given as

  $$\varphi_X(t) = \sum_{k} e^{itx_k} \, p(x_k).$$

In both cases, the characteristic function is the (continuous or discrete) Fourier transform of the density or probability function.

Elementary examples

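If $X$ is Poisson distributed with parameter $\lambda > 0$, then $X$ has the probability function

$$p_\lambda(k) = \frac{\lambda^k}{k!} \, e^{-\lambda}, \quad k \in \mathbb{N}_0.$$

The above representation of the characteristic function in terms of probability functions then gives

$$\varphi_X(t) = \sum_{k=0}^{\infty} e^{itk} \, \frac{\lambda^k}{k!} \, e^{-\lambda} = e^{-\lambda} \sum_{k=0}^{\infty} \frac{\left(\lambda e^{it}\right)^k}{k!} = e^{\lambda \left(e^{it} - 1\right)}.$$

If $X$ is exponentially distributed with parameter $\lambda > 0$, then $X$ has the probability density function

$$f_\lambda(x) = \begin{cases} \lambda e^{-\lambda x} & x \geq 0 \\ 0 & x < 0. \end{cases}$$

This results in

$$\varphi_X(t) = \int_0^{\infty} e^{itx} \, \lambda e^{-\lambda x} \, \mathrm{d}x = \lambda \int_0^{\infty} e^{x(it - \lambda)} \, \mathrm{d}x = \frac{\lambda}{\lambda - it}.$$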
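The two closed forms for the Poisson and the exponential distribution can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names, the truncation at 100 terms, and the integration cutoff are illustrative assumptions and not part of the article.

```python
import cmath
import math


def poisson_cf_series(t, lam, terms=100):
    """Truncated sum of e^{itk} * P(X = k) for X ~ Poisson(lam)."""
    total = 0j
    pmf = math.exp(-lam)  # P(X = 0)
    for k in range(terms):
        total += cmath.exp(1j * t * k) * pmf
        pmf *= lam / (k + 1)  # P(X = k + 1) computed from P(X = k)
    return total


def poisson_cf_closed(t, lam):
    """Closed form exp(lam * (e^{it} - 1))."""
    return cmath.exp(lam * (cmath.exp(1j * t) - 1))


def exponential_cf_quadrature(t, lam, upper=60.0, steps=200_000):
    """Midpoint rule for the integral of e^{itx} * lam * e^{-lam x} over [0, upper]."""
    h = upper / steps
    total = 0j
    for j in range(steps):
        x = (j + 0.5) * h
        total += cmath.exp((1j * t - lam) * x)
    return lam * h * total


def exponential_cf_closed(t, lam):
    """Closed form lam / (lam - it)."""
    return lam / (lam - 1j * t)
```

For moderate parameters such as `lam = 2.5` and `t = 1.3`, the series and the quadrature should agree with the closed forms up to truncation and discretization error.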

Further examples of characteristic functions are tabulated below and can also be found in the articles on the corresponding probability distributions.

Properties as a function

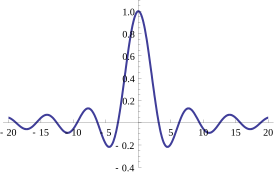

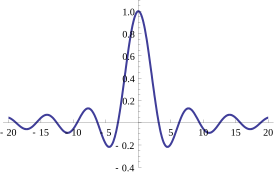

The characteristic function of a random variable that is continuously uniformly distributed over (-1,1). In general, however, characteristic functions are not real-valued.

Existence

The characteristic function exists for every finite measure, and thus also for every probability measure and every distribution of a random variable, because $\left|e^{itx}\right| = 1$ and therefore the defining integral always exists.

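Boundedness

Every characteristic function is bounded; for a random variable $X$,

$$\left|\varphi_X(t)\right| \leq \varphi_X(0) = 1.$$

In the general case of a finite measure $\mu$ on $\mathbb{R}$,

$$\left|\varphi_\mu(t)\right| \leq \varphi_\mu(0) = \mu(\mathbb{R}).$$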

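Symmetry

The characteristic function $\varphi_X$ is real-valued if and only if the random variable $X$ is symmetric, that is, if $X$ and $-X$ have the same distribution.

Furthermore, $\varphi_X$ is always Hermitian, that is,

$$\varphi_X(-t) = \overline{\varphi_X(t)}.$$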
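The boundedness and symmetry properties can be illustrated on a grid of arguments. The sketch below uses the exponential distribution (not symmetric) and the uniform distribution on (-1, 1) (symmetric); the grid, the rate `lam = 2.0`, and all names are assumptions chosen only for illustration.

```python
import math


def exponential_cf(t, lam=2.0):
    """Characteristic function lam / (lam - it) of an exponential distribution."""
    return lam / (lam - 1j * t)


def uniform_cf(t):
    """Characteristic function sin(t) / t of the uniform distribution on (-1, 1)."""
    return 1.0 if t == 0 else math.sin(t) / t


grid = [k / 10 for k in range(-100, 101)]

# Boundedness: the modulus never exceeds the value at t = 0, which is 1.
max_modulus = max(abs(exponential_cf(t)) for t in grid)

# Hermitian symmetry: phi(-t) equals the complex conjugate of phi(t).
hermitian_gap = max(
    abs(exponential_cf(-t) - exponential_cf(t).conjugate()) for t in grid
)

# The exponential distribution is not symmetric, so its characteristic
# function has a non-vanishing imaginary part; uniform_cf is real by construction.
max_imaginary_part = max(abs(exponential_cf(t).imag) for t in grid)
```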

Uniform continuity

The map $t \mapsto \varphi_X(t)$ is a uniformly continuous function.

Characterization

Of particular interest is the question of when a function is the characteristic function of a probability measure. Pólya's theorem (after George Pólya) provides a sufficient condition: if a function

$$f \colon \mathbb{R} \to [0,1]$$

is continuous and even with $f(0) = 1$ and, in addition, convex on $[0, \infty)$, then it is the characteristic function of a probability measure.

Bochner's theorem (after Salomon Bochner) provides a necessary and sufficient condition:

A continuous function

$$f \colon \mathbb{R} \to \mathbb{C}$$

is the characteristic function of a probability measure if and only if $f$ is a positive semidefinite function and $f(0) = 1$ holds.
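Positive semidefiniteness means that the quadratic form built from the values $f(t_j - t_k)$ is never negative. As a hedged illustration, the sketch below evaluates this form for the characteristic function $e^{-|t|}$ of the standard Cauchy distribution at a handful of arbitrarily chosen points and complex coefficients. A single evaluation is only a spot check, not a proof, and all names are hypothetical.

```python
import math


def cauchy_cf(t):
    """Characteristic function exp(-|t|) of the standard Cauchy distribution."""
    return math.exp(-abs(t))


def quadratic_form(f, points, coeffs):
    """Sum over j, k of coeffs[j] * conj(coeffs[k]) * f(points[j] - points[k])."""
    return sum(
        a_j * a_k.conjugate() * f(t_j - t_k)
        for t_j, a_j in zip(points, coeffs)
        for t_k, a_k in zip(points, coeffs)
    )


# Arbitrary test points and complex coefficients (illustrative values only).
points = [-2.0, -0.5, 0.0, 0.3, 1.7]
coeffs = [1 + 2j, -3j, 0.5 + 0j, -1 + 1j, 2 - 0.5j]

value = quadratic_form(cauchy_cf, points, coeffs)
```

The result should be real up to rounding and non-negative, in line with Bochner's condition.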

Other properties

Linear transformation

$$\varphi_{aX+b}(t) = e^{itb} \, \varphi_X(at)$$

for all $a, b \in \mathbb{R}$.
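The linear transformation rule can be verified directly for a random variable with finitely many values. The following sketch assumes a small, made-up probability function stored as a dictionary; the values of `a`, `b`, and `t` are arbitrary.

```python
import cmath


def cf_from_pmf(pmf, t):
    """Characteristic function of a discrete distribution given as {value: probability}."""
    return sum(p * cmath.exp(1j * t * x) for x, p in pmf.items())


# Hypothetical distribution of X and the transformed variable Y = a * X + b.
pmf_x = {-1.0: 0.2, 0.0: 0.5, 2.0: 0.3}
a, b = 3.0, -1.5
pmf_y = {a * x + b: p for x, p in pmf_x.items()}

t = 0.8
lhs = cf_from_pmf(pmf_y, t)                               # phi_{aX+b}(t)
rhs = cmath.exp(1j * t * b) * cf_from_pmf(pmf_x, a * t)   # e^{itb} * phi_X(at)
```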

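Inversion

If $\varphi_X$ is integrable, then the probability density of $X$ can be reconstructed as

$$f_X(x) = \frac{1}{2\pi} \int_{-\infty}^{\infty} e^{-itx} \, \varphi_X(t) \, \mathrm{d}t.$$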
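As a rough numerical illustration of the inversion, the density of the standard normal distribution can be recovered from its characteristic function $e^{-t^2/2}$ by approximating the integral $\frac{1}{2\pi}\int e^{-itx}\varphi_X(t)\,\mathrm{d}t$ with a midpoint rule. The cutoff `t_max` and the step count are assumptions that work for this rapidly decaying example and would need adjusting for other distributions.

```python
import cmath
import math


def density_from_cf(cf, x, t_max=12.0, steps=20_000):
    """Approximate (1 / 2 pi) * integral of e^{-itx} * cf(t) over [-t_max, t_max]."""
    h = 2 * t_max / steps
    total = 0j
    for j in range(steps):
        t = -t_max + (j + 0.5) * h
        total += cmath.exp(-1j * t * x) * cf(t)
    return (h * total / (2 * math.pi)).real


def standard_normal_cf(t):
    """Characteristic function exp(-t^2 / 2) of the standard normal distribution."""
    return math.exp(-t * t / 2)


def standard_normal_density(x):
    """Density of the standard normal distribution, used here only for comparison."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)
```

Calling `density_from_cf(standard_normal_cf, 0.7)` should reproduce `standard_normal_density(0.7)` to high accuracy.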

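Moment generation

$$\operatorname{E}\left(X^k\right) = \frac{\varphi_X^{(k)}(0)}{i^k}$$

for all natural numbers $k$, if $\operatorname{E}\left(|X|^k\right) < \infty$.

In particular, this gives the special cases

$$\operatorname{E}(X) = \frac{\varphi_X'(0)}{i}, \qquad \operatorname{E}\left(X^2\right) = -\varphi_X''(0).$$

If the expected value $\operatorname{E}\left(|X|^n\right)$ is finite for a natural number $n$, then $\varphi_X$ is $n$ times continuously differentiable and can be expanded into a Taylor series around $0$:

$$\varphi_X(t) = \sum_{k=0}^{n} \frac{\varphi_X^{(k)}(0)}{k!} \, t^k + R_n(t) = \sum_{k=0}^{n} \frac{(it)^k}{k!} \operatorname{E}\left(X^k\right) + R_n(t), \qquad \lim_{t \to 0} \frac{R_n(t)}{t^n} = 0.$$

An important special case is the expansion for a random variable $X$ with $\operatorname{E}(X) = 0$ and $\operatorname{Var}(X) = 1$:

$$\varphi_X(t) = 1 - \frac{t^2}{2} + R_2(t), \qquad \lim_{t \to 0} \frac{R_2(t)}{t^2} = 0.$$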
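The relation between derivatives at zero and moments can be sketched with finite differences. The example below assumes the Poisson characteristic function $e^{\lambda(e^{it}-1)}$ and approximates $\varphi'(0)$ and $\varphi''(0)$ by central differences; the step size `h` is an illustrative choice that trades truncation error against rounding error.

```python
import cmath


def poisson_cf(t, lam):
    """Characteristic function exp(lam * (e^{it} - 1)) of a Poisson distribution."""
    return cmath.exp(lam * (cmath.exp(1j * t) - 1))


def first_two_moments(cf, h=1e-3):
    """Approximate E(X) = phi'(0) / i and E(X^2) = -phi''(0) by central differences."""
    first_derivative = (cf(h) - cf(-h)) / (2 * h)
    second_derivative = (cf(h) - 2 * cf(0.0) + cf(-h)) / (h * h)
    mean = (first_derivative / 1j).real
    second_moment = (-second_derivative).real
    return mean, second_moment


lam = 2.5
mean, second_moment = first_two_moments(lambda t: poisson_cf(t, lam))
```

For a Poisson distribution the exact values are $\operatorname{E}(X) = \lambda$ and $\operatorname{E}(X^2) = \lambda + \lambda^2$, which the approximations should match closely.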

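Convolution formula for densities

For independent random variables $X_1$ and $X_2$, the characteristic function of the sum $Y = X_1 + X_2$ satisfies

$$\varphi_Y(t) = \varphi_{X_1}(t) \, \varphi_{X_2}(t),$$

because, by independence,

$$\varphi_Y(t) = \operatorname{E}\left(e^{it(X_1 + X_2)}\right) = \operatorname{E}\left(e^{itX_1} e^{itX_2}\right) = \operatorname{E}\left(e^{itX_1}\right) \operatorname{E}\left(e^{itX_2}\right) = \varphi_{X_1}(t) \, \varphi_{X_2}(t).$$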
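For discrete distributions the product rule can be checked by convolving two probability functions explicitly and comparing characteristic functions. The sketch below uses a fair die and a biased coin as made-up examples; the helper names are hypothetical.

```python
import cmath


def cf_from_pmf(pmf, t):
    """Characteristic function of a discrete distribution given as {value: probability}."""
    return sum(p * cmath.exp(1j * t * x) for x, p in pmf.items())


def convolve(pmf_a, pmf_b):
    """Distribution of the sum of two independent discrete random variables."""
    result = {}
    for x_a, p_a in pmf_a.items():
        for x_b, p_b in pmf_b.items():
            result[x_a + x_b] = result.get(x_a + x_b, 0.0) + p_a * p_b
    return result


die = {k: 1 / 6 for k in range(1, 7)}   # fair six-sided die
coin = {0: 0.3, 1: 0.7}                 # biased coin, illustrative probabilities
total = convolve(die, coin)

# Largest deviation between phi_Y(t) and phi_{X1}(t) * phi_{X2}(t) on a small grid.
max_gap = max(
    abs(cf_from_pmf(total, t) - cf_from_pmf(die, t) * cf_from_pmf(coin, t))
    for t in (-2.0, -0.4, 0.0, 0.9, 3.1)
)
```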

Characteristic function of random sums

If $(X_i)_{i \in \mathbb{N}}$ are independent, identically distributed random variables and $N$ is an $\mathbb{N}_0$-valued random variable that is independent of all $X_i$, then the characteristic function of the random variable

$$S := \sum_{i=1}^{N} X_i$$

can be written as the composition of the probability-generating function $m_N$ of $N$ and the characteristic function of $X_1$:

$$\varphi_S(t) = m_N\left(\varphi_{X_1}(t)\right).$$
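A simple instance of the random-sum formula is a Poisson number of Bernoulli variables. In this sketch, $N$ is assumed to be Poisson distributed with parameter `lam` and the summands are Bernoulli with success probability `p`; the sum is then Poisson distributed with parameter `lam * p` (Poisson thinning), which gives a closed form to compare against. All names and parameter values are illustrative.

```python
import cmath


def poisson_pgf(s, lam):
    """Probability-generating function E(s^N) = exp(lam * (s - 1)) of N ~ Poisson(lam)."""
    return cmath.exp(lam * (s - 1))


def bernoulli_cf(t, p):
    """Characteristic function 1 - p + p * e^{it} of a Bernoulli(p) variable."""
    return 1 - p + p * cmath.exp(1j * t)


def random_sum_cf(t, lam, p):
    """Composition m_N(phi_{X_1}(t)) for the random sum S = X_1 + ... + X_N."""
    return poisson_pgf(bernoulli_cf(t, p), lam)


def poisson_cf(t, lam):
    """Characteristic function exp(lam * (e^{it} - 1)) of a Poisson distribution."""
    return cmath.exp(lam * (cmath.exp(1j * t) - 1))
```

With `lam = 4.0` and `p = 0.25`, `random_sum_cf(t, 4.0, 0.25)` should coincide with `poisson_cf(t, 1.0)` for every `t`.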

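Uniqueness theorem

The following uniqueness theorem holds: if $X$ and $Y$ are random variables and $\varphi_X(t) = \varphi_Y(t)$ holds for all $t \in \mathbb{R}$, then $X \stackrel{d}{=} Y$, i.e. $X$ and $Y$ have the same distribution function. Together with the convolution formula, this makes it easy to determine the convolution of some distributions.

From the uniqueness theorem, Lévy's continuity theorem can be deduced: if $(X_n)_{n \in \mathbb{N}}$ is a sequence of random variables and $X$ is a random variable, then $X_n \xrightarrow{d} X$ (convergence in distribution) holds if and only if $\lim_{n \to \infty} \varphi_{X_n}(t) = \varphi_X(t)$ holds for all $t \in \mathbb{R}$. This property can be used to prove central limit theorems.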
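The use of the continuity theorem for central limit theorems can be illustrated with random signs. Assume $X_1, X_2, \dots$ are independent with values $+1$ and $-1$, each with probability $1/2$, so that each has characteristic function $\cos t$, mean 0 and variance 1. By the product and scaling rules, the standardized sum $(X_1 + \dots + X_n)/\sqrt{n}$ has characteristic function $\cos(t/\sqrt{n})^n$, which should approach $e^{-t^2/2}$. The chosen `t` and values of `n` are arbitrary.

```python
import math


def standardized_sum_cf(t, n):
    """Characteristic function of (X_1 + ... + X_n) / sqrt(n) for independent random signs."""
    return math.cos(t / math.sqrt(n)) ** n


def standard_normal_cf(t):
    """Characteristic function exp(-t^2 / 2) of the standard normal distribution."""
    return math.exp(-t * t / 2)


t = 1.5
errors = [
    abs(standardized_sum_cf(t, n) - standard_normal_cf(t))
    for n in (10, 100, 1000, 10000)
]
```

The entries of `errors` should shrink as `n` grows, consistent with pointwise convergence of the characteristic functions.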

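Examples

| Distribution | Characteristic function $\varphi_X(t)$ |
| --- | --- |
| Discrete distributions | |
| Binomial distribution, $X \sim \operatorname{Bin}(n, p)$ | $\left(p e^{it} + 1 - p\right)^n$ |
| Poisson distribution, $X \sim \operatorname{Poi}(\lambda)$ | $e^{\lambda\left(e^{it} - 1\right)}$ |
| Negative binomial distribution, $X \sim \operatorname{NegBin}(r, p)$ (number of failures before the $r$-th success) | $\left(\dfrac{p}{1 - (1 - p) e^{it}}\right)^r$ |
| Absolutely continuous distributions | |
| Standard normal distribution, $X \sim \mathcal{N}(0, 1)$ | $e^{-t^2/2}$ |
| Normal distribution, $X \sim \mathcal{N}(\mu, \sigma^2)$ | $e^{it\mu - \sigma^2 t^2/2}$ |
| Continuous uniform distribution, $X \sim \mathcal{U}(a, b)$ | $\dfrac{e^{itb} - e^{ita}}{it(b - a)}$ for $t \neq 0$ |
| Standard Cauchy distribution | $e^{-\lvert t \rvert}$ |
| Gamma distribution with shape $p$ and rate $b$ | $\left(\dfrac{b}{b - it}\right)^p$ |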

More general definitions

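Definition for multidimensional random variables

The characteristic function can be extended to $\ell$-dimensional real random vectors $\mathbf{X} = (X_1, \dots, X_\ell)$ as follows:

$$\varphi_{\mathbf{X}}(t) = \varphi_{\mathbf{X}}(t_1, \dots, t_\ell) = \operatorname{E}\left(e^{i \langle t, \mathbf{X} \rangle}\right) = \operatorname{E}\left(\prod_{j=1}^{\ell} e^{i t_j X_j}\right),$$

where $\langle t, \mathbf{X} \rangle = \sum_{j=1}^{\ell} t_j X_j$ denotes the standard scalar product.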
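For a random vector with finitely many values, the multidimensional definition becomes a finite sum. The sketch below assumes a made-up joint probability function on pairs and checks that setting the second argument to zero recovers the characteristic function of the first component.

```python
import cmath


def cf_joint(pmf, t):
    """E exp(i <t, X>) for a random vector given as {tuple of values: probability}."""
    return sum(
        p * cmath.exp(1j * sum(t_j * x_j for t_j, x_j in zip(t, x)))
        for x, p in pmf.items()
    )


def cf_scalar(pmf, t):
    """Characteristic function of a real random variable given as {value: probability}."""
    return sum(p * cmath.exp(1j * t * x) for x, p in pmf.items())


# Hypothetical joint distribution of (X_1, X_2) and the marginal of X_1.
joint = {(0, 0): 0.1, (0, 1): 0.2, (1, 0): 0.3, (1, 1): 0.4}
marginal_first = {0: 0.3, 1: 0.7}

gap = abs(cf_joint(joint, (0.6, 0.0)) - cf_scalar(marginal_first, 0.6))
```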

Definition for nuclear spaces

The concept of a characteristic function also exists for nuclear spaces. A function $\varphi \colon N \to \mathbb{C}$, defined on the nuclear space $N$, is called a characteristic function if the following properties hold:

- $\varphi$ is continuous.

- $\varphi$ is positive definite, i.e. for every choice of $x_1, \dots, x_n \in N$ and $\alpha_1, \dots, \alpha_n \in \mathbb{C}$,

  $$\sum_{j,k=1}^{n} \alpha_j \overline{\alpha_k} \, \varphi(x_j - x_k) \geq 0.$$

- $\varphi$ is normalized, i.e. $\varphi(0) = 1$.

In this case, the Bochner-Minlos theorem says that $\varphi$ induces a probability measure on the topological dual space $N'$.

For random measures

The characteristic function can also be defined for random measures. However, it is then a functional, so its arguments are functions. If $X$ is a random measure, the characteristic function is given as

$$\varphi_X(f) = \operatorname{E}\left(\exp\left(i \int f(x) \, X(\mathrm{d}x)\right)\right)$$

for all bounded, measurable real-valued functions $f$ with compact support. The random measure is uniquely determined by the values of the characteristic function on all positive continuous functions with compact support.

Relationship to other generating functions

In addition to the characteristic functions, the probability-generating functions and the moment-generating functions also play an important role in probability theory.

The probability-generating function of an $\mathbb{N}_0$-valued random variable $X$ is defined as $m_X(t) = \operatorname{E}\left(t^X\right)$. Accordingly, the relationship $m_X\left(e^{it}\right) = \varphi_X(t)$ holds.

The moment-generating function of a random variable $X$ is defined as $M_X(t) := \operatorname{E}\left(e^{tX}\right)$. Accordingly, the relationship $M_X(it) = \varphi_X(t)$ holds if the moment-generating function exists. In contrast to the characteristic function, this is not always the case.

There is also the cumulant-generating function, defined as the logarithm of the moment-generating function. The term cumulant is derived from it.
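The relationships between the generating functions can be checked for a binomial distribution, whose probability-generating function $(1 - p + ps)^n$ and moment-generating function $(1 - p + pe^{z})^n$ are known in closed form. The sketch below evaluates both at complex arguments and compares them with the characteristic function computed directly from the probability function; the parameter values are arbitrary.

```python
import cmath
import math


def binomial_cf(t, n, p):
    """Characteristic function as the sum of e^{itk} * P(X = k) for X ~ Bin(n, p)."""
    return sum(
        math.comb(n, k) * p ** k * (1 - p) ** (n - k) * cmath.exp(1j * t * k)
        for k in range(n + 1)
    )


def binomial_pgf(s, n, p):
    """Probability-generating function (1 - p + p * s)^n; s may be complex."""
    return (1 - p + p * s) ** n


def binomial_mgf(z, n, p):
    """Moment-generating function (1 - p + p * e^z)^n; z may be complex."""
    return (1 - p + p * cmath.exp(z)) ** n


t, n, p = 1.1, 7, 0.35
via_pgf = binomial_pgf(cmath.exp(1j * t), n, p)   # m_X(e^{it})
via_mgf = binomial_mgf(1j * t, n, p)              # M_X(it)
direct = binomial_cf(t, n, p)                     # phi_X(t)
```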

References

- Achim Klenke: Wahrscheinlichkeitstheorie. 3rd edition. Springer-Verlag, Berlin Heidelberg 2013, ISBN 978-3-642-36017-6, p. 553, doi:10.1007/978-3-642-36018-3.
