Probability function

A probability function, also called a counting density, is a special real-valued function in stochastics. Probability functions are used to construct and study probability distributions, more precisely discrete probability distributions. Every discrete probability distribution can be assigned a unique probability function. Conversely, every probability function defines a uniquely determined discrete probability distribution.

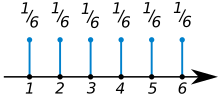

In most cases, probability functions are defined on the natural numbers. Each number is then assigned the probability with which that number occurs. For example, when modeling a fair die, the probability function would assign the value $\tfrac{1}{6}$ to each of the numbers one to six and zero to all other numbers.
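As a minimal sketch of the fair-die example, the following Python function (the name `fair_die_pmf` is hypothetical, chosen for illustration) returns the probability of each natural number. Exact fractions are used so that the values add up to exactly one, without floating-point rounding.

```python
from fractions import Fraction

def fair_die_pmf(i: int) -> Fraction:
    """Probability function of a fair six-sided die on the natural numbers."""
    # Each face 1..6 gets probability 1/6; every other natural number gets 0.
    return Fraction(1, 6) if 1 <= i <= 6 else Fraction(0)

# The values over 0..6 already contain all non-zero values, and they sum to one.
total = sum(fair_die_pmf(i) for i in range(0, 7))
print(total)                               # 1
print(fair_die_pmf(3), fair_die_pmf(7))    # 1/6 0
```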

From the point of view of measure theory, probability functions are special densities (in the sense of measure theory) with respect to the counting measure. In a more general context, they are also called weight functions.

Definition

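Definition of the probability function: For a discrete random variable $X$ taking the values $x_1, x_2, \dots$, the probability function $f_X$ of $X$ is defined for $x \in \mathbb{R}$ by

$$f_X(x) = \begin{cases} P(X = x_i), & \text{if } x = x_i \text{ for some } i, \\ 0, & \text{otherwise.} \end{cases}$$

Here “otherwise” is short for “for all other $x$”.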

For the construction of probability distributions

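Given a function

- $f \colon \mathbb{N} \to [0,1]$

(here $\mathbb{N} = \{0, 1, 2, \dots\}$ includes zero) with the following properties:

- $f(i) \in [0,1]$ for all $i \in \mathbb{N}$, i.e. the function assigns a real number between zero and one to every natural number.

- $f$ is normalized in the sense that its values add up to one, that is, $\sum_{i \in \mathbb{N}} f(i) = 1$.

Then $f$ is called a probability function, and

- $P(\{i\}) = f(i)$ for all $i \in \mathbb{N}$

defines a uniquely determined probability distribution $P$ on the natural numbers, equipped with the power set $\mathcal{P}(\mathbb{N})$ as the event system. By countable additivity, $P(A) = \sum_{i \in A} f(i)$ for every event $A \subseteq \mathbb{N}$.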
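The construction can be sketched in Python under one simplifying assumption: the probability function has finite support, so it can be stored as a dictionary of its non-zero values (all other natural numbers implicitly get zero). The helper names `check_probability_function` and `probability` are hypothetical. The probability of an event is obtained by summing $f(i)$ over the event, which is what $P(\{i\}) = f(i)$ together with countable additivity yields.

```python
from fractions import Fraction
from typing import Iterable, Mapping

def check_probability_function(f: Mapping[int, Fraction]) -> None:
    """Check the two defining conditions for a probability function with finite support.

    `f` lists the non-zero values; natural numbers missing from `f` implicitly get 0.
    """
    for i, value in f.items():
        if i < 0:
            raise ValueError(f"{i} is not a natural number")
        if not 0 <= value <= 1:
            raise ValueError(f"f({i}) = {value} is not in [0, 1]")
    if sum(f.values()) != 1:
        raise ValueError("values do not add up to one")

def probability(f: Mapping[int, Fraction], event: Iterable[int]) -> Fraction:
    """P(A) = sum of f(i) over i in A, for the distribution with P({i}) = f(i)."""
    return sum((f.get(i, Fraction(0)) for i in set(event)), Fraction(0))

die = {i: Fraction(1, 6) for i in range(1, 7)}
check_probability_function(die)
print(probability(die, {2, 4, 6}))      # 1/2
print(probability(die, range(0, 100)))  # 1
```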

Derived from probability distributions

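Let $P$ be a probability distribution on the natural numbers, equipped with $\mathcal{P}(\mathbb{N})$, and let $X$ be a random variable with values in $\mathbb{N}$. Then

$$f_P \colon \mathbb{N} \to [0,1], \quad f_P(i) = P(\{i\}),$$

is called the probability function of $P$. Analogously,

$$f_X \colon \mathbb{N} \to [0,1], \quad f_X(i) = P(X = i),$$

is called the probability function of $X$.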
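To illustrate how $f_X$ is derived from a random variable, the sketch below assumes a concrete probability space (two fair dice with 36 equally likely outcomes) and takes $X$ to be the sum of the two dice. Then $f_X(i) = P(X = i)$ is obtained by adding up the probabilities of all outcomes that $X$ maps to $i$. The space and all names are illustrative assumptions.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Assumed underlying probability space: two fair dice, 36 equally likely outcomes.
omega = list(product(range(1, 7), repeat=2))
p_outcome = Fraction(1, len(omega))

def X(outcome):
    """Random variable: the sum of the two dice, a natural number."""
    return outcome[0] + outcome[1]

# f_X(i) = P(X = i): accumulate the probability of every outcome mapped to i.
f_X = defaultdict(Fraction)  # Fraction() is 0, so unseen values default to 0
for outcome in omega:
    f_X[X(outcome)] += p_outcome

print(f_X[7])   # 1/6
print(f_X[2])   # 1/36
print(f_X[13])  # 0
```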

Examples

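A typical probability function is

$$f(i) = \binom{n}{i} p^i (1-p)^{n-i} \quad \text{for } i \in \{0, 1, \dots, n\}, \qquad f(i) = 0 \text{ for } i > n,$$

for a natural number $n$ and a real number $p \in [0,1]$. The normalization here follows directly from the binomial theorem, because

$$\sum_{i=0}^{n} \binom{n}{i} p^i (1-p)^{n-i} = \bigl(p + (1-p)\bigr)^n = 1.$$

The probability distribution generated in this way is the binomial distribution.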
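The normalization via the binomial theorem can be checked exactly for particular parameters. This sketch uses `math.comb` and exact fractions; the values $n = 10$ and $p = 3/10$ are arbitrary choices for illustration.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(i: int, n: int, p: Fraction) -> Fraction:
    """Binomial probability function: C(n, i) p^i (1 - p)^(n - i) for 0 <= i <= n, else 0."""
    if not 0 <= i <= n:
        return Fraction(0)
    return comb(n, i) * p**i * (1 - p) ** (n - i)

n, p = 10, Fraction(3, 10)
# By the binomial theorem the values sum to (p + (1 - p))^n = 1.
total = sum(binomial_pmf(i, n, p) for i in range(n + 1))
print(total)  # 1
```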

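Another classic probability function is

$$f(i) = p(1-p)^i \quad \text{for } i \in \mathbb{N}$$

and $p \in (0,1)$. Here the normalization follows from the geometric series, because

$$\sum_{i=0}^{\infty} p(1-p)^i = p \cdot \frac{1}{1-(1-p)} = 1.$$

The probability distribution generated in this way is the geometric distribution.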
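Because the geometric series is infinite, a program can only examine partial sums. This sketch, with $p = 1/4$ chosen arbitrarily, checks the closed form of the finite geometric sum, $\sum_{i=0}^{N} p(1-p)^i = 1 - (1-p)^{N+1}$, and prints the remaining gap to one, which shrinks towards zero as $N$ grows.

```python
from fractions import Fraction

def geometric_pmf(i: int, p: Fraction) -> Fraction:
    """Geometric probability function f(i) = p (1 - p)^i on {0, 1, 2, ...}."""
    return p * (1 - p) ** i if i >= 0 else Fraction(0)

p = Fraction(1, 4)
for N in (5, 20, 50):
    partial = sum(geometric_pmf(i, p) for i in range(N + 1))
    # Finite geometric sum: the partial sum equals 1 - (1 - p)^(N + 1), which tends to 1.
    assert partial == 1 - (1 - p) ** (N + 1)
    print(N, float(1 - partial))
```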

General definition

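The definition can be extended from the natural numbers to arbitrary at most countable sets. If $\Omega$ is such a set and

$$f \colon \Omega \to [0,1] \quad \text{with} \quad \sum_{\omega \in \Omega} f(\omega) = 1,$$

then $P$, defined by

$$P(\{\omega\}) = f(\omega) \quad \text{for all } \omega \in \Omega,$$

is a uniquely determined probability distribution on $(\Omega, \mathcal{P}(\Omega))$. Conversely, if $P$ is a probability distribution on $(\Omega, \mathcal{P}(\Omega))$ and $X$ is a random variable with values in $\Omega$, then

- $f_P \colon \Omega \to [0,1]$, defined by $f_P(\omega) = P(\{\omega\})$,

and

- $f_X \colon \Omega \to [0,1]$, defined by $f_X(\omega) = P(X = \omega)$,

are called the probability function of $P$ and of $X$, respectively.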
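On an arbitrary at most countable set, the same idea works with any hashable labels as elements. The sketch below uses a hypothetical three-element set of colour names and, for the converse direction, derives the probability function of a random variable with values in the set `{"even", "odd"}`, namely the parity of a fair die roll (an assumed example).

```python
from fractions import Fraction

# Hypothetical probability function on the finite set Omega = {"red", "green", "blue"}.
f = {"red": Fraction(1, 2), "green": Fraction(1, 3), "blue": Fraction(1, 6)}
assert all(0 <= v <= 1 for v in f.values()) and sum(f.values()) == 1

def P(event):
    """Probability of an event A (a subset of Omega) under P({w}) = f(w)."""
    return sum((f[w] for w in event), Fraction(0))

print(P({"red", "blue"}))  # 2/3

# Random variable with values in {"even", "odd"}: the parity of a fair die roll.
die = {k: Fraction(1, 6) for k in range(1, 7)}
f_X = {}
for k, prob in die.items():
    value = "even" if k % 2 == 0 else "odd"
    f_X[value] = f_X.get(value, Fraction(0)) + prob  # f_X(value) = P(X = value)
print(f_X["even"])  # 1/2
```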

Alternative definition

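Some authors first define real sequences $(p_i)_{i \in \mathbb{N}}$ with $p_i \in [0,1]$ for all $i \in \mathbb{N}$ and

$$\sum_{i \in \mathbb{N}} p_i = 1,$$

and call these sequences probability vectors or stochastic sequences.

A probability function is then defined as $f \colon \mathbb{N} \to [0,1]$, given by

- $f(i) = p_i$ for all $i \in \mathbb{N}$.

Conversely, every probability distribution $P$ or random variable $X$ then also defines a stochastic sequence/probability vector via $(P(\{i\}))_{i \in \mathbb{N}}$ or $(P(X = i))_{i \in \mathbb{N}}$, respectively.

Other authors already call the sequence $(p_i)_{i \in \mathbb{N}}$ itself a counting density.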

Further examples

A typical example of a probability function on an arbitrary set is the discrete uniform distribution on a finite set $\Omega$. By definition, it has the probability function

- $f(\omega) = \frac{1}{|\Omega|}$ for all $\omega \in \Omega$.
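A minimal sketch of the discrete uniform distribution: the hypothetical helper `uniform_pmf` returns a function that assigns $1/|\Omega|$ to every element of a finite set and zero to everything else. The five-letter set is an arbitrary example.

```python
from fractions import Fraction

def uniform_pmf(omega: frozenset):
    """Probability function f(w) = 1/|Omega| of the discrete uniform distribution on Omega."""
    size = len(omega)
    return lambda w: Fraction(1, size) if w in omega else Fraction(0)

f = uniform_pmf(frozenset("ABCDE"))
print(f("C"), f("Z"))  # 1/5 0
```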

The approach via stochastic sequences allows the following construction of probability functions: if $(a_i)_{i \in I}$ is any (at most countable) sequence of positive real numbers with index set $I$ for which

$$\sum_{i \in I} a_i < \infty$$

holds, one defines

- $p_i := \frac{a_i}{\sum_{j \in I} a_j}$.

Then $(p_i)_{i \in I}$ is a stochastic sequence and thus also defines a probability function. For example, for $\lambda > 0$ consider the sequence

- $a_i = \frac{\lambda^i}{i!}$ for $i \in \mathbb{N}$;

then

- $\sum_{i=0}^{\infty} \frac{\lambda^i}{i!} = e^{\lambda}$.

This is the normalization constant, and the resulting probability function is

- $f(i) = \frac{\lambda^i}{i!} e^{-\lambda}$.

This is the probability function of the Poisson distribution.
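The normalization construction can be sketched numerically. Since a computer cannot sum infinitely many terms, this sketch assumes a truncation point $N = 60$, beyond which the terms $\lambda^i / i!$ are negligible for the arbitrarily chosen $\lambda = 2.5$. It checks that the normalizer approximates $e^{\lambda}$ and that the resulting $p_i$ match the Poisson probability function.

```python
import math

lam = 2.5   # assumed rate parameter lambda > 0
N = 60      # assumed truncation point; the remaining tail is negligible for this lambda

# Positive summable sequence a_i = lambda^i / i!
a = [lam**i / math.factorial(i) for i in range(N + 1)]
normalizer = sum(a)                    # approximates e^lambda
p = [a_i / normalizer for a_i in a]    # stochastic sequence p_i = a_i / sum_j a_j

print(math.isclose(normalizer, math.exp(lam)))                          # True
print(math.isclose(p[3], lam**3 / math.factorial(3) * math.exp(-lam)))  # True
```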

Computing key figures from probability functions

Many of the important key figures of random variables and probability distributions can be derived directly from the probability function if it exists.

Expected value

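If $X$ is a random variable with values in $\mathbb{N}$ and probability function $f_X$, then the expected value is given by

$$\operatorname{E}(X) = \sum_{i \in \mathbb{N}} i \, f_X(i).$$

Since all terms are non-negative, this sum always exists, but it can be infinite. If, more generally, $T \subset \mathbb{R}$ is an at most countable subset of the real numbers and $X$ is a random variable with values in $T$ and probability function $f_X$, then the expected value is given by

$$\operatorname{E}(X) = \sum_{t \in T} t \, f_X(t),$$

provided the sum exists.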
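For a probability function with finite support, the expected value is a finite weighted sum. The sketch below stores the probability function as a dictionary from values $t$ to $f_X(t)$ (an assumed representation, with a hypothetical helper name) and evaluates $\sum_t t \, f_X(t)$ for a fair die and for a variable with values in a non-integer set.

```python
from fractions import Fraction

def expected_value(f: dict) -> Fraction:
    """E(X) = sum of t * f_X(t) for a probability function with finite support T."""
    return sum((t * prob for t, prob in f.items()), Fraction(0))

die = {i: Fraction(1, 6) for i in range(1, 7)}
print(expected_value(die))  # 7/2

# Values in the non-integer set T = {-1, 1/2, 2}
g = {Fraction(-1): Fraction(1, 4), Fraction(1, 2): Fraction(1, 2), Fraction(2): Fraction(1, 4)}
print(expected_value(g))    # 1/2
```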

Variance

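Analogous to the expected value, the variance can also be derived directly from the probability function. Let

$$\mu := \operatorname{E}(X)$$

be the expected value. If $X$ is a random variable with values in $\mathbb{N}$ and probability function $f_X$, then the variance is given by

$$\operatorname{Var}(X) = \sum_{i \in \mathbb{N}} (i - \mu)^2 f_X(i)$$

or, equivalently, by the displacement law,

$$\operatorname{Var}(X) = \left( \sum_{i \in \mathbb{N}} i^2 f_X(i) \right) - \mu^2.$$

Accordingly, in the more general case of a random variable with values in $T$ (see above),

$$\operatorname{Var}(X) = \sum_{t \in T} (t - \mu)^2 f_X(t).$$

Here, too, all statements apply only if the corresponding sums exist.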
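Both variance formulas can be compared directly for a finitely supported probability function. This sketch (hypothetical helper name, dictionary representation as before) computes the variance of a fair die once from the definition and once via the displacement law; both give $35/12$.

```python
from fractions import Fraction

def variance(f: dict) -> tuple:
    """Return Var(X) computed directly and via the displacement law, for finite support."""
    mu = sum((t * p for t, p in f.items()), Fraction(0))
    direct = sum(((t - mu) ** 2 * p for t, p in f.items()), Fraction(0))
    shifted = sum((t**2 * p for t, p in f.items()), Fraction(0)) - mu**2
    return direct, shifted

die = {i: Fraction(1, 6) for i in range(1, 7)}
print(variance(die))  # (Fraction(35, 12), Fraction(35, 12))
```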

Mode

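For discrete probability distributions, the mode is defined directly via the probability function: if $X$ is a random variable with values in $\mathbb{N}$ and probability function $f_X$, or $P$ is a probability distribution on $\mathbb{N}$ with probability function $f_P$, then $i_{\text{mod}} \in \mathbb{N}$ is called a mode or modal value of $X$ or $P$, respectively, if

$$f_X(i_{\text{mod}}) = \max_{i \in \mathbb{N}} f_X(i) \quad \text{or} \quad f_P(i_{\text{mod}}) = \max_{i \in \mathbb{N}} f_P(i).$$

If, more generally, $T$ is an at most countable set whose elements are sorted in ascending order, that is, $t_1 < t_2 < t_3 < \dots$, then $t_k$ is called a mode or modal value if

$$f(t_{k-1}) \leq f(t_k) \geq f(t_{k+1})$$

holds.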
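The two notions of mode can differ, as this sketch shows. `modes` returns all global maximizers of the probability function, and `local_modes` returns all points $t_k$ with $f(t_{k-1}) \leq f(t_k) \geq f(t_{k+1})$. Both names are hypothetical, and treating a missing neighbour at either end of the support as having probability zero is an assumption of this sketch.

```python
from fractions import Fraction

def modes(f: dict) -> list:
    """All global maximizers of a finitely supported probability function."""
    top = max(f.values())
    return sorted(t for t, p in f.items() if p == top)

def local_modes(f: dict) -> list:
    """Points t_k with f(t_{k-1}) <= f(t_k) >= f(t_{k+1}).

    Assumption: a missing neighbour at either end of the support counts as probability 0.
    """
    ts = sorted(f)
    result = []
    for k, t in enumerate(ts):
        left = f[ts[k - 1]] if k > 0 else 0
        right = f[ts[k + 1]] if k + 1 < len(ts) else 0
        if left <= f[t] >= right:
            result.append(t)
    return result

f = {1: Fraction(4, 10), 2: Fraction(1, 10), 3: Fraction(2, 10), 4: Fraction(3, 10)}
print(modes(f))        # [1]
print(local_modes(f))  # [1, 4]
```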

Properties and derived concepts

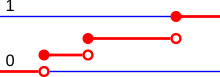

Distribution functions and probability functions

If $f$ is a probability function on $\mathbb{N}$, then the distribution function of the corresponding probability measure is given by

$$F(x) = \sum_{i=0}^{\lfloor x \rfloor} f(i) \quad \text{for } x \geq 0, \qquad F(x) = 0 \text{ for } x < 0.$$

Here $\lfloor x \rfloor$ denotes the floor function, that is, the largest integer less than or equal to $x$.

If $f$ is defined on an at most countable subset $T$ of the real numbers, then the distribution function of the probability measure is given by

$$F(x) = \sum_{t \in T,\ t \leq x} f(t).$$

Example of this is .
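Both forms of the distribution function can be sketched as follows. `cdf_on_naturals` sums $f(i)$ for $i = 0, \dots, \lfloor x \rfloor$, and `cdf_on_set` sums $f(t)$ over all $t \leq x$ in a finite support. The helper names and the example distributions are assumptions for illustration.

```python
import math
from fractions import Fraction

def cdf_on_naturals(f, x: float) -> Fraction:
    """F(x) = sum of f(i) for i = 0..floor(x), for f on {0, 1, 2, ...}; F(x) = 0 for x < 0."""
    if x < 0:
        return Fraction(0)
    return sum((f(i) for i in range(math.floor(x) + 1)), Fraction(0))

def cdf_on_set(f: dict, x) -> Fraction:
    """F(x) = sum of f(t) over t in T with t <= x, for a finitely supported f on T."""
    return sum((p for t, p in f.items() if t <= x), Fraction(0))

def die_pmf(i: int) -> Fraction:
    """Fair die: 1/6 on 1..6, zero elsewhere."""
    return Fraction(1, 6) if 1 <= i <= 6 else Fraction(0)

print(cdf_on_naturals(die_pmf, 3.7))                                # 1/2
print(cdf_on_set({-0.5: Fraction(1, 3), 1.5: Fraction(2, 3)}, 0))  # 1/3
```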

Convolution and sum of random variables

For probability distributions with probability functions, the convolution of probability distributions can be traced back to the convolution of functions, applied to the corresponding probability functions. If $P$ and $Q$ are probability distributions with probability functions $f_P$ and $f_Q$, then

$$f_{P * Q} = f_P * f_Q.$$

Here $P * Q$ denotes the convolution of $P$ and $Q$, and $f_P * f_Q$ denotes the convolution of the functions $f_P$ and $f_Q$; for distributions on $\mathbb{N}$ it reads explicitly $(f_P * f_Q)(k) = \sum_{i=0}^{k} f_P(i) \, f_Q(k-i)$. The probability function of the convolution of two probability distributions is thus exactly the convolution of their probability functions.

This property carries over directly to the sum of stochastically independent random variables. If $X$ and $Y$ are stochastically independent random variables with probability functions $f_X$ and $f_Y$, then

$$f_{X+Y} = f_X * f_Y.$$

The probability function of the sum is thus the convolution of the probability functions of the individual random variables.
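For finitely supported probability functions on the integers, the convolution can be computed by a double loop over the two supports, adding $f(i) \, g(j)$ to the entry $i + j$. This sketch (hypothetical helper name) applies it to two independent fair dice, giving the probability function of their sum.

```python
from fractions import Fraction

def convolve(f: dict, g: dict) -> dict:
    """(f * g)(k) = sum over i of f(i) g(k - i), for finitely supported f, g on the integers."""
    h = {}
    for i, p in f.items():
        for j, q in g.items():
            h[i + j] = h.get(i + j, Fraction(0)) + p * q
    return h

die = {i: Fraction(1, 6) for i in range(1, 7)}
two_dice = convolve(die, die)  # probability function of X + Y for independent dice X, Y
print(two_dice[7], two_dice[12])  # 1/6 1/36
```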

Probability generating function

A probability-generating function can be assigned to every probability distribution on the natural numbers. It is a polynomial or a power series whose coefficients are the values of the probability function. It is thus defined as

$$m_P(t) = \sum_{i \in \mathbb{N}} f_P(i) \, t^i \quad \text{for } |t| \leq 1$$

for the probability function $f_P$ of a probability distribution $P$. The probability-generating function of a random variable is defined analogously.

Probability-generating functions make it easier to study and calculate with probability distributions. For example, the probability-generating function of the convolution of two probability distributions is exactly the product of the probability-generating functions of the individual distributions. Important key figures such as the expected value and the variance can also be obtained from the derivatives of the probability-generating function.
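For a finitely supported probability function, the probability-generating function is a polynomial and can be handled through its coefficient list. This sketch uses the standard identities $\operatorname{E}(X) = m'(1)$ and $\operatorname{Var}(X) = m''(1) + m'(1) - m'(1)^2$ (not stated in the text above) and multiplies two generating polynomials to obtain the one for a sum of independent dice. All helper names are hypothetical.

```python
from fractions import Fraction

def pgf_coefficients(f: dict) -> list:
    """Coefficient list [f(0), f(1), ..., f(n)] of the generating polynomial m(t) = sum f(i) t^i."""
    n = max(f)
    return [f.get(i, Fraction(0)) for i in range(n + 1)]

def poly_mul(a: list, b: list) -> list:
    """Product of two polynomials given as coefficient lists."""
    c = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            c[i + j] += x * y
    return c

def derivative_at_one(c: list, order: int) -> Fraction:
    """order-th derivative of sum c_i t^i, evaluated at t = 1."""
    total = Fraction(0)
    for i, ci in enumerate(c):
        falling = 1
        for k in range(order):
            falling *= i - k  # falling factorial i (i-1) ... (i-order+1)
        total += falling * ci
    return total

die = pgf_coefficients({i: Fraction(1, 6) for i in range(1, 7)})
m1, m2 = derivative_at_one(die, 1), derivative_at_one(die, 2)
print(m1)               # E(X) = m'(1) = 7/2
print(m2 + m1 - m1**2)  # Var(X) = m''(1) + m'(1) - m'(1)^2 = 35/12

two_dice = poly_mul(die, die)  # generating polynomial of the sum of two independent dice
print(two_dice[7])             # 1/6
```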

Literature

- Hans-Otto Georgii: Stochastics. Introduction to probability theory and statistics. 4th edition. Walter de Gruyter, Berlin 2009, ISBN 978-3-11-021526-7, doi:10.1515/9783110215274.

- Achim Klenke: Probability Theory. 3rd edition. Springer-Verlag, Berlin Heidelberg 2013, ISBN 978-3-642-36017-6, doi:10.1007/978-3-642-36018-3.

- David Meintrup, Stefan Schäffler: Stochastics. Theory and applications. Springer-Verlag, Berlin Heidelberg New York 2005, ISBN 978-3-540-21676-6, doi:10.1007/b137972.

- Klaus D. Schmidt: Measure and Probability. 2nd, revised edition. Springer-Verlag, Heidelberg Dordrecht London New York 2011, ISBN 978-3-642-21025-9, doi:10.1007/978-3-642-21026-6.

- Claudia Czado, Thorsten Schmidt: Mathematical Statistics. Springer-Verlag, Berlin Heidelberg 2011, ISBN 978-3-642-17260-1, doi:10.1007/978-3-642-17261-8.

- Ulrich Krengel: Introduction to probability theory and statistics. For studies, professional practice and teaching. 8th edition. Vieweg, Wiesbaden 2005, ISBN 3-8348-0063-5, doi:10.1007/978-3-663-09885-0.

Individual references

- Georgii: Stochastics. 2009, p. 18.

- Klenke: Probability Theory. 2013, p. 13.

- Schmidt: Measure and Probability. 2011, p. 196.

- Czado, Schmidt: Mathematical Statistics. 2011, p. 4.

- Klenke: Probability Theory. 2013, p. 13.

- Meintrup, Schäffler: Stochastics. 2005, p. 63.

- Schmidt: Measure and Probability. 2011, p. 234.

- Georgii: Stochastics. 2009, p. 18.

- A. V. Prokhorov: Mode. In: Michiel Hazewinkel (Ed.): Encyclopaedia of Mathematics. Springer-Verlag, Berlin 2002, ISBN 978-1-55608-010-4 (English, online).
