Load distribution (computer science)

By means of load distribution ( English Load Balancing ) are in the computer science extensive calculations or large amounts of requests to multiple concurrent systems distributed with the aim to make their whole processing more efficient.

Load sharing is the result of research in the field of parallel computers . Two main approaches exist side by side: static algorithms, which do not take into account the state of the various machines, and dynamic algorithms, which are often more general and more efficient, but require a sophisticated exchange of information between the various processing units, which can damage efficiency.

This can take very different forms. A simple load distribution takes place, for example, on computers with several processors. Each process can be run on its own processor . The way the processes are distributed across processors can have a major impact on the overall performance of the system. B. the cache content is local to each processor. Another method is found with computer clusters or servers . Several computers form a network, which mostly behaves like a single system to the outside world.

Problem overview

A load balancing algorithm always tries to solve a specific problem. Among other things, the type of tasks to be solved, the algorithmic complexity , the hardware architecture and the fault tolerance should be taken into account. Therefore, a compromise has to be found in order to best meet the application-specific requirements.

Type of jobs

The efficiency of load balancing algorithms crucially depends on the type of job. The more information about the tasks is available at the time of decision making, the greater the potential for optimization.

size

A perfect knowledge of the execution time of each of the tasks allows to achieve an optimal load distribution (see the prefix sum algorithm below). Unfortunately, this is actually an idealized case. Knowing exactly the execution time of each task is an extremely rare situation.

Because of this, there are several techniques that you can use to get an idea of the different execution times. First of all, in the fortunate case that the tasks are of relatively homogeneous size, one can assume that each of them will take roughly the average execution time. On the other hand, if the execution time is very erratic, more subtle techniques must be used. One technique is adding some metadata to each task. Depending on the previous execution time for similar metadata, conclusions for a future task can be drawn on the basis of statistics.

Finally, the number of tasks themselves can be of some concern.

Dependency

In some cases the tasks are interdependent. These mutual dependency relations can be illustrated by an acyclically oriented graph ( English Directed acyclic graph ). Intuitively, some tasks cannot begin until others are not completed.

Assuming that the time required for each of the tasks is known in advance, an optimal execution order must minimize the total execution time. Although this is an NP-hard problem and can therefore be difficult to solve precisely, there are scheduling algorithms that produce honorable assignments of tasks.

Cleavage

Another feature of the jobs that has a big impact on the design of the load balancing algorithm is their ability to be split into subtasks while they are running. The "work-stealing" algorithm that we will introduce later makes use of this special feature.

Static and dynamic algorithms

Static algorithms

A load balancing algorithm is said to be "static" if it does not take the state of the system into account when distributing tasks. By system state we mean the utilization (and sometimes even overload) of certain processors. Instead, assumptions are made about the overall system in advance, such as B. the arrival times and the resource requirements of the incoming tasks. The number of processors, their respective performance and communication speed are also known. So it's about combining tasks with the processors in such a way that a certain performance function is minimized. The trick lies in the design of this performance function.

The technologies are always centralized around a router that distributes the loads and ensures that the function is optimized. This minimization can take into account information related to the tasks to be distributed and derive an expected execution time.

The advantage of static algorithms is that they are easy to implement and extremely efficient for relatively regular tasks (such as processing HTTP requests from a website). However, there is still statistical variance in the distribution of tasks that can overload some computation units.

Dynamic algorithms

In contrast to static load distribution algorithms, dynamic algorithms take into account the current load of each of the computing units (also called nodes) in the system. With this approach, tasks can be dynamically moved from an overloaded node to an overloaded node for faster processing. Although these algorithms are much more complicated to design, they can produce great results, especially when execution times vary widely from one task to another.

With dynamic load balancing, the architecture can be more modular since it is not mandatory to have a special node for work balancing. If tasks are clearly assigned to a processor according to its state at a certain point in time, we speak of a unique assignment. On the other hand, if the tasks can be permanently redistributed according to the state of the system and its development, this is called dynamic allocation. Obviously, a load sharing algorithm that requires too much communication to make its decisions is undesirable at the risk of slowing down the overall problem solving. The worst case scenario is a game of ping pong between the processors which results in the solution being blocked indefinitely.

Hardware architecture

Heterogeneous machines

Parallel computer infrastructures often consist of units with different computing power, which should be taken into account when distributing the load.

For example, units with lower performance can receive queries that require less computational effort or, in the case of homogeneous or unknown query sizes, receive fewer queries than larger units.

Shared and distributed storage

Parallel computers are often divided into two broad categories: those in which all processors share a single common memory on which they read and write in parallel ( PRAM model ), and those in which each processing unit has its own memory ( model des distributed memory ) and exchanges information with the other units through messages.

For computers with shared memory, the management of write conflicts slows down the individual execution speed of each individual processing unit considerably. However, you can work well in parallel. Conversely, when exchanging messages, each of the processors can work at full speed. On the other hand, when exchanging messages, all processors are forced to wait for the slowest processors to begin the communication phase.

In reality, few systems fall into exactly one of the categories. In general, the processors each have an internal memory to store the data needed for the next calculations and are organized in successive clusters. Often these processing elements are then coordinated through distributed storage and messaging. Therefore, the load sharing algorithm should be clearly adapted to a parallel architecture. Otherwise, there is a risk that the efficiency of parallel problem-solving is severely impaired.

hierarchy

There are two main categories of load distribution algorithms to adapt to the hardware structures shown above. On the one hand, the tasks are assigned by a "master" and carried out by "workers" ( master-slave model ) who keep the master informed about the development of their work. The master can then be responsible for assigning (and reallocating) the workload if the algorithm is dynamic (with dynamic assignment). The literature speaks of the "master worker" architecture. Conversely, the control can be distributed to the various nodes. The load balancing algorithm is then run on each of them, and responsibility for assigning jobs (and reassigning and sharing them, if any) is shared. The latter category requires a dynamic load balancing algorithm.

The same applies here: Since the design of each load balancing algorithm is unique, the previous distinction must be restricted. An intermediate strategy is also possible, e.g. B. with "master" nodes for each sub-cluster, which in turn are subject to a global "master". There are also organizations with multiple levels, with a switch between master-slave and distributed control strategies. The latter strategies quickly become complex and rarely encountered. Developers prefer algorithms that are easier to control.

Scalability

In the context of algorithms that run very long-term ( server , cloud ...), the computer architecture develops over time. However, it is preferable not to have to design a new algorithm every time.

Another important parameter of a load balancing algorithm is therefore its ability to adapt to an evolving hardware architecture. This is called the scalability of the algorithm. An algorithm should also be scalable for an input parameter if its performance remains relatively independent of the size of that parameter.

If the algorithm is able to adapt to a different number of processors, the number of processors must be determined, however, before the execution, he is called a "malleable" ( English called "moldable"). If the algorithm is the other hand, capable of dealing with a fluctuating number of processors during the execution of the algorithm is considered a "malleable" ( English "malleable"). Most load sharing algorithms are at least malleable.

Fault tolerance

In large computer networks in particular , it is intolerable to run a parallel algorithm that cannot withstand the failure of a single component. Therefore, fault-tolerant algorithms are developed that can detect processor failures and restore the computation.

approaches

Static load distribution with full commitment to the jobs: “Prefix sum” algorithm

If the tasks are independent and fissile, and if one knows their respective execution times, then there is a simple and optimal algorithm.

By dividing the tasks so that each processor has the same computing effort, the results only need to be grouped. With the help of a prefix-sum algorithm, this division can be calculated in a logarithmic time of the number of processors.

However, if the tasks cannot be broken down (they are said to be atomic), even though optimizing task assignment is a difficult problem, it is still possible to approximate a relatively fair distribution of tasks, provided that the size of each task is much less than the total amount of computations performed by each of the nodes.

Most of the time, the execution time of a task is unknown and only rough approximations are available. While this algorithm is particularly efficient, it is not practical for these scenarios.

Static load distribution without prior knowledge

If the execution time is not known in advance at all, static load balancing is always possible.

Round robin

In this algorithm, the first request is sent to the first server, then the next to the second, and so on until the last. Then we start again by assigning the next request to the first server, and so on.

This algorithm can be weighted so that the top performing units receive the greatest number of requests and are the first to receive them.

Randomized assignment

It is simply a matter of randomly assigning tasks to the various servers. This method works pretty well. If the number of tasks is known in advance, it is even more efficient to compute a random permutation in advance. This avoids communication costs for each order. A distribution master is not necessary, because everyone knows what task is assigned to them. Even if the number of tasks is not known, there is no need to communicate with a pseudo-random assignment generation known to all processors.

The performance of this strategy (as measured by the total execution time for a given fixed set of tasks) decreases with the maximum size of the tasks.

"Master worker"

The master worker scheme is one of the simplest dynamic load balancing algorithms. A master distributes the workload among all workers (sometimes referred to as a "slave"). At first all workers are inactive and report this to the master. The master distributes the tasks to them. When he has no more tasks to assign, he informs the workers so that they should no longer ask for work.

The advantage of this system is that it distributes the load very fairly. If the time required for the job is not taken into account, the execution time would be comparable to the prefix sum given above .

The problem with this algorithm is that it is difficult to adapt to a large number of processors because of the large volumes of communication. This lack of scalability means that it quickly becomes inoperable with very large servers or very large parallel computers. The master acts as a bottleneck.

However, the quality of the algorithm can be significantly improved if the master is replaced with a task list in which the various processors are used. Although this algorithm is a little more difficult to implement, it promises much better scalability, even if it is still insufficient for very large data centers.

Distributed architecture, without prior knowledge: labor theft

One technique that is used to overcome scalability without prior knowledge of the execution times is, Work stealing ( English for work theft).

The approach is to assign a certain number of tasks to each processor randomly or in a predefined manner and then allow inactive processors to “steal” work from active or overloaded processors. There are several implementations of this concept, defined by a model of task sharing and the rules governing exchanges between processors. While this technique can be particularly effective, it is difficult to implement because it must be ensured that the message exchange does not replace the actual execution of calculations to solve the problem.

In the case of atomic tasks, two main strategies can be distinguished: those in which the processors with low load offer their computing capacity to those with the highest load, and those in which the most heavily loaded units want to reduce the workload assigned to them. It has been shown that when the network is heavily used it is more efficient if the least loaded units offer their availability, and that when the network is under low utilization the overloaded processors require the support of the least active. This rule of thumb limits the number of messages exchanged.

In the event that one starts with a single large task that cannot be split up beyond an atomic level, there is a very efficient algorithm in which the higher-level task is distributed in a tree.

ancestors

First of all, all processors have an empty task except one that works on it sequentially. Inactive processors then randomly send requests to other processors (which are not necessarily active). If it is able to subdivide the task it is working on, it does so by sending part of its work to the requesting node. Otherwise it will return an empty task. This creates a tree structure. It is then necessary to send a termination signal to the higher-level processor when the subtask has been completed so that it in turn sends the message to its higher-level processor until it reaches the root of the tree. When the first processor, i.e. H. the root, done, a global completion message can be broadcast. In the end it is necessary to put the results together by bringing the tree back up.

Efficiency

The efficiency of such an algorithm is close to the prefix sum, if the time for cutting and communication is not too high compared to the work to be done. To avoid excessive communication costs, it is possible to imagine a list of jobs on shared memory. Therefore, a request simply reads from a particular location in this shared memory at the request of the master processor.

Application examples

Some possible procedures are the upstream connection of a system (load balancer, front-end server), which splits the requests, or the use of DNS with the round robin procedure . Server load distribution is particularly important for web servers , as a single host can only answer a limited number of HTTP requests at once.

The upstream load balancer adds additional information to the HTTP request in order to send requests from the same user to the same server. This is also important when using SSL to encrypt communication so that a new SSL handshake does not have to be carried out for each request.

Load balancing is also used for data / voice lines in order to distribute the traffic flow over parallel lines. In practice, however, problems often arise in distributing the data / voice traffic evenly over both lines. The solution is therefore usually implemented that one line is used as a forward and the second line is used as a return channel.

Load balancing often goes hand in hand with fail-safe mechanisms : By setting up a cluster with the appropriate capacity and distributing the requests to individual systems, you achieve an increase in fail-safety, provided that the failure of a system is detected and the requests are automatically transferred to another system ( see also: high availability or high availability, "HA").

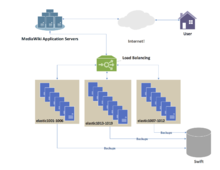

Server load balancing

Server load balancing (s. Server Load Balancing , "SLB") is used in all applications where a large number of clients create a high demand density and thus each server machine would overload one. Applications can be scaled by distributing the requests to several servers using SLB . Typical criteria for determining the need for SLB are the data rate , the number of clients and the request rate.

Another aspect is the increase in data availability through SLB. The use of several systems with the same data / application basis enables redundant data storage. The task of the SLB here is to relay the clients to the individual servers. This technology is used, among other things, in content delivery networks .

SLB is used by large portals such as Wikipedia , marketplaces or online shops. In principle, the user does not notice whether SLB is being used on the other side. See also Redirect ( forwarding ).

SLB can be implemented on different layers of the ISO-OSI reference model .

DNS round robin

Several IP addresses are stored for a host name in the domain name system , which are returned alternately as the result of inquiries. It is the easiest way to distribute the load. For a detailed description, see load balancing via DNS .

NAT based SLB

The so-called NAT based SLB is more complex, but more powerful . Here, two networks must first be set up: a private network, to which the servers belong, and a public network, which is connected to the public Internet via routers . A content switch is connected between these two networks, i.e. a router that receives and evaluates inquiries from the public network and then decides to which computer in the private network it sends the connection. This takes place on the network layer of the OSI reference model . NAT technology is used here: The load balancer manipulates incoming and outgoing IP packets in such a way that the client has the impression that it is always communicating with one and the same computer, namely the load balancer. The servers in the private network all have the same virtual IP address, so to speak.

The problem with this method is that all traffic flows through the load balancer, which means that sooner or later it will become a bottleneck if it is too small or not redundant.

The advantage of the NAT based SLB is that the individual servers are additionally protected by the load balancer. Numerous manufacturers of load balancer solutions offer additional security modules for this purpose, which can filter out attacks or incorrect queries before they reach the server cluster . The termination of SSL sessions and thus the relief of the server clusters in HTTP farms is an advantage of server-based load balancing that should not be underestimated.

In addition to active health checks, as they are necessary with the other procedures, passive health checks have been increasingly used for some time in large web clusters. Here the incoming and outgoing data traffic is monitored by the load balancer.As soon as a computer in the server cluster triggers a timeout when answering a request, the same request can be sent to another cluster server without the client noticing.

Flat based SLB

This method only requires one network. The servers and the load balancer must be connected to one another via a switch. If the client sends a request (to the load balancer), the corresponding Ethernet frame is manipulated in such a way that it represents a direct request from the client to one of the servers - the load balancer exchanges its own MAC address for that of the server to be switched and sends the frame on. The IP address remains unchanged. This procedure is also called MAT (MAC Address Translation). The server that received the frame sends the response directly to the IP address of the sender, i.e. the client. The client has the impression that it is communicating with only one computer, namely the load balancer, while the server actually only communicates with one computer, directly with the client. This process is known as DSR (Direct Server Return).

The advantage of flat-based SLB is that it relieves the load balancer. The (usually more data-rich) return traffic takes place directly.

Anycast SLB

With load distribution via anycast , a whole group of computers is addressed via one address. The answer comes from the one who can be reached via the shortest route. This is implemented on the Internet with BGP .

The advantage of this solution is the geographically close selection of a server with a corresponding reduction in latency. However, the implementation requires the operation of its own autonomous system on the Internet.

Problems in practice

Applications such as online shops often manage client requests through sessions . For existing sessions z. B. the content of the shopping cart is saved. However, this assumes that a client, for which a session has already been opened, repeatedly communicates with the same server, provided that client-based sessions are used here. Either all connections of a client must be routed to the same server via its IP, or the load balancer must be able to act on the application layer of the OSI reference model, e.g. B. to extract cookies and session IDs from packets and evaluate them in order to then make a mediation decision. The forwarding of a session to always the same backend server is called "affinity". Layer 4-7 switches are therefore used as load balancers in practice. Alternatively, the problem can also be solved by the application itself (e.g. by storing the session in a database) so that a request can also be answered by any computer in the server pool.

literature

- Ingo Wegener (ed.): Highlights from computer science. Springer Verlag, Berlin / Heidelberg, ISBN 978-3-642-64656-0 .

- Hartmut Ernst: Basic course in computer science . 3rd edition, Friedrich Vieweg & Sohn, Wiesbaden 2003, ISBN 978-3-528-25717-0 .

- Paul J. Kühn: Communication in distributed systems. Springer Verlag, Berlin / Heidelberg 1989, ISBN 978-3-540-50893-9 .

- Bernd Bundschuh, Peter Sokolowsky: Computer structures and computer architectures. Friedrich Vieweg & Sohn Verlag, Wiesbaden 1988, ISBN 978-3-528-04389-6 .

Individual evidence

- ^ A b Sanders Peter, Dietzfelbinger Martin, Dementiev Roman: Sequential and parallel algorithms and data structures: the basic toolbox . Ed .: Springer. ISBN 978-3-03025209-0 .

- ^ Qi Liu, Weidong Cai, Dandan Jin et Jian Shen: Estimation Accuracy on Execution Time of Run-Time Tasks in a Heterogeneous Distributed Environment . In: Sensors . August 30, 2016, ISSN 1424-8220 , p. 1386 , doi : 10.3390 / s16091386 , PMID 27589753 (English, mdpi.com ).

- ↑ Alakeel, Ali: A Guide to Dynamic Load Balancing in Distributed Computer Systems . In: International Journal of Computer Science and Network Security, (IJCSNS), vol 10 . November 2009 (English, researchgate.net ).

- ↑ Asghar Sajjad, Aubanel Eric, Bremner David: A Dynamic Moldable Job Scheduling Based Parallel SAT Solver . In: 22013 42nd International Conference on Parallel Processing . October 2013, p. 110-119 , doi : 10.1109 / ICPP.2013.20 ( ieee.org ).

- ↑ G. Punetha, N. Gnanambigai, P. Dinadayalan: Survey on fault tolerant - Load balancing algorithms in cloud computing . In: IEEE (Ed.): 2015 2nd International Conference on Electronics and Communication Systems (ICECS) . 2015, ISBN 978-1-4799-7225-8 , doi : 10.1109 / ecs.2015.7124879 .

- ↑ Shared Session Management ( Memento from May 23, 2011 in the Internet Archive ). Retrieved June 3, 2011.

Web links

- Load Balancing in a Cluster-Based Distributed Virtual Environment (accessed July 24, 2017)

- Load balancing in distributed multi-agent simulations (accessed July 24, 2017)

- Load Balancing - Distributed Systems (accessed July 24, 2017)

- Parallel Programming Concepts (accessed July 24, 2017)

- Conception of a high availability solution for LAMP systems with open source software and standard hardware (accessed on July 24, 2017)