Optical music recognition

Optical music recognition (OMR) is the research field that investigates the computerized reading of music notation in documents. The goal is to teach the computer to read and interpret sheet music so that it can produce a machine-readable version. Once this version has been created, it can be exported in various formats in order to play the music back (e.g. via MIDI) or to typeset it again (e.g. via MusicXML).

In the past, OMR was also seen as a form of optical character recognition and called Music OCR. However, because the two differ in numerous significant ways, the terms should not be confused and the term Music OCR should be avoided.

History

Research into optical music recognition began at MIT in the late 1960s, when the first scanners became affordable for research. Since the working memory of the computers used was a limiting factor, the first attempts were limited to a few measures of music (see the first published scan). In 1984 a Japanese research group from Waseda University developed a specialized robot called WABOT (WAseda roBOT), which could read printed music and accompany a singer on an electric organ.

Crucial research advances in the early days were made by Ichiro Fujinaga, Nicholas Carter, Kia Ng, David Bainbridge, and Tim Bell, who developed a number of approaches that are still used in some systems today.

The availability of inexpensive scanners enabled more researchers to devote themselves to optical music recognition, and the number of projects increased. In 1991 the first commercial solution, MIDISCAN (now SmartScore), was developed by Musitek Corporation.

With the spread of smartphones equipped with sufficiently good cameras and sufficient computing capacity, mobile solutions have become possible in which photos taken with the smartphone are processed directly on the device.

Relationship to other research areas

Optical music recognition is related to several other areas of research, particularly computer vision, document analysis, and music information retrieval. From the point of view of musical practice, OMR can be seen as a method of entering music into the computer, which makes editing and transcribing scores as well as composing easier. In libraries, OMR can be used to make music archives searchable, and it allows musicological studies to be carried out cost-effectively on a large scale.

OMR vs. OCR

Optical music recognition is often compared with optical character recognition (OCR for short). While there are many similarities, the term "Music OCR" is misleading because of key differences. The first difference is that music notation is a configurative writing system. This means that the semantics do not depend solely on the symbols used (e.g. noteheads, stems, flags), but on the way in which these are placed on the staves. Two-dimensional relationships and context are crucial in determining the correct interpretation of notes. In contrast, with text, usually only the text line is decisive; slight deviations from it do not change the meaning of the text. Text can therefore be viewed as a one-dimensional flow of information along the text line.

The second big difference is in expectations. An OCR system usually ends with the recognition of letters and words, whereas an OMR system is also expected to reconstruct the semantics (e.g. to infer from a filled notehead and an attached stem that the symbol is a note with a certain duration). The graphic concepts (position, type of symbol) must therefore be translated into musical concepts (pitch, note duration, and so on) by applying the rules of music notation. There is no suitable equivalent for this step in text recognition. The following comparison can be helpful: the requirements for an OMR system are as complex as expecting an OCR system to reconstruct the HTML source code from a screenshot of a website.
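The translation from graphic to musical concepts can be illustrated with a minimal sketch. It assumes that an earlier step has already located a notehead and expressed its vertical position in diatonic steps counted from the bottom staff line. The function name `staff_position_to_pitch` and the position convention are hypothetical, only treble and bass clef are handled, and accidentals and key signatures are ignored.

```python
# Diatonic step names in ascending order.
STEPS = ["C", "D", "E", "F", "G", "A", "B"]

# Pitch of the bottom staff line per clef, as (step, octave).
# Assumption for this sketch: only treble and bass clef are supported.
BOTTOM_LINE = {"treble": ("E", 4), "bass": ("G", 2)}


def staff_position_to_pitch(position, clef="treble"):
    """Map a notehead position to a pitch name.

    position counts diatonic steps from the bottom staff line:
    0 = bottom line, 1 = first space, 2 = second line, and so on.
    Negative values lie below the staff (ledger lines).
    """
    step, octave = BOTTOM_LINE[clef]
    # Absolute diatonic index: seven steps per octave, counted from C0.
    index = octave * 7 + STEPS.index(step) + position
    return f"{STEPS[index % 7]}{index // 7}"


# The same graphic position yields different pitches under different clefs.
print(staff_position_to_pitch(0, "treble"))   # E4
print(staff_position_to_pitch(0, "bass"))     # G2
print(staff_position_to_pitch(-2, "treble"))  # C4, first ledger line below the staff
```

Even this toy mapping shows why context matters: the symbol alone does not determine the pitch, because the clef (and, in a fuller model, the key signature and preceding accidentals) changes the result.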

The third big difference is the character set. While other writing systems, such as Chinese, also have extremely complex symbols and extensive character sets, the character set of music notation is distinguished by the fact that its symbols can differ enormously in size, from small dots to brackets that span a whole page. Some symbols even have an almost unlimited range of appearances, such as slurs, which are only defined as more or less smooth curves that can be interrupted at will.

Procedure

The recognition of music notation usually takes place in several sub-steps, each of which is solved with specialized algorithms from the field of pattern recognition.

A number of competing approaches exist, most of which provide some sort of pipeline in which each step performs a specific function, e.g. detecting and removing staves before proceeding to the next step. A common problem with these methods is that errors propagate and multiply through the system. For example, if staves are missed in the first few steps, subsequent steps will likely ignore that area of the image, making the output incomplete.
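How quickly errors compound in such a pipeline can be estimated with simple arithmetic. The sketch below assumes, purely for illustration, that the stages fail independently and that a symbol lost in one stage cannot be recovered later; the stage names and accuracy figures are hypothetical and are not measurements of any real system.

```python
from math import prod

# Hypothetical per-stage accuracies (illustrative numbers only).
STAGE_ACCURACY = {
    "staff detection": 0.98,
    "symbol detection": 0.95,
    "symbol classification": 0.95,
    "semantic reconstruction": 0.97,
}


def end_to_end_accuracy(accuracies):
    """Share of symbols that survive every stage, assuming independent errors."""
    return prod(accuracies)


overall = end_to_end_accuracy(STAGE_ACCURACY.values())
print(f"end-to-end accuracy: {overall:.3f}")  # noticeably lower than any single stage
```

Under these assumptions, four stages that each look reliable in isolation still lose roughly one symbol in seven by the end of the pipeline.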

Optical music recognition is often underestimated because it appears to be a simple problem: given a perfect scan of printed music, visual recognition can be solved with a number of relatively simple algorithms such as projections or pattern matching. However, the process becomes significantly more difficult if the scan is of poor quality or if handwritten music is to be recognized, a challenge at which almost all systems fail. Even with perfect visual recognition, the reconstruction of the musical semantics remains a great challenge due to ambiguities and frequent violations of the rules of music notation (see Chopin's Nocturne). Donald Byrd and Jakob Simonsen claim that OMR is so difficult because modern music notation is enormously complex.
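As an illustration of the projection technique mentioned above, the following sketch locates staff lines in a tiny synthetic binary image by counting black pixels per row. The function names and the 80% threshold are assumptions made for this example; the approach only works on clean, unskewed input, which is exactly the idealized case described in the text.

```python
def horizontal_projection(image):
    """Count the black pixels (value 1) in every row of a binary image."""
    return [sum(row) for row in image]


def find_staff_line_rows(image, threshold=0.8):
    """Return indices of rows whose share of black pixels reaches the threshold."""
    width = len(image[0])
    profile = horizontal_projection(image)
    return [y for y, count in enumerate(profile) if count >= threshold * width]


# Tiny synthetic "scan": 1 = black, 0 = white. Rows 1 and 3 are staff lines,
# row 2 contains a few pixels of a symbol between them.
image = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    [0, 0, 0, 1, 1, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
]

print(horizontal_projection(image))  # [0, 10, 2, 10, 0]
print(find_staff_line_rows(image))   # [1, 3]
```

A slightly rotated or warped page spreads each staff line over several rows, so the peaks in the profile flatten out and a fixed threshold no longer separates lines from symbols.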

Donald Byrd has collected a number of interesting examples on his website, as well as some extreme examples which demonstrate how far the rules of music notation can be bent.

Output from OMR systems

OMR systems typically generate a version of the music that can be played back (replayability). The most common way to create such a version is to generate a MIDI file, which can be converted into an audio file using a synthesizer. However, MIDI files are limited in the information they can store. For example, they cannot store any information about the visual notation (how the notes were actually arranged).
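One concrete instance of this information loss is enharmonic spelling. The sketch below converts a notated pitch to a MIDI note number, assuming the common convention that middle C (C4) is note 60; some vendors label octaves differently. The helper `pitch_to_midi` is a hypothetical name used only for this example.

```python
# Semitone offset of each natural note within an octave.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def pitch_to_midi(step, octave, alter=0):
    """Return the MIDI note number for a notated pitch.

    alter is the chromatic alteration in semitones (+1 sharp, -1 flat).
    Assumes the convention C4 = 60.
    """
    return 12 * (octave + 1) + SEMITONES[step] + alter


print(pitch_to_midi("C", 4))      # 60
print(pitch_to_midi("C", 4, +1))  # 61, notated as C sharp
print(pitch_to_midi("D", 4, -1))  # 61, notated as D flat
```

C sharp and D flat are written differently on the page but map to the same note number, so the original spelling cannot be recovered from the MIDI data alone.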

If the aim of the software is to reconstruct a version that can be easily read by humans (reprintability), the complete information must be restored, including precise layout information of the notation. Suitable formats for these tasks are MEI and MusicXML.
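To give an impression of what such a notation-oriented format looks like, the following sketch builds a minimal one-measure MusicXML fragment with Python's standard library. It is a simplified illustration rather than a complete exporter: the function `build_score` and its tuple input are assumptions for this example, the output has no document type declaration, it is not validated against the MusicXML schema, and layout information is omitted entirely.

```python
import xml.etree.ElementTree as ET


def build_score(notes):
    """Build a one-measure score-partwise tree.

    notes is a list of (step, octave, duration, note_type) tuples,
    e.g. ("C", 4, 1, "quarter").
    """
    score = ET.Element("score-partwise", version="3.1")
    part_list = ET.SubElement(score, "part-list")
    score_part = ET.SubElement(part_list, "score-part", id="P1")
    ET.SubElement(score_part, "part-name").text = "Music"

    part = ET.SubElement(score, "part", id="P1")
    measure = ET.SubElement(part, "measure", number="1")

    # One division per quarter note, treble clef (G on the second line).
    attributes = ET.SubElement(measure, "attributes")
    ET.SubElement(attributes, "divisions").text = "1"
    clef = ET.SubElement(attributes, "clef")
    ET.SubElement(clef, "sign").text = "G"
    ET.SubElement(clef, "line").text = "2"

    for step, octave, duration, note_type in notes:
        note = ET.SubElement(measure, "note")
        pitch = ET.SubElement(note, "pitch")
        ET.SubElement(pitch, "step").text = step
        ET.SubElement(pitch, "octave").text = str(octave)
        ET.SubElement(note, "duration").text = str(duration)
        ET.SubElement(note, "type").text = note_type
    return score


score = build_score([("C", 4, 1, "quarter"), ("E", 4, 1, "quarter")])
print(ET.tostring(score, encoding="unicode"))
```

Unlike a MIDI note number, each note here keeps its notated step, octave and graphical note type, which is what makes re-engraving possible.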

In addition to the two applications mentioned, it can also be useful to extract only metadata about an image or merely to make it searchable. In both of these cases, a shallower understanding of the notation may be sufficient.

General model (2001)

In 2001 David Bainbridge and Tim Bell published an overview of the research activities that had taken place up to then. From this they extracted a general model for OMR, which served as a template for numerous systems that were developed after 2001. The problem is broken down into four steps, which mainly deal with visual recognition. The authors recognized that the description of how the musical semantics are reconstructed is often left out of scientific papers, since these operations depend on which output format is selected.

Refined model (2012)

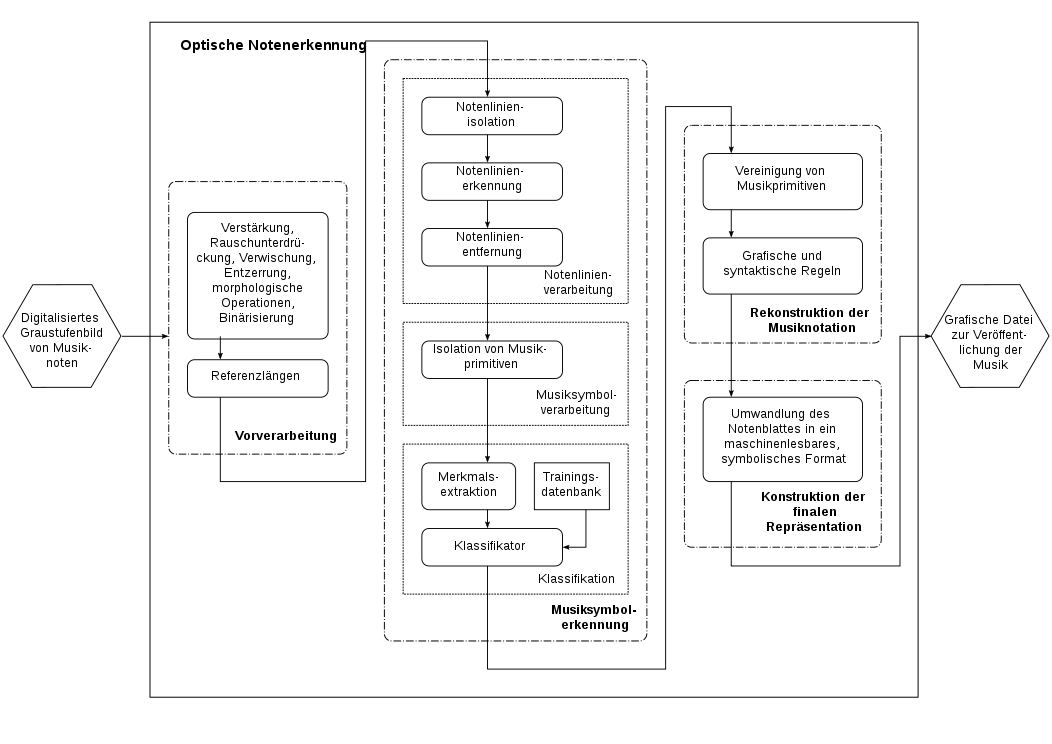

In 2012 Ana Rebelo et al. published a further survey of the techniques used in optical music recognition. The works found were categorized, and a refined model was proposed with four main components: preprocessing, music symbol recognition, reconstruction of music notation, and construction of the final representation. This model became the de facto standard for OMR and is still used today (albeit sometimes with slightly different names). For each of these four blocks, the survey gives an overview of the techniques used to solve the corresponding problem. As of 2019, it is the most frequently cited publication on OMR.

Deep learning (since 2016)

With the advent of deep learning, many problems in the field of machine vision have changed. Machine learning takes the place of manually created heuristics and hand-engineered features. Staff processing, music symbol recognition and the reconstruction of music notation have seen significant advances through deep learning.

In some cases, completely new methods have emerged that try to solve OMR directly by using sequence-to-sequence models. These methods convert an image of a music score directly into a simplified sequence of recognized notes.
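How a network's frame-by-frame predictions might be turned into such a symbol sequence can be sketched as follows. The text does not specify a decoding scheme; this example assumes a CTC-style greedy decoding (merge consecutive repeats, then drop a special blank label), which is one option used by some end-to-end approaches. The label vocabulary and the blank symbol are hypothetical.

```python
from itertools import groupby

BLANK = "-"  # hypothetical blank label emitted between symbols


def ctc_greedy_collapse(frame_labels, blank=BLANK):
    """Collapse per-frame labels into a symbol sequence.

    Consecutive identical labels are merged first, then blanks are removed,
    so a blank between two equal labels keeps them as separate symbols.
    """
    merged = [label for label, _ in groupby(frame_labels)]
    return [label for label in merged if label != blank]


# Most likely label for each image column, as a model might output them.
frames = [
    "-", "clef-G2", "clef-G2", "-",
    "note-C4_quarter", "note-C4_quarter", "-",
    "note-C4_quarter", "-", "barline",
]

print(ctc_greedy_collapse(frames))
# ['clef-G2', 'note-C4_quarter', 'note-C4_quarter', 'barline']
```

The output is a flat, one-dimensional sequence, which is why these methods were first applied to simplified cases such as monophonic scores.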

Significant scientific projects

Staff recognition competition

For systems developed before 2016, the detection and removal of staves was a significant challenge. A scientific competition was organized to improve the state of the art for this problem. Because very good results were achieved and many modern approaches no longer require explicit staff recognition, the competition was not continued.

An important contribution of this competition is the development and publication of the freely available CVC-MUSCIMA dataset. It consists of 1000 high-quality images of handwritten music scores: 50 different musicians each transcribed one page from each of 20 musical works of various kinds. A further development of CVC-MUSCIMA is the MUSCIMA++ dataset, which contains additional detailed annotations for 140 selected pages.

SIMSSA

The Single Interface for Music Score Searching and Analysis (SIMSSA) project is probably the largest research project investigating optical music recognition. Its goal is to provide large quantities of searchable music scores efficiently in electronic form. Some sub-projects have already been successfully completed, for example the Liber Usualis project and the Cantus Ultimus project.

TROMPA

Towards Richer Online Music Public-Domain Archives (TROMPA) is another international research project with the aim of making public domain digital music resources more accessible.

Datasets

The development of OMR systems is significantly influenced by the datasets used during development. A sufficiently large and diverse dataset helps ensure that the resulting system works robustly in different situations and can cope with a wide variety of inputs. Because sheet music is often protected by copyright, it can be difficult to create and publish a dataset. However, there are a number of datasets that the OMR Datasets project has collected and summarized. The most important are CVC-MUSCIMA, MUSCIMA++, DeepScores, PrIMuS, HOMUS, and the SEILS dataset, as well as the Universal Music Symbol Collection.

Software

Academic and open source software

A large number of scientific OMR projects were carried out, but only a few reached such a mature state that they were published and distributed to users. These systems are:

- Aruspix

- Audiveris

- CANTOR

- Gamera

- DMOS

- OpenOMR

- Rodan

Commercial software

Most of the commercial desktop applications released in the last 20 years have disappeared from the market due to a lack of commercial success. Only a few vendors currently develop, maintain and sell OMR products. Some of these products claim recognition rates close to 100%, but since the vendors do not disclose how these numbers were calculated or what data they are based on, the claims cannot be verified and the products are almost impossible to compare. In addition to the desktop applications, a number of mobile applications have been developed. Their reviews were mixed, however, and these projects were discontinued (or at least have not received any updates since 2017). A number of OMR applications have also been developed for the iPhone and iPad and are available in the Apple App Store.

- capella scan

- ForteScan Light from Fortenotation, now ScanScore

- MIDI-Connections Scan from MIDI-Connections

- MP Scan from Braeburn. Uses the SharpEye SDK.

- NoteScan, bundled with Nightingale

- OMeR (Optical Music easy Reader), an add-on for Harmony Assistant and Melody Assistant from Myriad Software (shareware)

- PDFtoMusic

- PhotoScore from Neuratron. The Light version of PhotoScore is used in Sibelius. PhotoScore uses the SharpEye SDK.

- Scorscan from npcImaging. Based on SightReader (?)

- SharpEye from Visiv

- VivaldiScan (same as SharpEye)

- SmartScore from Musitek. Formerly sold as "MIDISCAN". (SmartScore Lite was used in previous versions of Finale.)

- ScanScore from Lugert Verlag (also available in a bundle with Forte notation)

See also

- Pattern recognition

- Pattern analysis

- Text recognition (OCR) deals with the recognition of written text, which can be used to make documents searchable. OMR can take on a similar role in music information retrieval, but a complete OMR system must also recognize the text contained in music scores, which is why OCR can be seen as a sub-problem of OMR.

- Music information retrieval

- Music notation program

References

- Alexander Pacha: Self-Learning Optical Music Recognition (doctoral thesis). TU Wien, Austria, 2019. doi:10.13140/RG.2.2.18467.40484.

- Jorge Calvo-Zaragoza, Jan Hajič jr., Alexander Pacha: Understanding Optical Music Recognition. In: Computing Research Repository. 2019, ISSN 2331-8422, pp. 1-42.

- Ichiro Fujinaga: The OMR Story (2018) on YouTube, accessed July 30, 2019.

- Dennis Howard Pruslin: Automatic Recognition of Sheet Music (PhD thesis). Massachusetts Institute of Technology, Cambridge, Massachusetts, USA, 1966.

- David S. Prerau: Computer pattern recognition of printed music. In: Fall Joint Computer Conference, pp. 153-162.

- WABOT - WAseda roBOT. Waseda University Humanoid, accessed July 30, 2019.

- Wabot's entry in the IEEE collection of Robots. IEEE, accessed July 30, 2019.

- Audrey Laplante, Ichiro Fujinaga: Digitizing Musical Scores: Challenges and Opportunities for Libraries. In: 3rd International Workshop on Digital Libraries for Musicology, pp. 45-48.

- Jan Hajič jr., Marta Kolárová, Alexander Pacha, Jorge Calvo-Zaragoza: How Current Optical Music Recognition Systems Are Becoming Useful for Digital Libraries. In: 5th International Conference on Digital Libraries for Musicology, pp. 57-61.

- Donald Byrd, Jakob Grue Simonsen: Towards a Standard Testbed for Optical Music Recognition: Definitions, Metrics, and Page Images. In: Journal of New Music Research. 44, No. 3, 2015, pp. 169-195. doi:10.1080/09298215.2015.1045424.

- Donald Byrd: Gallery of Interesting Music Notation. Retrieved July 30, 2019.

- Donald Byrd: Extremes of Conventional Music Notation. Retrieved July 30, 2019.

- David Bainbridge, Tim Bell: The challenge of optical music recognition. In: Computers and the Humanities. 35, No. 2, 2001, pp. 95-121. doi:10.1023/A:1002485918032.

- Ana Rebelo, Ichiro Fujinaga, Filipe Paszkiewicz, Andre R. S. Marcal, Carlos Guedes, Jaime dos Santos Cardoso: Optical music recognition: state-of-the-art and open issues. In: International Journal of Multimedia Information Retrieval. 1, No. 3, 2012, pp. 173-190. doi:10.1007/s13735-012-0004-6.

- Francisco J. Castellanos, Jorge Calvo-Zaragoza, Gabriel Vigliensoni, Ichiro Fujinaga: Document Analysis of Music Score Images with Selectional Auto-Encoders. In: 19th International Society for Music Information Retrieval Conference, pp. 256-263.

- Lukas Tuggener, Ismail Elezi, Jürgen Schmidhuber, Thilo Stadelmann: Deep Watershed Detector for Music Object Recognition. In: 19th International Society for Music Information Retrieval Conference, pp. 271-278.

- Jan Hajič jr., Matthias Dorfer, Gerhard Widmer, Pavel Pecina: Towards Full-Pipeline Handwritten OMR with Musical Symbol Detection by U-Nets. In: 19th International Society for Music Information Retrieval Conference, pp. 225-232.

- Alexander Pacha, Jan Hajič jr., Jorge Calvo-Zaragoza: A Baseline for General Music Object Detection with Deep Learning. In: Applied Sciences. 8, No. 9, 2018, pp. 1488-1508. doi:10.3390/app8091488.

- Alexander Pacha, Kwon-Young Choi, Bertrand Coüasnon, Yann Ricquebourg, Richard Zanibbi, Horst Eidenberger: Handwritten Music Object Detection: Open Issues and Baseline Results. In: 13th International Workshop on Document Analysis Systems, pp. 163-168. doi:10.1109/DAS.2018.51.

- Alexander Pacha, Jorge Calvo-Zaragoza, Jan Hajič jr.: Learning Notation Graph Construction for Full-Pipeline Optical Music Recognition. In: 20th International Society for Music Information Retrieval Conference (in press).

- Eelco van der Wel, Karen Ullrich: Optical Music Recognition with Convolutional Sequence-to-Sequence Models. In: 18th International Society for Music Information Retrieval Conference.

- Jorge Calvo-Zaragoza, David Rizo: End-to-End Neural Optical Music Recognition of Monophonic Scores. In: Applied Sciences. 8, No. 4, 2018. doi:10.3390/app8040606.

- Arnau Baró, Pau Riba, Jorge Calvo-Zaragoza, Alicia Fornés: Optical Music Recognition by Recurrent Neural Networks. In: 14th International Conference on Document Analysis and Recognition, pp. 25-26. doi:10.1109/ICDAR.2017.260.

- Arnau Baró, Pau Riba, Jorge Calvo-Zaragoza, Alicia Fornés: From Optical Music Recognition to Handwritten Music Recognition: A baseline. In: Pattern Recognition Letters. 123, 2019. doi:10.1016/j.patrec.2019.02.029.

- Alicia Fornés, Anjan Dutta, Albert Gordo, Josep Lladós: The 2012 Music Scores Competitions: Staff Removal and Writer Identification. In: Graphics Recognition. New Trends and Challenges. Springer, 2013, pp. 173-186. doi:10.1007/978-3-642-36824-0_17.

- Website of the SIMSSA project. McGill University, accessed July 30, 2019.

- The Liber Usualis project website. McGill University, accessed July 30, 2019.

- The Cantus Ultimus project website. McGill University, accessed July 30, 2019.

- The TROMPA project website. Trompa Consortium, accessed July 30, 2019.

- Alexander Pacha: The OMR Datasets Project (GitHub repository). Retrieved July 30, 2019.

- Alicia Fornés, Anjan Dutta, Albert Gordo, Josep Lladós: CVC-MUSCIMA: A Ground-truth of Handwritten Music Score Images for Writer Identification and Staff Removal. In: International Journal on Document Analysis and Recognition. 15, No. 3, 2012, pp. 243-251. doi:10.1007/s10032-011-0168-2.

- Jan Hajič jr., Pavel Pecina: The MUSCIMA++ Dataset for Handwritten Optical Music Recognition. In: 14th International Conference on Document Analysis and Recognition, pp. 39-46. doi:10.1109/ICDAR.2017.16.

- Lukas Tuggener, Ismail Elezi, Jürgen Schmidhuber, Marcello Pelillo, Thilo Stadelmann: DeepScores - A Dataset for Segmentation, Detection and Classification of Tiny Objects. In: 24th International Conference on Pattern Recognition. doi:10.21256/zhaw-4255.

- Jorge Calvo-Zaragoza, David Rizo: Camera-PrIMuS: Neural End-to-End Optical Music Recognition on Realistic Monophonic Scores. In: 19th International Society for Music Information Retrieval Conference, pp. 248-255.

- Jorge Calvo-Zaragoza, Jose Oncina: Recognition of Pen-Based Music Notation: The HOMUS Dataset. In: 22nd International Conference on Pattern Recognition, pp. 3038-3043. doi:10.1109/ICPR.2014.524.

- Emilia Parada-Cabaleiro, Anton Batliner, Alice Baird, Björn Schuller: The SEILS Dataset: Symbolically Encoded Scores in Modern-Early Notation for Computational Musicology. In: 18th International Society for Music Information Retrieval Conference, pp. 575-581.

- Alexander Pacha, Horst Eidenberger: Towards a Universal Music Symbol Classifier. In: 14th International Conference on Document Analysis and Recognition, pp. 35-36. doi:10.1109/ICDAR.2017.265.

- Aruspix

- Audiveris

- CANTOR

- Gamera

- Bertrand Coüasnon: DMOS: a generic document recognition method, application to an automatic generator of musical scores, mathematical formulas and table structures recognition systems. In: Sixth International Conference on Document Analysis and Recognition, pp. 215-220. doi:10.1109/ICDAR.2001.953786.

- OpenOMR

- Rodan

- Information about the accuracy of capella-scan

- PhotoScore Ultimate 7

- PlayScore Pro

- iSeeNotes

- NotateMe Now

- MusicPal

- Sheet Music Scanner

- PlayScore 2

- Notation Scanner - Music OCR

- Comp Create

- Info capella-scan

- FORTE Scan Light. http://www.fortenotation.com/en/products/sheet-music-scanning/forte-scan-light (archived September 22, 2013, in the Internet Archive)

- Scan Score

- MIDI-Connections SCAN 2.0. http://www.midi-connections.com/Product_Scan.htm (archived December 20, 2013, in the Internet Archive)

- Music Publisher Scanning Edition. http://www.braeburn.co.uk/mpsinfo.htm (archived April 13, 2013, in the Internet Archive)

- NoteScan

- OMeR

- PDFtoMusic

- ScorScan

- SharpEye

- VivaldiScan. http://www.vivaldistudio.com/Eng/VivaldiScan.asp (archived December 24, 2005, in the Internet Archive)

- SmartScore. http://www.musitek.com/smartscre.html (archived April 17, 2012, in the Internet Archive)

- Scanning and editing notes | It's easy with ScanScore. Accessed December 19, 2019 (German).

- FORTE 11 Premium. Accessed December 19, 2019 (German).

External links

- Website about research activities in the field of optical music recognition

- Github page for open-source projects on optical music recognition

- Bibliography on OMR Research

- Recording of the ISMIR 2018 tutorial "Optical Music Recognition for Dummies"

- Optical Music Recognition (OMR): Programs and scientific papers

- OMR (Optical Music Recognition) Systems: detailed overview of OMR systems (last updated January 30, 2007).

- German sheet music scan overview page by Gerd Castan

- Bringing Sheet Music to Life: My Experiences with OMR (Andrew H. Bullen)