Pipeline (processor)

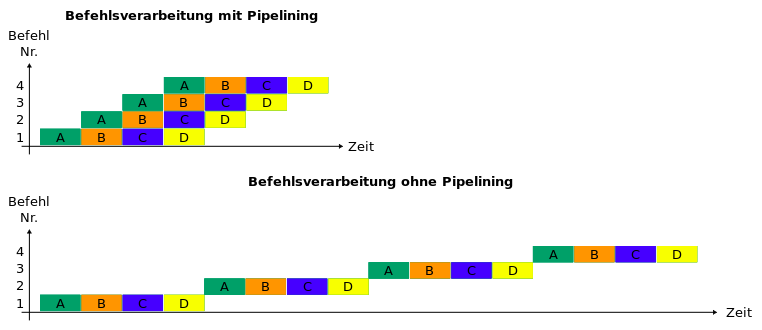

The pipeline (also instruction pipeline or processor pipeline) is a kind of "assembly line" in microprocessors: the processing of machine instructions is broken down into subtasks that are carried out for several instructions in parallel. This principle, often called pipelining for short, is a widespread microarchitectural technique in today's processors.

Instead of an entire instruction, only one subtask is processed during a clock cycle of the processor, but the various subtasks of several instructions are processed simultaneously. Since these subtasks are simpler (and therefore faster) than processing the entire instruction in one piece, pipelining allows the microprocessor to run at a higher clock frequency. A single instruction now needs several cycles to execute, but because the quasi-parallel processing of several instructions "completes" one instruction in each cycle, the overall throughput increases.

The individual subtasks of a pipeline are called pipeline stages or pipeline segments. These stages are separated by clocked pipeline registers. In addition to an instruction pipeline, various other pipelines are used in modern systems, for example an arithmetic pipeline in the floating point unit.

Example

Example of a four-stage instruction pipeline:

- A - Load the instruction code (IF, Instruction Fetch)

- In the instruction fetch phase, the instruction addressed by the program counter is loaded from main memory. The program counter is then incremented.

- B - Decode the instruction and load the data (ID, Instruction Decode)

- In the decode and load phase, the loaded instruction is decoded (first half of the clock cycle) and the required data is loaded from main memory and the register set (second half of the clock cycle).

- C - Execute the instruction (EX, Execution)

- In the execution phase, the decoded instruction is executed. The result is buffered in the pipeline latch.

- D - Write back the results (WB, Write Back)

- In the write-back phase, the result is written back to main memory or to the register set.
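The overlap of the four stages can be made visible with a small simulation. The following is only a sketch under idealized assumptions (no conflicts, every stage takes exactly one cycle); the function names `pipeline_schedule` and `format_schedule` are made up for this illustration.

```python
STAGES = ["IF", "ID", "EX", "WB"]  # the four stages A to D of the example


def pipeline_schedule(num_instructions, stages=STAGES):
    """Return one dict per clock cycle mapping stage name -> instruction index.

    Idealized model: instruction i (0-based) enters stage s in cycle i + s.
    A stage that holds no instruction in a cycle maps to None.
    """
    k = len(stages)
    total_cycles = k + num_instructions - 1 if num_instructions > 0 else 0
    schedule = []
    for cycle in range(total_cycles):
        row = {}
        for s, name in enumerate(stages):
            instr = cycle - s
            row[name] = instr if 0 <= instr < num_instructions else None
        schedule.append(row)
    return schedule


def format_schedule(schedule, stages=STAGES):
    """Render the schedule as a plain-text table, one line per cycle."""
    lines = ["cycle  " + "  ".join(f"{name:>3}" for name in stages)]
    for cycle, row in enumerate(schedule, start=1):
        cells = []
        for name in stages:
            label = "-" if row[name] is None else f"I{row[name] + 1}"
            cells.append(f"{label:>3}")
        lines.append(f"{cycle:>5}  " + "  ".join(cells))
    return "\n".join(lines)


if __name__ == "__main__":
    # Five instructions need 4 + 5 - 1 = 8 cycles in this idealized model.
    print(format_schedule(pipeline_schedule(5)))
```

From the fourth cycle on, all four stages are busy with different instructions, and one instruction leaves the WB stage per cycle.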

Clocking

The simpler a single stage is, the higher the frequency at which it can be operated. In a modern CPU with a core clock in the gigahertz range (1 GHz corresponds to 1 billion clock cycles per second), the instruction pipeline can be more than 30 stages long (see Intel NetBurst microarchitecture). One period of the core clock is the time an instruction needs to traverse one stage of the pipeline. In a k-stage pipeline, an instruction therefore passes through k stages in k cycles. Since a new instruction is loaded in each cycle, ideally one instruction also leaves the pipeline per cycle.

The cycle time $\tau$ is the clock period of the pipeline. It is calculated from the maximum $\tau_m$ of all stage delays $\tau_i$ plus an additional overhead $d$, which is caused by buffering the results in the pipeline registers.

Cycle time: $\tau = \max_i \{\tau_i\} + d = \tau_m + d$
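The cycle time rule (slowest stage delay plus register overhead) can be tried out with a few numbers. This is a minimal sketch; the stage delays and the register overhead are invented example values in picoseconds, not measurements of any real processor.

```python
def cycle_time(stage_delays, register_overhead):
    """Cycle time = slowest stage delay + pipeline register overhead."""
    return max(stage_delays) + register_overhead


def max_clock_frequency_hz(cycle_time_ps):
    """Highest clock frequency for a cycle time given in picoseconds."""
    return 1e12 / cycle_time_ps


if __name__ == "__main__":
    # Hypothetical delays of a four-stage pipeline (IF, ID, EX, WB) in ps.
    delays_ps = [800, 1000, 1200, 900]
    overhead_ps = 100
    tau = cycle_time(delays_ps, overhead_ps)
    print(f"cycle time: {tau} ps")
    print(f"max clock:  {max_clock_frequency_hz(tau) / 1e6:.0f} MHz")
```

The slowest stage determines the clock for all stages, which is why stages of roughly equal delay make the best use of a pipeline.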

Performance increase

Pipelining increases the overall throughput compared to instruction processing without pipelining. The total time for pipelined processing with $k$ stages and $n$ instructions at a cycle time $\tau$ is:

Total duration: $T_k = [k + (n - 1)] \cdot \tau$

Initially the pipeline is empty and is filled in $k$ steps. After that, a new instruction is loaded into the pipeline and another instruction is completed in each cycle. The remaining instructions are therefore completed in $(n - 1)$ steps.

Forming the quotient of the total run times for instruction processing without and with pipelining gives the speed-up. It represents the gain in performance achieved through pipelining:

Speed-Up: $S_k = \frac{T_1}{T_k} = \frac{n \cdot k \cdot \tau}{[k + (n - 1)] \cdot \tau}$

Assuming that there are always enough instructions to fill the pipeline and that the cycle time without a pipeline is longer by a factor of $k$, the limit of the speed-up for $n$ towards infinity is:

$\lim_{n \to \infty} S_k = k$
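The following sketch evaluates total duration and speed-up numerically. It assumes `k` stages, `n` instructions and a cycle time `tau`, and it adopts the assumption made in the text that processing without a pipeline takes `k * tau` per instruction. The function names are chosen for this illustration only.

```python
def total_time_pipelined(k, n, tau):
    """k cycles to fill the pipeline, then one instruction per cycle."""
    return (k + (n - 1)) * tau


def total_time_unpipelined(k, n, tau):
    """Assumption from the text: each instruction takes k * tau on its own."""
    return n * k * tau


def speedup(k, n):
    """Ratio of unpipelined to pipelined run time; tau cancels out."""
    return (n * k) / (k + (n - 1))


if __name__ == "__main__":
    k = 4
    for n in (1, 4, 100, 1_000_000):
        print(f"n = {n:>9}: speed-up = {speedup(k, n):.4f}")
```

For a single instruction the speed-up is 1, and for growing `n` it approaches the number of stages `k` without reaching it.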

This means that performance can be increased by increasing the number of stages. However, instruction processing cannot be divided into arbitrarily many stages, and the cycle time cannot be made arbitrarily short. A larger number of stages also makes data or control conflicts more costly when they occur. The hardware effort likewise grows with the number of stages.

Conflicts

If processing an instruction in one stage of the pipeline requires that an instruction further ahead in the pipeline has been processed first, one speaks of dependencies. These can lead to conflicts (hazards). Three types of conflict can arise:

- Resource conflicts, when one stage in the pipeline needs access to a resource that is already in use by another stage

- Data conflicts
  - at instruction level: data used in an instruction is not available
  - at transfer level: register contents used in a step are not available

- Control flow conflicts, when the pipeline has to wait to see whether a conditional jump is taken or not

These conflicts force the affected instructions to wait ("stall") at the beginning of the pipeline, which creates gaps (also called "bubbles") in the pipeline. As a result, the pipeline is not used optimally and throughput drops. One therefore tries to avoid these conflicts as far as possible:
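The effect of bubbles on throughput can be estimated with a very simple model. The sketch below assumes an in-order pipeline in which every stall cycle delays all following instructions by exactly one cycle; the stall counts in the example are invented.

```python
def cycles_with_stalls(k, stalls_per_instruction):
    """Total cycles for a k-stage pipeline when instruction i waits
    stalls_per_instruction[i] extra cycles before it can proceed."""
    n = len(stalls_per_instruction)
    if n == 0:
        return 0
    return k + (n - 1) + sum(stalls_per_instruction)


def instructions_per_cycle(k, stalls_per_instruction):
    """Average throughput of the whole run."""
    cycles = cycles_with_stalls(k, stalls_per_instruction)
    return len(stalls_per_instruction) / cycles if cycles else 0.0


if __name__ == "__main__":
    no_stalls = [0, 0, 0, 0, 0]
    some_stalls = [0, 2, 0, 1, 0]  # hypothetical bubbles caused by conflicts
    print(cycles_with_stalls(4, no_stalls), instructions_per_cycle(4, no_stalls))
    print(cycles_with_stalls(4, some_stalls), instructions_per_cycle(4, some_stalls))
```

In this model, three bubbles raise the run time of five instructions from 8 to 11 cycles.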

Resource conflicts can be resolved by adding further functional units. Many data conflicts can be resolved through forwarding, in which results from later pipeline stages are passed back to earlier stages as soon as they are available (rather than only at the end of the pipeline).

The number of control flow conflicts can be reduced by branch prediction. Here, execution continues speculatively until it is certain whether the prediction was correct. In the event of an incorrect branch prediction, the instructions executed in the meantime have to be discarded (pipeline flush), which costs a lot of time, especially in architectures with a long pipeline (such as Intel Pentium 4 or IBM Power5). These architectures therefore use very sophisticated branch prediction techniques, so that the CPU has to discard the contents of the instruction pipeline for less than one percent of the branches that occur.

Advantages and disadvantages

The advantage of long pipelines is the significant increase in processing speed. The disadvantage is that many instructions are in flight at the same time. In the event of a pipeline flush, all instructions in the pipeline must be discarded and the pipeline refilled. This requires reloading instructions from main memory or from the CPU's instruction cache, which results in high latencies during which the processor is idle. In other words, the more instructions there are between control flow changes, the greater the gain from pipelining, since the pipeline then has to be flushed only after a long period of operation under full load.
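How strongly flushes weigh on a long pipeline can be illustrated with a rough cost model. The sketch assumes that the pipeline otherwise completes one instruction per cycle and that every flush costs a fixed number of idle cycles; the penalty of 20 cycles is an invented value, not a figure for any particular processor.

```python
def average_cycles_per_instruction(instructions_between_flushes, flush_penalty_cycles):
    """Average cycles per instruction if the pipeline is flushed once after
    each run of `instructions_between_flushes` instructions."""
    useful = instructions_between_flushes
    return (useful + flush_penalty_cycles) / useful


if __name__ == "__main__":
    penalty = 20  # hypothetical refill cost of a long pipeline, in cycles
    for run_length in (10, 100, 1000):
        cpi = average_cycles_per_instruction(run_length, penalty)
        print(f"{run_length:>5} instructions between flushes: {cpi:.2f} cycles/instruction")
```

With only 10 instructions between flushes the model needs three cycles per instruction; with 1000 it stays close to the ideal of one.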

Exploitation by software

A programmer who knows that the pipelines exist can use this knowledge to utilize the processor well. In particular, control flow conflicts can be avoided.

Use of flags instead of conditional jumps

If an architecture has a carry flag and instructions that include it in arithmetic operations, it can be used to set Boolean variables without branching.

Example (8086 in Intel syntax, i.e. in the form instruction destination,source):

less efficient:

...

mov FLUSH_FLAG,0 ; FLUSH_FLAG = false

mov ax,CACHE_POINTER

cmp ax,CACHE_ENDE

jb MARKE; jump if below (CACHE_POINTER < CACHE_ENDE), branch

mov FLUSH_FLAG,1 ; FLUSH_FLAG = true

MARKE:

...

more efficient:

...

mov FLUSH_FLAG,0

mov ax,CACHE_POINTER

cmp ax,CACHE_ENDE

cmc; complement carry flag

adc FLUSH_FLAG,0 ; FLUSH_FLAG = FLUSH_FLAG + 0 + carry flag, no branch

...
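That both instruction sequences produce the same flag value can be checked with a small model of the carry flag. The sketch below only mimics the 16-bit unsigned semantics of `cmp`, `cmc` and `adc` in Python; it says nothing about timing, and the function names are made up for this illustration.

```python
MASK16 = 0xFFFF  # 8086 registers such as ax are 16 bits wide


def flush_flag_branching(cache_pointer, cache_end):
    """Model of the variant with the conditional jump (jb)."""
    flush_flag = 0                          # mov FLUSH_FLAG,0
    ax = cache_pointer & MASK16             # mov ax,CACHE_POINTER
    carry = ax < (cache_end & MASK16)       # cmp sets CF on unsigned borrow
    if not carry:                           # jb MARKE skips the store if CF = 1
        flush_flag = 1                      # mov FLUSH_FLAG,1
    return flush_flag


def flush_flag_branchless(cache_pointer, cache_end):
    """Model of the variant with cmc and adc."""
    flush_flag = 0                          # mov FLUSH_FLAG,0
    ax = cache_pointer & MASK16             # mov ax,CACHE_POINTER
    carry = int(ax < (cache_end & MASK16))  # cmp ax,CACHE_ENDE
    carry ^= 1                              # cmc: complement the carry flag
    return (flush_flag + 0 + carry) & MASK16  # adc FLUSH_FLAG,0


if __name__ == "__main__":
    for pointer, end in [(0, 1), (5, 5), (0xFFFF, 0), (0x1234, 0x8000)]:
        print(pointer, end, flush_flag_branching(pointer, end),
              flush_flag_branchless(pointer, end))
```

In both variants the flag ends up as 1 exactly when the pointer is not below the end value.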

Jumping in the rarer case

If it is known which case of a branch is more probable, the branch should be taken in the less probable case. If, for example, a block is executed only rarely, it should not be skipped over when it is not executed (as would be done in structured programming). Instead it should be placed somewhere else, reached with a conditional jump, and left with an unconditional jump, so that in the normal case no branch is taken.

Example (8086):

many branches:

...

PROZEDUR:

...

inc cx

cmp cx,100

jb MARKE; branch taken in the normal case (99 of 100)

xor cx,cx

MARKE:

...

few branches:

MARKEA:

xor cx,cx

jmp MARKEB

PROZEDUR:

...

inc cx

cmp cx,100

jnb MARKEA; branch taken only in the exceptional case (1 of 100)

MARKEB:

...
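The difference between the two layouts can be counted with a small model. The sketch below replays the counter logic of both variants and counts how often a jump is actually taken; it is an illustration of the jump counts only, not a timing model, and the function names are invented.

```python
def taken_jumps_many_branches(calls):
    """Layout 1: jb MARKE is taken whenever cx < 100 after the increment."""
    cx = 0
    taken = 0
    for _ in range(calls):
        cx += 1                # inc cx
        if cx < 100:           # cmp cx,100 / jb MARKE (taken)
            taken += 1
        else:
            cx = 0             # xor cx,cx (fall through, no jump)
    return taken


def taken_jumps_few_branches(calls):
    """Layout 2: jnb MARKEA is taken only when cx reaches 100,
    followed by the unconditional jmp MARKEB back."""
    cx = 0
    taken = 0
    for _ in range(calls):
        cx += 1                # inc cx
        if cx >= 100:          # cmp cx,100 / jnb MARKEA (taken)
            taken += 1
            cx = 0             # xor cx,cx
            taken += 1         # jmp MARKEB
    return taken


if __name__ == "__main__":
    print(taken_jumps_many_branches(1000))
    print(taken_jumps_few_branches(1000))
```

Over 1000 passes the first layout takes a jump 990 times, the second only 20 times (10 conditional and 10 unconditional jumps).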

Alternate different resources

Since memory accesses and the use of the arithmetic logic unit take a relatively long time, it can help to alternate between different resources as far as possible.

Example (8086):

less efficient:

...

div CS:DIVISOR; memory accesses

mov DS:REST,dx; close together

mov ES:QUOTIENT,ax;

mov cx,40h

cld

MARKE:

...

loop MARKE

...

more efficient:

...

div CS:DIVISOR; memory accesses

mov cx,40h

mov DS:REST,dx; interleaved with

cld

mov ES:QUOTIENT,ax; other instructions

MARKE:

...

loop MARKE

...

Literature

- Andrew S. Tanenbaum: Computer Architecture: Structures - Concepts - Basics. 5th edition. Pearson Studium, Munich 2006, ISBN 3-8273-7151-1, especially chapter 2.1.5.

Web links

- Articles on pipelining (Part 1, Part 2), arstechnica.com (English)

- Elaboration on the basics of computer architecture, especially Chapter 6 (Pipelining).