Priority queue

In computer science , a is priority queue (and priority list , the priority queue , priority queue or English priority queue called) a particular abstract data structure , more specifically an expanded form of a queue . The elements that are placed in the queue are given a key that determines the order in which the elements are processed.

Operations

Priority queues need the operations

- insert , to insert an element and

- extractMin , for returning and removing the element with the lowest key (= highest priority)

- isEmpty , to check if the queue is empty,

support.

There are also operations that are particularly important for online algorithms :

- remove remove an element

- decreaseKey decrease the key value (= increase the priority)

Other commonly used operations are:

- peek , return the item with the highest priority without changing the queue

- merge , merging of two priority queues

implementation

The implementation of priority queues can be done via AVL trees . Both insert and extractMin can then be executed in O (log n) time. Another possibility is to use partially ordered trees ( heaps ). Here, too, the time complexity is O (log n).

Examples of data structures that efficiently implement priority queues are

- AVL tree

- Binary heap

- Binomial heap

- Fibonacci heap (amortized runtime for remove and extractMin O (log n), otherwise O (1), worst case O (n) or O (log n))

- K-level buckets

- Left tree

- Radix heap

- Van Emde Boas priority queue

The following table shows an overview of the different runtimes of some implementations in O notation .

| surgery | peek | extractMin | insert | decreaseKey | merge |

|---|---|---|---|---|---|

| Binary heap | Θ (1) | Θ (log n ) | O (log n ) | O (log n ) | Θ ( n ) |

| Left tree | Θ (1) | Θ (log n ) | Θ (log n ) | O (log n ) | Θ (log n ) |

| Binomial heap | Θ (1) | Θ (log n ) | Θ (1) a | Θ (log n ) | O (log n ) b |

| Fibonacci heap | Θ (1) | O (log n ) a | Θ (1) | Θ (1) a | Θ (1) |

Applications

Priority queues can be used to implement discrete event simulations. The times are calculated as a key for the respective events, the time-event pair is inserted into the priority queue and the priority queue then outputs the current (i.e. next to be processed) event.

In general, priority queues are needed for many algorithms or even classes of algorithms.

For example, greedy algorithms make use of priority queues, since there often the minimum or maximum of a set must be determined. One of the best-known algorithms of this type is Dijkstra's algorithm for calculating the shortest paths.

You can also use priority queues for best search algorithms. A priority queue is usually used to find the next most promising node in one iteration. An example of this is the A * algorithm for calculating the shortest paths in graphs.

A special algorithm that can also be implemented with priority queues is Huffman coding . The priority queue is used here to find the characters with the lowest probability.

Extensions

In addition to the classic, single-ended priority queue, there are also double-ended queues. These also support an extractMax operation to extract the element with the largest key. Double heaps offer one possibility for the implementation of such data structures . Alternatively, data structures such as min-max heaps can also be used, which directly support two-end priority queues.

Parallel priority queue

In order to speed up priority queues further, they can be parallelized. There are three different options for parallelization. The first option is to simply parallelize the individual operations ( insert , extractMin ...). The second option allows multiple processes to access the priority queue at the same time. The last option is to perform each operation on k elements instead of just one element at a time. In the case of extractMin, for example, the first k elements with the highest priority would be removed from the priority queue.

Parallelize individual operations

A simple approach to parallelizing priority queues is to parallelize the individual operations. This means that the queue works exactly like a sequential one, but the individual operations are accelerated by the use of several processors. The maximum speedup that can be achieved here is limited by the fact that there are sequential implementations for priority queues whose slowest operation runs in time (e.g. binomial heap).

On a Concurrent Read, Exclusive Write (CREW) PRAM model, the operations insert , extractMin , decreaseKey and remove can be performed in constant time if processors are available, where the size of the priority queue is. In the following, the queue is implemented as a binomial heap. After each operation, it must apply that a maximum of three trees of the same order exist and that the smallest root of the trees of the order is smaller than all the roots of trees of the higher order.

- insert (e) : A new binomial tree with order 0, whose only node contains the element e , is inserted. Then two trees of the same order are merged into one tree of order if three trees of the order exist. The tree with the smallest root of the trees with order is not used for this. This step is carried out in parallel with each processor taking care of the trees of an order. Thus insert in is feasible.

The following is the operation in pseudocode .

insert(e) {

L[0] = L[0] ∪ e

parallelesZusammenfügen()

}

parallelesZusammenfügen() {

für jede Ordnung i parallel:

falls |L[i]| >= 3:

füge zwei Bäume aus L[i] \ min(L[i]) zu einem neuen Baum b zusammen

L[i+1] = L[i+1] ∪ b

}

- extractMin : The minimum to be removed is the smallest element of the trees of order 0. This element is removed. To ensure that the new minimum is then also in order 0, the tree with the smallest root is split up for each order and the two new trees are added to the order . By assigning each processor to exactly one order, this step can be carried out in parallel . After this step, as in the insert operation, two trees of the order are merged to form one tree of the order if at least three trees of the order exist. Since this step can also be carried out in parallel for each order, extractMin can be carried out in constant time.

extractMin() {

e = min(L[0])

L[0] = L[0]\e

für jede Ordnung i parallel:

falls |L[i]| >= 1:

teile min(L[i]) in zwei Bäume a,b

L[i] = L[i] \ min(L[i])

L[i-1] = L[i-1] ∪ {a,b}

parallelesZusammenfügen()

return e

}

The concept for constant insert and extractMin operations can be extended to achieve a constant runtime for remove . The decreaseKey operation can then also be implemented in constant time by a remove and a subsequent insert .

The advantage of this parallelization is that it can be used just like a normal, sequential priority queue. But only a speedup of can be achieved. In practice, this is further restricted by the fact that, in the case of a parallel implementation, additional effort has to be made for communication between the various processors.

Simultaneous parallel access

If concurrent access to a priority queue is allowed, multiple processes can simultaneously perform operations on the priority queue. However, this poses two problems. For one thing, the semantics of the individual operations are no longer obvious. For example, if two processes are running extractMin at the same time , should both processes receive the same item or should they be different? This limits parallelism to the level of the application using the precedence queue. On the other hand, there is the problem of the access conflict, since several processes have access to the same element.

Simultaneous access to a priority queue can be implemented on a Concurrent Read, Concurrent Write (CRCW) PRAM model. In the following, the priority queue is implemented as a skip list . In addition, an atomic synchronization primitive CAS is used to enable a lock- free skip list. The nodes of the skip list consist of a unique key, a priority, an array of pointers to the next node and a delete tag. The delete indicator indicates whether the node is currently being deleted by a process. This means that the other processes know that the node is about to be deleted and can react accordingly, depending on which operations they are performing.

- insert (e) : First a new node with a key and a priority is created. In addition, a number of levels are passed to the node. Then a search is started to find the correct position in the skip list where you have to add the newly created node. The search starts from the first node and the highest level and traverses the skip list down to the lowest level until the correct position is found. The most recently traversed node is saved as the previous node for each level. In addition, the node to which the pointer of the predecessor node points is saved as the successor node. After that, for each level of the new node, the pointer of the saved previous node is set to the new node. Finally, the pointers for each level of the new node are set to the corresponding successor nodes.

- extractMin () : First the skip list is traversed until a node is reached whose delete flag is not set. Then the delete flag is set for this node. Then the pointers of the previous node are updated.

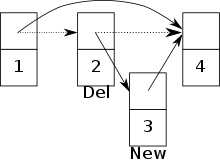

When allowing simultaneous access to a priority queue, care must be taken to avoid potential conflicts between two processes. For example, a conflict can arise if a process tries to add a node, but another process is already deleting its predecessor node. There is a risk that the new node was added to the skip list, but is no longer accessible. ( See picture )

K element operations

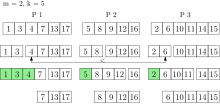

This type of parallelization of priority queues introduces new operations which no longer process a single element, but rather elements simultaneously. The new operation k_extractMin then deletes the smallest elements from the priority queue and returns them. The queue is parallelized on distributed memory. Each processor has its own private memory and a local (sequential) priority queue. The elements of the global queue are therefore distributed across all processors. In the case of a k_insert operation, the elements are randomly evenly distributed to the processors and each inserted into the local priority queues. Individual elements can still be inserted. With this strategy, there is a high probability that the globally smallest elements are in the union of the locally smallest elements of all processors. Each processor thus holds a representative part of the global priority queue.

This property is exploited with k_extractMin by removing the smallest elements from each local queue and collecting them in a result set. The elements remain assigned to their original processor. The number of elements that are deleted from each local priority queue depends on and the number of processors . The smallest elements of the result set are determined by parallel selection . It is very likely that these are also the smallest elements globally . If not, elements are again deleted from each local precedence queue until the globally smallest elements are in the result set. Now the smallest elements can be returned. All other items are put back into the local priority queues from which they were removed. The runtime of k_extractMin is expected when and describes the size of the priority queue.

The priority queue can be improved by not always inserting the result set directly into the local queue after a k_extractMin operation. This saves you from having to repeatedly remove elements from a local queue and then reinsert them immediately.

By removing several elements at the same time, a significant acceleration can be achieved compared to sequential priority queues. However, not all algorithms can take advantage of this type of priority queue. For example, with the Dijkstra algorithm, multiple nodes cannot be processed at once. Dijkstra takes the node with the smallest distance to the start node from the priority queue and then relaxes its edges. This can change the priority of the neighboring nodes that are already in the priority queue. By removing the nodes with the smallest distance, it can happen that the processing of one of the nodes changes the priority of one of the other nodes. This node should then only be edited later, but will now be edited earlier. This then marks this node as visited too early. In this case a path has been found from the starting node to this node, but the distance is not minimal. In other words, by using k element operations for Dijkstra's algorithm, the Label Setting property is destroyed.

literature

- George F. Luger: Artificial Intelligence. Strategies for solving complex problems . 2001, ISBN 3-8273-7002-7

Web links

-

java.util.PriorityQueuein the Java API at Oracle (English)

Individual evidence

- ↑ a b c Thomas H. Cormen ; Charles E. Leiserson ; Ronald L. Rivest : Introduction to Algorithms . In: MIT Press and McGraw-Hill . 1990, ISBN 0-262-03141-8 .

- ^ Clark A. Clane: Linear Lists and Priority Queues as Balanced Binary Trees (Ph.D. thesis) . Ed .: Department of Computer Science, Stanford University. 1972, ISBN 0-8240-4407-X ( stanford.edu ).

- ↑ Binomial Heap | Brilliant Math & Science Wiki ( en-us )

- ↑ Michael Fredman; Robert Tarjan : Fibonacci heaps and their uses in improved network optimization algorithms . In: Journal of the Association for Computing Machinery . tape 34 , no. 3 , July 1987, p. 596-615 , doi : 10.1145 / 28869.28874 ( ict.ac.cn [PDF]).

- ↑ a b Brodal, Gerth: Priority Queues on Parallel Machines . In: Algorithm Theory - SWAT 96 . Springer-Verlag, 1996, p. 416-427 , doi : 10.1007 / 3-540-61422-2_150 .

- ↑ a b Håkan Sundell, Philippas Tsigas: Fast and lock-free concurrent priority queues for multi-thread systems . In: Journal of Parallel and Distributed Computing . 65, No. 5, 2005, pp. 609-627. doi : 10.1109 / IPDPS.2003.1213189 .

- ^ Lindén, Jonsson: A Skiplist-Based Concurrent Priority Queue with Minimal Memory Contention . In: Technical Report 2018-003 . 2013 ( uu.se ).

- ↑ a b c Peter Sanders ; Kurt Mehlhorn; Martin Dietzfelbinger; Roman Dementiev: Sequential and Parallel Algorithms and Data Structures - The Basic Toolbox . Ed .: Springer International Publishing . 2019, p. 226-229 , doi : 10.1007 / 978-3-030-25209-0 .