Bayesian estimator

A Bayesian estimator (named after Thomas Bayes) is an estimator in mathematical statistics that, in addition to the observed data, takes into account any prior knowledge about the parameter to be estimated. Following the approach of Bayesian statistics, this prior knowledge is modeled by a distribution for the parameter, the prior (a priori) distribution. Bayes' theorem then yields the conditional distribution of the parameter given the observed data, the posterior (a posteriori) distribution. To obtain a single estimated value from it, location measures of the posterior distribution, such as the expected value, mode or median, are used as so-called Bayesian estimators. Since the posterior expected value is the most important and most frequently used of these in practice, some authors refer to it as the Bayesian estimator. In general, a Bayesian estimator is defined as the value that minimizes the expected value of a loss function under the posterior distribution. For a quadratic loss function, this yields the posterior expected value as the estimator.

Definition

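Let $\theta$ denote the parameter to be estimated and $f(x \mid \theta)$ the likelihood, i.e. the distribution of the observation $x$ as a function of $\theta$. The prior distribution of the parameter is denoted by $f(\theta)$. Then

$$f(\theta \mid x) = \frac{f(x \mid \theta)\, f(\theta)}{\int f(x \mid \theta)\, f(\theta)\, d\theta}$$

is the posterior distribution of $\theta$. Let a function $\ell(\hat\theta, \theta)$, called the loss function, be given, whose values model the loss incurred when $\theta$ is estimated by $\hat\theta$. Then a value $\hat\theta$ that minimizes the expected value

$$\operatorname{E}(\ell(\hat\theta, \theta) \mid x) = \int \ell(\hat\theta, \theta)\, f(\theta \mid x)\, d\theta$$

of the loss under the posterior distribution is called a Bayesian estimate of $\theta$. In the case of a discrete distribution of $\theta$, the integrals over $\theta$ are to be understood as sums over $\theta$.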
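The definition can be illustrated for a discrete parameter, where the integral becomes a sum. The following is a minimal sketch, not a reference implementation: the function name `bayes_estimate`, the five parameter values and their posterior weights are all hypothetical, and the search is restricted to the listed candidate values.

```python
from typing import Callable, Sequence


def bayes_estimate(
    thetas: Sequence[float],
    posterior: Sequence[float],
    loss: Callable[[float, float], float],
) -> float:
    """Return the candidate in `thetas` with the smallest expected posterior loss."""

    def expected_loss(estimate: float) -> float:
        # Discrete case: the integral over theta becomes a sum.
        return sum(loss(estimate, t) * w for t, w in zip(thetas, posterior))

    return min(thetas, key=expected_loss)


# Hypothetical discrete posterior on five parameter values (weights sum to 1).
thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
posterior = [0.1, 0.4, 0.3, 0.1, 0.1]

quadratic = bayes_estimate(thetas, posterior, lambda est, t: (est - t) ** 2)
zero_one = bayes_estimate(thetas, posterior, lambda est, t: 0.0 if est == t else 1.0)
print(quadratic, zero_one)
```

Because only the listed candidates are searched, the quadratic loss selects the candidate closest to the posterior expected value (here 0.44) rather than that value itself, while the zero-one loss selects the candidate with the largest posterior weight.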

Special cases

Posterior expected value

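An important and frequently used loss function is the squared error

$$\ell(\hat\theta, \theta) = (\hat\theta - \theta)^2.$$

With this loss function, the Bayesian estimator is the expected value of the posterior distribution, or posterior expected value for short:

$$\hat\theta = \operatorname{E}(\theta \mid x) = \int \theta\, f(\theta \mid x)\, d\theta.$$

This can be seen as follows: differentiating $\operatorname{E}(\ell(\hat\theta, \theta) \mid x)$ with respect to $\hat\theta$ gives

$$\frac{d}{d\hat\theta} \int (\hat\theta - \theta)^2 f(\theta \mid x)\, d\theta = 2 \int (\hat\theta - \theta)\, f(\theta \mid x)\, d\theta = 2 \left( \hat\theta - \int \theta\, f(\theta \mid x)\, d\theta \right),$$

where the last step uses the fact that the posterior density integrates to 1. Setting this derivative to zero and solving for $\hat\theta$ yields the above formula.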

Posterior median

Another important Bayesian estimator is the median of the posterior distribution. It results from the piecewise linear loss function

$$\ell(\hat\theta, \theta) = |\hat\theta - \theta|,$$

the absolute error. For a continuous posterior distribution, the associated Bayesian estimator $\hat\theta$ is the solution of the equation

$$\int_{-\infty}^{\hat\theta} f(\theta \mid x)\, d\theta = \frac{1}{2},$$

that is, the median of the distribution with density $f(\theta \mid x)$.
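The link between absolute error and the median can be checked numerically. The sketch below uses a discrete analogue of the continuous case described above: a hypothetical posterior on five values, for which a brute-force minimization of the expected absolute error is compared with the point where the cumulative posterior weight first reaches 1/2. The function names and the weights are assumptions made for illustration.

```python
def weighted_median(thetas, weights):
    """Smallest theta whose cumulative posterior weight reaches 1/2."""
    cumulative = 0.0
    for theta, weight in sorted(zip(thetas, weights)):
        cumulative += weight
        if cumulative >= 0.5:
            return theta
    raise ValueError("weights must sum to at least 0.5")


def absolute_loss_minimizer(thetas, weights):
    """Brute-force minimizer of the expected absolute error over the candidates."""

    def expected_loss(estimate):
        return sum(abs(estimate - t) * w for t, w in zip(thetas, weights))

    return min(thetas, key=expected_loss)


# Hypothetical discrete posterior (weights sum to 1, no ties at 1/2).
thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
weights = [0.1, 0.25, 0.35, 0.2, 0.1]

median = weighted_median(thetas, weights)
minimizer = absolute_loss_minimizer(thetas, weights)
print(median, minimizer)
```

Both functions return the same value for this posterior; the weights were chosen so that the cumulative weight never equals exactly 1/2, a case in which the median would not be unique.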

Posterior mode

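For a discretely distributed parameter $\theta$, a natural choice is the zero-one loss function

$$\ell(\hat\theta, \theta) = \begin{cases} 0 & \text{if } \hat\theta = \theta, \\ 1 & \text{otherwise,} \end{cases}$$

which assigns a constant loss to all incorrect estimates and leaves only an exact estimate unpenalized. The expected value of this loss function is the posterior probability of the event $\hat\theta \neq \theta$, that is, $P(\hat\theta \neq \theta \mid x) = 1 - P(\theta = \hat\theta \mid x)$. It is minimal where $P(\theta = \hat\theta \mid x)$ is maximal, that is, at the modes of the posterior distribution.

For a continuously distributed $\theta$, the event $\hat\theta = \theta$ has probability zero for every $\hat\theta$. In this case one can instead consider, for a given (small) $\varepsilon > 0$, the loss function

$$\ell_\varepsilon(\hat\theta, \theta) = \begin{cases} 0 & \text{if } |\hat\theta - \theta| \le \varepsilon, \\ 1 & \text{otherwise.} \end{cases}$$

In the limit $\varepsilon \to 0$, the posterior mode again results as the Bayesian estimator.

If the prior distribution is uniform, the posterior density is proportional to the likelihood, so the posterior mode coincides with the maximum likelihood estimator, which is thus a special case of a Bayesian estimator.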
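The relation between the posterior mode and the maximum likelihood estimator can be sketched with a grid search over a success probability. Everything below is illustrative: the data (3 successes in 10 trials), the grid resolution and the non-uniform comparison prior are assumptions, and the grid search is only a crude stand-in for a proper optimizer.

```python
def binomial_likelihood(p: float, k: int, n: int) -> float:
    """Likelihood of k successes in n trials, up to a constant factor."""
    return p**k * (1.0 - p) ** (n - k)


def posterior_mode(prior, k: int, n: int, steps: int = 1000) -> float:
    """Grid value in [0, 1] that maximizes prior(p) * likelihood(p)."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda p: prior(p) * binomial_likelihood(p, k, n))


k, n = 3, 10  # hypothetical data

# Uniform prior: the posterior mode equals the maximum likelihood estimate k / n.
flat_mode = posterior_mode(lambda p: 1.0, k, n)

# A non-uniform prior proportional to p * (1 - p) shifts the mode away from k / n.
informative_mode = posterior_mode(lambda p: p * (1.0 - p), k, n)

print(flat_mode, informative_mode)
```

With the uniform prior the grid maximum lies at k/n; with the second prior the posterior is proportional to p^4 (1 - p)^8, whose maximum lies at 1/3.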

Example

An urn contains red and black balls of unknown composition, that is, the probability $p$ of drawing a red ball is unknown. To estimate $p$, $n$ balls are drawn one after the other with replacement; only a single draw produces a red ball, so $x = 1$ is observed. The number of red balls drawn is binomially distributed with parameters $n$ and $p$, so for $x = 1$

$$f(x \mid p) = \binom{n}{x} p^x (1 - p)^{n - x} = n\, p\, (1 - p)^{n - 1}.$$

Since no information is available about the parameter $p$ to be estimated, the uniform distribution is used as the prior distribution, i.e. $f(p) = 1$ for $0 \le p \le 1$. The posterior distribution is therefore

$$f(p \mid x) = \frac{n\, p\, (1 - p)^{n - 1}}{\int_0^1 n\, q\, (1 - q)^{n - 1}\, dq} = n (n + 1)\, p\, (1 - p)^{n - 1}.$$

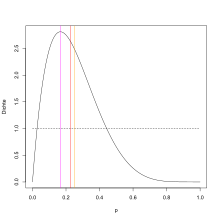

This is the density of a beta distribution with parameters $2$ and $n$. It has the posterior expected value $\frac{2}{n+2}$ and the posterior mode $\frac{1}{n}$. The posterior median has to be determined numerically. In general, $k$ red balls in $n$ draws give $\frac{k+1}{n+2}$ as the posterior expected value and $\frac{k}{n}$, i.e. the classical maximum likelihood estimator, as the posterior mode. For values of $n$ that are not too small, $\frac{k + 2/3}{n + 4/3}$ is a good approximation for the posterior median.
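The three location measures of the urn example can be computed with a few lines of code. The sketch below assumes a uniform prior, so that the posterior is a beta distribution with parameters k + 1 and n - k + 1. The number of draws, n = 4, is an assumed value chosen purely for illustration, and the function names are hypothetical. The median is found by bisection on the cumulative distribution function, which for integer parameters can be written as a binomial tail sum.

```python
from math import comb


def beta_cdf_integer(p: float, a: int, b: int) -> float:
    """CDF of Beta(a, b) for positive integers a and b.

    Uses the identity P(Beta(a, b) <= p) = P(Binomial(a + b - 1, p) >= a).
    """
    m = a + b - 1
    return sum(comb(m, j) * p**j * (1.0 - p) ** (m - j) for j in range(a, m + 1))


def posterior_summaries(k: int, n: int) -> dict:
    """Mean, mode and median of the posterior for k red balls in n draws."""
    a, b = k + 1, n - k + 1  # uniform prior -> Beta(k + 1, n - k + 1)
    lo, hi = 0.0, 1.0
    for _ in range(60):  # bisection on the CDF to locate the median
        mid = (lo + hi) / 2.0
        if beta_cdf_integer(mid, a, b) < 0.5:
            lo = mid
        else:
            hi = mid
    return {
        "mean": (k + 1) / (n + 2),
        "mode": k / n,
        "median": (lo + hi) / 2.0,
        "median_approx": (k + 2 / 3) / (n + 4 / 3),
    }


summary = posterior_summaries(k=1, n=4)  # n = 4 is an assumed value
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

Even for this small assumed n, the closed-form approximation of the median agrees with the numerically determined value to about two decimal places.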

Practical calculation

An obstacle to the application of Bayesian estimators can be their numerical computation. A classic approach is the use of so-called conjugate prior distributions, for which the posterior distribution belongs to a known family of distributions whose location measures can then simply be looked up in a table. For example, if an arbitrary beta distribution is used as the prior in the urn experiment above, the posterior distribution is again a beta distribution.
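For the urn experiment, the conjugate update amounts to adding the observed counts to the parameters of the beta prior. The following sketch shows this under stated assumptions: the class name `BetaDistribution`, the function `update` and the example prior Beta(2, 2) are hypothetical, and the data (1 red ball in 4 draws) are illustrative values.

```python
from typing import NamedTuple


class BetaDistribution(NamedTuple):
    a: float
    b: float

    def mean(self) -> float:
        return self.a / (self.a + self.b)


def update(prior: BetaDistribution, k: int, n: int) -> BetaDistribution:
    """Posterior after observing k red balls in n draws with replacement."""
    # Red draws are added to the first parameter, black draws to the second.
    return BetaDistribution(prior.a + k, prior.b + (n - k))


uniform_prior = BetaDistribution(1, 1)  # Beta(1, 1) is the uniform distribution
posterior = update(uniform_prior, k=1, n=4)  # illustrative data
print(posterior, posterior.mean())

other_posterior = update(BetaDistribution(2, 2), k=1, n=4)  # hypothetical prior
print(other_posterior, other_posterior.mean())
```

No integral has to be evaluated: the posterior expected value follows directly from the updated parameters.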

For general prior distributions, the above formula for the posterior expected value shows that two integrals over the parameter space must be computed. A classic approximation method is the Laplace approximation, in which the integrands are written as exponential functions and the exponents are then replaced by their second-order Taylor approximations around their maxima.
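The idea can be sketched for the urn model with a uniform prior, where the exact answer is known and can serve as a check. Both integrals in the posterior expected value then have the form of an integral of p^a (1 - p)^b over [0, 1]; each is approximated separately and the ratio is taken. The function names and the data (10 red balls in 40 draws) are assumptions for illustration, and the sketch requires 0 < k < n so that both maxima lie inside the interval.

```python
from math import exp, log, pi, sqrt


def laplace_integral(a: float, b: float) -> float:
    """Laplace approximation of the integral of p**a * (1 - p)**b over [0, 1].

    Writes the integrand as exp(h(p)) with h(p) = a*log(p) + b*log(1 - p) and
    replaces h by its second-order Taylor expansion around its maximum.
    Requires a > 0 and b > 0 so that the maximum lies inside (0, 1).
    """
    p_max = a / (a + b)
    h_max = a * log(p_max) + b * log(1.0 - p_max)
    h_second = -a / p_max**2 - b / (1.0 - p_max) ** 2  # curvature at the maximum
    return exp(h_max) * sqrt(2.0 * pi / -h_second)


def laplace_posterior_mean(k: int, n: int) -> float:
    """Ratio of two Laplace-approximated integrals (uniform prior assumed)."""
    numerator = laplace_integral(k + 1, n - k)  # integral of p * likelihood
    denominator = laplace_integral(k, n - k)  # integral of the likelihood
    return numerator / denominator


k, n = 10, 40  # hypothetical data
approx = laplace_posterior_mean(k, n)
exact = (k + 1) / (n + 2)
print(approx, exact)
```

For these values the approximation agrees with the exact posterior expected value to several decimal places; the agreement generally worsens for small samples, where the integrands are less well described by a quadratic exponent.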

With the advent of powerful computers, further numerical methods for computing the integrals involved became applicable (see numerical integration). High-dimensional parameter spaces pose a particular problem, i.e. the case in which a large number of parameters are to be estimated from the data. Here, Monte Carlo methods are often used as an approximation.
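A very simple Monte Carlo scheme for the posterior expected value draws parameter values from the prior and weights them by the likelihood (self-normalized importance sampling). The sketch below applies it to the one-dimensional urn model with a uniform prior, only because the exact answer is available for comparison; the function name, sample size, seed and data are assumptions, and practical high-dimensional problems typically call for more elaborate samplers.

```python
import random


def monte_carlo_posterior_mean(
    k: int, n: int, samples: int = 100_000, seed: int = 0
) -> float:
    """Estimate E(p | x) by weighting uniform prior draws with the likelihood."""
    rng = random.Random(seed)
    weighted_sum = 0.0
    weight_total = 0.0
    for _ in range(samples):
        p = rng.random()  # draw from the uniform prior
        weight = p**k * (1.0 - p) ** (n - k)  # likelihood up to a constant
        weighted_sum += weight * p
        weight_total += weight
    # The ratio approximates the two integrals in the posterior expected value.
    return weighted_sum / weight_total


estimate = monte_carlo_posterior_mean(k=1, n=4)  # illustrative data
exact = (1 + 1) / (4 + 2)
print(estimate, exact)
```

The numerator and denominator of the returned ratio are sample averages that stand in for the two integrals over the parameter space.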

Literature

- Leonhard Held: Methods of Statistical Inference. Likelihood and Bayes. Springer Spektrum, Heidelberg 2008, ISBN 978-3-8274-1939-2.

- Erich Leo Lehmann, George Casella: Theory of Point Estimation. 2nd edition. Springer, New York et al. 1998, ISBN 0-387-98502-6, chapter 4.

References

- Karl-Rudolf Koch: Introduction to Bayesian Statistics. Springer, Berlin/Heidelberg 2000, ISBN 3-540-66670-2, p. 66.

- Leonhard Held: Methods of Statistical Inference. Likelihood and Bayes. Springer Spektrum, Heidelberg 2008, ISBN 978-3-8274-1939-2.

- Held: Methods of Statistical Inference. 2008, pp. 146-148.

- Held: Methods of Statistical Inference. 2008, pp. 188-191.

- Held: Methods of Statistical Inference. 2008, pp. 192-208.