Generative grammar

Generative grammar (from Latin generare 'to generate' and 'grammar') is the generic term for grammar models whose rule systems can generate the sentences of a language, in contrast to traditional grammar teaching, which only describes linguistic phenomena. The main representative is Noam Chomsky, whose generative transformational grammar was debated controversially during the so-called Linguistics Wars and, in response, was modified in several variants. In this context, other, alternative generative grammar concepts emerged.

Initial question: How do people “know” how to speak?

The basic answer of generative grammar to this question is that the human ability to speak, i.e. to produce grammatically correct utterances, rests on cognitive structures that are genetically inherited. With this assumption, generative grammar sets itself apart from behaviorism, which assumes that humans are born without innate abilities, as a tabula rasa, and must therefore learn to speak exclusively by imitating their environment. (In this context, one speaks of the cognitive turn in linguistics.) Although children become acquainted with the “word material” through their environment, the predisposition for knowing how this material can be assembled into grammatically correct sentences, i.e. how “correct” language is produced, is genetically inherited. In the process of language acquisition, these predispositions are shaped into specific competence in a particular language.

Use

The term is often used synonymously with generative transformational grammar, which covers all generative grammars with transformation rules. There is, however, a broader conception of the term “generative grammar” that also includes alternative grammar models such as head-driven phrase structure grammar or lexical-functional grammar. The formal foundations of these approaches were revised in the 1990s, however, so that these models no longer belong to the generative grammars in the narrower sense.

In most cases, a generative grammar is able to use recursive rules to generate an infinite number of sentences from a finite number of lexemes. This property is very desirable for a model of natural languages, since human brains have only a finite capacity, while the number of possible grammatical sentences in each individual language is infinite because of recursion.
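The effect of a single recursive rule can be sketched in a few lines of Python. The toy grammar below is purely hypothetical (its rules, English lexemes and the `depth` limit are assumptions made for illustration, not part of any particular theory): a noun phrase may contain a prepositional phrase, which in turn contains a noun phrase. The `depth` argument only caps the recursion so that the enumeration terminates.

```python
from itertools import product

# Hypothetical toy grammar: finite rules and lexemes, one recursive loop
# (NP -> D N PP, PP -> P NP).
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["D", "N"], ["D", "N", "PP"]],
    "PP": [["P", "NP"]],
    "VP": [["V", "NP"]],
    "D": [["the"]],
    "N": [["dog"], ["bone"], ["garden"]],
    "V": [["ate"]],
    "P": [["in"]],
}


def expand(symbol, depth):
    """Return every word sequence derivable from `symbol`
    using at most `depth` nested PP embeddings."""
    if symbol not in RULES:  # a terminal word
        return [[symbol]]
    results = []
    for rhs in RULES[symbol]:
        embeds = "PP" in rhs
        if embeds and depth == 0:
            continue  # recursion budget exhausted
        next_depth = depth - 1 if embeds else depth
        parts = [expand(s, next_depth) for s in rhs]
        for combo in product(*parts):
            results.append([word for part in combo for word in part])
    return results


if __name__ == "__main__":
    for depth in range(3):
        print(depth, len(expand("S", depth)))
```

With no embedding the grammar yields 9 sentences, with one level 144, and the count keeps growing with every further level, although the rule set and the lexicon never change.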

Delimitations

Generative grammar has to be distinguished from traditional grammar, since the latter is often strongly prescriptive (rather than descriptive), is not mathematically explicit, and mostly deals with a relatively small set of syntactic phenomena specific to a single language. Likewise, generative grammar should be distinguished from other descriptive approaches, such as the various functional grammar theories.

Generative grammar and structuralism

Some authors count generative transformational grammar as part of modern American structuralism, while others emphasize its differences from conventional structuralism. Generative grammar is said to have brought about a paradigm shift away from structuralist linguistics and to have "brought a fundamental reorientation in linguistics".

The following differences between structuralism (then in the narrower sense) and generative transformational grammar are cited:

| Structuralism | Generative transformational grammar |
|---|---|
| descriptive | generative |
| static (finite corpus) | dynamic, language as enérgeia |
| based on the parole of real speakers | based on the langage (= competence) of the ideal speaker |
| empirical | mentalistic |
| orientation towards the natural sciences | orientation towards philosophical rationalism |
| orientation towards positivism | orientation towards mathematical and machine-theoretical models |

Chomsky's syntax-oriented generative grammar

When one speaks of generative grammar, what is usually meant is the one developed by Chomsky, which from the Standard Theory onward also included semantic components (see interpretative semantics) but placed the emphasis on syntactic ones.

Stages of development of Chomsky's generative grammar

Generative grammar went through several stages of development in Chomsky's work:

- 1955–1964: early transformational grammar (Chomsky's Syntactic Structures)

- 1965–1970: Standard Theory (ST)

- 1967–1980: Extended Standard Theory (EST) or Revised Extended Standard Theory (REST) (concept of modularity)

- since 1980: Government and Binding Theory (GB)

- 1990s: Minimalist Program

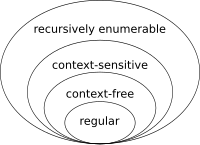

Relation to the Chomsky hierarchy

Generative grammars can be compared and described using the Chomsky hierarchy, which Noam Chomsky devised in the 1950s. It classifies different types of formal grammars by increasing expressive power. The types differ in their systems of symbols (terminal and non-terminal) and production rules, and each must satisfy precisely defined test procedures (e.g. by means of Turing machines). Type 0 (unrestricted formal grammars) includes all formal grammars. The simplest type is the regular grammars (type 3). According to Chomsky, they are not suitable for modelling natural languages, since they cannot represent sentences with hierarchical subordination (hypotaxis), which in his view is typical of the human communication system.

The context-sensitive grammars (type 1) and context-free grammars (type 2), on the other hand, meet these requirements, for example Chomsky's "phrase structure grammar", in which the derivation of a sentence is represented as a tree structure. Linguists working in the field of generative grammar often regard such trees as their main object of study. On this view, sentences are not just strings of words but trees with lower and higher branches connected by nodes.
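A standard textbook stand-in for such nesting is the language of strings a^n b^n, where every opening element must be matched by a closing one, much as every embedded clause must be closed again. The sketch below is an assumption-laden illustration (the function names are invented here): it uses the context-free rule S → a S b | ε both to derive and to recognize such strings. It is a well-known result of formal language theory that no regular (type 3) grammar generates exactly this language.

```python
def nested(n):
    """Derive a^n b^n with the context-free rule S -> a S b | (empty)."""
    return "" if n == 0 else "a" + nested(n - 1) + "b"


def is_nested(s):
    """Recognize a^n b^n by undoing S -> a S b from the outside in."""
    if s == "":
        return True
    return s[0] == "a" and s[-1] == "b" and is_nested(s[1:-1])


if __name__ == "__main__":
    print(nested(3))          # aaabbb
    print(is_nested("aabb"))  # True
    print(is_nested("abab"))  # False
```

The recursion keeps track of arbitrarily deep nesting, which is exactly what a device with a fixed, finite number of states cannot do.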

The tree model works much like this example, where S is a sentence, D is a determiner , N is a noun , V is a verb , NP is a noun phrase, and VP is a verb phrase:

                S
              /   \
            NP     VP
           /  \   /  \
          D    N V    NP
         Der Hund fraß / \
                      D   N
                     den Knochen

- S - sentence

- D - determiner

- N - noun

- V - verb

- NP - noun phrase

- VP - verb phrase

- Aux - auxiliary verb

- A - adjective

- Adv - adverb

- P - preposition

- Pr - pronoun

- C - complementizer

The generated sentence is "Der Hund fraß den Knochen" ("The dog ate the bone"). Such a tree diagram is also known as a phrase structure model. Tree diagrams of this kind can be generated automatically from the underlying rules (see web links).

The tree can also be displayed as text, even if it is more difficult to read:

[S [NP [D Der ] [N Hund ] ] [VP [V fraß ] [NP [D den ] [N Knochen ] ] ] ]
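The correspondence between the tree and its bracketed text form can be made concrete with a small sketch. The nested-tuple encoding and the function names below are assumptions chosen for illustration; the tree itself is the one shown above.

```python
# Tree encoded as nested tuples: (label, child, child, ...); words are strings.
TREE = (
    "S",
    ("NP", ("D", "Der"), ("N", "Hund")),
    ("VP", ("V", "fraß"), ("NP", ("D", "den"), ("N", "Knochen"))),
)


def to_brackets(node):
    """Render a tree in labelled bracket notation."""
    if isinstance(node, str):
        return node
    label, *children = node
    return "[" + label + " " + " ".join(to_brackets(c) for c in children) + " ]"


def words(node):
    """Read the sentence off the leaves, from left to right."""
    if isinstance(node, str):
        return [node]
    return [w for child in node[1:] for w in words(child)]


if __name__ == "__main__":
    print(to_brackets(TREE))
    print(" ".join(words(TREE)))
```

Both views carry the same information: the bracket string records the hierarchy, and the leaves read in order give the sentence itself.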

Chomsky came to the conclusion that phrase structure grammar, too, was insufficient to describe natural languages. To remedy this, he formulated the more complex system of transformational grammar.

Roots and parallels

- Cartesian logic

- Chomsky drew on Cartesian logic (see Cartesian linguistics) in his considerations. The aim of generative transformational grammar "is to map the implicit knowledge of language through a system of explicit rules and thus to create a logically founded theory about the way people think", thereby continuing "the Enlightenment idea of logical forms".

- Proposition and sentence lexeme

- Transformational grammar “shows the purely formal procedures by which a linguistic expression of, for example, a question is converted into that of a command, and presupposes a common basis for both (deep structure)”. The deep structure is said to correspond to Menne's sentence lexeme. The sentence lexeme corresponds to the proposition.

- Cognitive psychology

- "For Chomsky, the analysis of language ultimately serves to investigate the structure and functioning of the human brain. For him, linguistics becomes something like a sub-discipline of cognitive psychology". Critics object that all experiments in the psychology of language are said to show that generative grammar is "a logical, not a psychological model".

Semantically oriented generative grammar by George Lakoff (generative semantics)

Generative semantics is a generative grammar that, in contrast to Chomsky's (generative grammar in the narrower sense), "grants semantics primacy over syntax". It arose from a critical examination of generative transformational grammar. It is a "grammar theory in which, instead of syntax, semantics is the generative component and the basis of sentence formation". The main representative of generative semantics is George Lakoff.

In generative semantics, the deep structure is an abstract semantic level of representation, and the surface structure is a syntactically correct level of representation in ordinary language.

By changing the verb-subject-object relationships, generative semantics reduces the number of projection rules: V is no longer subordinate to VP (see the tree diagram above: fraß den Knochen, 'ate the bone'); instead, following predicate logic (V = predicate, which requires NP (1) or NP (2) and NP (3) as complements/arguments), V is placed directly under the S node. This yields the relations V (ate) → NP (subject: the dog) and V → NP (object: the bone) without a detour, and the model manages with fewer derivation rules. In addition, the system of generative semantics allows individual lexemes to be “broken down into semantic features (decomposition) and transformations carried out before the lexemes are inserted into the tree (= pre-lexical transformations)”. In other words, in selecting the linguistically correct sentences, word meaning is involved at an earlier stage than in interpretative semantics with its separate, consecutive syntactic and semantic checks. This narrows the range of possibilities.
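The structural change described above, moving V from under VP to directly under S, can be pictured with a deliberately simplified sketch. It is not Lakoff's formalism: the tuple encoding, the function names and the predicate-style output are all assumptions made for illustration, and semantic decomposition is left out entirely.

```python
# Hypothetical encoding: (label, child, ...) tuples; words are plain strings.
PHRASE_STRUCTURE = (
    "S",
    ("NP", ("D", "Der"), ("N", "Hund")),
    ("VP", ("V", "fraß"), ("NP", ("D", "den"), ("N", "Knochen"))),
)


def raise_verb(sentence):
    """Rebuild S -> NP VP[V NP] as the flat S -> V NP NP."""
    label, subject, (_vp_label, verb, obj) = sentence
    return (label, verb, subject, obj)


def words(node):
    """Collect the words under a node, from left to right."""
    if isinstance(node, str):
        return [node]
    return [w for child in node[1:] for w in words(child)]


def predicate_form(flat):
    """Print the flat structure as predicate(argument, argument)."""
    _label, verb, *args = flat
    rendered = ", ".join(" ".join(words(a)) for a in args)
    return words(verb)[0] + "(" + rendered + ")"


if __name__ == "__main__":
    flat = raise_verb(PHRASE_STRUCTURE)
    print(predicate_form(flat))  # fraß(Der Hund, den Knochen)
```

In the flat structure, subject and object are both direct arguments of the verb, which mirrors the predicate-logic view on which the paragraph above relies.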

Critics of generative semantics point out that the deep structure is "highly abstract" and that the transformational component is "extremely complex". Moreover, the principle that transformations preserve meaning cannot be maintained consistently; in other words, the syntactic structural relationships also help determine the semantic interpretation.

Effects

Fred Lerdahl and Ray Jackendoff took up Chomsky's basic generative idea in order to describe a possible musical grammar. The French composer Philippe Manoury transferred the system of generative grammar to the field of music. Generative grammar is also used in computer-aided, algorithmic composition, for example to organize musical syntax in Lisp-based programming environments such as OpenMusic or PatchWork. In general, musical formal language can be described as a syntactically organized grammar only within certain narrowly defined styles. A general syntax, for example of Western tonal music, has not yet been demonstrated.

Web links

- Brief introduction to generative grammar

- A. Max: The Syntax Student's Companion. Java applet for generating tree diagrams
