Hierarchical temporal memory

Hierarchical temporal memory (HTM) is a machine learning model developed by Jeff Hawkins and Dileep George of Numenta, Inc. The model reproduces some properties of the neocortex.

Structure and function

HTMs are organized as a hierarchically structured network of nodes. As in a Bayesian network, each node implements a learning and storage function. The structure is designed to build a hierarchical representation of time-varying input data. This is only possible, however, if the data can be represented hierarchically both in (problem) space and in time.

An HTM performs the following functions, the last two of which are optional depending on the implementation:

- Recognition and representation of elements and relationships.

- Inference of new elements and relationships based on the known elements and relationships.

- Making predictions. If these predictions are incorrect, the internal model is adjusted accordingly.

- Using predictions to take actions and observing their effects (control and regulation).

Sparse Distributed Representation

Data processed by an HTM is encoded as sparse distributed representations (SDRs). These are sparse bit vectors in which each bit carries semantic meaning. Only a small number of bits are active for any given input.
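As a minimal sketch, assuming an SDR is stored as the set of its active bit indices (a common space-saving choice, not something this article prescribes), the following Python shows the representation and its sparsity. The function names and the sizes (40 active bits out of 2048) are illustrative assumptions.

```python
import random

def random_sdr(n: int, w: int, rng: random.Random) -> frozenset[int]:
    """Return an SDR of n bits with exactly w active, stored as the set of active indices."""
    return frozenset(rng.sample(range(n), w))

def to_bits(sdr: frozenset[int], n: int) -> list[int]:
    """Expand the set of active indices into an explicit 0/1 bit vector of length n."""
    return [1 if i in sdr else 0 for i in range(n)]

def sparsity(sdr: frozenset[int], n: int) -> float:
    """Fraction of bits that are active."""
    return len(sdr) / n

rng = random.Random(42)
x = random_sdr(2048, 40, rng)      # illustrative sizes: 40 of 2048 bits active
print(len(x), sparsity(x, 2048))   # 40 0.01953125
print(sum(to_bits(x, 2048)))       # 40
```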

Active bits shared by different inputs indicate a shared meaning. If, for example, a false positive occurs when two SDRs are compared by subsampling, the two SDRs are not identical but do share a similar meaning.
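The overlap of two SDRs is the number of active bits they share. How likely a false positive is when an SDR is compared against only a subsample of a stored pattern can be estimated combinatorially. The sketch below follows the counting argument from Ahmad and Hawkins (arXiv:1503.07469) as we understand it; the function names and example parameters are our own.

```python
from math import comb

def overlap(a: frozenset[int], b: frozenset[int]) -> int:
    """Number of active bits two SDRs share; a larger overlap suggests a more similar meaning."""
    return len(a & b)

def false_match_probability(n: int, w: int, w_x: int, theta: int) -> float:
    """Probability that a random SDR with w of n bits active shares at least theta
    active bits with a fixed pattern of w_x active bits (e.g. a stored subsample)."""
    # SDRs with exactly b shared bits: choose b bits inside the pattern
    # and the remaining w - b active bits outside it.
    hits = sum(comb(w_x, b) * comb(n - w_x, w - b) for b in range(theta, min(w_x, w) + 1))
    return hits / comb(n, w)

# Compare against a subsample of only 10 stored bits and require all 10 to match.
print(false_match_probability(n=2048, w=40, w_x=10, theta=10))
```

Raising the threshold `theta` or the subsample size makes false matches rarer; when a false match does occur, the two SDRs still share at least `theta` active bits.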

Different encoders are used depending on the data type. For example, there are encoders for various number formats, time information, geographic information, and the semantic meaning of words in natural language.
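A simple encoder for numbers can map a value to a contiguous block of active bits whose position moves with the value, so that nearby values share bits. The sketch below is a hypothetical, simplified scalar encoder; the function name, the clipping behavior and the defaults `n=100`, `w=11` are assumptions for illustration.

```python
def encode_scalar(value: float, min_val: float, max_val: float,
                  n: int = 100, w: int = 11) -> frozenset[int]:
    """Hypothetical scalar encoder: w contiguous active bits out of n.

    The value is clipped to [min_val, max_val]; the block start moves
    linearly with the value, so nearby values share active bits.
    """
    v = min(max(value, min_val), max_val)
    start = round((v - min_val) / (max_val - min_val) * (n - w))
    return frozenset(range(start, start + w))

a = encode_scalar(20.0, 0.0, 100.0)
b = encode_scalar(22.0, 0.0, 100.0)
c = encode_scalar(80.0, 0.0, 100.0)
print(len(a & b), len(a & c))  # 9 0
```

Here 20 and 22 share 9 of their 11 active bits, while 20 and 80 share none.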

Because an SDR encodes semantic meaning in this way, set operations can be applied to it, similar to the vector space model.
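Set operations on SDRs reduce to operations on their active bits: the intersection exposes shared meaning, and a union can hold several patterns at once, with membership tested by overlap. The toy SDRs and function names below are hand-picked and hypothetical.

```python
def union(*sdrs: frozenset[int]) -> frozenset[int]:
    """Union of several SDRs, e.g. to store a set of patterns in one vector."""
    return frozenset().union(*sdrs)

def matches(candidate: frozenset[int], stored: frozenset[int], theta: int) -> bool:
    """Treat the candidate as contained in the stored SDR if at least theta of its bits are present."""
    return len(candidate & stored) >= theta

# Hand-picked toy SDRs (hypothetical, not produced by a real encoder).
cat = frozenset({1, 5, 9, 14})
dog = frozenset({1, 5, 20, 33})
car = frozenset({40, 41, 42, 43})

print(sorted(cat & dog))        # [1, 5]: bits shared by both animals
stored = union(cat, dog)
print(matches(cat, stored, 4))  # True
print(matches(car, stored, 2))  # False
```

As more patterns are added, a union becomes denser and false matches become more likely, so only a limited number of SDRs can be combined this way.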


Dendrite and synapse model

The connections between neurons (dendrites) are represented by a matrix. If the connection strength ("permanence") exceeds a certain threshold, a synapse is logically formed. Synapses are modeled as an identity mapping, i.e. they forward the (binary) input value unchanged (see also: thresholding).
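The permanence matrix and thresholding can be sketched as follows, assuming one row per dendrite and one column per input neuron. The threshold value 0.5 and all names are hypothetical; a synapse counts as formed only when its permanence strictly exceeds the threshold, matching the wording above.

```python
CONNECTED_PERMANENCE = 0.5  # hypothetical threshold; a synapse exists only above it

def connected_synapses(permanences: list[float],
                       threshold: float = CONNECTED_PERMANENCE) -> list[int]:
    """Indices of inputs whose permanence exceeds the threshold (logically formed synapses)."""
    return [i for i, p in enumerate(permanences) if p > threshold]

def dendrite_activity(permanences: list[float], inputs: list[int],
                      threshold: float = CONNECTED_PERMANENCE) -> int:
    """Sum of the binary inputs forwarded unchanged by formed synapses (identity mapping)."""
    return sum(inputs[i] for i in connected_synapses(permanences, threshold))

# Permanence matrix: one row per dendrite, one column per input neuron.
permanence_matrix = [
    [0.6, 0.1, 0.7, 0.4],
    [0.2, 0.9, 0.55, 0.51],
]
inputs = [1, 1, 0, 1]
print([connected_synapses(row) for row in permanence_matrix])         # [[0, 2], [1, 2, 3]]
print([dendrite_activity(row, inputs) for row in permanence_matrix])  # [1, 2]
```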

A distinction is also made between basal and apical dendrites.

- Basal dendrites

  - Basal dendrites make up about 10% of the dendrites. They are connected locally, within a layer of neurons, to other (spatially nearby) neurons and represent the prediction of a neuron's future activation in a particular context. Each basal dendrite stands for a different context.

  - If the activation of a neuron is predicted by a basal dendrite, the activation of the corresponding neuron is suppressed. Conversely, an unexpected activation of a neuron is not suppressed and triggers a learning process.

- Apical dendrites

  - Apical dendrites make up the remaining 90% of the dendrites. They form the connections between the layers of neurons. Depending on the layer, they are connected within a cortical column, for example as a feedforward or feedback network.

Spatial pooling and temporal pooling

Spatial pooling is an algorithm that controls learning among spatially adjacent neurons. When a neuron is activated, the activation of spatially neighboring neurons is suppressed via the basal dendrites. This ensures a sparse activation pattern. The neuron that remains active is the best semantic representation of the given input.

If the activation of neighboring neurons predicted by a neuron's activation does occur, the permanence of the basal connection that suppresses the corresponding neurons is increased. If a predicted activation does not occur, the permanence of that basal connection is weakened.
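A minimal spatial pooling step might look like the sketch below. Two simplifications are assumptions: inhibition is implemented as global k-winners-take-all instead of inhibition among neighboring neurons, and learning uses the Hebbian permanence rule from Numenta's spatial pooler description (strengthen synapses from winning columns to active inputs, weaken the others) rather than the prediction-based rule described above. Names and parameter values are illustrative.

```python
import random

def spatial_pool(perms, input_bits, k, threshold=0.5, inc=0.05, dec=0.02, learn=True):
    """One step of a simplified spatial pooler (hypothetical parameters).

    perms: one list of permanences per column, one value per input bit.
    Returns the set of winning (active) column indices.
    """
    # Overlap: active input bits reached through formed synapses (permanence > threshold).
    overlaps = [sum(b for p, b in zip(row, input_bits) if p > threshold) for row in perms]
    # Inhibition, simplified to global k-winners-take-all; ties go to the lower index.
    ranked = sorted(range(len(perms)), key=lambda c: (-overlaps[c], c))
    winners = {c for c in ranked[:k] if overlaps[c] > 0}
    if learn:
        for c in winners:
            row = perms[c]
            for i, b in enumerate(input_bits):
                # Hebbian update clipped to [0, 1]: strengthen active inputs, weaken the rest.
                row[i] = min(1.0, row[i] + inc) if b else max(0.0, row[i] - dec)
    return winners

rng = random.Random(0)
perms = [[rng.uniform(0.3, 0.7) for _ in range(32)] for _ in range(16)]
pattern = [1 if i < 8 else 0 for i in range(32)]
first = spatial_pool(perms, pattern, k=2)
for _ in range(20):
    last = spatial_pool(perms, pattern, k=2)
print(first, last)  # the same columns keep winning for a repeated input
```

Because only the winners learn, and learning can only raise their overlap with the input they won on, a repeated input keeps activating the same columns.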

Temporal pooling works in a functionally equivalent way to spatial pooling, but with a time delay. Depending on the implementation, temporal pooling is either implemented as a separate function or combined with spatial pooling. Implementations also differ in how many past time steps they take into account. Because neuron activation is predicted with a delay, an HTM can learn and predict time-dependent patterns.
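The delayed comparison can be illustrated with a deliberately simplified, hypothetical first-order sequence memory: each bit remembers the SDRs that were active one step before it fired, and only unexpected activations trigger learning, as described for basal dendrites. Numenta's temporal memory additionally uses several cells per column to represent longer contexts; that is omitted here, and all names are our own.

```python
from collections import defaultdict

class TransitionMemory:
    """Hypothetical first-order sequence memory over SDRs (not Numenta's temporal memory).

    For each bit it keeps "segments": the SDRs that were active one time step
    before the bit became active. A bit is predicted when the current input
    overlaps one of its segments by at least `theta` bits.
    """

    def __init__(self, theta: int):
        self.theta = theta
        self.segments = defaultdict(list)  # bit -> list of previous-step SDRs
        self.prev = frozenset()

    def predict(self, sdr: frozenset) -> frozenset:
        """Bits expected to become active at the next time step."""
        return frozenset(
            bit for bit, segs in self.segments.items()
            if any(len(sdr & seg) >= self.theta for seg in segs)
        )

    def step(self, sdr: frozenset, learn: bool = True) -> frozenset:
        """Feed the current time step's input; return the prediction for the next step."""
        if learn and self.prev:
            expected = self.predict(self.prev)
            # Only unexpected activations trigger learning: store the previous input as context.
            for bit in sdr - expected:
                self.segments[bit].append(self.prev)
        self.prev = sdr
        return self.predict(sdr)

    def reset(self) -> None:
        """Forget the previous time step, e.g. between sequences."""
        self.prev = frozenset()

A, B, C = frozenset({0, 1, 2}), frozenset({10, 11, 12}), frozenset({20, 21, 22})
tm = TransitionMemory(theta=2)
for _ in range(2):
    for s in (A, B, C):
        tm.step(s)
tm.reset()
print(sorted(tm.step(A, learn=False)))  # [10, 11, 12]: B is expected after A
```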

Classifier

At the end of a pipeline of one or more HTM layers, a classifier assigns a value to the SDR-encoded output of the HTM.
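The article does not specify how the classifier works. As one hypothetical possibility, each active output bit can vote for the values it has been seen with, and the value with the most votes wins. This voting scheme and its names are illustrative only, not NuPIC's classifier.

```python
from collections import Counter, defaultdict
from typing import Optional

class VotingClassifier:
    """Hypothetical SDR classifier: every active bit votes for the values it was seen with."""

    def __init__(self) -> None:
        self.votes = defaultdict(Counter)  # bit -> counts per associated value

    def learn(self, sdr: frozenset, value: str) -> None:
        for bit in sdr:
            self.votes[bit][value] += 1

    def infer(self, sdr: frozenset) -> Optional[str]:
        total = Counter()
        for bit in sdr:
            # .get avoids inserting empty entries for unseen bits.
            total.update(self.votes.get(bit, Counter()))
        return total.most_common(1)[0][0] if total else None

clf = VotingClassifier()
clf.learn(frozenset({1, 2, 3}), "low")
clf.learn(frozenset({7, 8, 9}), "high")
print(clf.infer(frozenset({1, 2, 8})))  # low
```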

Layer model

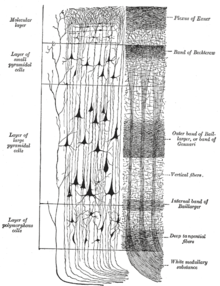

As in the neocortex, a cortical column is built up of several layers, each layer representing an HTM. In principle, a lower layer forwards its data to a higher layer.

Cortical columns are in turn connected in series. Lower columns pass their data on to hierarchically higher columns, while higher columns return predictions about neuron activation to lower columns.

| Layer | Function | Connectivity |
|---|---|---|
| 2/3 | Inference (sensory) | Feedforward |
| 4 | Inference (sensorimotor) | |
| 5 | Motor behavior | Feedback |
| 6 | Attention | |

Layer 6 receives sensor data combined with a copy of the associated motor command data. The data come either directly from the sensors or from hierarchically lower columns. Layer 6 predictions are forwarded to the columns below.

Layer 5 controls motor behavior. In addition, motor commands are passed on to hierarchically higher columns via the thalamus.

Layer 2/3 is the highest logical layer. It forwards its result to hierarchically higher columns.

The exact inputs and outputs of the individual layers, and their precise role, are only partially understood. Practical HTM implementations are therefore mostly limited to layer 2/3, whose function is best understood.

Comparison with deep learning

| Property | HTM | Deep learning |
|---|---|---|
| Data representation | Binary; sparse representation | Floating point; dense representation |
| Neuron model | Simplified model | Greatly simplified model |
| Complexity and computational cost | High. No implementation for specialized hardware so far. | Medium to high. Various hardware acceleration options available. |
| Implementation | Only prototype implementations so far. | Many fairly mature frameworks available. |
| Adoption | Hardly used so far. | Widely used by large companies and research institutions. |
| Learning | Online learning; can learn continuously from a data stream. | Batch learning; vulnerable to catastrophic interference. |
| Convergence rate | Slow; many iterations are needed to learn a relationship. | Very slow. |
| Robustness | SDRs make it very robust against adversarial machine learning methods. | Vulnerable to adversarial machine learning methods; must be trained specifically to defend against particular attack scenarios. |

Criticism

To AI researchers, HTMs are nothing fundamentally new but a combination of existing techniques, and Jeff Hawkins does not adequately acknowledge the origins of his ideas. In addition, Hawkins has bypassed the peer review customary in science for his publications, and with it a thorough examination by other scientists. It should be noted, however, that Hawkins comes from industry rather than academia.

Literature

- Jeff Hawkins, Sandra Blakeslee: On Intelligence. St. Martin's Griffin, 2004, ISBN 0-8050-7456-2, 272 pp. (English).

Web links

- Numenta. Accessed July 16, 2014 (English, Numenta homepage).

- Evan Ratliff: The Thinking Machine. Wired, March 2007, accessed July 16, 2014.

- Hierarchical Temporal Memory including HTM Cortical Learning Algorithms. (PDF) Numenta, September 12, 2011, accessed July 16, 2014.

- Ryan William Price: Hierarchical Temporal Memory Cortical Learning Algorithm for Pattern Recognition on Multi-core Architectures. (PDF) Portland State University, 2011, accessed July 16, 2014.

- HTM School. Numenta, 2019, accessed August 4, 2019.

Open source implementations

- Numenta Platform for Intelligent Computing (NuPIC). Numenta, accessed July 16, 2014 (English, C++/Python).

- Neocortex - Memory Prediction Framework. In: SourceForge, accessed July 16, 2014 (English, C++).

- OpenHTM. In: SourceForge, accessed July 16, 2014 (English, C#).

- Michael Ferrier: HTMCLA. Accessed July 16, 2014 (English, C++/Qt).

- Jason Carver: pyHTM. Accessed July 16, 2014 (English, Python).

- htm. In: Google Code, accessed July 16, 2014 (English, Java).

- adaptive-memory-prediction-framework. In: Google Code, accessed July 16, 2014 (English, Java).

References

- Subutai Ahmad, Jeff Hawkins: Properties of Sparse Distributed Representations and their Application to Hierarchical Temporal Memory. March 24, 2015, arXiv:1503.07469 (English).

- Jeff Hawkins: Sensory-motor Integration in HTM Theory, by Jeff Hawkins. In: YouTube. Numenta, March 20, 2014, accessed January 12, 2016.

- Jeff Hawkins: What are the Hard Unsolved Problems in HTM. In: YouTube. Numenta, October 20, 2015, accessed January 12, 2016.