Fringe projection

Fringe projection, also known as structured light scanning and occasionally as Streifenlichttopometrie (stripe light topometry), comprises optical measuring methods in which image sequences are used for the three-dimensional acquisition of surfaces. Alongside laser scanning, it is a 3D scanning process that allows the surface shape of objects to be digitized without contact and represented in three dimensions. In principle, such methods project structured light (e.g. stripes) onto the object to be measured with a projector and record it with at least one camera. If the relative position of the projector and the camera is known, the points imaged in the camera along a stripe can be intersected with the known orientation of that stripe from the projector, and their three-dimensional position can be calculated (see Fig. 1).

Overview of the fringe projection methods

The fringe projection methods differ in that they work with differently projected or coded light and in the way in which the different projection patterns are identified in the images.

Measuring principle

The measuring principle is based on human spatial vision, in which a three-dimensional object is viewed by two eyes set some distance apart (see stereoscopic vision). The projector replaces one of the two eyes by projecting a beam of light onto the target, illuminating a point on the surface.

Structure of a stripe light scanner

In its technical implementation, a stripe light scanner working on the principle of fringe projection consists of at least one pattern projector, which is similar in principle to a slide projector, and at least one digital video camera mounted on a tripod. In commercial systems, setups with one projector and one or two cameras have become established.

Measurement sequence

The projector illuminates the measurement object sequentially with patterns of parallel light and dark stripes of different widths (see Fig. 3). The camera(s) record the projected stripe pattern at a known viewing angle relative to the projection. For each projection pattern, one image is recorded with each camera. This yields a temporal sequence of brightness values for each pixel of every camera.

Calculation of the surface coordinates

Before the actual calculation, the correct stripe number must be identified in the camera image so that it can be assigned to an image position.

The projector and camera form the base of a triangle, and the projected light ray from the projector and the back-projected ray of the camera pixel form its sides (see Fig. 1). If the base length and the angles between the light rays and the base are known, the location of the intersection can be determined with a triangle calculation; this is known as the triangulation method. The exact calculation of the rays is carried out using the bundle adjustment method known from photogrammetry.
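The triangle calculation described above can be sketched in two dimensions with the law of sines. This is only an illustrative sketch: the function name `triangulate`, the choice of placing the projector at the origin and the camera on the x-axis, and the use of radians are assumptions made for the example, and a real scanner works with calibrated 3D rays rather than two planar angles.

```python
import math

def triangulate(base_length, angle_projector, angle_camera):
    """Locate the surface point from the base and the two base angles.

    Assumed layout: projector at (0, 0), camera at (base_length, 0).
    Both angles are in radians, measured between the base and the ray.
    Returns (x, z): position along the base and distance from the base.
    """
    # The three angles of a triangle sum to pi.
    angle_at_object = math.pi - angle_projector - angle_camera
    if angle_at_object <= 0:
        raise ValueError("rays do not intersect in front of the base")
    # Law of sines: the projector ray lies opposite the camera angle.
    projector_ray = base_length * math.sin(angle_camera) / math.sin(angle_at_object)
    x = projector_ray * math.cos(angle_projector)
    z = projector_ray * math.sin(angle_projector)
    return x, z
```

For a base of length 1 and two base angles of 45 degrees, the point lies midway along the base at a distance of 0.5 from it.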

All of the following procedures are based on this principle.

Light section method

In the light section method, a planar sheet of light is projected onto the object to be measured (see Fig. 2). This sheet of light creates a bright line on the object. Seen from the direction of the projector, the line is exactly straight. Seen from the side by the video camera, it appears deformed by the object geometry because of the perspective distortion. The deviation from straightness in the camera image is a measure of the object height.

The method is often extended by projecting many parallel lines, i.e. a line grid, onto the measurement object at the same time. The correct stripe number is then found again by counting and identifying the corresponding grid line in the image.

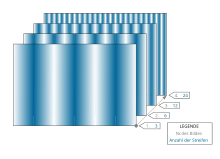

Discontinuities in the object surface make an unambiguous assignment difficult. A hierarchical approach is used to avoid possible misassignments (see Fig. 3): several images are taken, each with a different number of stripes in the pattern. The sequence starts with a small number of "coarse" stripes, and the number of stripes is increased with each image, so that the stripes become finer and finer.

Coded light approach

Resolving the ambiguities is particularly difficult on discontinuous surfaces. The coded light approach resolves these ambiguities and thus yields an absolute measuring method. Several different coded light patterns are used for this purpose.

One possibility is very similar to the coarse-to-fine strategy mentioned earlier. The difference is that instead of a continuous sinusoidal light-dark sequence, a binary-coded sequence with hard edges is used. These light code patterns are projected one after the other and recorded by a synchronously triggered camera. As a result, each position in the projected light pattern carries a unique binary code sequence, which can then be assigned to a corresponding image position in the images. In other words: a single pixel in the camera "sees" a unique light-dark sequence which, using a table, can be unambiguously assigned to the projection line that illuminated the surface element.
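The binary coding described above can be illustrated with a short sketch. It assumes plain binary code (not Gray code), ideal brightness values between 0 and 1, and a fixed threshold; the function names `binary_patterns` and `decode_pixel` are hypothetical, and the decoding is done arithmetically here instead of with a lookup table.

```python
def binary_patterns(num_stripes):
    """Return one pattern per bit, coarsest first.

    patterns[k][s] is 1 if projector stripe s is lit in image k, else 0.
    """
    num_bits = max(1, (num_stripes - 1).bit_length())
    return [
        [(s >> (num_bits - 1 - k)) & 1 for s in range(num_stripes)]
        for k in range(num_bits)
    ]

def decode_pixel(intensities, threshold=0.5):
    """Turn the light-dark sequence seen by one pixel into a stripe number."""
    stripe = 0
    for value in intensities:  # same order as the projected patterns
        stripe = (stripe << 1) | (1 if value > threshold else 0)
    return stripe
```

With eight stripes, three patterns are projected, and a pixel that sees the sequence bright, dark, bright is assigned to stripe 5.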

Other light codes include, for example, random (stochastic) patterns (see Fig. 4). The projection pattern is assigned to the correct image position by selecting a small section of the projection pattern and comparing it with the image (image matching). In computer vision and photogrammetry this is an important task, known there as the correspondence problem, and it is the subject of intensive research. Since the relative position of the projector and the camera is known, the epipolar geometry can be used to limit the search area. This avoids incorrect assignments as far as possible and significantly speeds up the assignment.
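The restricted search can be sketched as a one-dimensional comparison. The example assumes that the images have been rectified so that the epipolar line coincides with an image row, and it uses the sum of squared differences as the similarity measure; both are simplifying assumptions for illustration, and `match_along_row` is a hypothetical name.

```python
def match_along_row(template, row):
    """Return the offset in `row` where `template` fits best.

    `template` is a small section of the projected pattern, `row` holds the
    brightness values along the (assumed) epipolar line in the camera image.
    The best fit is the offset with the smallest sum of squared differences.
    """
    best_offset, best_cost = None, float("inf")
    for offset in range(len(row) - len(template) + 1):
        cost = sum((row[offset + i] - t) ** 2 for i, t in enumerate(template))
        if cost < best_cost:
            best_offset, best_cost = offset, cost
    return best_offset
```

Because only one row is searched instead of the whole image, the number of candidate positions drops from the image area to the image width.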

Phase shift method

A higher level of accuracy can be achieved with the phase shift method (also called dynamic fringe projection). The projected grating is interpreted as a sinusoidal function. With small changes in height, a light/dark edge in the camera image shifts by only a fraction of a grating period (see Fig. 2). As described for the light section method, a line projected onto the object is deformed by the object geometry, and the deviation of this line in the camera image is a measure of the object height.

If the projection grating has a sinusoidal brightness modulation, a pixel in the camera registers a sinusoidal dependence on the object height. If the projection grating is shifted in the projector by a quarter period, the same pixel registers a cosine-shaped dependence on the object height. The quotient of the two signals therefore corresponds to the tangent of the displacement caused by the change in height. The arctangent can be determined from it efficiently with a lookup table operation. As angle or phase information, it represents the shift in fractions of the grating period.
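A minimal sketch of the phase calculation follows. Instead of the two signals described above, it uses the common four-step variant with shifts of a quarter period each, because the differences of opposite samples cancel the unknown background brightness; this variant, the intensity model in the docstring, and the function names are assumptions made for the example. `math.atan2` replaces the lookup table.

```python
import math

def simulate_samples(phase, offset=0.5, amplitude=0.3):
    """Brightness of one pixel for four grating shifts of a quarter period.

    Assumed model: I_k = offset + amplitude * cos(phase + k * pi / 2).
    """
    return [offset + amplitude * math.cos(phase + k * math.pi / 2) for k in range(4)]

def wrapped_phase(i0, i1, i2, i3):
    """Recover the phase in (-pi, pi] from the four samples.

    i3 - i1 is proportional to sin(phase), i0 - i2 to cos(phase),
    so their quotient is the tangent of the phase.
    """
    return math.atan2(i3 - i1, i0 - i2)
```

The result is only known within one grating period, which is why the method is combined with an absolute code or a heterodyne scheme.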

The phase shift method can either be used as a supplement to a Gray code or as an absolute measuring heterodyne method.

Practical aspects

A fringe projection scanner must be calibrated before use (see Calibration). Commercially available devices are usually already calibrated. The recorded surface information is documented in the form of point clouds or freeform surfaces.

Calibration

Precise calibration of the imaging properties is important for the calculation of the coordinates and for the guaranteed accuracy of the results. All imaging properties of projectors and cameras are described with the help of a mathematical model. The basis is a simple pinhole camera, in which all image rays from the object point in three-dimensional space pass through a common point, the projection center, and are mapped to the associated image point on the sensor or film.

In addition, real lens systems deviate from this ideal model and distort the image; these deviations have to be compensated by means of a distortion correction.
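The pinhole model and a distortion term can be sketched as follows. The sketch assumes a point given in camera coordinates, a focal length expressed in pixels, and a single radial distortion coefficient `k1`; real calibration models typically use more parameters, and all names here are hypothetical.

```python
def project(point, focal_length, principal_point, k1=0.0):
    """Map a 3D point in camera coordinates to pixel coordinates.

    point           -- (x, y, z) with z > 0 in front of the projection center
    focal_length    -- distance from projection center to sensor, in pixels
    principal_point -- (cx, cy), where the optical axis meets the sensor
    k1              -- assumed single radial distortion coefficient
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    # Ideal pinhole: every ray passes through the projection center.
    xn, yn = x / z, y / z
    # Radial distortion grows with the squared distance from the axis.
    factor = 1.0 + k1 * (xn * xn + yn * yn)
    u = focal_length * factor * xn + principal_point[0]
    v = focal_length * factor * yn + principal_point[1]
    return u, v
```

With `k1 = 0` the function reduces to the ideal pinhole camera; a negative `k1` pulls image points toward the principal point, as in barrel distortion.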

The parameters of the pinhole camera mentioned above, as well as its position and orientation in space, are determined from a series of calibration images with photogrammetric methods, in particular with a bundle adjustment.

A single measurement with the fringe projection scanner is limited in its completeness by the visibility of the object surface. In order for a point on the surface to be recorded, it must be illuminated by the projector and observed by the cameras. Points that are, for example, on the back of the object must be recorded in a separate measurement.

For a complex object, a very large number of individual measurements (several hundred) may be necessary for complete acquisition. The following methods are used to bring the results of all measurements together in a common coordinate system: attaching point markers to the object as control points, correlating object features, or precisely measuring the sensor position with an additional measuring system. Experts refer to this process as navigation.

Accuracy

The achievable measurement accuracy is proportional to the cube root of the measurement volume. Commercial systems used in reverse engineering achieve accuracies of 0.003 mm to 0.3 mm, depending on the technical complexity and the measurement volume. Systems with microscopic measuring fields below 1 cm² can be used to assess micro-geometries, such as radii on cutting edges, or to evaluate microstructures, and achieve measurement accuracies below 1 µm.
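Taken literally, the cube-root relationship allows a rough scaling estimate from one measurement volume to another. The sketch below is only an illustration of that proportionality; the function name and the reference values are hypothetical and do not describe any particular system.

```python
def scaled_accuracy(reference_accuracy, reference_volume, volume):
    """Scale an accuracy figure with the cube root of the measurement volume.

    reference_accuracy -- accuracy known for reference_volume (e.g. in mm)
    reference_volume   -- volume for which that accuracy holds
    volume             -- volume for which an estimate is wanted (same unit)
    """
    return reference_accuracy * (volume / reference_volume) ** (1.0 / 3.0)
```

Under this assumption, enlarging the measurement volume by a factor of eight doubles the accuracy figure, for example from 0.01 mm to 0.02 mm.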

Applications

Methods that work with projected light are mostly used when particularly high accuracy is required or when the surface has no texture. In addition to applications in medicine, dental technology and pathology, fringe projection scanners are mainly used in industry, in the design process for new products (reverse engineering) and for checking the shape of workpieces and tools (target/actual comparison). With several thousand systems installed in Germany (estimate as of April 2005), they are very widespread in the automotive and aircraft industries and are a preferred alternative to mechanical coordinate measuring machines in many applications.

Crime scene survey

Using stripe light scanners with area cameras, forensic technicians scan a crime scene in three dimensions and create a 3D image of it, which can be analyzed precisely and searched for traces without the police crime scene unit having to enter the premises and possibly contaminate or destroy evidence. One specific application is the three-dimensional scanning of ground impressions and footprints, which has the advantage over conventional plaster casting that the evidence is secured without contact and the impression can be reproduced as often as required.

Reverse engineering

The illustrated example explains the reverse engineering process using a historic racing car, the Silver Arrow W196, built in 1954. A point cloud (2) with 98 million measuring points was generated from the original (1) in 14 hours of measuring time. These points were reduced to axis-parallel sections at a spacing of two centimeters (3), on which a CAD model (4) was constructed in around 80 working hours. On the basis of the CAD model, a replica (5) on a scale of 1:1 was finally made, which can be viewed today in the Mercedes-Benz Museum in Stuttgart-Untertürkheim.

Wound documentation

Wound documentation by means of stripe light topometry is superior to the other imaging methods used in forensic medicine practice, as it enables a spatial, metrically exact representation, in realistic colors, of the individual relevant points of an injury. The result, referred to as "digital human wounds", allows the external post-mortem examination to be made objective.

Application examples in archeology, monument preservation and industry

The following list gives an overview of use cases for this procedure:

- Contactless and object-friendly 3D security documentation of art and cultural assets

- Quality control of machine components

- Virtual reconstruction of destroyed objects

- Making difficult-to-read inscriptions visible

- Animation of facts

- Reproduction or creation of physical copies using rapid prototyping processes

- Production of museum replicas
