Photogrammetry

Photogrammetry (also called image measurement) is a group of non-contact measurement methods and evaluation processes that indirectly determine the position and shape of an object from photographs of it by means of image measurement, and describe its content through image interpretation. In addition to photographs, other sensors are also used, such as synthetic aperture radar (SAR) and laser scanners. In contrast to related fields such as remote sensing, cartography or computer vision, which also work with contactless sensors, photogrammetry focuses on the exact three-dimensional geometric reconstruction of the recorded object. As a rule, the images are recorded with special metric cameras. The result is usually presented as a digital model (for example a digital terrain model) and in the form of images, plans and maps.

History

The theory of photogrammetry was developed in France and Prussia in the middle of the 19th century, in parallel with the emerging field of photography. The French officer Aimé Laussedat published his text Métrophotographie in 1851, and the German architect Albrecht Meydenbauer published his photogrammetric method for building measurements in 1858. Meydenbauer gave photogrammetry its name and in 1885 founded the world's first authority working with photogrammetric methods, the Royal Prussian Messbild-Anstalt. Edouard Gaston Deville and Paul Gast were also among the pioneers of the method. In 1907 Eduard von Orel invented the stereoautograph, which was manufactured commercially by the Carl Zeiss company from 1909. With this device, contour lines could for the first time be drawn automatically by optically scanning the photographs (stereo image pairs).

In the 1930s, Paul Gast devised the bundle block adjustment on the basis of the adjustment calculus invented by Carl Friedrich Gauss and published it in 1930 in his work Vorlesung über Photogrammetrie; from the 1960s onward it was applied on a large scale on computers. When large-format photo scanners for aerial photographs, and video and digital cameras for close-range recordings, became available at the end of the 1980s, the analog methods of photogrammetry were replaced by digital evaluation processes in most applications. In the first decade of the 21st century, the last step towards full digitization took place, as conventional film-based cameras in aerial photography were increasingly replaced by digital sensors.

In recent years, aerial photogrammetry has also gained importance for small-area applications, because powerful camera drones are becoming ever more affordable and, thanks to their compact dimensions and control options, can be used sensibly at altitudes and in places where crewed aircraft are impossible or impractical.

Origin of the term photogrammetry

Albrecht Meydenbauer initially referred to the process in 1867 as "photometrography". The name "Photogrammetrie" was first used on December 6, 1867 as the title of the anonymously published article Die Photogrammetrie in the weekly journal (Wochenblatt) of the Architects' Association in Berlin (later the Deutsche Bauzeitung). The editors of the Wochenblatt commented: “The name photogrammetry is decidedly better than photometrography, although not quite indicative and satisfactory either.” Because Wilhelm Jordan claimed to have introduced the name photogrammetry in 1876, the editors of the Deutsche Bauzeitung announced on June 22, 1892, under miscellaneous notices, that the article published in 1867 had been written by Albrecht Meydenbauer.

Overview

Tasks of photogrammetry

The tasks and methods of the subject, which is mostly taught at technical universities as part of geodesy, are described by Meyers Lexikon as follows:

Recording and evaluation of measurement images, originally only photographic ones, to determine the nature, shape and position of any objects. Photogrammetry is expanding significantly today thanks to new types of image recording devices and digital image processing, made possible by optoelectronics, computer technology and digital mass storage. The main field of application of photogrammetry is geodesy.

Today its tasks also include the production of maps and digital GIS landscape models, as well as special tasks in architectural and accident photogrammetry and in medical applications (e.g. Virtopsy).

Classifications of photogrammetry

Photogrammetry can be classified in different ways. Depending on the location and distance of the recording, a distinction is made between aerial and satellite photogrammetry, terrestrial photogrammetry and close-range photogrammetry. Terrestrial photogrammetry (Erdbildmessung), in which the measurement images are taken from ground-based stations (photo theodolite), is used in geodesy, for example for topographic surveys in high mountains and for engineering surveys. The recording distances are usually less than 300 m, in which case the term close-range photogrammetry is used. In aerial photogrammetry (aerophotogrammetry), the measurement images are taken primarily from an aircraft (or satellite) using a metric camera (see aerial photography) in order to produce topographic maps, carry out cadastral surveys and obtain an elevation model. The use of radar instead of light is also called radargrammetry.

Depending on the number of images used, a distinction is made between single-image photogrammetry, two-image photogrammetry (stereophotogrammetry) and multi-image measurement. The measurement images are rectified in single-image evaluation devices or measured in three dimensions on stereoscopic two-image evaluation devices.

According to the recording and evaluation method, a distinction is made between plane-table photogrammetry with drawn and graphical evaluation (up to approx. 1930), analog photogrammetry for evaluating analog images using optical-mechanical devices (up to approx. 1980), analytical photogrammetry for computer-aided evaluation of analog images (up to approx. 2000) and digital photogrammetry for evaluating digital images on the PC (since around 1985).

Depending on the area of application, a distinction is made between architectural photogrammetry for the measurement of architecture and works of art, and engineering photogrammetry for general engineering applications. In addition, there are non-geodetic applications such as accident and crime scene surveying, biomedical applications, agriculture and forestry, radiology, civil engineering and technical testing.

Principal methods

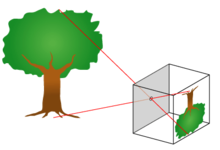

The photogrammetric process begins with capturing an object. The object is either recorded with a passive sensor (such as a camera) or scanned with an active sensor (such as a laser scanner). In either case, the recording is the result of a complex physical process. With a passive sensor, sunlight is usually partly absorbed and partly reflected by the earth's surface and then passes through the atmosphere and the lens of a camera until it hits the light-sensitive sensor. Image recording is therefore subject to a multitude of influences: the electromagnetic radiation as the carrier of information, its interaction with the surface material, the atmospheric conditions and finally the lens and the sensor of the camera. In particular, geometric and radiometric (color) changes occur during image recording. Methods are therefore needed that take these physical conditions into account.

In the photogrammetric evaluation of images, the imaging geometry at the time of recording must be restored. This restoration follows the laws of central projection and observes the coplanarity condition. For each imaged point, an image defines, together with the projection center of the respective camera, a direction (ray) to the object point. If the pose of the camera (position and orientation, the external orientation) and the imaging geometry within the camera (internal orientation) are known, each ray can be described in space.

By using at least two homologous (corresponding) image points from two different recording positions (a stereo image pair), and provided the mutual position of the images (relative orientation) is known, the two rays can be intersected and each object point calculated in three dimensions. As a rule, adjustment methods (bundle adjustment) are used for this. Procedurally, it used to be necessary (and in part still is) to first perform the 3D reconstruction in a temporary model coordinate system and then transform the result into the desired target system (absolute orientation).

Internal orientation

The internal orientation of a camera describes the position of the projection center relative to the image plane. It consists of the coordinates ($x'_0$, $y'_0$) of the principal point of the image and the perpendicular distance between the image plane and the projection center (camera constant $c$). These three elements are the essential parameters of the pinhole camera model and describe its internal geometry. Since a real camera deviates from the idealized pinhole model, additional parameters are required to describe these deviations, which are summarized under the term distortion. Only after the distortion has been corrected do the image positions again match the original imaging rays. For this reason the distortion is usually included in the internal orientation data. The parameters of the internal orientation can be determined by means of a camera calibration.
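To illustrate how distortion parameters extend the ideal pinhole model, the following minimal sketch shifts an ideal image point by a radial polynomial term. The two-coefficient radial model and the names `k1`, `k2`, `x0`, `y0` are assumptions chosen for illustration; the text does not prescribe a particular distortion model.

```python
def apply_radial_distortion(x, y, x0, y0, k1, k2):
    """Shift an ideal (pinhole) image point by a radial distortion term.

    x, y   : ideal image coordinates
    x0, y0 : principal point
    k1, k2 : hypothetical radial distortion coefficients (assumed model)
    """
    dx, dy = x - x0, y - y0          # coordinates relative to the principal point
    r2 = dx * dx + dy * dy           # squared radial distance
    factor = k1 * r2 + k2 * r2 * r2  # relative radial displacement
    return x + dx * factor, y + dy * factor


# With all coefficients zero the point stays where the pinhole model puts it.
print(apply_radial_distortion(3.0, 4.0, 0.0, 0.0, 0.0, 0.0))
```

Correcting a measured image point means inverting this mapping, which for polynomial models is typically done iteratively.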

External orientation

The external orientation describes the spatial pose of the image at the time of recording, in particular the position of the projection center and the viewing direction of the camera. It establishes the relationship between the camera and a higher-level global coordinate system. It is determined with the help of control points, from whose measured image coordinates the orientation is computed iteratively in a spatial resection (backward cut).

Collinearity equations

If the internal and external orientation are known, the functional relationship between the 3D coordinates of the object points and their mapped image coordinates can be established. The underlying model is the pinhole camera, which is the technical realization of the central projection. The mathematical formulation of the central projection yields the collinearity equations, which are the central equations of photogrammetry:

$$x' = x'_0 - c \, s_x \, \frac{r_{11}(X - X_0) + r_{12}(Y - Y_0) + r_{13}(Z - Z_0)}{r_{31}(X - X_0) + r_{32}(Y - Y_0) + r_{33}(Z - Z_0)} + \Delta x'$$

$$y' = y'_0 - c \, s_y \, \frac{r_{21}(X - X_0) + r_{22}(Y - Y_0) + r_{23}(Z - Z_0)}{r_{31}(X - X_0) + r_{32}(Y - Y_0) + r_{33}(Z - Z_0)} + \Delta y'$$

The meanings of the symbols are explained below:

- $c$ - Camera constant

- $r_{ij}$ - Elements (row $i$, column $j$) of the 3 × 3 rotation matrix (from the global to the camera coordinate system)

- $s_x$, $s_y$ - Factors for different scaling of sensor pixels (only necessary for non-square sensor elements)

- $X_0$, $Y_0$, $Z_0$ - Coordinates of the projection center (with respect to the global coordinate system)

- $X$, $Y$, $Z$ - 3D coordinates of an object point (with respect to the global coordinate system)

- $x'_0$, $y'_0$ - Coordinates of the principal point of the image (with respect to the fiducial mark system for analog images (film) or with respect to the sensor system). Note: depending on which alignment is selected for the axes of the sensor system, the fraction for $y'$ must be multiplied by −1, because the $y'$ axis of the sensor coordinate system (SKS) is then directed opposite to that of the image coordinate system (see collinearity equation).

- $\Delta x'$ and $\Delta y'$ - Functions for specifying the distortion corrections

Alternatively, the functional relationship of the central projection can be formulated linearly in homogeneous coordinates with the aid of projective geometry (see projection matrix). This formulation is equivalent to the classical collinearity equations. It originated in computer vision rather than in photogrammetry and is mentioned here for the sake of completeness.
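The central projection described in this section can be sketched in a few lines. The sketch assumes a distortion-free camera with square pixels that looks along its negative z axis, and a rotation matrix `R` that maps global into camera coordinates; the function name `project` and these conventions are assumptions made for illustration, not a fixed standard.

```python
def project(point, center, R, c, x0=0.0, y0=0.0):
    """Project a 3D object point into the image by central projection.

    point  : (X, Y, Z) object point in the global system
    center : (X0, Y0, Z0) projection center in the global system
    R      : 3x3 rotation matrix as nested lists, global -> camera system
    c      : camera constant
    x0, y0 : principal point
    Assumes no distortion and a camera looking along its negative z axis.
    """
    d = [p - q for p, q in zip(point, center)]
    # Rotate the difference vector into the camera coordinate system.
    u, v, w = (sum(R[i][j] * d[j] for j in range(3)) for i in range(3))
    if w == 0:
        raise ValueError("point lies in the plane through the projection center")
    return x0 - c * u / w, y0 - c * v / w


# Nadir-looking camera 1000 m above the ground, camera constant 0.1 m.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(project((100.0, 50.0, 0.0), (0.0, 0.0, 1000.0), identity, 0.1))
```

In the homogeneous formulation the same mapping is written as a single 3 × 4 matrix applied to the point, followed by division by the third component.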

Relative orientation

The relative orientation restores the relative position of two images in space and yields a so-called model. From the image coordinates of corresponding points in the two images, the model coordinates are calculated. In practice, numerous images, for example from aerial photography, can be combined to form a model network.

Absolute orientation

The model network from the relative orientation already reproduces the geometry of the points in the terrain, but its spatial orientation does not yet match the terrain and its scale is still unknown. By means of a three-dimensional Helmert transformation, the model coordinates of the network are transformed onto the known control points in the terrain. The Helmert transformation fits the points into the existing point field in such a way that the residual discrepancies in the coordinates are minimal. If a residual error interpolation is applied in addition, these discrepancies can also be eliminated.
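A three-dimensional Helmert transformation has seven parameters: three translations, three rotations and one scale. The sketch below only applies a given transformation and reports the remaining discrepancies at control points; estimating the parameters by adjustment is not shown. The function names and the representation of the rotation as a ready-made 3 × 3 matrix are assumptions for illustration.

```python
import math


def helmert_3d(p, scale, R, t):
    """Apply a 3D Helmert (similarity) transformation: X = t + scale * R * p."""
    return tuple(
        t[i] + scale * sum(R[i][j] * p[j] for j in range(3)) for i in range(3)
    )


def residuals(model_pts, control_pts, scale, R, t):
    """Distances between transformed model points and their control points."""
    return [
        math.dist(helmert_3d(p, scale, R, t), q)
        for p, q in zip(model_pts, control_pts)
    ]


# Rotation by 90 degrees about the z axis, scale 2, shift (10, 20, 30).
Rz90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
print(helmert_3d((1.0, 0.0, 0.0), 2.0, Rz90, (10.0, 20.0, 30.0)))
```

In an adjustment, the seven parameters are chosen so that the sum of the squared residuals over all control points becomes minimal.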

In the past, pairs of aerial images were evaluated in aerial image evaluation devices, which achieved relative and absolute orientation by physically reconstructing the ray bundles. Today the evaluation usually takes place in comparators, in which image coordinates are measured directly. The subsequent steps are procedures of numerical photogrammetry that use model block and bundle block adjustment.

Camera calibration

During camera calibration, the imaging properties, i.e. the internal orientation of the camera and the external orientation, are calculated from the known image and 3D coordinates of the object points.

Image measurement

Image measurement determines the exact image coordinates of the image of an object point. In the simplest case, it is done manually: a person determines the position of the object point of interest on a negative or positive with a measuring device. Since this method is slow and very error-prone, computer-aided methods based on digital image processing and pattern recognition are used today almost without exception to search for and measure objects in images. These tasks become much easier when artificial signal markers are used, which can be identified automatically and localized in the image very precisely, to 1/50 to 1/100 of a pixel.
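One simple way to locate a bright marker with sub-pixel resolution is the intensity-weighted centroid of its gray values. The sketch below is only an illustration of that idea; it is an assumption that such a centroid operator is used, and it is not claimed to reach the accuracy figures quoted above.

```python
def weighted_centroid(patch):
    """Return the intensity-weighted centroid (row, col) of a 2D gray-value patch."""
    total = sum(sum(row) for row in patch)
    if total == 0:
        raise ValueError("patch contains no signal")
    # Weight each row index and each column index by the gray value found there.
    r = sum(i * v for i, row in enumerate(patch) for v in row) / total
    c = sum(j * v for row in patch for j, v in enumerate(row)) / total
    return r, c


# A marker that covers two neighboring pixels equally lies halfway between them.
patch = [
    [0, 0, 0],
    [0, 10, 10],
    [0, 0, 0],
]
print(weighted_centroid(patch))
```

In practice the background gray value is usually subtracted first, so that pixels outside the marker do not pull the centroid towards the patch center.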

Resection (backward cut)

The resection (backward cut) calculates the camera pose, i.e. the external orientation, from the known internal orientation, the 3D coordinates of the object points and their image coordinates. In classical geodesy, at least three fixed points are required for this, whereas a larger number of points is usually used with measurement images to increase accuracy. (Note: normally 6 control points are required to orient a measurement image.)

Forward intersection (forward cut)

With a forward intersection (forward cut), the 3D coordinates of object points can be calculated from at least two known external and internal orientations and the associated image coordinates. The prerequisite is that at least two photographs of the object were taken from different directions; whether they were taken simultaneously with several cameras or sequentially with one camera is irrelevant to the principle.
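Geometrically, this step intersects two rays in space. Because measured rays rarely meet exactly, a common simplification is to take the midpoint of the shortest segment between them. The sketch below assumes that each ray is already given by a projection center and a direction in the global system; the function name is hypothetical, and a rigorous solution would use an adjustment instead.

```python
def intersect_rays(p1, d1, p2, d2):
    """Midpoint of the shortest segment between two 3D rays p + s * d."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [a - b for a, b in zip(p1, p2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    q1 = [p + s * k for p, k in zip(p1, d1)]
    q2 = [p + t * k for p, k in zip(p2, d2)]
    return tuple((u + v) / 2 for u, v in zip(q1, q2))


# Two cameras 10 units apart, both looking at the same object point.
print(intersect_rays((0, 0, 0), (5, 5, -10), (10, 0, 0), (-5, 5, -10)))
```

The distance between the two closest points is a useful quality check: a large value indicates a wrong point correspondence or a poor orientation.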

Model block adjustment

For the model block adjustment, pairs of images must first be relatively oriented in an analog or analytical evaluation device. The resulting spatial model coordinates are then transformed to the earth's surface in a joint adjustment of linked three-dimensional Helmert transformations (absolute orientation). The equations to be solved involve only rotations, translations and a scale. By referring the coordinates to their centroid, the normal equations of the adjustment decouple, and the 7 unknowns needed to adjust a model are split into two systems of normal equations with 4 and 3 unknowns. Because the numerical demands are modest, this calculation method was widely used.

A program system developed at the University of Stuttgart at the end of the 1970s was called PAT-M43 (program system for aerotriangulation, model block adjustment with 4 or 3 unknowns). Model block adjustment achieves mean errors of ± 7 µm in position and ± 10 µm in height. Similar program systems emerged elsewhere, such as Orient at the Vienna University of Technology.

Bundle block adjustment

The bundle block adjustment is the most important method of photogrammetry and the one most frequently used for static measurement objects. With it, all unknown quantities of the collinearity equations can be calculated from rough approximate values for the external and internal orientation. The only known quantities required are the image coordinates of the object points, together with additional observations in the form of a length scale or the spatial coordinates of control points. The main advantage lies in the possibility of simultaneous calibration: the measuring camera is calibrated during the actual measurement. Measurement and calibration recordings are therefore identical, which reduces the effort required for the measurement and at the same time guarantees that the measuring camera is always calibrated. However, not all configurations of object points are suitable for simultaneous calibration. In such cases, either additional object points must be included in the measurement or separate calibration recordings must be made.

The bundle block adjustment is based, as the name suggests, on the joint computation of ray bundles. From a theoretical point of view, it is the more rigorous method compared with the model block adjustment, and obtaining the initial data is nevertheless easier. The entire computation, from model formation to absolute orientation, takes place in a single adjustment. However, the numerical requirements are much higher than for model block adjustment: the normal equations do not decouple, and the number of unknowns is significantly higher, at up to several thousand.

Further procedures

Structured light or fringe projection

Another method is structured light scanning. Here, a projector casts structured light onto the object to be measured, and a camera records it. If the mutual position of the projector and the camera is known, the points imaged along a stripe can be intersected with the known orientation of that stripe from the projector. There are several variants that work with different projected light patterns (see also fringe projection and light-section method).
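For a single projected stripe, the intersection described above amounts to cutting a camera ray with the light plane spanned by the projector. The following sketch assumes that the plane is given by a point and a normal vector in the same coordinate system as the camera ray; the function and parameter names are hypothetical.

```python
def intersect_ray_plane(origin, direction, plane_point, plane_normal):
    """Intersect a camera ray with the light plane of a projected stripe."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the light plane")
    # Ray parameter at which the ray reaches the plane.
    s = dot([p - o for p, o in zip(plane_point, origin)], plane_normal) / denom
    return tuple(o + s * d for o, d in zip(origin, direction))


# Camera at the origin; the light plane passes through (0, 0, 5), normal (1, 0, 1).
print(intersect_ray_plane((0, 0, 0), (1, 0, 1), (0, 0, 5), (1, 0, 1)))
```

The intersection is well conditioned only when the camera ray meets the light plane at a sufficiently large angle, which is why projector and camera are mounted some distance apart.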

Laser scanning

Laser scanning (see also terrestrial laser scanning) comprises various methods in which a laser "scans" the surface line by line. For each individual position, the distance to the object is determined by a transit-time measurement. Lidar (light detection and ranging) works on the same principle.
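The transit-time principle reduces to one line of arithmetic: the pulse travels to the object and back, so the distance is half the path covered in the measured time. The sketch assumes propagation at the vacuum speed of light and ignores atmospheric effects; the function name is chosen for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum


def distance_from_round_trip(t_seconds):
    """Range from a round-trip transit time: the pulse travels out and back."""
    return SPEED_OF_LIGHT * t_seconds / 2.0


# Transit time of a pulse reflected by an object 10 m away.
t = 2 * 10.0 / SPEED_OF_LIGHT
print(distance_from_round_trip(t))
```

A timing error of one nanosecond corresponds to roughly 15 cm in distance, which shows how demanding the time measurement is.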

3D TOF cameras

The abbreviation 3D-TOF stands for 3D time-of-flight. This method is likewise based on measuring the transit time of light. The difference from other methods such as laser scanning or lidar is that the sensor is two-dimensional. As in a normal digital camera, the image plane contains evenly arranged light sensors, together with tiny LEDs (or laser diodes) that emit a pulse of infrared light. The light reflected from the surface is captured by the optics and imaged onto the sensor. A filter ensures that only light of the emitted wavelength passes through. This makes it possible to determine the distances across an entire surface patch simultaneously.

Applications

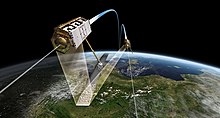

Aerial photogrammetry

In aerial photogrammetry, the photographs are taken with airborne digital or analog metric cameras. The result is usually a regular block of images arranged in strips, in which neighboring images overlap considerably. The image blocks are oriented, i.e. transformed into a common coordinate system. This orientation is based on control points and tie points within the framework of a bundle block adjustment. Subsequent products such as 3D points, digital terrain models (DTM) and orthophotos can be derived from the oriented images. The results of aerial photogrammetry are used to create and update topographic maps and orthophotos, for large-scale point determination in real estate cadastres and for land consolidation. Land use surveys as well as environmental and pipeline cadastres also benefit from the results of aerial photogrammetry.

Close range photogrammetry

Close-range photogrammetry deals with objects in a size range from a few centimeters to around 100 meters.

The simplest method is to photograph an object together with a reference scale. The scale can be made of paper, cardboard, plastic or similar material; for larger objects, leveling rods can be used. This method is used in medical documentation, archaeology and forensics. By comparing the object with the scale, its actual size can be calculated.
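The comparison with the scale is a simple proportion. The sketch below assumes that object and scale lie in the same plane, that this plane is roughly parallel to the image plane, and that lens distortion is negligible; the function and parameter names are chosen for illustration.

```python
def object_size(object_px, scale_px, scale_length):
    """Real-world length of an object from its length in pixels.

    object_px    : measured length of the object in the image (pixels)
    scale_px     : measured length of the reference scale in the image (pixels)
    scale_length : known real length of the reference scale (any unit)
    """
    if scale_px <= 0:
        raise ValueError("scale length in pixels must be positive")
    return object_px * scale_length / scale_px


# A 10 cm scale spans 150 pixels; the object spans 450 pixels.
print(object_size(450, 150, 10.0))
```

If the object has noticeable depth or the camera is tilted, the proportion no longer holds across the image and a rectification or a full photogrammetric evaluation is needed.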

In close-range photogrammetry, unlike aerial photogrammetry, there are no restrictions on the arrangement of the images. Any shooting positions can be used, such as those that arise when an object is photographed from several directions with a handheld camera. As a rule, high-resolution digital cameras are used for this today.

The most common fields of application of close-range photogrammetry are industrial measurement technology (see fringe projection), medicine and biomechanics, and accident recording. In architecture and archaeology, close-range photogrammetry is used for building surveys, i.e. for documentation as the basis for renovations and monument conservation measures.

Rectified photographs are an important by-product of close-range photogrammetry. These are photographs of almost flat objects such as building facades, which are projected onto a surface in such a way that distances in the image can be converted into metric lengths using a simple scale.

More recently, cinematography has also adopted techniques from photogrammetry. In the 1999 film Fight Club, for example, the technique made unusual tracking shots possible. With the release of The Matrix in the same year, the bullet-time effect first attracted worldwide attention.

Literature

- Konsortium Luftbild GmbH - Stereographik GmbH Munich (Ed.): The photogrammetric terrain measurement. Self-published, Munich 1922.

- Karl Kraus: Photogrammetry. de Gruyter, Berlin 2004, ISBN 3-11-017708-0.

- Thomas Luhmann: Close-range photogrammetry. Wichmann, Heidelberg 2003, ISBN 3-87907-398-8.

- McGlone, Mikhail, Bethel (Eds.): Manual of Photogrammetry. 5th edition. ASPRS, Bethesda MD 2004, ISBN 1-57083-071-1.

- Alparslan Akça, Jürgen Huss (Eds.) et al.: Aerial image measurement and remote sensing in forestry. Wichmann, Karlsruhe 1984, ISBN 3-87907-131-4.

References

- Duden | Photogrammetry | Spelling, meaning, definition, origin. Retrieved January 5, 2020.

- Thomas Luhmann: Close-range photogrammetry: basics, methods and applications. 3rd, completely revised and expanded edition. Wichmann, Berlin 2010, ISBN 978-3-87907-479-2.

- Orel Eduard von. In: Austrian Biographical Lexicon 1815–1950 (ÖBL). Volume 7, Verlag der Österreichischen Akademie der Wissenschaften, Vienna 1978, ISBN 3-7001-0187-2, p. 243 f.

- Albrecht Meydenbauer: The photometrography. In: Wochenblatt des Architektenverein zu Berlin, Vol. 1, 1867, No. 14, pp. 125–126; No. 15, pp. 139–140; No. 16, pp. 149–150.

- Photogrammetry. In: Wochenblatt des Architektenverein zu Berlin, Vol. 1, 1867, No. 49, pp. 471–472.

- Wochenblatt des Architektenverein zu Berlin, Vol. 1, 1867, No. 49, p. 471, footnote.

- Zeitschrift für Vermessungswesen 26, 1892, p. 220, with reference to Wilhelm Jordan's article On the utilization of photography for geometric recordings (photogrammetry). In: Zeitschrift für Vermessungswesen 5, 1876, pp. 1–17.

- Deutsche Bauzeitung, Volume 26, 1892, No. 50, p. 300. See Albrecht Grimm: The origin of the word photogrammetry. In: International Archives for Photogrammetry, Vol. 23, 1980, pp. 323–330; Albrecht Grimm: The Origin of the Term Photogrammetry. In: Dieter Fritsch (Ed.): Proceedings of 51st photogrammetric week. Berlin 2007, pp. 53–60.

- Meyers Lexikon (Memento from November 12, 2006 in the Internet Archive).

- K. Kraus 2004; F. K. List 1998.

Web links

- Photogrammetry in traffic accident recording

- Swiss Society for Photogrammetry in the archive database of the Swiss Federal Archives