Oversampled binary image sensor

An oversampled binary image sensor is a new type of image sensor whose properties are reminiscent of conventional photographic film. Each pixel in the sensor has a binary output: a measurement of the local light intensity, quantized to just one bit. The response function of the image sensor is non-linear and resembles a logarithmic function, which makes the sensor suitable for high-contrast images.

Introduction

Before the advent of digital image sensors, photography relied on film to record images for most of its history. At the heart of any photographic film is a large number of light-sensitive grains of silver halide crystals. During exposure, each micrometer-sized grain has a binary fate: it is either hit by a few incident photons and becomes "exposed", or it is missed by the photon bombardment and remains "unexposed". In the subsequent development process, exposed grains are converted to metallic silver because of their changed chemical properties and contribute to the opaque areas of the film; unexposed grains are washed away and leave transparent areas on the film. Photographic film is therefore essentially a binary imaging medium that uses local differences in the density of opaque silver grains to encode the original light intensity. Thanks to the small size and large number of these grains, the quantized nature of the film is hardly noticeable from a reasonable viewing distance, and only a continuous gray tone is seen.

The properties of the oversampled binary image sensor are reminiscent of photographic film. Each pixel in the sensor has a binary output: a measurement of the local light intensity, quantized to just one bit. At the beginning of the exposure time, all pixels are set to 0. A pixel is then set to 1 if the number of photons arriving within the exposure time reaches at least a certain threshold value q. One way of constructing such binary sensors is to modify conventional memory chip technology so that each memory cell is sensitive to visible light. With current CMOS technology, the integration density of such systems can exceed 10^9 to 10^10 pixels per chip (i.e. 1 to 10 gigapixels). In this case the resulting pixel sizes (around 50 nm) are far below the diffraction limit of light, so the image sensor oversamples the optical resolution of the light field. This spatial redundancy can be used to compensate for the information lost in the quantization to one bit, as is done in oversampled delta-sigma conversion.

The construction of a binary sensor that mimics the principle of photographic film was first envisioned by Fossum, who coined the name digital film sensor. The original motivation was mainly technical necessity. The miniaturization of camera systems calls for a continuous reduction in pixel size. At some point, however, the limited saturation capacity (the maximum number of photoelectrons that a pixel can hold) becomes the bottleneck and leads to a very low signal-to-noise ratio and poor dynamic range. In contrast, a binary sensor, whose pixels only need to detect a few photoelectrons around a low threshold value q, places much lower demands on saturation capacity, which means that pixel sizes can be reduced further.

Image acquisition

The lens

Consider the simplified camera model shown in Figure 1. Let $\lambda_0(x)$ denote the incident light intensity field. Assuming that light intensities remain constant within a short exposure time, the field can be modeled as a function of the spatial variable $x$ alone. After passing through the optical system, the original light field $\lambda_0(x)$ is filtered by the lens, which behaves like a linear system with a fixed impulse response. Due to imperfections (e.g. aberrations) in the lens, the impulse response, also known as the point spread function (PSF) of the optical system, cannot be a Dirac delta and thus limits the resolution of the observable light field. A more fundamental physical limitation is the diffraction of light. As a result, the PSF is inevitably a small blurred spot, even with an ideal lens. In optics, such a diffraction-limited spot is often referred to as the Airy disk, the radius $R_a$ of which can be calculated as

$$R_a = 1.22\, w f,$$

where $w$ is the wavelength of light and $f$ is the f-number of the optical system. Due to the low-pass (smoothing) nature of the PSF, the resulting light field $\lambda(x)$ has a limited spatial resolution, i.e. it has a limited number of degrees of freedom per unit of space.
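As a rough worked example, the standard expression for the Airy disk radius (1.22 times the wavelength times the f-number) can be evaluated and compared with the pixel size of about 50 nm quoted in the introduction. The wavelength and f-number below are illustrative assumptions, not values from the cited work.

```python
def airy_disk_radius(wavelength_m: float, f_number: float) -> float:
    """Radius of the first dark ring of the diffraction pattern of an ideal lens."""
    return 1.22 * wavelength_m * f_number


wavelength = 500e-9   # assumed: green light, 500 nm
f_number = 2.8        # assumed aperture setting
pixel_pitch = 50e-9   # pixel size quoted in the introduction

radius = airy_disk_radius(wavelength, f_number)
pixels_per_radius = radius / pixel_pitch

print(f"Airy disk radius: {radius * 1e6:.3f} um")
print(f"Pixels spanning one radius: {pixels_per_radius:.1f}")
```

Under these assumptions the radius is about 1.7 micrometers, so a few dozen 50 nm pixels fit across a single diffraction-limited spot, which is what "oversampling the optical resolution" means here.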

The sensor

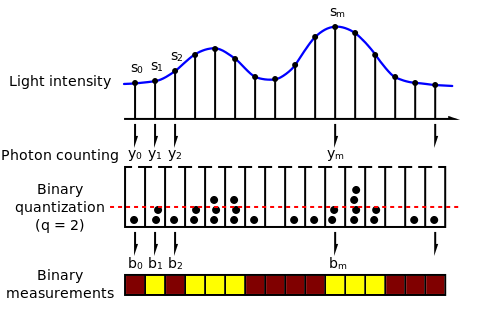

Figure 2 shows the binary sensor model. The values $s_m$ denote the exposure that each sensor pixel has received. Depending on the local value of $s_m$, each pixel (shown as a "bucket" in the figure) collects a different number of photons arriving on its surface. $y_m$ is the number of photons that hit the surface of the $m$-th pixel during the exposure time. The relationship between $s_m$ and the photon count $y_m$ is stochastic. More precisely, $y_m$ can be modeled as a realization of a Poisson random variable whose intensity parameter equals $s_m$.

Each pixel in the image sensor acts as a light-sensitive device that converts photons into an electrical signal whose amplitude is proportional to the number of photons hitting the pixel. In a conventional sensor design, the analog electrical signals are subsequently quantized to 8 to 14 bits (usually the more bits the better) by an analog-to-digital converter. The binary sensor, however, quantizes to 1 bit. In Figure 2, $b_m$ is the quantized output of the $m$-th pixel. Since the photon counts $y_m$ are drawn from random variables, so are the outputs $b_m$ of the binary sensor.
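The pixel model described above (Poisson photon count, then a one-bit threshold) can be sketched in a few lines. This is a minimal simulation, not the implementation from the cited work: the function names, the exposure value, and the pixel count are illustrative assumptions, and the Poisson sampler uses Knuth's multiplication method, which is only practical for small mean values.

```python
import math
import random


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Draw one Poisson sample with Knuth's multiplication method (small means only)."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def binary_pixel(exposure: float, q: int, rng: random.Random) -> int:
    """Return 1 if the simulated photon count reaches the threshold q, else 0."""
    return 1 if sample_poisson(exposure, rng) >= q else 0


def prob_one(exposure: float, q: int) -> float:
    """P(photon count >= q) for a Poisson count with the given mean."""
    below = sum(math.exp(-exposure) * exposure**k / math.factorial(k) for k in range(q))
    return 1.0 - below


rng = random.Random(0)                 # fixed seed for repeatability
exposure, q, n_pixels = 0.7, 1, 100_000  # assumed values for illustration
bits = [binary_pixel(exposure, q, rng) for _ in range(n_pixels)]
empirical = sum(bits) / n_pixels

print(f"empirical P(b=1) = {empirical:.4f}, theoretical = {prob_one(exposure, q):.4f}")
```

The fraction of pixels that read 1 should approach the theoretical probability as the number of pixels grows, which is the redundancy that the oversampling exploits.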

Spatial and temporal oversampling

If temporal oversampling is permitted, i.e. several separate images are recorded one after the other with the same exposure time, then under certain conditions the performance of the binary sensor equals that of a sensor with the same degree of spatial oversampling. This means that spatial oversampling can be traded off against temporal oversampling. This matters in practice, because there are usually technical limits on both pixel size and exposure time.

Advantages over conventional sensors

Because a conventional pixel has a limited saturation capacity, it saturates when the light intensity is too high. For this reason, the dynamic range of the pixel is low. In the oversampled binary image sensor, the dynamic range is defined not for a single pixel but for a group of pixels, which leads to a high dynamic range.
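One way to see why a group of binary pixels saturates gently is to compute the expected number of pixels that read 1 as the light level rises. The sketch below assumes Poisson photon counts, a threshold of q = 1, and an exposure that is split evenly over the group; the group size of 1024 is a hypothetical value.

```python
import math


def expected_ones(total_exposure: float, n_pixels: int) -> float:
    """Expected number of pixels reading 1 when exposure is split evenly (q = 1)."""
    per_pixel = total_exposure / n_pixels
    return n_pixels * (1.0 - math.exp(-per_pixel))


n_pixels = 1024  # assumed size of the pixel group
for total_exposure in (10, 100, 1_000, 10_000):
    ones = expected_ones(total_exposure, n_pixels)
    print(f"{total_exposure:>6} photons on average -> {ones:7.1f} of {n_pixels} pixels set")
```

At low light the count of set pixels grows almost linearly with the exposure, while at high light it flattens out and approaches the group size without ever reaching it, which gives the compressive, film-like response mentioned at the start of the article.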

Reconstruction

One of the most important problems with oversampled binary image sensors is the reconstruction of the light intensity from the binary measurements. It can be solved using maximum likelihood estimation. Figure 4 shows the result of reconstructing the light intensity from 4096 binary images taken by a single-photon avalanche diode (SPAD) camera.
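For the simplest special case the maximum likelihood estimate has a closed form, which makes the idea easy to illustrate. The sketch below assumes a threshold of q = 1 and a light intensity that is constant over a group of K pixels, so each pixel reads 1 with probability 1 - exp(-c/K) for a total exposure c; maximizing the resulting Bernoulli likelihood gives c = -K ln(1 - K1/K), where K1 is the number of ones. The general case (spatially varying intensity, q > 1) needs numerical optimization and is not shown; all names and values here are illustrative.

```python
import math
import random


def ml_exposure(bits):
    """Closed-form ML estimate of the total exposure for q = 1 and constant intensity."""
    n_pixels, n_ones = len(bits), sum(bits)
    if n_ones == n_pixels:
        return math.inf  # every pixel fired: the likelihood has no finite maximizer
    return -n_pixels * math.log(1.0 - n_ones / n_pixels)


rng = random.Random(1)                     # fixed seed for repeatability
true_exposure, n_pixels = 2000.0, 4096     # assumed values for illustration
p_one = 1.0 - math.exp(-true_exposure / n_pixels)  # P(pixel reads 1) for q = 1

# For q = 1 each pixel is a Bernoulli trial, so the bits can be drawn directly.
bits = [1 if rng.random() < p_one else 0 for _ in range(n_pixels)]
estimate = ml_exposure(bits)

print(f"true exposure = {true_exposure:.0f}, ML estimate = {estimate:.0f}")
```

The logarithm in the estimator undoes the compressive response of the pixel group, so the estimate stays usable until almost every pixel has fired.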

References

- L. Sbaiz, F. Yang, E. Charbon, S. Süsstrunk and M. Vetterli: The Gigavision Camera, Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1093-1096, 2009.

- F. Yang, Y. M. Lu, L. Sbaiz and M. Vetterli: Bits from Photons: Oversampled Image Acquisition Using Binary Poisson Statistics, IEEE Transactions on Image Processing, Volume 21, Issue 4, pages 1421-1436, 2012.

- T. H. James: The Theory of the Photographic Process, 4th Edition, New York: Macmillan Publishing Co., Inc., 1977.

- S. A. Ciarcia: A 64K-bit dynamic RAM chip is the visual sensor in this digital image camera, Byte Magazine, pages 21-31, September 1983.

- Y. K. Park, S. H. Lee, J. W. Lee et al.: Fully integrated 56nm DRAM technology for 1Gb DRAM, in IEEE Symposium on VLSI Technology, Kyoto, Japan, June 2007.

- J. C. Candy and G. C. Temes: Oversampling Delta-Sigma Data Converters: Theory, Design and Simulation, New York, NY: IEEE Press, 1992.

- E. R. Fossum: What to do with sub-diffraction-limit (SDL) pixels? - A proposal for a gigapixel digital film sensor (DFS), in IEEE Workshop on Charge-Coupled Devices and Advanced Image Sensors, Nagano, June 2005, pages 214-217.

- M. Born and E. Wolf: Principles of Optics, 7th Edition, Cambridge: Cambridge University Press, 1999.

- L. Carrara, C. Niclass, N. Scheidegger, H. Shea and E. Charbon: A gamma, X-ray and high energy proton radiation-tolerant CMOS image sensor for space applications, in IEEE International Solid-State Circuits Conference, February 2009, pages 40-41.