Switch (network technology)

A switch (also called a network switch or distributor) is a coupling element in computer networks that connects network segments with one another. Within a segment (broadcast domain), it ensures that the data packets, called "frames", arrive at their destination. In contrast to a repeater hub, which looks very similar at first glance, a switch does not simply forward frames to all other ports but only to the port to which the target device is connected: it makes a forwarding decision based on the automatically learned hardware addresses of the connected devices.

The term switch generally refers to a multiport bridge: an active network device that forwards frames based on information from the data link layer (layer 2) of the OSI model. Sometimes the more precise terms bridging hub or switching hub are used; in the IEEE 802.3 standard the function is called MAC bridge. The term (packet) "switching" is borrowed from circuit-switching technology, although nothing is actually "switched". The first EtherSwitch was introduced by Kalpana in 1990.

The comparable device on layer 1 is referred to as a (repeater) hub. Switches that also process data on the network layer (layer 3 and higher) are often referred to as layer 3 switches or multilayer switches and can fulfill the function of a router. In addition to Ethernet switches there are Fibre Channel switches, and SAS expanders are also increasingly referred to as switches. Fibre Channel (FC) defines a non-routable standard protocol from the field of storage networks, which was designed as a variant of SCSI for the high-speed transmission of large amounts of data. SAS (Serial Attached SCSI) is the direct successor to the older parallel SCSI interface.

Features and functions

Simple switches work exclusively on layer 2 (data link layer) of the OSI model. When it receives a frame, the switch processes the 48-bit MAC address (e.g. 08:00:20:ae:fd:7e) and creates an entry in the source address table (SAT), in which the physical port on which the frame was received is stored alongside the MAC address. In contrast to a hub, frames are then forwarded only to the port that is listed in the SAT for the corresponding destination address. If the route to the destination address is still unknown (learning phase), the switch forwards the frame to all other active ports. Switches also handle broadcasts; these are forwarded to all ports.

One difference between a bridge and a switch is the number of ports: bridges typically have only two ports, rarely three or more, whereas switches as individual devices have around 5 to 50 ports, and modular switches can have several hundred. From SOHO to large building installations to data centers, the transitions between device classes are fluid. Larger devices mostly have metal housings and are equipped with mounting brackets for installation in 10" or 19" racks. All ports should be able to send and receive simultaneously and independently of one another (non-blocking). Another possible difference from bridges is that some switch types support cut-through technology and other extensions (see below), which reduces latency, i.e. the delay between sending a request and receiving the response.

With few exceptions, a switch is a bridge, but not every bridge is a switch. One exception is bridges that can connect different protocols such as Token Ring and Ethernet (MAC bridge or LLC bridge); such functionality is not found in switches.

A switch is largely transparent to the connected devices. If communication mainly takes place between devices within a segment, using a switch drastically reduces the number of frames circulating in the other segments. However, if a switch has to forward frames to other segments, its use tends to add a delay to communication (latency). If a segment is overloaded or the switch has insufficient buffer memory, frames can be discarded. This must be compensated for by higher-layer protocols such as TCP.

Layer 2 and Layer 3 switches

A distinction is made between layer 2 switches and layer 3 or higher switches. Layer 2 devices are often simpler models. Smaller devices often offer only basic functions and usually have no management functions (they are, however, plug-and-play capable), or only a limited range such as port locks or statistics. Professional layer 3 or higher switches usually also have management functions; in addition to the basic switch functions, they offer control and monitoring functions that can be based on information from layers above layer 2, such as IP filtering, prioritization for Quality of Service, and routing. In contrast to a router, a layer 3 switch makes the forwarding decision in hardware and thus faster, with lower latency. The range of functions of layer 4 and higher switches differs greatly from manufacturer to manufacturer, but functions such as network address translation / port address translation and load balancing are usually implemented in hardware.

Management

Depending on the manufacturer, a switch with management functions is configured or controlled via a command line (over Telnet or SSH), a web interface, special control software or a combination of these options. Among current unmanaged (plug-and-play) switches, some higher-quality devices also offer functions such as tagged VLANs or prioritization yet still do without a console or other management interface.

Functionality

Unless otherwise indicated, layer 2 switches are assumed below. The individual inputs/outputs of a switch, the so-called "ports", can receive and send data independently of one another. They are connected to one another either via an internal high-speed bus (backplane switch) or crosswise (matrix switch). Data buffers ensure that, as far as possible, no frames are lost.

Source Address Table

As a rule, a switch does not have to be configured. If it receives a frame after switching on, it saves the MAC address of the sender and the associated interface in the Source Address Table (SAT).

If the target address is found in the SAT, the receiver is in the segment that is connected to the associated interface. The frame is then forwarded to this interface. If the receiving and target segments are identical, the frame does not have to be forwarded, as communication can take place in the segment itself without a switch.

If the destination address is not (yet) in the SAT, the frame must be forwarded to all other interfaces. In an IPv4 network, the SAT entry is usually made during the ARP address requests, which are necessary anyway. First, the sender MAC address can be learned from the ARP request; the recipient MAC address is then learned from the response frame. Since the ARP requests are broadcasts and the responses always go to MAC addresses that have already been learned, no unnecessary traffic is generated. Broadcast addresses are never entered in the SAT and are therefore always forwarded to all segments. Simple devices treat frames to multicast addresses like broadcasts. More sophisticated switches can often handle multicasts properly and send multicast frames only to the registered recipients of the multicast address.

In a sense, switches learn the MAC addresses of the devices in the connected segments automatically.
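The learning and forwarding behaviour described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular switch is implemented: the class name `LearningSwitch`, the method `receive` and the representation of frames as plain source/destination strings are hypothetical, and aging of SAT entries is left out.

```python
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    """Toy layer 2 switch that learns sender addresses per port (hypothetical model)."""

    def __init__(self, num_ports: int) -> None:
        self.ports = list(range(num_ports))
        # Source address table: MAC address -> port on which it was last seen.
        self.sat: Dict[str, int] = {}

    def receive(self, in_port: int, src: str, dst: str) -> List[int]:
        """Process one frame and return the ports it is forwarded to."""
        # Learn (or refresh) the sender's port; broadcast is never entered.
        if src != BROADCAST:
            self.sat[src] = in_port
        # Broadcasts and still unknown destinations go to all other ports.
        if dst == BROADCAST or dst not in self.sat:
            return [p for p in self.ports if p != in_port]
        out_port = self.sat[dst]
        # Receiving and target segment are identical: nothing to forward.
        if out_port == in_port:
            return []
        return [out_port]
```

Fed with an ARP-style exchange (a broadcast request followed by a unicast reply), the table fills from the request and the reply is already delivered to a single port.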

Different ways of working

An Ethernet frame contains the destination address in the first 48 bits (6 bytes) after the so-called preamble. Forwarding to the target segment can therefore begin after the first six bytes have been received, while the rest of the frame is still arriving. A frame is 64 to 1518 bytes long; the last four bytes contain a CRC checksum (cyclic redundancy check) for detecting faulty frames. Data errors in a frame can therefore only be detected after the entire frame has been read.
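The two positions named above (destination address at the front, checksum in the last four bytes) can be illustrated as follows. This is a sketch under assumptions: the function names are hypothetical, the frame is taken without its preamble, and the checksum is modelled as the CRC-32 from Python's `zlib` module stored in little-endian byte order.

```python
import struct
import zlib


def destination_mac(frame: bytes) -> str:
    """Read the destination address from the first six bytes of the frame."""
    return ":".join(f"{b:02x}" for b in frame[:6])


def append_fcs(frame_without_fcs: bytes) -> bytes:
    """Append a 4-byte CRC-32 checksum (byte order is an assumption of this sketch)."""
    return frame_without_fcs + struct.pack("<I", zlib.crc32(frame_without_fcs))


def fcs_ok(frame: bytes) -> bool:
    """Recompute the checksum over everything except the last four bytes."""
    body, fcs = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body)) == fcs
```

`destination_mac` needs only the first six bytes, whereas `fcs_ok` needs the complete frame, which is exactly the trade-off between early forwarding and error detection.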

Depending on the requirements for the delay time and error detection, switches can therefore be operated differently:

- Cut-through

- a) Fast-forward switching: a very fast method, implemented mainly in better switches. The switch makes a forwarding decision for the incoming frame directly after reading the destination MAC address and forwards the frame while it is still being received. The latency is made up of the length of the preamble (8 bytes), the destination MAC address (6 bytes) and the response time of the switch. Because forwarding starts as early as possible, however, the frame cannot be checked for correctness, so the switch also forwards possibly damaged frames. Since layer 2 has no error correction, faulty frames only burden the connection concerned. (Correction can only take place in higher network layers.) If errors become too frequent, some switches switch down to the slower but error-free store-and-forward mode (see below).

- b) Fragment-free: faster than store-and-forward switching but slower than fast-forward switching; found especially in better switches. With this method, the switch checks whether a frame reaches the minimum length of 64 bytes (512 bits) required by the Ethernet standard and only then sends it on to the destination port, without performing a CRC check. Fragments under 64 bytes are usually the debris of a collision and no longer form a meaningful frame.

- Store-and-forward: the safest but also the slowest switching method, with the greatest latency; every switch supports it. The switch first receives the entire frame (stores it; "store"), calculates the checksum over the frame and then makes its forwarding decision based on the destination MAC address. If the calculated checksum differs from the CRC value stored at the end of the frame, the frame is discarded. In this way, no faulty frames spread in the local network. For a long time, store-and-forward was the only possible method when sender and receiver worked with different transmission speeds or duplex modes or used different transmission media. The latency in bits is identical to the total packet length plus the response time of the switch; with Ethernet, Fast Ethernet and Gigabit Ethernet in full-duplex mode the packet length is at least 576 bits and at most the maximum packet size of 12,208 bits. Today there are also switches that support a cut-and-store hybrid mode, which reduces latency even when transferring data between slow and fast connections.

- Error-free cut-through / adaptive switching: a mixture of several of the above methods, also mostly implemented only by more expensive switches. The switch initially works in cut-through mode and sends the frame on to the correct port. However, it keeps a copy of the frame in memory and uses it to calculate a checksum. If the checksum does not match the CRC value stored in the frame, the switch can no longer flag the already forwarded frame as faulty, but it can increment an internal counter that tracks the error rate per unit of time. If too many errors occur in a short period, the switch falls back to store-and-forward mode. Once the error rate has dropped far enough, it returns to cut-through mode. It can also temporarily switch to fragment-free mode if too many fragments shorter than 64 bytes arrive. If the sender and receiver have different transmission speeds or duplex modes, or use different transmission media (e.g. fiber optics and copper), the data must likewise be transmitted using store-and-forward.
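The latency figures quoted for the individual methods can be put side by side with a small calculation. This is a rough sketch: the function names are hypothetical, the response time of the switch is left out, and the value for fragment-free mode (preamble plus the first 64 bytes) is an assumption derived from the description, not a figure from the text.

```python
PREAMBLE_BYTES = 8
DEST_MAC_BYTES = 6
MIN_FRAME_BYTES = 64
MAX_FRAME_BYTES = 1518


def wait_bits(mode: str, frame_bytes: int) -> int:
    """Bits the switch must receive before it can start forwarding."""
    if not MIN_FRAME_BYTES <= frame_bytes <= MAX_FRAME_BYTES:
        raise ValueError("frame length must be 64 to 1518 bytes")
    if mode == "fast-forward":
        return (PREAMBLE_BYTES + DEST_MAC_BYTES) * 8
    if mode == "fragment-free":
        # Assumption: preamble plus the first 64 bytes of the frame.
        return (PREAMBLE_BYTES + MIN_FRAME_BYTES) * 8
    if mode == "store-and-forward":
        return (PREAMBLE_BYTES + frame_bytes) * 8
    raise ValueError(f"unknown mode: {mode}")


def wait_microseconds(mode: str, frame_bytes: int, link_mbit_per_s: float) -> float:
    """Convert the waiting time to microseconds (bits / (Mbit/s) = microseconds)."""
    return wait_bits(mode, frame_bytes) / link_mbit_per_s
```

For store-and-forward the function reproduces the 576-bit and 12,208-bit bounds given above; for fast-forward the result is independent of the frame length.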

Today's networks distinguish between two architectures, symmetrical and asymmetrical switching, according to whether the ports' connection speeds are uniform. With asymmetrical switching, i.e. when the sending and receiving ports have different speeds, the store-and-forward principle is used. With symmetrical switching, i.e. the coupling of identical Ethernet speeds, the cut-through concept is used.
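How a switch might choose between the modes can be sketched as a small state machine that combines the adaptive fallback with the symmetric/asymmetric rule. Everything specific here is an assumption for illustration: the class name, the two thresholds and the idea of evaluating the CRC error count once per fixed interval are hypothetical, and the temporary fragment-free mode is omitted.

```python
CUT_THROUGH = "cut-through"
STORE_AND_FORWARD = "store-and-forward"


class AdaptiveModeSelector:
    """Hypothetical mode selection with hysteresis on the CRC error count."""

    def __init__(self, fallback_errors: int = 10, recover_errors: int = 2) -> None:
        self.fallback_errors = fallback_errors  # assumed threshold, not from a standard
        self.recover_errors = recover_errors    # assumed threshold, not from a standard
        self.mode = CUT_THROUGH                 # the switch starts in cut-through mode

    def update(self, crc_errors: int) -> str:
        """Feed the CRC error count of the last interval and return the new mode."""
        if self.mode == CUT_THROUGH and crc_errors > self.fallback_errors:
            self.mode = STORE_AND_FORWARD
        elif self.mode == STORE_AND_FORWARD and crc_errors <= self.recover_errors:
            self.mode = CUT_THROUGH
        return self.mode

    def mode_for(self, in_mbit: int, out_mbit: int) -> str:
        """Ports with different speeds always require store-and-forward."""
        if in_mbit != out_mbit:
            return STORE_AND_FORWARD
        return self.mode
```

Using two different thresholds keeps the switch from flapping between modes when the error rate hovers around a single limit; that is a design choice of this sketch, not something the description prescribes.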

- Port switching, segment switching

In the early days of switching technology there were two variants: port switching and segment switching. This distinction plays only a subordinate role in modern networks, since all commercially available switches are capable of segment switching on all ports.

- A port switch manages only one SAT entry, i.e. one MAC address, per port. Only end devices (server, router, workstation) may be connected to such a port, not further segments, i.e. no bridges, hubs or switches behind which there are several MAC addresses (see MAC flooding). In addition, there was often an "uplink port" that connects the local devices "to the outside" and to which this restriction did not apply. This port often had no SAT but was simply used for all MAC addresses that were not assigned to another local port. Such switches usually work according to the cut-through method. Despite these apparent restrictions, there were also advantages: the switches managed with extremely little memory (lower costs), and because of the minimal size of the SAT the switching decision could be made very quickly.

- All newer switches are segment switches and can manage numerous MAC addresses on each port, i.e. they can connect further network segments. There are two different SAT arrangements: either each port has its own table of, for example, at most 250 addresses, or there is a common SAT for all ports with, for example, at most 2000 entries. Caution: some manufacturers specify 2000 address entries but mean 8 ports with a maximum of 250 entries per port.

Multiple switches in a network

With early switches, several devices usually had to be connected either via a special uplink port or via a crossover cable; newer switches, as well as all Gigabit Ethernet switches, support Auto-MDI(X), so they can be coupled together without special cables. Often, but not necessarily, uplink ports are implemented using faster or higher-quality (Ethernet) transmission technology than the other ports (e.g. Gigabit Ethernet instead of Fast Ethernet, or fiber optic cables instead of twisted-pair copper cables). In contrast to hubs, almost any number of switches can be connected to one another. The upper limit has nothing to do with a maximum cable length but depends on the size of the address table (SAT). Current entry-level devices often allow 500 entries (or more), which limits the maximum number of nodes (roughly, computers) to these 500. If several switches are used, the device with the smallest SAT limits the maximum number of nodes. High-quality devices can handle many thousands of addresses. If an address table that is too small overflows during operation, all frames that cannot be assigned must be forwarded to all other ports, as with MAC flooding, which can cause a drastic drop in transmission performance.

To increase reliability, many devices allow connections to be set up redundantly. In this case, the repeated transport of broadcasts and switching loops are prevented by the spanning tree that is established via the Spanning Tree Protocol (STP). Meshing (IEEE 802.1aq, Shortest Path Bridging) is another way of making a network redundant with loops while also increasing performance. Here, arbitrary loops may be formed between meshing-capable devices; to increase performance, all loops (including partial loops) can continue to be used for unicast traffic, similar to trunking (no simple spanning tree is created). Multicast and broadcast traffic must be handled separately by the meshing switch and may be forwarded on only one of the available meshed connections.

If a switch in a network is connected to itself without further precautions, or if several switches are connected cyclically, a so-called switching loop is created. Because data packets are duplicated and circulate endlessly, such a faulty topology usually leads to a total failure of the network.
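Whether a planned cabling contains such a loop can be checked offline with a simple graph test. The sketch below is not the Spanning Tree Protocol and does not model bundled links; it only reports whether a list of links (pairs of hypothetical switch names) contains a cycle, using a union-find structure.

```python
from typing import Dict, Iterable, Tuple


def has_switching_loop(links: Iterable[Tuple[str, str]]) -> bool:
    """Return True if the given switch-to-switch links contain a cycle."""
    parent: Dict[str, str] = {}

    def find(node: str) -> str:
        # Locate the representative of the node's group, halving paths on the way.
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    for a, b in links:
        root_a, root_b = find(a), find(b)
        # Both ends are already connected: this link closes a loop.
        # This also covers a switch cabled to itself and parallel links.
        if root_a == root_b:
            return True
        parent[root_a] = root_b
    return False
```

A chain of switches passes the check, while a ring, a second parallel link between two switches, or a cable from a switch back to itself is reported as a loop.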

A better use of multiple connections (links) is port bundling (also called trunking, bonding or Etherchannel, depending on the manufacturer), in which up to eight similar connections (as of 2009) can be operated in parallel to increase speed. Professional switches support this method and can be connected in this way from switch to switch or from switch to server. A standard is defined with LACP (first IEEE 802.3ad, later IEEE 802.1AX), but interconnecting switches from different manufacturers can sometimes be problematic. In addition to a few manufacturer-specific protocols, there are also non-negotiated, so-called static bundles. Such port bundling is limited to several links between two devices; connecting three or more switches this way, for example in an active ring, is not possible. Without STP, either a switching loop forms or frames do not reach their destination reliably; with STP, one of the links is blocked; only with SPB can all links actually be used.

In the switching environment, stacking is a technology with which several independent, stackable switches are configured as one common logical switch with a higher number of ports and common management. Stacking-capable switches offer special ports, the so-called stacking ports, which usually work with a particularly high transmission rate and low latency. With stacking, the switches, which usually have to come from the same manufacturer and model series, are connected to one another with a special stack cable. A stacking connection is usually the fastest connection between several switches and transfers not only data but also management information. Such interfaces can be more expensive than standard high-speed ports, which can of course also be used as uplinks. Uplinks are always possible, but not all switches support stacking.

Architectures

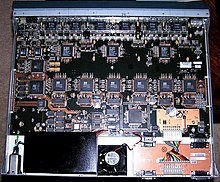

The core of a switch is the switching fabric, through which the frames are transferred from the input port to the output port. The switching fabric is implemented entirely in hardware to ensure low latency and high throughput. In addition to the pure forwarding task, it collects statistical data such as the number of frames transferred, (frame) throughput or errors. Switching can be carried out in three ways:

- Shared memory switching: This concept is based on the idea that a computer and a switch work in a similar way: they receive data via input interfaces, process it and pass it on via output ports. A received frame signals its arrival to the switch processor via an interrupt. The processor extracts the destination address, looks up the corresponding output port and copies the frame into the buffer. A speed estimate follows from the consideration that if N frames/s can be read into and out of the memory, the switching rate cannot exceed N/2 frames/s.

- Bus switching: With this approach, the receiving port transmits a frame to the output port over a shared bus without intervention by the processor. The bottleneck is the bus, over which only one frame can be transferred at a time. A frame that arrives at the input port and finds the bus busy is therefore placed in the queue of the input port. Since each frame has to traverse the bus separately, the switching speed is limited to the bus throughput.

- Matrix switching: The matrix principle is a possibility to remove the throughput limitation of the shared bus. A switch of this type consists of a switching network that connects N input and N output ports via 2N lines. A frame arriving at an input port is transmitted on the horizontal bus until it intersects the vertical bus leading to the desired output port. If this line is blocked by the transmission of another frame, the frame must be placed in the queue of the input port.
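The throughput limits of the first and third approach can be illustrated with a toy model. The sketch makes several assumptions that are not in the text: time is divided into slots, each input port has a FIFO queue of destination port numbers, and when two inputs want the same output the lower-numbered input wins. The function names are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


def shared_memory_rate_limit(memory_frames_per_s: float) -> float:
    """Each frame is written once and read once, so at most N/2 frames/s are switched."""
    return memory_frames_per_s / 2


def crossbar_slot(input_queues: List[Deque[int]]) -> Dict[int, int]:
    """Run one time slot of a crossbar and return {input port: output port} transfers."""
    busy_outputs = set()
    transferred: Dict[int, int] = {}
    for in_port, queue in enumerate(input_queues):
        if not queue:
            continue
        out_port = queue[0]
        # The line to this output is already in use: the frame waits at the input.
        if out_port in busy_outputs:
            continue
        busy_outputs.add(out_port)
        transferred[in_port] = queue.popleft()
    return transferred
```

Frames for different outputs cross the matrix in the same slot, whereas frames competing for one output are served one after another, which is the queueing behaviour described for the matrix principle.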

Advantages

Switches have the following advantages:

- If two network subscribers send at the same time, there is no data collision (see CSMA/CD), since the switch can carry both transmissions internally via the backplane. If data arrives at a port faster than it can be sent on via the network, the data is buffered. If possible, flow control is used to ask the sender(s) to send the data more slowly. If eight computers are connected via an 8-port switch and pairs of them send data to each other at full speed, so that four full-duplex connections exist, the result is arithmetically eight times the speed of a corresponding hub, where all devices share the maximum bandwidth: 4 × 200 Mbit/s as opposed to 100 Mbit/s. However, two aspects speak against this calculation. On the one hand, the internal processors, especially in the low-cost segment, are not always designed to serve all ports at full speed. On the other hand, a hub with several computers will never reach 100 Mbit/s, since the more heavily the network is used, the more collisions occur, which in turn throttles the usable bandwidth. Depending on the manufacturer and model, the actually achievable throughput is more or less clearly below the theoretical 100%; with inexpensive devices, data rates between 60% and 90% are quite common.

- The switch records in a table which station can be reached via which port. For this purpose, the sender MAC addresses of the frames passing through are saved during operation. Data is forwarded only to the port where the recipient is actually located, which prevents eavesdropping via the promiscuous mode of the network card, as was still possible in networks with hubs. Frames with (still) unknown destination MAC addresses are treated like broadcasts and forwarded to all ports except the source port.

- The full duplex mode can be used so that data can be sent and received at one port at the same time, thus doubling the transmission rate. Since collisions are no longer possible in this case, better use is made of the physically possible transmission rate.

- The speed and the duplex mode can be negotiated independently at each port.

- Two or more physical ports can be combined into one logical port (HP: Bundling, Cisco: Etherchannel) in order to increase bandwidth; this can be done using static or dynamic methods (e.g. LACP or PAgP).

- A physical switch can be divided into several logical switches using VLANs. VLANs can span multiple switches (IEEE 802.1Q).
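The bandwidth comparison from the first item of this list can be reproduced as a short calculation. The function names and the `efficiency` parameter are hypothetical; the latter is only a crude stand-in for the 60% to 90% throughput mentioned for inexpensive devices.

```python
def switch_aggregate_mbit(hosts: int, port_mbit: float, efficiency: float = 1.0) -> float:
    """Aggregate rate when hosts talk pairwise over full-duplex switch ports."""
    pairs = hosts // 2
    # Each pair uses its ports in both directions at once (2 x port speed).
    return pairs * 2 * port_mbit * efficiency


def hub_aggregate_mbit(port_mbit: float) -> float:
    """All stations on a hub share one half-duplex medium."""
    return port_mbit


ideal = switch_aggregate_mbit(8, 100)          # 4 pairs x 200 Mbit/s
ratio = ideal / hub_aggregate_mbit(100)        # idealized factor compared with a hub
low_cost = switch_aggregate_mbit(8, 100, 0.6)  # assuming only 60% is reached
```

The idealized factor matches the "eight times" figure in the text; the reduced value shows how quickly the advantage shrinks on hardware that cannot serve all ports at full speed.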

Disadvantages

- One disadvantage of switches is that troubleshooting in such a network can be more difficult. Frames are no longer visible on all branches of the network but, ideally, only on those that actually lead to the destination. To enable the administrator to monitor network traffic nonetheless, some switches are capable of port mirroring. The administrator tells the (manageable) switch which ports are to be monitored. The switch then sends copies of the frames from the observed ports to a port selected for this purpose, where they can be recorded, for example, by a sniffer. The SMON protocol, described in RFC 2613, was developed to standardize port mirroring.

- Another disadvantage is latency, which is higher with switches (100BaseTX: 5–20 µs) than with hubs (100BaseTX: <0.7 µs). Since the CSMA method offers no guaranteed access times anyway and the differences are in the range of millionths of a second, this is seldom significant in practice. Whereas a hub simply forwards an incoming signal to all network participants, the switch must first find the correct output port using its MAC address table; this saves bandwidth but costs time. In practice, however, the switch has the advantage, since the absolute latency in an unswitched network, due to the inevitable collisions, exceeds the latency of a full-duplex (almost collision-free) switch even when the network is only lightly loaded. (The highest speed is achieved neither with hubs nor with switches but by connecting two network end devices directly with a crossover cable. However, for computers with one network card each, this limits the number of network participants to two.)

- Switches are star distributors in a star-shaped network topology and, with Ethernet, offer no redundancy (without port bundling, STP or meshing). If a switch fails, communication between all participants in the (sub)network is interrupted; the switch is then the single point of failure. This can be remedied by port bundling with failover, in which each computer has at least two LAN cards and is connected to two switches. However, port bundling with failover requires LAN cards and switches with the appropriate software (firmware).

Security

With classic Ethernet over thinwire or thickwire cabling, as well as in networks that use hubs, eavesdropping on all network traffic was comparatively easy. Switches were initially considered much more secure. However, there are methods of recording other people's data traffic even in switched networks without the switch cooperating:

- MAC flooding: The storage space in which the switch remembers the MAC addresses attached to each port is limited. MAC flooding exploits this by overloading the switch with fake MAC addresses until its memory is full. In this case, the switch changes to a fail-open mode, in which it behaves like a hub again and forwards all frames to all ports. Various manufacturers have implemented protective measures against MAC flooding, again almost exclusively in switches in the medium to high price range. As a further security measure, most managed switches allow a list of permitted sender MAC addresses to be created for a port. Protocol data units (here: frames) with an invalid sender MAC address are not forwarded and can cause the port concerned to be switched off (port security).

- MAC spoofing - Here the attacker sends frames with a foreign MAC address as the sender. This overwrites their entry in the source address table, and the switch then sends all data traffic to this MAC to the attacker's switch port. Remedy as in the above case through fixed assignment of the MACs to the switch ports.

- ARP spoofing: Here the attacker takes advantage of a weakness in the design of ARP, which is used to resolve IP addresses into Ethernet addresses. A computer that wants to send a frame via Ethernet needs to know the destination MAC address, which it requests using ARP (ARP request broadcast). If the attacker now answers with his own MAC address for the requested IP address (which is not his own IP address, hence the name spoofing) and is faster than the actual owner of this address, the victim will send its frames to the attacker, who can now read them and, if necessary, forward them to the original destination station. This is not a fault of the switch. A layer 2 switch does not know any protocols higher than Ethernet and can base its forwarding decision only on MAC addresses. If a layer 3 switch is to be self-configuring, it must rely on the ARP messages it reads and therefore also learns the falsified address. However, a managed layer 3 switch can be configured so that the assignment of switch port to IP address is fixed and can no longer be influenced by ARP.
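The port security measure mentioned as a remedy for MAC flooding and MAC spoofing can be sketched as a simple check on the sender address. This is an illustrative model only: the class name, the `admit` method and the `shutdown_on_violation` option are hypothetical and do not correspond to the configuration interface of any real product.

```python
from typing import Dict, Iterable, Set


class PortSecurity:
    """Per-port list of permitted sender MAC addresses (hypothetical model)."""

    def __init__(self, allowed: Dict[int, Iterable[str]],
                 shutdown_on_violation: bool = True) -> None:
        # Normalize to lower case so that comparisons ignore the notation used.
        self.allowed: Dict[int, Set[str]] = {
            port: {mac.lower() for mac in macs} for port, macs in allowed.items()
        }
        self.shutdown_on_violation = shutdown_on_violation
        self.disabled_ports: Set[int] = set()

    def admit(self, port: int, src_mac: str) -> bool:
        """Return True if a frame with this sender address may be forwarded."""
        if port in self.disabled_ports:
            return False
        permitted = self.allowed.get(port)
        if permitted is None:
            return True  # no list configured for this port
        if src_mac.lower() in permitted:
            return True
        # Invalid sender address: drop the frame and optionally shut the port down.
        if self.shutdown_on_violation:
            self.disabled_ports.add(port)
        return False
```

With shutdown enabled, a single frame with a foreign sender address blocks the port until an administrator intervenes, which is strict but also stops a flood of fake addresses at the first frame.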

Parameters

- Forwarding Rate: indicates how many frames per second can be read, processed and forwarded

- Filter Rate: Number of frames processed per second

- Number of manageable MAC addresses (structure and maximum size of the source address table)

- Backplane throughput (switching fabric): capacity of the buses (also crossbar) within the switch

- VLAN capability or flow control.

- Management options such as fault monitoring and signaling, port-based VLANs, tagged VLANs, VLAN uplinks, link aggregation, meshing, Spanning Tree Protocol, bandwidth management, etc.

History

The development of Ethernet switches began in the late 1980s. Thanks to better hardware and various applications with a high demand for bandwidth, 10 Mbit networks quickly reached their limits both in data center operations and on campus networks. To make network traffic more efficient, operators began to segment networks via routers and to form subnetworks. Although this reduced collisions and increased efficiency, it also made the networks more complex and raised installation and administration costs considerably. The bridges of the time were no real alternative either, as they had only a few ports (usually two) and worked slowly: the data throughput was comparatively low and the latency too high. This is where the first switches were born. The first commercially available model had seven 10 Mbit Ethernet ports and was offered in 1990 by the US startup Kalpana (later taken over by Cisco). The switch had a higher data throughput than Cisco's high-end router and was far cheaper. In addition, there was no need for restructuring: it could be placed easily and transparently in the existing network. This marked the beginning of the triumphal march of "switched" networks.

Soon thereafter, Kalpana developed the port trunking method Etherchannel, which allows multiple ports to be bundled and used together as an uplink or backbone to increase data throughput. Fast Ethernet switches (non-blocking, full duplex) reached market maturity in the mid-1990s. Repeater hubs were still defined in the standard for Gigabit Ethernet, but none actually exist. For 10 Gigabit networks, hubs are no longer defined at all; everything is "switched". Today, segments with several thousand computers are connected simply and efficiently with switches, without additional routers. Switches are used in business and private networks as well as in temporary networks such as LAN parties.

Web links

- RFC 2613 - Remote Network Monitoring MIB Extensions for Switched Networks Version 1.0
