Convolutional code

Convolutional codes (also convolutional code ;. English Convolutional Code ) - a concept of coding theory - be, as well as block codes in which communications technology to channel coding used, as they provide a forward error correction . The additional redundancy that is introduced provides greater protection against transmission or storage errors. Thanks to the mathematical convolution process that gives it its name , the information content of the individual user data locations is distributed over several locations in the code word.

Convolution codes have nothing to do with the similar sounding code convolution .

meaning

Convolution codes are used, for example, in mobile communications and satellite transmissions for digital data transmission. However, they are also used for storage media such as hard drives , where they serve to protect against read errors. A combination of convolution coding and digital modulation is the Trellis Coded Modulation .

A convolutional encoder generally maps k information bits (useful data bits) on the input side onto an n- bit long code word , where k is smaller than n . Successive code words are dependent on one another, i. H. In contrast to block codes, a convolutional encoder has an internal “memory”. However, since in practice only finitely long data sequences can be processed, these sequences are limited to a certain number of code words. The convolutional encoder is then brought back into a defined state by termination , which is usually the same as the initial state. Therefore, common convolutional codes can also be described as a form of special, non-systematic block codes.

In the case of convolutional codes, the information which a specific user data bit carries is distributed over several positions (bits) of the code word. The distribution of the information content - this can also be thought of as a kind of "smear" over individual bits of the code word - is achieved through the mathematical function of the convolution . This creates dependencies between the individual code bits. If individual digits of the code word are falsified by errors, whereby the number of errors per code word must not exceed a certain upper limit, the convolutional decoder can determine the correct user data sequence from the digits of the code word adjacent to the error location through the information distributed over several digits.

An essential feature of convolutional codes is that there is no known systematic method for their construction. Convolution codes are primarily obtained through computationally intensive simulations and trying out a large number of different convolution structures or through chance discoveries. The majority of the structures tried out in the process yield so-called catastrophic convolutional codes , which do not correct certain transmission errors but replace them with a theoretically infinitely long sequence of errors. Therefore, in comparison to the block codes, there are very few convolutional codes that are relevant and usable in practice. To this end, very powerful methods in the form of the Viterbi algorithm are known for decoding convolutional codes using so-called soft decision .

description

A convolutional coder can be described by a shift register , into which the useful data bits are shifted serially, and a combinatorial structure made up of logical XOR links that form the code word on the output side. A distinction is made between two essential structures:

- Non-recursive convolutional encoders

- Recursive convolutional encoders

Recursive convolutional encoders have internal feedback points which can lead to infinitely long output sequences. Recursive convolution structures can be obtained systematically from the non-recursive convolution structures. These encoders are referred to in the literature as RSC encoders ( Recursive Systematic Convolutional Encoder).

The subdivision is motivated in a similar way to the digital filters with finite impulse response (FIR) with a non-recursive structure or the filters with infinite impulse response (IIR) with a recursive structure. However, apart from rough similarities in structure, convolutional encoders have nothing to do with digital filters in particular.

parameter

The length of influence or also the memory order L c results from the structure of a convolutional encoder . It describes the number of clocks that an input bit (user data bit) needs to pass through all positions of the shift register and thus a certain user data bit has an influence on the code word on the output side. In the case of non-recursive convolutional encoders, it corresponds to the number of storage elements in the convolutional encoder.

Another parameter of a convolutional code is its code rate R c . It indicates the ratio of the integer number k of the information bits on the input side to the integer number n of the code bits generated on the output side:

R c is always less than or equal to 1. The number k of information bits on the input side can also be greater than 1. In this case, several useful data bits are sent in parallel to the encoder per cycle. The output-side n code bits, which are also to be tapped in parallel, are converted by a multiplexer into a serial data stream with a correspondingly higher clock rate.

With certain convolutional codes, individual user data bits on the input side can also be assigned directly to certain code bits on the output side without a convolutional coding. In this case, one speaks of asymmetrical convolutional codes. These methods are used as an essential component , for example, in trellis-coded modulation . If, on the other hand, all useful data bits are assigned to their own shift register chains of the memory order L c , one speaks of symmetrical convolutional codes.

Termination

In practical applications, only user data sequences of finite length are important. This makes a so-called termination (ger .: Termination ) required the sequence. This refers to the return of the encoder to a defined final state. If no termination is made at the end of the user data sequence, this has a significant effect on the ability to correct the decoding. If the decoder does not know the final state of a sequence, it can only estimate the last information bits with great uncertainty, which results in an increased error probability. If, on the other hand, the final state and the sequence length N are known and agreed between the encoder and decoder, the last digits of a user data sequence can be reliably determined on the decoder side.

In the case of non-recursive convolutional codes, a sequence of logical 0 bits is typically appended to the end of a user data sequence . This so-called tail leads the encoder back to a defined end state, the so-called zero state, which is also known to the decoder. With these additional termination points at the end, however, the user data sequence is lengthened and the code rate is reduced to the value:

In the case of recursive convolutional codes, the tail depends on the previous useful data.

Graphic representation

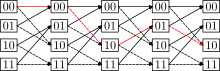

A convolutional coder can be interpreted as a finite automaton by means of a state transition diagram , as it is shown in the adjacent figure for two memories with four states. The number of states generally results in binary code from the number z of state memories to 2 z .

The disadvantage of the form of representation using the state transition diagram is the lack of a time reference. This lack of time reference in serial decoding can be visualized using a trellis diagram (trellis for short). A trellis diagram is the representation of a state transition diagram that is "scrolled" over the time axis. In the context of the trellis diagram, the decoding processes of convolutional codes can also be clearly illustrated with the Viterbi algorithm : The individual transitions from one state to the next are assigned different probability values, which means that in the sequence over several states, usually a single one Forms path in the trellis that has the lowest cumulative error probability compared to all other paths. The symbols assigned to this path are then viewed by the decoder as the symbols that are most likely to be sent and the assigned information bits are output for further processing (MLSE = Maximum Likelihood Sequence Estimation).

In the case of convolutional codes with a long length of influence, however, the number of states in the trellis diagram grow exponentially and this representation, including the transition edges, quickly becomes confusing. The trellis diagram is therefore used to represent the decoding process using the Viterbi algorithm in the case of exemplary convolutional codes with a short influence length.

Decoding

As a rule, the Viterbi decoder is used to decode convolutional codes . As mentioned, this method is based on the trellis representation of the code and determines the most likely associated user data or code sequence for a disturbed code sequence. The Viterbi decoder can process not only binary , but also continuous input sequences. One then speaks of hard or soft decision decoding. In general, soft decision decoders for convolutional codes can be implemented more easily than is the case with block codes.

The classic Viterbi decoder outputs binary sequences - for various applications, however, it is important not only to know the individual decoded bits, but also their reliability. These reliabilities can be generated, for example, with the aid of a modified Viterbi decoder, the so-called soft output Viterbi algorithm (SOVA), or the MAP / BCJR algorithm .

For codes with very large memory orders, the trellis becomes very complex and trellis-based decoding using a Viterbi decoder is therefore very time-consuming. In this case, sequential, sub-optimal decoders can alternatively be used, which work on the representation of the code tree.

Dotting

In the case of convolutional codes, a specific code rate R c can be selected in a targeted manner by puncturing the code word . With puncturing, certain bit positions of the code word are left out (“punctured”), thereby increasing the code rate. The decoder must know this so-called puncturing scheme and take it into account when decoding.

The reason for puncturing is to design the code word lengths precisely for a specific frame length for the subsequent data transmission or data storage. By omitting individual digits of the code word, however, there is also a reduction in the correction performance.

extension

The extension are concatenated convolutional codes. Several different or the same convolutional codes are concatenated with one another in series or in parallel. The chaining of the individual codes takes place via a function which is referred to as an interleaver and enables a uniform distribution of errors to the different codes and results in a kind of decoupling of the partial codes. A code gain can thus be achieved that is greater than the sum of the individual convolutional codes on their own.

Turbo codes are a special form of code chaining . A subgroup of turbo codes, the so-called turbo convolutional codes (TCC), are based on recursive systematic convolutional codes. Non-recursive convolutional codes do not show the same improvement in code gain in the TCC.

literature

- Martin Bossert : Channel coding (= information technology ). 2nd completely revised and expanded edition. BG Teubner, Stuttgart 1998, ISBN 3-519-16143-5 .

- Karl-Dirk Kammeyer , Volker Kühn: MATLAB in communications engineering . J. Schlembach Fachverlag, Weil der Stadt 2001, ISBN 3-935340-05-2 .

- Todd K. Moon: Error Correction Coding. Mathematical Methods and Algorithms . Wiley-Interscience, Hoboken NJ 2005, ISBN 0-471-64800-0 .