Error propagation

In many measurement tasks, a quantity cannot be measured directly but can be determined indirectly from several measurable quantities through a fixed mathematical relationship. Since every measured value of the individual quantities deviates from its correct value (see measurement deviation, older term measurement error), the result of the calculation also deviates from its correct value. The individual deviations are carried through the formula; this is called error propagation. There are calculation rules with which the deviation of the result can be determined or estimated.

Since the distinction between measurement deviation and measurement error was introduced, the term error propagation has to be regarded as outdated. However, because no new term has yet become established, the word error is retained here for linguistic consistency.

Task

- Often a result $y$ has to be calculated from a quantity $x$ or, in the general case, from several quantities $x_1, x_2, \dots$. If the input variable(s) are determined incorrectly, the result is also calculated incorrectly. In the case of major errors, the calculation must be repeated with corrected input values. Otherwise it is more appropriate to determine only the effect of the error or errors on the result.

- In mathematical terms: if a function $y = f(x_1, x_2, \dots)$ has several independent variables $x_i$ that are wrong by small amounts $\Delta x_i$, the result will also be wrong by a small amount $\Delta y$. It should be possible to calculate $\Delta y$.

- In terms of measurement technology: if a measurement result is calculated from measured values of various quantities, and these values deviate from their correct values, then the calculated result also deviates from the correct result. It should be possible to calculate the size of the deviation of the measurement result (within the scope of what is quantitatively reasonable, see error limit).

Possibilities, limitations

Systematic error

In principle, systematic errors can be determined; they have a magnitude and a sign.

- Example: The electrical power converted in a consumer is to be calculated, and the current through the consumer is measured for this purpose. To do this, an ammeter is connected into the line. However, a voltage drops across the measuring instrument, so the voltage at the consumer is lower than the supply voltage. As a result, with an ohmic consumer the current is also smaller, and a slightly too small value is measured. This is a negative deviation caused by the instrument's loading of the circuit, and it can be calculated if the supply voltage and the internal resistance of the instrument are known. The power calculated from the supply voltage and the measured current is therefore too low.
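The following is a minimal numerical sketch of this systematic error. It assumes an ideal voltage source, an ohmic consumer of resistance `r_load`, and an ammeter modelled as a series resistance `r_ammeter`. The function name and all numerical values are hypothetical. The "correct" power is taken to be the one the consumer would draw without the ammeter in the circuit.

```python
def ammeter_systematic_error(u_supply, r_load, r_ammeter):
    """Return (correct power, calculated power, relative error) for the ammeter example.

    Hypothetical model: ideal voltage source, ohmic load, ammeter = series resistance.
    """
    i_true = u_supply / r_load                    # current without the ammeter
    i_measured = u_supply / (r_load + r_ammeter)  # the ammeter drops part of the voltage
    p_true = u_supply * i_true                    # power that should have been found
    p_calculated = u_supply * i_measured          # power calculated from the measured current
    rel_error = (p_calculated - p_true) / p_true  # signed relative systematic error
    return p_true, p_calculated, rel_error

# Hypothetical values: 230 V supply, 100 ohm load, 1 ohm ammeter resistance
p_true, p_calc, rel = ammeter_systematic_error(230.0, 100.0, 1.0)
print(f"P_true = {p_true:.1f} W, P_calc = {p_calc:.1f} W, relative error = {rel:+.2%}")
```

In this model the relative error equals $-R_A/(R + R_A) = -1/101$, or about −0.99%. Because its sign is known, the result could be corrected.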

In the case of systematic errors in the input variables, the systematic error in the output variable can be calculated using the error propagation rules.

Measuring instrument error

Furthermore, it cannot be assumed that the quantity recorded by a measuring instrument is displayed without instrument error. In rare cases, the associated systematic error is known from an error curve for the measured value. In general, only the unsigned limiting value of an instrument error, the error limit, is known.

- Example: If the current intensity in the above example can only be determined with an error limit of 4%, the power can also only be determined in a range from −4% to +4% around the calculated value.

In the case of error limits of the input quantities, the error limit of the output quantity can be calculated using the error propagation rules.

Random error

So far, each of the several input variables (independent variables, measured variables) has had only one value. The situation is different with random errors. These become apparent when several values of an input variable are available, obtained through repeated determination (measurement) under constant conditions. Estimating the random errors yields one component of the unsigned measurement uncertainty. Determining it is one goal of error analysis.

If there are uncertainties in the input variables, the uncertainty in the output variable can be calculated using the error propagation rules.

For measuring instrument errors, it can be assumed that the magnitude of the random error is significantly smaller than the error limit (otherwise the random error must also be taken into account when determining the error limit). For independent measured values whose quality is determined by the error limits of the measuring instruments, an investigation of random errors is not meaningful.

Error in the mathematical model

The quantity to be calculated must be correctly described by the mathematical formula. However, approximations are often used, either to simplify the calculation or because complete knowledge is lacking.

- Example: The supply voltage in the above example is assumed to be known, which is permissible when drawing from a constant-voltage source. However, if the voltage of the source depends on the load, its parameter “open-circuit voltage” is no longer the supply voltage, and another error arises.

An error in the output variable that arises from an inadequate mathematical model of its relationship to the input variables cannot be calculated using error propagation rules.

Error Propagation Rules

Basis

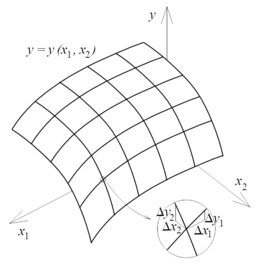

A function can be represented in the neighborhood of a point by a Taylor series expanded about that point. The prerequisite is that the relationship between each input variable and the result variable is a smooth function, as is almost always the case in classical physics and engineering. The following formalism uses only the linear approximation and therefore merely requires differentiability.

Signed errors

One erroneous quantity

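The influence of an erroneous input variable on the result can be estimated using the Taylor series:

$$y + \Delta y = f(x + \Delta x) = f(x) + \frac{f'(x)}{1!}\,\Delta x + \frac{f''(x)}{2!}\,(\Delta x)^2 + \dots$$

If $\Delta x$ is sufficiently small, the series expansion can be truncated after the linear term, because the terms in $(\Delta x)^2$ and higher powers become very small. This yields the approximation

$$\Delta y \approx \frac{\mathrm{d}f}{\mathrm{d}x}\,\Delta x$$

This provides a rule for error propagation if the values $\Delta x$ and $\Delta y$ are interpreted as absolute errors.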
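To see how good the truncation is, the small sketch below compares the linear estimate $\Delta y \approx f'(x)\,\Delta x$ with the exact change $f(x + \Delta x) - f(x)$. It uses the illustrative choice $f(x) = \sqrt{x}$ at $x = 4$ with $\Delta x = 0.04$. The helper `propagate_signed` is hypothetical. Approximating the derivative by a central difference is an assumption of this sketch; an analytic derivative would do just as well.

```python
import math

def propagate_signed(f, x, dx, h=1e-6):
    """Linear (first-order Taylor) estimate of the signed error: dy = f'(x) * dx.

    f'(x) is approximated by a central difference with step h.
    """
    dfdx = (f(x + h) - f(x - h)) / (2 * h)
    return dfdx * dx

f = math.sqrt
x, dx = 4.0, 0.04                 # hypothetical input value and its signed error
dy_linear = propagate_signed(f, x, dx)
dy_exact = f(x + dx) - f(x)       # exact change, for comparison
print(dy_linear, dy_exact)
```

Here $f'(4) = 0.25$, so the linear estimate is 0.01, while the exact change is about 0.009975. The difference comes from the neglected second-order term.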

- Use with proportionality: $y = a\,x \;\Rightarrow\; \Delta y = a\,\Delta x$

- The absolute error $\Delta y$ of the output variable contains the particular proportionality constant $a$. It is better to work with the relative error $\Delta y / y = \Delta x / x$, which is independent of $a$ and always exactly as large as the relative error of the input variable.

- Use with inverse proportionality (forming the reciprocal): $y = a / x \;\Rightarrow\; \Delta y = -\dfrac{a}{x^2}\,\Delta x, \quad \dfrac{\Delta y}{y} = -\dfrac{\Delta x}{x}$

- The relative error of the output variable has the same magnitude as the relative error of the input variable, but the opposite sign.
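Both cases can be checked numerically by computing the exact relative errors for $y = a\,x$ and $y = a / x$. The sketch below assumes hypothetical values $a = 5$ and $x = 10$, with an input that is 1% too large. `relative_error` is an illustrative helper that evaluates the exact relative error rather than the linear rule.

```python
def relative_error(f, x, dx):
    """Exact relative error of y = f(x) when x is wrong by dx."""
    y_true = f(x)
    return (f(x + dx) - y_true) / y_true

a = 5.0          # hypothetical proportionality constant
x = 10.0         # hypothetical correct input value
dx = 0.01 * x    # the input is 1 % too large

prop = relative_error(lambda v: a * v, x, dx)  # y = a*x: +1 %, independent of a
inv = relative_error(lambda v: a / v, x, dx)   # y = a/x: approximately -1 %
print(f"{prop:+.4%}  {inv:+.4%}")
```

The proportional case gives exactly +1% regardless of $a$. The inverse case gives $-1/101 \approx -0.99\%$, which the linear rule approximates as −1%.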

Several erroneous quantities

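If there are several independent input variables $x_1, \dots, x_n$, the corresponding multivariable series expansion is used. It is again truncated after the linear term, as an approximation for small $\Delta x_i$:

$$\Delta y \approx \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}\,\Delta x_i, \qquad \frac{\Delta y}{y} \approx \sum_{i=1}^{n} \frac{x_i}{y}\,\frac{\partial f}{\partial x_i}\,\frac{\Delta x_i}{x_i}$$

$\Delta y$: total error of the result; $\Delta y / y$: relative error of the result; $\Delta x_i$: error of the input quantity $x_i$; $\Delta x_i / x_i$: relative error of the input quantity $x_i$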

The partial derivatives $\partial f / \partial x_i$ used here indicate how much $y$ changes when, of all the independent input variables, only $x_i$ changes; the other input variables are treated as constants.
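Here is a sketch of the linearized rule $\Delta y \approx \sum_i (\partial f/\partial x_i)\,\Delta x_i$. Each partial derivative is estimated by a central difference while the other inputs are held constant, as described above. The helpers `partial_derivatives` and `propagate_signed_multi`, the step size, and the example values are assumptions for illustration. The example is $P = U \cdot I$ with $U$ 1 V too high and $I$ 0.02 A too low.

```python
def partial_derivatives(f, xs, h=1e-6):
    """Central-difference estimates of df/dx_i, holding all other inputs constant."""
    grads = []
    for i in range(len(xs)):
        up = list(xs)
        down = list(xs)
        up[i] += h
        down[i] -= h
        grads.append((f(up) - f(down)) / (2 * h))
    return grads

def propagate_signed_multi(f, xs, dxs):
    """Linear estimate dy = sum_i (df/dx_i) * dx_i for known signed errors dx_i."""
    return sum(g * dx for g, dx in zip(partial_derivatives(f, xs), dxs))

# Hypothetical example: P = U * I at U = 230 V, I = 2 A
power = lambda v: v[0] * v[1]
dp = propagate_signed_multi(power, [230.0, 2.0], [1.0, -0.02])
print(dp)
```

Since $\partial P/\partial U = I = 2$ and $\partial P/\partial I = U = 230$, the estimate is $2 \cdot 1 + 230 \cdot (-0.02) = -2.6$ W. The two errors partly cancel.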

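The general solution simplifies for the four basic arithmetic operations:

| Operation | Function | Propagated error |
|---|---|---|
| Addition | $y = x_1 + x_2$ | $\Delta y = \Delta x_1 + \Delta x_2$ |
| Subtraction | $y = x_1 - x_2$ | $\Delta y = \Delta x_1 - \Delta x_2$ |
| Multiplication | $y = x_1 \cdot x_2$ | $\dfrac{\Delta y}{y} = \dfrac{\Delta x_1}{x_1} + \dfrac{\Delta x_2}{x_2}$ |
| Division | $y = x_1 / x_2$ | $\dfrac{\Delta y}{y} = \dfrac{\Delta x_1}{x_1} - \dfrac{\Delta x_2}{x_2}$ |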

- Note: Values given with an undetermined sign (±) are not errors; the distinction between an error and an error limit must be observed. Different rules apply to error limits and to measurement uncertainties; see the next sections.

These formulas apply only if the actual signed values of the errors are known. As the errors propagate, they can partially reinforce or cancel each other.

- Example: If $x_1$ is 2% too large and $x_2$ is 3% too large, then
  - the product $x_1 \cdot x_2$ is 5% too large;
  - the quotient $x_1 / x_2$ is 1% too small.

- The following example illustrates this. Suppose you want to calculate $100/100$, but insert a number that is 2% too large in the numerator and a number that is 3% too large in the denominator. You then calculate $102/103$ and obtain 0.99. This result deviates from the correct value 1.00 by −1%. The same statement about the error is obtained more easily with the formula $\Delta y / y = 2\,\% - 3\,\% = -1\,\%$, and the minus sign is evidently correct.
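The 2% / 3% example can be checked numerically. The sketch below computes the exact relative errors of the product and the quotient and compares them with the linear rules, under which relative errors add for a product and subtract for a quotient. The correct values of 100 are the hypothetical ones from the example.

```python
# Correct values (hypothetical) and values that are 2 % and 3 % too large
x1_true, x2_true = 100.0, 100.0
x1, x2 = 1.02 * x1_true, 1.03 * x2_true

# Exact relative errors of the product and the quotient
rel_product = (x1 * x2) / (x1_true * x2_true) - 1
rel_quotient = (x1 / x2) / (x1_true / x2_true) - 1

# Linear rules: relative errors add for a product and subtract for a quotient
lin_product = 0.02 + 0.03
lin_quotient = 0.02 - 0.03

print(f"product:  exact {rel_product:+.2%}, linear {lin_product:+.2%}")
print(f"quotient: exact {rel_quotient:+.2%}, linear {lin_quotient:+.2%}")
```

The exact values are about +5.06% and −0.97%, close to the linear +5% and −1%. The small remainder is the neglected second-order term.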

Error limits

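If the errors themselves are not known, but only their limits, the same mathematical approach can be used by interpreting the values as error limits. These are unsigned, i.e. defined as absolute values. In this way only an error limit can be calculated for the result. Moreover, the most unfavorable combination of signs must be assumed, which is done by adding absolute values:

$$G_y = \sum_{i=1}^{n} \left|\frac{\partial f}{\partial x_i}\right| G_{x_i}, \qquad \frac{G_y}{|y|} = \sum_{i=1}^{n} \left|\frac{x_i}{y}\,\frac{\partial f}{\partial x_i}\right| \frac{G_{x_i}}{|x_i|}$$

$G_y$: total error limit of the result; $G_{x_i}$: error limit of the input quantity $x_i$; $G_{x_i} / |x_i|$: relative error limit of the input quantity $x_i$; $G_y / |y|$: relative error limit of the result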
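Here is a worst-case sketch of this rule. It writes the error limit of the result as $G_y = \sum_i \lvert\partial f/\partial x_i\rvert\, G_{x_i}$; the symbol $G$ and the helper `error_limit` are this sketch's own naming. It reuses the earlier power example, assuming $U$ is exact and the current has an error limit of 4%. The values 230 V and 2 A are hypothetical.

```python
def error_limit(f, xs, limits, h=1e-6):
    """Worst-case error limit G_y = sum_i |df/dx_i| * G_xi (linear approximation).

    Partial derivatives are estimated by central differences.
    """
    total = 0.0
    for i, g_xi in enumerate(limits):
        up = list(xs)
        down = list(xs)
        up[i] += h
        down[i] -= h
        dfdxi = (f(up) - f(down)) / (2 * h)
        total += abs(dfdxi) * g_xi   # absolute values: most unfavorable signs
    return total

# Hypothetical power example: U exact, I known only to within 4 %
u, i = 230.0, 2.0
g_p = error_limit(lambda v: v[0] * v[1], [u, i], [0.0, 0.04 * i])
print(g_p / (u * i))   # relative error limit of the power
```

The relative error limit of the power comes out as 0.04, i.e. the ±4% stated in the ammeter example.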

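The general solution simplifies for the four basic arithmetic operations:

| Operation | Function | Propagated error limit |
|---|---|---|
| Addition and subtraction | $y = x_1 \pm x_2$ | $G_y = G_{x_1} + G_{x_2}$ |
| Multiplication and division | $y = x_1 \cdot x_2$ or $y = x_1 / x_2$ | $\dfrac{G_y}{\lvert y \rvert} = \dfrac{G_{x_1}}{\lvert x_1 \rvert} + \dfrac{G_{x_2}}{\lvert x_2 \rvert}$ |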

- Example: If $x_1$ can be up to 2% too large or too small and $x_2$ up to 3% too large or too small, then both the product and the quotient can be up to 5% too large or too small.
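The linear rule bounds only the first-order terms. The sketch below uses only the standard library to evaluate the exact relative errors at all four extreme sign combinations for limits of 2% and 3%. Because the product and the quotient are monotonic in each input, checking only the extreme combinations is sufficient.

```python
from itertools import product

# Relative error limits of x1 and x2 (2 % and 3 %)
g1, g2 = 0.02, 0.03

worst_product = 0.0
worst_quotient = 0.0
# Try every extreme sign combination of the two input errors
for s1, s2 in product((-1, 1), repeat=2):
    e1, e2 = s1 * g1, s2 * g2
    worst_product = max(worst_product, abs((1 + e1) * (1 + e2) - 1))
    worst_quotient = max(worst_quotient, abs((1 + e1) / (1 + e2) - 1))

print(f"linear bound {g1 + g2:.2%}, product {worst_product:.2%}, quotient {worst_quotient:.2%}")
```

The exact worst cases are about 5.06% for the product and about 5.15% for the quotient. Both are slightly above the linear 5% because the second-order terms were dropped. For limits of a few percent, the difference is usually negligible.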

Measurement uncertainties

One erroneous quantity

Suppose several values $x_j$ ($j = 1, \dots, n$) of the quantity $x$ are available, each affected by random errors. According to the rules of error analysis for a normal distribution, the arithmetic mean then gives an improved result compared with a single value:

$$\bar{x} = \frac{1}{n} \sum_{j=1}^{n} x_j$$

Every newly added value shifts the mean by its individual random error and thus makes the mean uncertain. The uncertainty of the calculated mean is given by

$$u_x = \sqrt{\frac{1}{n(n-1)} \sum_{j=1}^{n} \left(x_j - \bar{x}\right)^2}$$

Here the squares of the random deviations are added. For large $n$ the uncertainty tends towards zero, and in the absence of systematic errors the mean tends towards the correct value.
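The following sketch computes the mean and its uncertainty $u = \sqrt{\sum_j (x_j - \bar{x})^2 / (n(n-1))}$ using Python's standard `statistics` module. `statistics.stdev` uses the divisor $n - 1$, so dividing it by $\sqrt{n}$ gives exactly this expression. The readings and the helper name `mean_with_uncertainty` are hypothetical.

```python
import math
import statistics

def mean_with_uncertainty(values):
    """Arithmetic mean and its uncertainty u = s / sqrt(n).

    s is the sample standard deviation (divisor n - 1); at least two values are needed.
    """
    n = len(values)
    mean = statistics.fmean(values)
    s = statistics.stdev(values)
    return mean, s / math.sqrt(n)

readings = [9.81, 9.79, 9.83, 9.80, 9.82]   # hypothetical repeated measurements
m, u = mean_with_uncertainty(readings)
print(f"{m:.3f} ± {u:.3f}")
```

Collecting four times as many readings with the same scatter would roughly halve $u$, in line with the $1/\sqrt{n}$ behavior described above.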

If the mean $\bar{x}$ is used as the input variable in a calculation, its uncertainty $u_x$ affects the uncertainty $u_y$ of the result $\bar{y} = f(\bar{x})$. If $u_x$ is sufficiently small, the linear approximation of the Taylor series can be used for the propagation. Note that uncertainties are defined as absolute (unsigned) values:

$$u_y = \left|\frac{\mathrm{d}f}{\mathrm{d}x}\right| u_x$$
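Here is a sketch of $u_y = \lvert f'(\bar{x})\rvert\, u_x$ for a hypothetical case: the area of a circle, $A = \pi r^2$, computed from a mean radius of 2.00 cm with uncertainty 0.01 cm. The helper `propagate_uncertainty_1d` and the central-difference derivative are assumptions of this sketch.

```python
import math

def propagate_uncertainty_1d(f, x_mean, u_x, h=1e-6):
    """u_y = |f'(x_mean)| * u_x (first-order Taylor term, unsigned)."""
    dfdx = (f(x_mean + h) - f(x_mean - h)) / (2 * h)
    return abs(dfdx) * u_x

# Hypothetical example: circle area from a mean radius of 2.00 cm, u_r = 0.01 cm
area = lambda r: math.pi * r ** 2
u_area = propagate_uncertainty_1d(area, 2.0, 0.01)
print(u_area, u_area / area(2.0))   # relative uncertainty is 2 * u_r / r = 1 %
```

Because $A \propto r^2$, the relative uncertainty doubles: $u_A / A = 2\,u_r / r$.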

Several erroneous quantities

Mutually independent erroneous quantities

If there are several independent input variables $x_i$, their means $\bar{x}_i$ have each been determined with an uncertainty $u_i$. The result $\bar{y} = f(\bar{x}_1, \dots, \bar{x}_n)$ is calculated from the means. To calculate its uncertainty $u_y$, one again starts from the linear approximation for several independent variables. However, as in the calculation of the uncertainty of the mean, the squared contributions of the individual uncertainties must be added:

$$u_y = \sqrt{\sum_{i=1}^{n} \left(\frac{\partial f}{\partial x_i}\, u_i\right)^2}$$

This equation “was formerly called the Gaussian law of error propagation”. “However, it does not concern the propagation of measurement deviations (formerly ‘errors’), but that of uncertainties.”

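The equation simplifies for the four basic arithmetic operations:

| Operation | Function | Propagated uncertainty |
|---|---|---|
| Addition and subtraction | $y = x_1 \pm x_2$ | $u_y = \sqrt{u_1^2 + u_2^2}$ |
| Multiplication and division | $y = x_1 \cdot x_2$ or $y = x_1 / x_2$ | $\dfrac{u_y}{\lvert y \rvert} = \sqrt{\left(\dfrac{u_1}{x_1}\right)^2 + \left(\dfrac{u_2}{x_2}\right)^2}$ |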
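Here is a sketch of the Gaussian law for independent inputs, $u_y = \sqrt{\sum_i (\partial f/\partial x_i\, u_i)^2}$, again using central-difference partial derivatives. It is applied to the hypothetical power $P = U \cdot I$ with $U = 230$ V ($u_U = 1$ V) and $I = 2$ A ($u_I = 0.01$ A). The result is cross-checked against the multiplication rule $u_P / \lvert P\rvert = \sqrt{(u_U/U)^2 + (u_I/I)^2}$. The helper name `gaussian_uncertainty` is illustrative.

```python
import math

def gaussian_uncertainty(f, means, uncertainties, h=1e-6):
    """u_y = sqrt(sum_i (df/dx_i * u_i)^2) for independent inputs (linear model)."""
    total = 0.0
    for i, u_i in enumerate(uncertainties):
        up = list(means)
        down = list(means)
        up[i] += h
        down[i] -= h
        dfdxi = (f(up) - f(down)) / (2 * h)
        total += (dfdxi * u_i) ** 2   # squared contributions are added
    return math.sqrt(total)

# Hypothetical example: P = U * I with U = 230 V (u = 1 V), I = 2 A (u = 0.01 A)
u_p = gaussian_uncertainty(lambda v: v[0] * v[1], [230.0, 2.0], [1.0, 0.01])
rel_rule = math.hypot(1.0 / 230.0, 0.01 / 2.0)   # multiplication rule from the table
print(u_p, u_p / 460.0, rel_rule)
```

Both routes give $u_P = \sqrt{9.29} \approx 3.05$ W, a relative uncertainty of about 0.66%.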

The law is applicable only if the model function behaves sufficiently linearly when the input quantities vary within the range of their standard uncertainties. If this is not the case, the calculation is considerably more complex. The standard DIN 1319 and the “Guide to the Expression of Uncertainty in Measurement” (GUM) explain how to identify and avoid impermissible non-linearity. In addition, homogeneity of variance is assumed.

Mutually dependent erroneous quantities

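If there is a dependency (correlation) between erroneous quantities, the Gaussian law of error propagation must be extended to the generalized Gaussian law of error propagation. This takes into account the covariances, or the correlation coefficients, of each pair of quantities:

$$u_y = \sqrt{\sum_{i=1}^{n} \left(\frac{\partial f}{\partial x_i}\, u_i\right)^2 + 2 \sum_{i=1}^{n-1} \sum_{k=i+1}^{n} \frac{\partial f}{\partial x_i}\,\frac{\partial f}{\partial x_k}\, u_{ik}}$$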

with the covariance $u_{ik} = r_{ik}\, u_i\, u_k$, where $r_{ik}$ is the correlation coefficient of $x_i$ and $x_k$. For independent quantities, the correlation terms vanish and the formula reduces to the one in the section on independent quantities. The relative uncertainty of a quantity derived from two fully correlated quantities can be smaller (better) than the relative uncertainties of both input quantities.
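For two inputs the generalized law reads $u_y^2 = (g_1 u_1)^2 + (g_2 u_2)^2 + 2\, g_1 g_2\, r\, u_1 u_2$, with $g_i = \partial f/\partial x_i$ and correlation coefficient $r$. The sketch below applies it to a hypothetical ratio $y = x_1 / x_2$ of two quantities, each with a relative uncertainty of 1%. It evaluates the ratio once uncorrelated ($r = 0$) and once fully correlated ($r = 1$). The helper `correlated_uncertainty` is illustrative.

```python
import math

def correlated_uncertainty(g1, g2, u1, u2, r):
    """Generalized Gaussian law for two inputs.

    u_y^2 = (g1*u1)^2 + (g2*u2)^2 + 2*g1*g2*cov, with covariance cov = r*u1*u2.
    """
    cov = r * u1 * u2
    var = (g1 * u1) ** 2 + (g2 * u2) ** 2 + 2 * g1 * g2 * cov
    return math.sqrt(max(var, 0.0))   # clip a tiny negative rounding residue

# Hypothetical ratio y = x1 / x2 with x1 = x2 = 10 and u1 = u2 = 0.1 (1 % each)
x1, x2, u1, u2 = 10.0, 10.0, 0.1, 0.1
y = x1 / x2
g1, g2 = 1 / x2, -x1 / x2 ** 2        # partial derivatives of x1 / x2

rel = {r: correlated_uncertainty(g1, g2, u1, u2, r) / y for r in (0.0, 1.0)}
print(rel)
```

With $r = 0$ the relative uncertainty is about 1.41%, larger than either input's 1%. With full correlation ($r = 1$) the two contributions cancel and it drops to zero, which illustrates how a derived quantity can be better than its inputs.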

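The error propagation for a result $y$ with correlated measurement errors can also be formulated as

$$u_y^2 = \sum_{i=1}^{n} \sum_{k=1}^{n} \frac{\partial f}{\partial x_i}\,\frac{\partial f}{\partial x_k}\, V_{ik}$$

with the covariance matrix $V$ of the input quantities (variances $u_i^2$ on the diagonal, covariances off the diagonal).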

Generalized error propagation law

Using matrix notation, the general law of error propagation can be written compactly as

$$V_y = J\, V_x\, J^{\mathsf{T}}$$

where $V_x$ and $V_y$ are the covariance matrices of the vectors $\mathbf{x}$ and $\mathbf{y}$, and $J$ is the Jacobian matrix with elements $J_{ki} = \partial y_k / \partial x_i$. Here $\mathbf{y}$ is not a single result as in the example above, but a vector of several results derived from the input variables $\mathbf{x}$. The standard deviation of each $y_k$ is the square root of the corresponding diagonal element, $\sigma_{y_k} = \sqrt{(V_y)_{kk}}$.
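Here is a dependency-free sketch of $V_y = J\,V_x\,J^{\mathsf{T}}$ using plain nested lists. NumPy would be the more usual choice, but it is avoided here to keep the example self-contained. The following are all hypothetical: the inputs $\mathbf{x} = (U, I)$, their covariance matrix with correlation 0.5, and the outputs $\mathbf{y} = (P, R) = (U I,\, U / I)$. `matmul`, `transpose` and `propagate_covariance` are helper names introduced for this sketch.

```python
import math

def matmul(a, b):
    """Plain-Python matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    """Transpose of a nested-list matrix."""
    return [list(row) for row in zip(*a)]

def propagate_covariance(jacobian, cov_x):
    """V_y = J V_x J^T; also returns the standard deviations sqrt(diag(V_y))."""
    cov_y = matmul(matmul(jacobian, cov_x), transpose(jacobian))
    sigmas = [math.sqrt(cov_y[k][k]) for k in range(len(cov_y))]
    return cov_y, sigmas

# Hypothetical inputs x = (U, I): u_U = 1 V, u_I = 0.01 A, covariance 0.005 (r = 0.5)
U, I = 230.0, 2.0
cov_x = [[1.0 ** 2, 0.005],
         [0.005, 0.01 ** 2]]
# Jacobian of y = (P, R) = (U * I, U / I) at the input means
J = [[I, U],                 # dP/dU, dP/dI
     [1 / I, -U / I ** 2]]   # dR/dU, dR/dI
cov_y, sigmas = propagate_covariance(J, cov_x)
print(sigmas)
```

The diagonal of `cov_y` holds $\sigma_P^2 = 13.89$ and $\sigma_R^2 = 0.293125$. The off-diagonal element is the covariance between $P$ and $R$, which a separate scalar formula for each output would not provide.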