In stochastics, the covariance matrix is the generalization of the variance of a one-dimensional random variable to a multidimensional random variable, i.e. to a random vector. The elements on the main diagonal of the covariance matrix are the respective variances, and all other elements are covariances. The covariance matrix is also called the variance-covariance matrix or, more rarely, the scatter matrix or dispersion matrix (Latin dispersio "dispersion", from dispergere "to distribute, spread, scatter"), and it is a positive semidefinite matrix. If all components of the random vector $\mathbf{X}$ are linearly independent, the covariance matrix is positive definite.

Definition

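Let $\mathbf{X}$ be a random vector

$$\mathbf{X} = (X_1, X_2, \dots, X_n)^\top,$$

where $\operatorname{E}(X_i) = \mu_i$ is the expectation of $X_i$, $\operatorname{Var}(X_i) = \sigma_i^2$ the variance of $X_i$, and $\operatorname{Cov}(X_i, X_j) = \sigma_{ij}$, $i \neq j$, the covariance of the real random variables $X_i$ and $X_j$. The expectation vector of $\mathbf{X}$ is then given by (see expectation of matrices and vectors)

$$\operatorname{E}(\mathbf{X}) = \left(\operatorname{E}(X_1), \dots, \operatorname{E}(X_n)\right)^\top = (\mu_1, \dots, \mu_n)^\top = \boldsymbol{\mu},$$

i.e. the expected value of the random vector is the vector of the expected values. The covariance matrix of the random vector $\mathbf{X}$ is defined as follows:

$$\operatorname{Cov}(\mathbf{X}) = \operatorname{E}\left((\mathbf{X} - \boldsymbol{\mu})(\mathbf{X} - \boldsymbol{\mu})^\top\right) = \begin{pmatrix} \sigma_1^2 & \sigma_{12} & \cdots & \sigma_{1n} \\ \sigma_{21} & \sigma_2^2 & \cdots & \sigma_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_n^2 \end{pmatrix} = \boldsymbol{\Sigma}.$$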

The covariance matrix is denoted by $\operatorname{Cov}(\mathbf{X})$ or $\boldsymbol{\Sigma}_{\mathbf{X}}$, and the covariance matrix of the asymptotic distribution of a random variable by $\mathbf{V}$ or $\overline{\mathbf{V}}$. The covariance matrix and the expectation vector are the most important parameters of a probability distribution. They are attached to a random variable as additional information as follows: $\mathbf{X} \sim (\boldsymbol{\mu}, \boldsymbol{\Sigma})$. The covariance matrix, as the matrix of all pairwise covariances of the elements of the random vector, contains information about its dispersion and about correlations between its components. If none of the random variables $X_1, \dots, X_n$ is degenerate (i.e. none of them has a variance of zero) and there is no exact linear relationship between them, then the covariance matrix is positive definite. A covariance matrix is called scalar if all off-diagonal entries are zero and all diagonal elements equal the same positive constant, i.e. $\boldsymbol{\Sigma} = \sigma^2 \mathbf{I}_n$.

Properties

Basic properties

- For $i = j$ we have $\operatorname{Cov}(X_i, X_j) = \operatorname{Var}(X_i)$. The main diagonal of the covariance matrix therefore contains the variances of the individual components of the random vector, so all elements on the main diagonal are nonnegative.

- A real covariance matrix is symmetric because the covariance of two random variables is symmetric.

- The covariance matrix is positive semidefinite: for every vector $\mathbf{a} \in \mathbb{R}^n$, $\mathbf{a}^\top \boldsymbol{\Sigma}\, \mathbf{a} = \operatorname{Var}(\mathbf{a}^\top \mathbf{X}) \geq 0$. Equivalently, owing to its symmetry, every covariance matrix can be diagonalized by a principal axis transformation, and the resulting diagonal matrix is again a covariance matrix. Since its diagonal contains only variances, the diagonal matrix is positive semidefinite, and hence so is the original covariance matrix.

- Conversely, every symmetric positive semidefinite $d \times d$ matrix can be understood as the covariance matrix of a $d$-dimensional random vector.

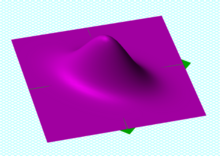

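- Because a covariance matrix is diagonalizable and its eigenvalues (the diagonal entries after diagonalization) are nonnegative owing to positive semidefiniteness, covariance matrices can be represented as ellipsoids.

- For all matrices $\mathbf{A} \in \mathbb{R}^{m \times n}$: $\operatorname{Cov}(\mathbf{A}\mathbf{X}) = \mathbf{A} \operatorname{Cov}(\mathbf{X})\, \mathbf{A}^\top$.

- For all vectors $\mathbf{b} \in \mathbb{R}^n$: $\operatorname{Cov}(\mathbf{X} + \mathbf{b}) = \operatorname{Cov}(\mathbf{X})$.

- If $\mathbf{X}$ and $\mathbf{Y}$ are uncorrelated random vectors, then $\operatorname{Cov}(\mathbf{X} + \mathbf{Y}) = \operatorname{Cov}(\mathbf{X}) + \operatorname{Cov}(\mathbf{Y})$.

- With the diagonal matrix $\mathbf{D} = \operatorname{diag}(\sigma_1^2, \dots, \sigma_n^2)$, the covariance matrix is obtained from the relationship $\boldsymbol{\Sigma} = \mathbf{D}^{1/2}\, \mathbf{P}\, \mathbf{D}^{1/2}$, where $\mathbf{P}$ is the correlation matrix in the population.

- If the random variables are standardized, the covariance matrix contains exactly the correlation coefficients, so it coincides with the correlation matrix.

- The inverse of the covariance matrix, $\boldsymbol{\Sigma}^{-1}$ (which exists if $\boldsymbol{\Sigma}$ is positive definite), is called the precision matrix or concentration matrix.

- For the trace of the covariance matrix: $\operatorname{tr}(\boldsymbol{\Sigma}) = \sum_{i=1}^{n} \operatorname{Var}(X_i) = \operatorname{E}\left((\mathbf{X} - \boldsymbol{\mu})^\top (\mathbf{X} - \boldsymbol{\mu})\right)$.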
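Several of the properties listed above can be checked numerically. The following is a minimal sketch using NumPy; the matrix `sigma`, the transformation `a` and the shift `b` are made-up illustrative values, and the transformation rule is verified on sample covariances, for which the same identity holds up to rounding.

```python
import numpy as np

# Made-up covariance matrix of a 3-dimensional random vector.
sigma = np.array([[4.0, 1.2, 0.0],
                  [1.2, 9.0, -1.5],
                  [0.0, -1.5, 1.0]])

# Symmetry and positive semidefiniteness (all eigenvalues nonnegative).
is_symmetric = np.allclose(sigma, sigma.T)
eigenvalues = np.linalg.eigvalsh(sigma)
is_psd = bool(np.all(eigenvalues >= -1e-12))

# Sigma = D^(1/2) P D^(1/2) with D = diag(variances), P = correlation matrix.
std = np.sqrt(np.diag(sigma))
d_sqrt = np.diag(std)
d_sqrt_inv = np.diag(1.0 / std)
corr = d_sqrt_inv @ sigma @ d_sqrt_inv
reconstructed = d_sqrt @ corr @ d_sqrt

# Cov(AX + b) = A Cov(X) A^T, checked on sample covariances.
rng = np.random.default_rng(0)
x = rng.standard_normal((3, 1000))      # rows = components, columns = draws
a = np.array([[1.0, 2.0, 0.0],
              [0.0, -1.0, 3.0]])
b = np.array([[5.0], [-2.0]])
lhs = np.cov(a @ x + b)                 # covariance of the transformed data
rhs = a @ np.cov(x) @ a.T

# The trace equals the sum of the variances and the sum of the eigenvalues.
trace = np.trace(sigma)
```

The correlation matrix `corr` has ones on its diagonal, and `lhs` agrees with `rhs` because the shift `b` drops out when the data are centered.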

Relationship to the expected value of the random vector

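If $\boldsymbol{\mu} = \operatorname{E}(\mathbf{X})$ is the expectation vector, then it can be shown with Steiner's shift theorem applied to multidimensional random variables that

$$\operatorname{Cov}(\mathbf{X}) = \operatorname{E}\left((\mathbf{X} - \boldsymbol{\mu})(\mathbf{X} - \boldsymbol{\mu})^\top\right) = \operatorname{E}(\mathbf{X}\mathbf{X}^\top) - \boldsymbol{\mu}\boldsymbol{\mu}^\top.$$

Here expectation values of vectors and matrices are to be understood component-wise.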
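As an illustration, the shift formula $\operatorname{E}(\mathbf{X}\mathbf{X}^\top) - \boldsymbol{\mu}\boldsymbol{\mu}^\top$ can be compared with the centered definition for a small discrete random vector. The outcomes and probabilities below are hypothetical values chosen only for this sketch.

```python
import numpy as np

# Hypothetical discrete random vector: each row is one possible outcome.
outcomes = np.array([[0.0, 1.0],
                     [1.0, 3.0],
                     [2.0, 2.0],
                     [4.0, 0.0]])
probs = np.array([0.1, 0.4, 0.3, 0.2])   # probabilities, summing to 1


def covariance_centered(outcomes, probs):
    """E[(X - mu)(X - mu)^T], expectations taken component-wise."""
    mu = probs @ outcomes
    centered = outcomes - mu
    return (centered * probs[:, None]).T @ centered


def covariance_shifted(outcomes, probs):
    """E[X X^T] - mu mu^T (shift theorem)."""
    mu = probs @ outcomes
    second_moment = (outcomes * probs[:, None]).T @ outcomes
    return second_moment - np.outer(mu, mu)


cov_centered = covariance_centered(outcomes, probs)
cov_shifted = covariance_shifted(outcomes, probs)
```

Both functions return the same matrix up to rounding. In floating-point arithmetic the centered form is usually the more stable of the two when the means are large relative to the standard deviations.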

A random vector $\mathbf{X}$ that is to have a given covariance matrix $\boldsymbol{\Sigma}$ and expected value $\boldsymbol{\mu}$ can be simulated as follows. First, the covariance matrix is decomposed (e.g. with the Cholesky decomposition):

$$\boldsymbol{\Sigma} = \mathbf{L}\mathbf{L}^\top.$$

The random vector can then be computed as

$$\mathbf{X} = \mathbf{L}\boldsymbol{\xi} + \boldsymbol{\mu}$$

with a (different) random vector $\boldsymbol{\xi}$ whose components are independent and standard normally distributed.
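The simulation recipe can be sketched with NumPy as follows. The function name `simulate_random_vectors` and the values of `mu` and `sigma` are assumptions made for this example. `np.linalg.cholesky` requires a positive definite matrix, so a merely semidefinite covariance matrix would need a different factorization (for instance via an eigendecomposition).

```python
import numpy as np


def simulate_random_vectors(mu, sigma, size, rng):
    """Draw `size` vectors with mean mu and covariance sigma via X = L xi + mu."""
    mu = np.asarray(mu, dtype=float)
    # Lower-triangular factor with sigma = chol @ chol.T
    # (raises LinAlgError if sigma is not positive definite).
    chol = np.linalg.cholesky(sigma)
    # Independent standard normal components, one column per draw.
    xi = rng.standard_normal((mu.size, size))
    return (chol @ xi).T + mu            # shape (size, n)


mu = np.array([1.0, -2.0])
sigma = np.array([[2.0, 0.6],
                  [0.6, 1.0]])
rng = np.random.default_rng(42)
samples = simulate_random_vectors(mu, sigma, 100_000, rng)

sample_mean = samples.mean(axis=0)
sample_cov = np.cov(samples, rowvar=False)
```

With this many draws, `sample_mean` and `sample_cov` should lie close to `mu` and `sigma`. The agreement is only approximate because it depends on the random sample.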

Covariance matrix of two vectors

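The covariance matrix of two random vectors $\mathbf{X}$ and $\mathbf{Y}$ is

$$\operatorname{Cov}(\mathbf{X}, \mathbf{Y}) = \operatorname{E}\left((\mathbf{X} - \boldsymbol{\mu})(\mathbf{Y} - \boldsymbol{\nu})^\top\right)$$

with $\boldsymbol{\mu}$ the expected value of the random vector $\mathbf{X}$ and $\boldsymbol{\nu}$ the expected value of the random vector $\mathbf{Y}$.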

Covariance matrix as an efficiency criterion

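The efficiency or precision of a point estimator can be measured by means of the variance-covariance matrix, since it contains the information about the dispersion of the random vector and the dependence between its components. In general, the efficiency of a parameter estimator can be measured by the "size" of its variance-covariance matrix: the "smaller" the variance-covariance matrix, the greater the efficiency of the estimator. Let $\tilde{\boldsymbol{\theta}}$ and $\hat{\boldsymbol{\theta}}$ be two unbiased $(n \times 1)$ random vectors. If $\boldsymbol{\theta}$ is an $(n \times 1)$ random vector, then $\operatorname{Cov}(\boldsymbol{\theta})$ is a symmetric and, in the non-degenerate case, positive definite $(n \times n)$ matrix. One can say that $\operatorname{Cov}(\hat{\boldsymbol{\theta}})$ is "smaller" than $\operatorname{Cov}(\tilde{\boldsymbol{\theta}})$ in the sense of the Loewner partial order, i.e. that $\operatorname{Cov}(\tilde{\boldsymbol{\theta}}) - \operatorname{Cov}(\hat{\boldsymbol{\theta}})$ is a positive semidefinite matrix.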
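The Loewner comparison can be tested numerically by checking the eigenvalues of the difference of the two covariance matrices. The helper `loewner_leq` and the example matrices are hypothetical and serve only as a sketch.

```python
import numpy as np


def loewner_leq(cov_a, cov_b, tol=1e-10):
    """True if cov_a <= cov_b in the Loewner order, i.e. cov_b - cov_a is PSD."""
    diff = np.asarray(cov_b, dtype=float) - np.asarray(cov_a, dtype=float)
    diff = (diff + diff.T) / 2.0          # guard against rounding asymmetry
    return bool(np.linalg.eigvalsh(diff).min() >= -tol)


# Made-up covariance matrices of two unbiased estimators.
cov_hat = np.array([[1.0, 0.2],
                    [0.2, 1.0]])
cov_tilde = np.array([[2.0, 0.2],
                      [0.2, 1.5]])

hat_is_more_efficient = loewner_leq(cov_hat, cov_tilde)

# The order is only partial: these two matrices are not comparable.
m1 = np.diag([1.0, 2.0])
m2 = np.diag([2.0, 1.0])
comparable = loewner_leq(m1, m2) or loewner_leq(m2, m1)
```

Here `cov_tilde - cov_hat` is the diagonal matrix with entries 1 and 0.5, so the first comparison holds, while `m1` and `m2` cannot be ordered in either direction.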

Sample covariance matrix

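An estimate $\hat{\boldsymbol{\Sigma}}$ of the covariance matrix in the population is obtained by replacing the population variances $\operatorname{Var}(X_j)$ and covariances $\operatorname{Cov}(X_j, X_k)$, $j \neq k$, with the empirical variances $\hat{\sigma}_j^2$ and empirical covariances $\hat{\sigma}_{jk}$, their empirical counterparts (provided the $x$ variables are random variables, these estimate the parameters in the population). For $n$ observations $x_{ij}$, $i = 1, \dots, n$, of $p$ variables, these are given by

$$\hat{\sigma}_j^2 := s_j^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_{ij} - \overline{x}_j)^2 \quad \text{and} \quad \hat{\sigma}_{jk} := s_{jk} = \frac{1}{n-1} \sum_{i=1}^{n} (x_{ij} - \overline{x}_j)(x_{ik} - \overline{x}_k).$$

This leads to the sample covariance matrix $\mathbf{S}$:

$$\hat{\boldsymbol{\Sigma}} = \mathbf{S} = \begin{pmatrix} s_1^2 & s_{12} & \cdots & s_{1p} \\ s_{21} & s_2^2 & \cdots & s_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ s_{p1} & s_{p2} & \cdots & s_p^2 \end{pmatrix}.$$

For example, $s_2^2$ and $s_{12}$ are given by

$$s_2^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_{i2} - \overline{x}_2)^2 \quad \text{and} \quad s_{12} = \frac{1}{n-1} \sum_{i=1}^{n} (x_{i1} - \overline{x}_1)(x_{i2} - \overline{x}_2),$$

with the arithmetic mean

$$\overline{x}_j = \frac{1}{n} \sum_{i=1}^{n} x_{ij}.$$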
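The sample covariance matrix with the factor $1/(n-1)$ can be computed directly from a data matrix whose rows are observations and whose columns are variables. The sketch below uses a small made-up data set and compares the result with NumPy's `np.cov`, which uses the same normalization by default.

```python
import numpy as np


def sample_covariance(x):
    """Sample covariance matrix S of data x (rows = observations, columns = variables)."""
    x = np.asarray(x, dtype=float)
    n = x.shape[0]
    centered = x - x.mean(axis=0)        # subtract the arithmetic mean of each column
    return centered.T @ centered / (n - 1)


# Made-up data: 3 observations of 2 variables.
x = np.array([[1.0, 2.0],
              [3.0, 6.0],
              [5.0, 7.0]])

s = sample_covariance(x)
# s is [[4., 5.],
#       [5., 7.]]

s_numpy = np.cov(x, rowvar=False)        # same estimator (ddof=1 by default)
```

The column means are 3 and 5, so for instance $s_{12} = ((-2)(-3) + 0 \cdot 1 + 2 \cdot 2)/2 = 5$.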

Special covariance matrices

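Covariance matrix of the ordinary least squares estimator

For the covariance matrix of the ordinary least squares estimator

$$\mathbf{b} = (\mathbf{X}^\top \mathbf{X})^{-1} \mathbf{X}^\top \mathbf{y},$$

where $\mathbf{X}$ here denotes the design matrix and $\operatorname{Cov}(\mathbf{y}) = \sigma^2 \mathbf{I}$, the calculation rules above give

$$\operatorname{Cov}(\mathbf{b}) = (\mathbf{X}^\top \mathbf{X})^{-1} \mathbf{X}^\top \operatorname{Cov}(\mathbf{y})\, \mathbf{X} (\mathbf{X}^\top \mathbf{X})^{-1} = \sigma^2 (\mathbf{X}^\top \mathbf{X})^{-1} = \boldsymbol{\Sigma}_{\mathbf{b}}.$$

This covariance matrix is unknown because the variance $\sigma^2$ of the disturbances is unknown. An estimator $\hat{\boldsymbol{\Sigma}}_{\mathbf{b}}$ of the covariance matrix is obtained by replacing the unknown disturbance variance $\sigma^2$ with its unbiased estimator $\hat{\sigma}^2$ (see: unbiased estimation of the unknown variance parameter).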
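A minimal sketch of the estimated covariance matrix $\hat{\sigma}^2 (\mathbf{X}^\top \mathbf{X})^{-1}$ follows, assuming homoskedastic, uncorrelated disturbances and the usual unbiased variance estimator $\hat{\sigma}^2 = \hat{\boldsymbol{\varepsilon}}^\top \hat{\boldsymbol{\varepsilon}} / (n - k)$ with $k$ regressors. The function name `ols_covariance` and the simulated data are assumptions of this example.

```python
import numpy as np


def ols_covariance(design, y):
    """Return OLS estimate b, variance estimate sigma2_hat and estimated Cov(b)."""
    n, k = design.shape
    xtx_inv = np.linalg.inv(design.T @ design)
    b = xtx_inv @ design.T @ y
    residuals = y - design @ b
    sigma2_hat = residuals @ residuals / (n - k)   # unbiased under the assumptions above
    return b, sigma2_hat, sigma2_hat * xtx_inv


# Simulated data: intercept plus one regressor, true disturbance variance 0.25.
rng = np.random.default_rng(1)
n = 500
design = np.column_stack([np.ones(n), rng.uniform(0.0, 10.0, n)])
beta_true = np.array([1.0, 2.0])
y = design @ beta_true + rng.normal(0.0, 0.5, n)

b, sigma2_hat, cov_b = ols_covariance(design, y)
std_errors = np.sqrt(np.diag(cov_b))     # standard errors of the coefficients
```

The explicit inverse keeps the sketch close to the formula. For ill-conditioned design matrices a solver such as `np.linalg.lstsq` is usually the more robust way to obtain `b`.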

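Covariance matrix for seemingly unrelated regression equations

In the seemingly unrelated regression equations (SURE) model

$$y_{it} = \mathbf{x}_{it}^\top \boldsymbol{\beta}_i + e_{it}, \quad i = 1, \dots, N, \quad t = 1, \dots, T,$$

where the error term $e_{it}$ is idiosyncratic, the covariance matrix results as

$$\operatorname{Cov}(\mathbf{e}) = \operatorname{E}(\mathbf{e}\mathbf{e}^\top) = \begin{pmatrix} \sigma_{11} \mathbf{I}_T & \cdots & \sigma_{1N} \mathbf{I}_T \\ \vdots & \ddots & \vdots \\ \sigma_{N1} \mathbf{I}_T & \cdots & \sigma_{NN} \mathbf{I}_T \end{pmatrix} = \boldsymbol{\Sigma} \otimes \mathbf{I}_T.$$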
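A common way to write the error covariance matrix of this model is the Kronecker product $\boldsymbol{\Sigma} \otimes \mathbf{I}_T$. This assumes that errors are correlated only across equations within the same period $t$ and that the errors are stacked equation by equation. The sketch below builds this block structure with `np.kron` for made-up values of $\boldsymbol{\Sigma}$ and $T$.

```python
import numpy as np

# Made-up contemporaneous covariance matrix of the errors of N = 2 equations.
sigma = np.array([[1.0, 0.3],
                  [0.3, 2.0]])
T = 3                                     # number of periods per equation

# Stacked error covariance: block (i, j) equals sigma[i, j] * I_T.
cov_e = np.kron(sigma, np.eye(T))

# Off-diagonal block linking equation 1 and equation 2.
block_12 = cov_e[:T, T:]
```

Each $T \times T$ block is a multiple of the identity matrix, so errors of different periods are uncorrelated, while errors of the same period are linked across equations through `sigma`.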


