Assessment of a binary classifier

In classification, a classifier assigns objects to different classes on the basis of certain characteristics. The classifier generally makes mistakes, so in some cases it assigns an object to the wrong class. Quantitative measures for assessing a classifier can be derived from the relative frequency of these errors.

Often the classification is binary, i.e. there are only two possible classes. The quality measures discussed here relate exclusively to this case. Such binary classifications are often formulated as a yes/no question: does a patient suffer from a particular disease or not? Did a fire break out or not? Is an enemy plane approaching or not? Two types of error are possible with classifications of this kind: an object is assigned to the first class although it belongs to the second, or vice versa. The key figures described here make it possible to assess the reliability of the associated classifier (diagnostic method, fire alarm, aviation radar).

Yes/no classifications are similar to statistical tests that decide between a null hypothesis and an alternative hypothesis.

Truth Matrix: Right and Wrong Classifications

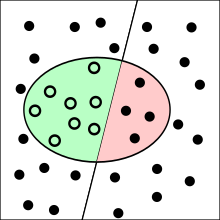

The dots in the oval are the people classified as sick by the test. Correctly evaluated cases are highlighted in green or yellow, incorrectly evaluated cases in red or gray.

In order to evaluate a classifier, one has to apply it to a number of cases for which the "true" class of the respective objects is known, at least in retrospect. An example of such a case is a medical laboratory test that is used to determine whether a person has a particular disease. Later, more elaborate examinations determine whether the person actually has this disease. The test is a classifier that sorts people into the categories "sick" and "healthy". Since it is a yes/no question, the test is also said to be positive (classification "sick") or negative (classification "healthy"). In order to assess how suitable the laboratory test is for diagnosing the disease, the actual state of health of each patient is compared with the result of the test. There are four possible cases:

- True positive: the patient is sick and the test indicated this correctly.

- False negative: the patient is sick, but the test incorrectly classified them as healthy.

- False positive: the patient is healthy, but the test incorrectly classified them as sick.

- True negative: the patient is healthy and the test indicated this correctly.

In the first and last cases the diagnosis was correct; in the other two there was an error. The four cases are named differently in different contexts. In signal detection theory, true positives are also referred to as hits, false negatives as misses, false positives as false alarms and true negatives as correct rejections.

One now counts how often each of the four possible combinations of test result (determined class) and state of health (actual class) occurred. These frequencies are entered in a so-called truth matrix (also called confusion matrix):

| | Person is sick ($r_\text{p} + f_\text{n}$) | Person is healthy ($f_\text{p} + r_\text{n}$) |
|---|---|---|
| Test positive ($r_\text{p} + f_\text{p}$) | true positive ($r_\text{p}$) | false positive ($f_\text{p}$) |
| Test negative ($f_\text{n} + r_\text{n}$) | false negative ($f_\text{n}$) | true negative ($r_\text{n}$) |

Notes: $f$ stands for "wrong" (more precisely, for the number of wrong classifications); $r$ stands for "correct" (more precisely, for the number of correct classifications); the index $\text{p}$ stands for "positive"; the index $\text{n}$ stands for "negative". So $r_\text{p}$ stands for "true positive" (more precisely, for the number of people correctly classified as positive), and so on.

This matrix is a simple special case of a contingency table with two binary nominal variables: the judgment of the classifier and the actual class. It can also be used for classifications with more than two classes, in which case the 2 × 2 matrix becomes a $k \times k$ matrix for $k$ classes.
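The counting step can be sketched in a few lines of Python. This is only an illustration: the function name `confusion_counts` and the toy data are assumptions, and the return order (true positive, false positive, false negative, true negative) is chosen to mirror the rows of the truth matrix.

```python
from collections import Counter

def confusion_counts(actual, predicted):
    """Return (r_p, f_p, f_n, r_n) for two equal-length sequences of booleans.

    actual[i] is True if object i really is positive (sick);
    predicted[i] is True if the classifier (test) called it positive.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    pairs = Counter(zip(actual, predicted))
    r_p = pairs[(True, True)]    # sick, test positive
    f_n = pairs[(True, False)]   # sick, test negative
    f_p = pairs[(False, True)]   # healthy, test positive
    r_n = pairs[(False, False)]  # healthy, test negative
    return r_p, f_p, f_n, r_n

# Hypothetical toy data: eight patients, four of them actually sick.
actual = [True, True, True, False, False, False, False, True]
predicted = [True, False, True, False, True, False, False, True]
print(confusion_counts(actual, predicted))
```

For the toy data, three sick patients are detected, one is missed, one healthy patient is flagged and three are correctly cleared.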

Statistical quality criteria of the classification

By forming different relative frequencies, parameters for assessing the classifier can be calculated from the values of the truth matrix. These can also be interpreted as estimates of the conditional probability of the corresponding event. The measures differ in the population to which the relative frequencies relate: one can, for example, consider only the cases in which the positive or negative category is actually present (sum of the entries in one column of the truth matrix), or one can consider the set of all objects that were classified as positive or as negative (sum of the entries in one row of the truth matrix). This choice has serious effects on the calculated values, especially if one of the two classes occurs much more frequently than the other.

Sensitivity and false-negative rate

Sensitivity

The sensitivity (also true positive rate, recall or hit rate) indicates the proportion of objects correctly classified as positive in relation to the totality of actually positive objects. For example, the sensitivity of a medical diagnosis corresponds to the proportion of those who are actually sick and who have also been diagnosed with the disease.

The sensitivity corresponds to the estimated conditional probability

- $P(\text{classified positive} \mid \text{actually positive}) = \frac{r_\text{p}}{r_\text{p} + f_\text{n}}$.

In statistical hypothesis testing, the sensitivity of a test is referred to as its power, although the term power has a more general usage there that does not apply in the present context.

False negative rate

Accordingly, the false negative rate (also miss rate) indicates the proportion of objects wrongly classified as negative in relation to the totality of actually positive objects. In the example, these are the people who are actually sick but are diagnosed as healthy.

The false negative rate corresponds to the estimated conditional probability

- $P(\text{classified negative} \mid \text{actually positive}) = \frac{f_\text{n}}{r_\text{p} + f_\text{n}}$.

Relationship

Since both measures relate to the case that the positive category is actually present (first column of the truth matrix), the sensitivity and the false negative rate add up to 1, or 100%.

Specificity and false positive rate

Specificity

The specificity (also true negative rate or correct rejection rate) indicates the proportion of objects correctly classified as negative in relation to the totality of actually negative objects. For example, in a medical diagnosis the specificity indicates the proportion of healthy people who have also been found to have no disease.

The specificity corresponds to the estimated conditional probability

- $P(\text{classified negative} \mid \text{actually negative}) = \frac{r_\text{n}}{r_\text{n} + f_\text{p}}$.

False positive rate

Accordingly, the false positive rate (also fallout) indicates the proportion of objects wrongly classified as positive in relation to the totality of actually negative objects. In the example, an actually healthy person would be wrongly diagnosed as sick. The rate thus gives the probability of a false alarm.

The false positive rate corresponds to the estimated conditional probability

- $P(\text{classified positive} \mid \text{actually negative}) = \frac{f_\text{p}}{r_\text{n} + f_\text{p}}$.

Relationship

Since both measures refer to the case that the negative category is actually present (second column of the truth matrix), the specificity and the false positive rate add up to 1, or 100%.
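The four column-based rates can be computed directly from the cell counts. The following is a minimal sketch; the function name `column_rates` and the counts are made up for illustration.

```python
def column_rates(r_p, f_p, f_n, r_n):
    """Rates conditioned on the actual class (the columns of the truth matrix)."""
    actually_positive = r_p + f_n
    actually_negative = r_n + f_p
    return {
        "sensitivity": r_p / actually_positive,
        "false_negative_rate": f_n / actually_positive,
        "specificity": r_n / actually_negative,
        "false_positive_rate": f_p / actually_negative,
    }

# Hypothetical counts: 100 sick people (90 detected), 200 healthy people (170 cleared).
rates = column_rates(r_p=90, f_p=30, f_n=10, r_n=170)
for name, value in rates.items():
    print(f"{name}: {value:.3f}")
```

Each pair of rates shares a denominator, which is why sensitivity and false negative rate, and likewise specificity and false positive rate, sum to 1.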

Positive and negative predictive value

While the sensitivity and specificity of a medical test are parameters relevant to epidemiology and health policy (for example, for the question of whether it makes sense to use the test in screening for the early detection of diseases), the predictive value is decisive for the patient and doctor in a specific case. Only the predictive value tells a person who tested positive or negative how probable it is that they are really sick or healthy.

Positive predictive value

The positive predictive value (also precision, relevance, effectiveness or, less precisely, accuracy; abbreviation: PPV) indicates the proportion of results correctly classified as positive in relation to the totality of results classified as positive (first row of the truth matrix). For example, the positive predictive value of a medical test indicates what percentage of people with a positive test result are actually ill.

The positive predictive value corresponds to the estimated conditional probability

- $P(\text{actually positive} \mid \text{classified positive}) = \frac{r_\text{p}}{r_\text{p} + f_\text{p}}$.

Negative predictive value

Accordingly, the negative predictive value (also known as segregance or separability; abbreviation: NPV) indicates the proportion of results correctly classified as negative in relation to the totality of results classified as negative (second row of the truth matrix). In the example, this corresponds to the proportion of people with a negative test result who are actually healthy.

The negative predictive value corresponds to the estimated conditional probability

- $P(\text{actually negative} \mid \text{classified negative}) = \frac{r_\text{n}}{r_\text{n} + f_\text{n}}$.

Connections

Unlike the other pairs of quality measures, the negative and positive predictive values do not add up to 1 or 100%, since each is conditioned on a different case (classified positive or classified negative, i.e. different rows of the truth matrix). The predictive values can be calculated from the sensitivity and specificity, but the pre-test probability (for diseases, this corresponds to the prevalence in the examined population) must be known or estimated. The positive predictive value benefits from a high pre-test probability, the negative predictive value from a low pre-test probability. A positive medical test result is therefore much more meaningful if the test was carried out on suspicion than if it was used for screening alone.

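| | ill | healthy | total | Predictive value |
|---|---|---|---|---|
| positive | $r_\text{p}$ | $f_\text{p}$ | $r_\text{p} + f_\text{p}$ | PPV $= \frac{r_\text{p}}{r_\text{p} + f_\text{p}}$ |
| negative | $f_\text{n}$ | $r_\text{n}$ | $f_\text{n} + r_\text{n}$ | NPV $= \frac{r_\text{n}}{r_\text{n} + f_\text{n}}$ |
| total | $r_\text{p} + f_\text{n}$ | $f_\text{p} + r_\text{n}$ | $r_\text{p} + f_\text{p} + f_\text{n} + r_\text{n}$ | |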
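How the predictive values follow from sensitivity, specificity and pre-test probability can be sketched with Bayes' theorem. The function name `predictive_values` and the numbers below are illustrative assumptions, not values from a real test.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a given pre-test probability (prevalence).

    The four products are the expected shares of the population that fall
    into each cell of the truth matrix.
    """
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv

# Same hypothetical test (90% sensitivity and specificity), two populations.
for prevalence in (0.5, 0.01):
    ppv, npv = predictive_values(0.9, 0.9, prevalence)
    print(f"prevalence {prevalence:.2f}: PPV {ppv:.3f}, NPV {npv:.3f}")
```

With these assumed numbers the positive predictive value drops from 0.9 at a prevalence of 50% to roughly 0.08 at a prevalence of 1%, while the negative predictive value rises.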

Positive and negative predictive values determined for one collective are only transferable to other collectives if the relative frequency of positive cases is the same there. Example: if 100 HIV patients and 100 healthy control patients were examined to determine the positive predictive value, the proportion of HIV patients in this group (50%) is far from the HIV prevalence in Germany (0.08%) (see also the numerical example given below). The predictive values would be completely different if the same test were carried out on a randomly selected person. One measure that does not require the pre-test probability is the likelihood quotient (LQ, also called likelihood ratio). It is a prevalence-independent measure that takes into account the sensitivity and specificity of a diagnostic test. It describes how the test result affects the chance that the disease is actually present ($\mathrm{LQ}^+$) or not present ($\mathrm{LQ}^-$):

- $\mathrm{LQ}^+ = \frac{\text{sensitivity}}{1 - \text{specificity}}, \qquad \mathrm{LQ}^- = \frac{1 - \text{sensitivity}}{\text{specificity}}$.
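As a rough sketch, the likelihood quotients can be computed from sensitivity and specificity using the usual definitions (positive quotient: sensitivity divided by one minus specificity; negative quotient: one minus sensitivity divided by specificity). A quotient then converts pre-test odds into post-test odds. The function names and numbers are assumptions made for illustration.

```python
def likelihood_quotients(sensitivity, specificity):
    """Return (LQ+, LQ-); requires 0 < specificity < 1."""
    lq_pos = sensitivity / (1 - specificity)
    lq_neg = (1 - sensitivity) / specificity
    return lq_pos, lq_neg

def post_test_probability(pre_test_probability, likelihood_quotient):
    """Multiply the pre-test odds by the quotient and convert back to a probability."""
    pre_odds = pre_test_probability / (1 - pre_test_probability)
    post_odds = pre_odds * likelihood_quotient
    return post_odds / (1 + post_odds)

# Hypothetical test with 90% sensitivity and 80% specificity.
lq_pos, lq_neg = likelihood_quotients(0.9, 0.8)
print(f"LQ+ = {lq_pos:.2f}, LQ- = {lq_neg:.3f}")
print(f"post-test probability after a positive result at 10% pre-test: "
      f"{post_test_probability(0.10, lq_pos):.3f}")
```

The quotients themselves do not change with prevalence; only the pre-test probability fed into the second function does.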

Correct and incorrect classification rate

The correct classification rate (also accuracy, confidence probability or hit accuracy) indicates the proportion of all objects that are correctly classified. The remaining part corresponds to the incorrect classification rate (also the size of the classification error). In the diagnosis example, the correct classification rate would be the proportion of true positive and true negative diagnoses in the total number of diagnoses, while the incorrect classification rate would be the proportion of false positive and false negative diagnoses.

Correct classification rate

The correct classification rate corresponds to the estimated probability

- $P(\text{correctly classified}) = \frac{r_\text{p} + r_\text{n}}{r_\text{p} + f_\text{p} + r_\text{n} + f_\text{n}}$.

Misclassification rate

The misclassification rate corresponds to the estimated probability

- $P(\text{incorrectly classified}) = \frac{f_\text{p} + f_\text{n}}{r_\text{p} + f_\text{p} + r_\text{n} + f_\text{n}}$.

Relationship

The correct and incorrect classification rates add up to 1, or 100%.
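A short sketch shows both rates and why they should be read with the class balance in mind. The function name `classification_rates` and the extreme scenario (a test that calls everyone healthy) are assumptions chosen for illustration.

```python
def classification_rates(r_p, f_p, f_n, r_n):
    """Return (correct classification rate, misclassification rate)."""
    total = r_p + f_p + f_n + r_n
    accuracy = (r_p + r_n) / total
    error_rate = (f_p + f_n) / total
    return accuracy, error_rate

# Hypothetical screening of 1000 people, 10 of them sick;
# the "test" simply declares everyone healthy.
accuracy, error_rate = classification_rates(r_p=0, f_p=0, f_n=10, r_n=990)
print(f"correct: {accuracy:.2%}, incorrect: {error_rate:.2%}")
```

The correct classification rate is 99% here although not a single sick person is found, which is the effect of unequal class frequencies noted at the start of this section.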

Combined measures

Since the various quality measures influence each other (see section Problems), various combined measures have been proposed that allow the quality to be assessed with a single key figure. The measures presented below were developed in the context of information retrieval (see Application in information retrieval).

F-measure

The F-measure combines precision ($P$) and recall (hit rate, $R$) using the weighted harmonic mean:

- $F = 2 \cdot \frac{P \cdot R}{P + R}$

In addition to this measure, also referred to as the $F_1$ measure, in which precision and recall are weighted equally, there are other weightings. The general case is the $F_\alpha$ measure (for positive values of $\alpha$):

- $F_\alpha = (1 + \alpha^2) \cdot \frac{P \cdot R}{\alpha^2 \cdot P + R}$

For example, $F_2$ weights recall four times as high as precision, and $F_{0.5}$ weights precision four times as high as recall.
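A minimal sketch of the weighted F-measure follows. The weighting parameter is called `alpha` here, and the formula used is the common weighted harmonic mean $(1 + \alpha^2) P R / (\alpha^2 P + R)$; the sample values for precision and recall are taken from the document search example later in this article.

```python
def f_measure(precision, recall, alpha=1.0):
    """Weighted harmonic mean of precision and recall.

    alpha > 1 favours recall, alpha < 1 favours precision, alpha = 1 is F1.
    """
    if precision == 0 and recall == 0:
        return 0.0  # convention chosen here to avoid division by zero
    a2 = alpha * alpha
    return (1 + a2) * precision * recall / (a2 * precision + recall)

precision, recall = 8 / 12, 8 / 20
for alpha in (0.5, 1.0, 2.0):
    print(f"alpha = {alpha}: F = {f_measure(precision, recall, alpha):.3f}")
```

Because recall is lower than precision in this example, the recall-heavy weighting gives the lowest score and the precision-heavy weighting the highest.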

Effectiveness measure

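The effectiveness measure $E$ also corresponds to the weighted harmonic mean. It was introduced in 1979 by Cornelis Joost van Rijsbergen as $E = 1 - \frac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}}$, with precision $P$ and recall (hit rate) $R$. The effectiveness lies between 0 (best effectiveness) and 1 (poor effectiveness). For a parameter value of $\alpha = 0$, $E$ depends only on the recall ($E = 1 - R$); for a parameter value of $\alpha = 1$, it depends only on the precision ($E = 1 - P$).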
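The behaviour at the two extreme parameter values can be checked numerically. The sketch below assumes van Rijsbergen's usual form of the measure, $E = 1 - 1 / (\alpha / P + (1 - \alpha) / R)$ with precision $P$ and recall $R$; the function name `effectiveness` is illustrative.

```python
def effectiveness(precision, recall, alpha=0.5):
    """Effectiveness measure E in [0, 1]; 0 is best, 1 is worst.

    Assumes 0 <= alpha <= 1 and strictly positive precision and recall.
    """
    if precision <= 0 or recall <= 0:
        raise ValueError("precision and recall must be positive")
    return 1 - 1 / (alpha / precision + (1 - alpha) / recall)

precision, recall = 8 / 12, 8 / 20
print(f"alpha = 0.0: E = {effectiveness(precision, recall, 0.0):.3f}")  # 1 - recall
print(f"alpha = 0.5: E = {effectiveness(precision, recall, 0.5):.3f}")  # 1 - F1
print(f"alpha = 1.0: E = {effectiveness(precision, recall, 1.0):.3f}")  # 1 - precision
```

Under this form, an equal weighting of 0.5 gives exactly one minus the balanced F-measure.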

Function graph

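Standardized, two-dimensional function graphs can be displayed for the six key figures sensitivity, false negative rate, specificity, false positive rate, positive predictive value and negative predictive value.

For example, if one considers the ratio of $r_\text{p}$ to $f_\text{n}$ and sets

- $x_\text{pn} = \frac{r_\text{p}}{f_\text{n}}$,

one obtains for the sensitivity

- $\text{sensitivity} = \frac{r_\text{p}}{r_\text{p} + f_\text{n}} = \frac{x_\text{pn} \cdot f_\text{n}}{x_\text{pn} \cdot f_\text{n} + f_\text{n}} = \frac{x_\text{pn}}{x_\text{pn} + 1} = f_1(x_\text{pn}) = f_2\left(\frac{1}{x_\text{pn}}\right)$

and for the false negative rate

- $\text{false negative rate} = \frac{f_\text{n}}{r_\text{p} + f_\text{n}} = \frac{f_\text{n}}{x_\text{pn} \cdot f_\text{n} + f_\text{n}} = \frac{1}{x_\text{pn} + 1} = f_2(x_\text{pn}) = f_1\left(\frac{1}{x_\text{pn}}\right)$,

where the two functions $f_1$ and $f_2$ are defined as:

- $f_1(x) := \frac{x}{x + 1}$

- $f_2(x) := \frac{1}{x + 1}$

This procedure is only possible because the sensitivity does not depend on the two specific individual values $r_\text{p}$ and $f_\text{n}$, but solely on their ratio $x_\text{pn} = \frac{r_\text{p}}{f_\text{n}}$ (or its reciprocal $\frac{1}{x_\text{pn}} = \frac{f_\text{n}}{r_\text{p}}$). Therefore the sensitivity, which as a function of two variables depends on $r_\text{p}$ and $f_\text{n}$, can also be represented as a function of the single variable $x_\text{pn}$ (or $\frac{1}{x_\text{pn}}$), so that two-dimensional function graphs can be drawn. The same applies to the false negative rate.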

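The same procedure can be used for the other four parameters (note that each uses a different ratio), as the following summary table shows:

| Measure | Ratio | Formula |
|---|---|---|
| Sensitivity | $x_\text{pn} = \frac{r_\text{p}}{f_\text{n}}$ | $\frac{r_\text{p}}{r_\text{p} + f_\text{n}} = \frac{x_\text{pn}}{x_\text{pn} + 1} = f_1(x_\text{pn}) = f_2\left(\frac{1}{x_\text{pn}}\right)$ |
| False negative rate | $x_\text{pn} = \frac{r_\text{p}}{f_\text{n}}$ | $\frac{f_\text{n}}{r_\text{p} + f_\text{n}} = \frac{1}{x_\text{pn} + 1} = f_2(x_\text{pn}) = f_1\left(\frac{1}{x_\text{pn}}\right)$ |
| Specificity | $x_\text{np} = \frac{r_\text{n}}{f_\text{p}}$ | $\frac{r_\text{n}}{r_\text{n} + f_\text{p}} = \frac{x_\text{np}}{x_\text{np} + 1} = f_1(x_\text{np}) = f_2\left(\frac{1}{x_\text{np}}\right)$ |
| False positive rate | $x_\text{np} = \frac{r_\text{n}}{f_\text{p}}$ | $\frac{f_\text{p}}{r_\text{n} + f_\text{p}} = \frac{1}{x_\text{np} + 1} = f_2(x_\text{np}) = f_1\left(\frac{1}{x_\text{np}}\right)$ |
| Positive predictive value | $x_\text{pp} = \frac{r_\text{p}}{f_\text{p}}$ | $\frac{r_\text{p}}{r_\text{p} + f_\text{p}} = \frac{x_\text{pp}}{x_\text{pp} + 1} = f_1(x_\text{pp}) = f_2\left(\frac{1}{x_\text{pp}}\right)$ |
| Negative predictive value | $x_\text{nn} = \frac{r_\text{n}}{f_\text{n}}$ | $\frac{r_\text{n}}{r_\text{n} + f_\text{n}} = \frac{x_\text{nn}}{x_\text{nn} + 1} = f_1(x_\text{nn}) = f_2\left(\frac{1}{x_\text{nn}}\right)$ |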
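That these key figures depend only on a ratio of two cell counts can be checked numerically. The sketch below uses the two functions $x / (x + 1)$ and $1 / (x + 1)$, named `f1` and `f2` here, and made-up counts; it shows the sensitivity case, and the other five measures work the same way with their own ratios.

```python
import math

def f1(x):
    """x / (x + 1): maps a ratio 'correct : wrong' to a share of correct cases."""
    return x / (x + 1)

def f2(x):
    """1 / (x + 1): the complementary share."""
    return 1 / (x + 1)

def sensitivity(r_p, f_n):
    return r_p / (r_p + f_n)

# Two hypothetical samples with the same ratio r_p : f_n = 9 : 1.
for r_p, f_n in ((9, 1), (900, 100)):
    x_pn = r_p / f_n
    print(r_p, f_n, sensitivity(r_p, f_n), f1(x_pn), f2(1 / x_pn))
```

Both samples give the same sensitivity, and `f1(x) + f2(x)` equals 1 for every positive `x`, matching the fact that sensitivity and false negative rate are complementary.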

Problems

Mutual influences

It is not possible to optimize all quality criteria independently of one another. In particular, sensitivity and specificity are negatively correlated with one another. To illustrate these relationships, it is helpful to consider the extreme cases:

- If a diagnosis classifies almost all patients as sick (liberal diagnosis), the sensitivity is at its maximum, because most sick people are recognized as such. At the same time, however, the false positive rate is also at its maximum, since almost all healthy people are classified as sick. The diagnosis therefore has a very low specificity.

- Conversely, if almost no one is classified as sick (conservative diagnosis), the specificity is at its maximum, but at the expense of a low sensitivity.

How conservative or liberal a classifier should ideally be depends on the specific application, which determines, for example, which of the misclassifications has the more serious consequences. When diagnosing a serious illness or in safety-related applications such as a fire alarm, it is important that no case goes undetected. When doing research with a search engine, however, it can be more important to get as few results as possible that are irrelevant to the search, i.e. that represent false positive results. For the evaluation of a classifier, the risks of the various misclassifications can be specified in a cost matrix with which the truth matrix is weighted. Another possibility is to use combined measures for which a corresponding weighting can be set.

In order to show the effects of tests of differing conservativeness for a specific application, ROC curves can be created in which the sensitivity of the different tests is plotted against the false positive rate. In signal detection theory, one speaks of a criterion that is set more or less conservatively.
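The trade-off can be made concrete for a classifier that outputs a score and calls an object positive when the score reaches a threshold. The sketch below sweeps the threshold from conservative to liberal and records one ROC point per setting; the function name `roc_points`, the scores and the labels are invented for illustration, and both classes are assumed to be present.

```python
def roc_points(scores, labels):
    """Return (false positive rate, sensitivity) pairs, conservative to liberal.

    An object is classified positive if its score is >= the threshold.
    Assumes at least one positive and one negative label.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nobody is called positive
    for threshold in sorted(set(scores), reverse=True):
        r_p = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
        f_p = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
        points.append((f_p / negatives, r_p / positives))
    return points

# Hypothetical scores for six patients; True marks the patients who are actually sick.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [True, True, False, True, False, False]
for fpr, tpr in roc_points(scores, labels):
    print(f"false positive rate {fpr:.2f}, sensitivity {tpr:.2f}")
```

Lowering the threshold never decreases either coordinate, so the most liberal setting ends at the point (1, 1) where everyone is classified as sick.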

Rare positive cases

In addition, an extreme imbalance between actually positive and actually negative cases distorts the parameters, as is the case with rare diseases. For example, if the number of sick people taking part in a test is considerably lower than the number of healthy people, this generally leads to a low positive predictive value (see the numerical example below). In this case the likelihood quotient should therefore be specified as an alternative to the predictive values.

This relationship must be taken into account in various laboratory tests: inexpensive screening tests are adjusted so that the number of false negative results is as small as possible. The false positive results this produces are then identified by a (more expensive) confirmatory test. A confirmatory test should always be performed for serious medical conditions. For the diagnosis of HIV this procedure is even mandatory.

Incomplete truth matrix

Another problem when assessing a classifier is that it is often not possible to fill in the entire truth matrix. In particular, the false negative rate is often not known, for example if no further tests are carried out on patients who have received a negative diagnosis and an illness remains undetected, or if a relevant document cannot be found in a search because it has not been classified as relevant. In this case only the results classified as positive can be evaluated; in other words, only the positive predictive value can be calculated (see also the numerical example below). Possible solutions to this problem are discussed in the section Application in information retrieval.

Classification evaluation and statistical test theory

| | Binary classification | Statistical test |
|---|---|---|
| Goal | On the basis of a sample, observations (objects) are assigned to one of the two classes. | Using a random sample, two mutually exclusive hypotheses (null and alternative hypothesis) are tested for the population. |
| Procedure | The classifier is a regression function estimated from the sample with two possible result values. | The test value is calculated from the random sample by means of a test statistic and compared with critical values calculated from the distribution of the test statistic. |
| Result | A class membership is predicted for an observation. | Based on the comparison of the test value with the critical values, the alternative hypothesis is accepted or rejected. |
| Error | The quality of a classifier is judged retrospectively using the misclassification rate (false positive and false negative). | Before the test is carried out, the size of the type 1 error (incorrect acceptance of the alternative hypothesis) is fixed. The critical values are calculated from it. The type 2 error (incorrect rejection of the alternative hypothesis) is always unknown when the test is carried out. |

Classification assessment to assess the quality of statistical tests

The quality of a statistical test can be assessed with the help of the classification evaluation:

- If many samples are generated under the null hypothesis, the acceptance rate of the alternative hypothesis should correspond to the type 1 error. For complicated tests, however, often only an upper limit for the type 1 error can be specified, so that the "true" type 1 error can only be estimated with such a simulation.

- If many samples are generated under the alternative hypothesis, the rejection rate of the alternative hypothesis is an estimate of the type 2 error. This is of interest, for example, if two tests are available for the same question. If the alternative hypothesis is true, the test with the smaller type 2 error is preferred.

Statistical tests to assess a classification

Statistical tests can be used to check whether a classification is statistically significant, in other words whether, with regard to the population, the assessment of the classifier is independent of the actual classes (null hypothesis) or correlates significantly with them (alternative hypothesis).

In the case of multiple classes, the chi-square test of independence can be used. It checks whether the assessment of the classifier is independent of the actual classes or correlates significantly with them. The strength of the correlation is estimated using contingency coefficients.

In the case of a binary classification, the four-field test is used, a special case of the chi-square test of independence. If only a few observations are available, Fisher's exact test should be used. The strength of the correlation can be estimated using the phi coefficient.

However, if the test rejects the null hypothesis, this does not mean that the classifier is good. It only means that it is better than (random) guessing. A good classifier should also have as high a correlation as possible.
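For the binary case, the phi coefficient and the chi-square statistic of the four-field test can be computed by hand. The sketch below uses the uncorrected Pearson statistic (no continuity correction), which for a 2 × 2 table equals the number of observations times the squared phi coefficient; the function names are illustrative and the counts are those of the document search example later in this article.

```python
import math

def phi_coefficient(r_p, f_p, f_n, r_n):
    """Phi coefficient of a 2 x 2 truth matrix (all four margins must be non-zero)."""
    numerator = r_p * r_n - f_p * f_n
    denominator = math.sqrt(
        (r_p + f_p) * (f_n + r_n) * (r_p + f_n) * (f_p + r_n)
    )
    return numerator / denominator

def chi_square_2x2(r_p, f_p, f_n, r_n):
    """Pearson chi-square statistic without continuity correction."""
    n = r_p + f_p + f_n + r_n
    return n * phi_coefficient(r_p, f_p, f_n, r_n) ** 2

phi = phi_coefficient(8, 4, 12, 12)
chi2 = chi_square_2x2(8, 4, 12, 12)
CRITICAL_5_PERCENT_1_DF = 3.841  # chi-square quantile for 1 degree of freedom
print(f"phi = {phi:.3f}, chi-square = {chi2:.3f}, "
      f"significant at 5%: {chi2 > CRITICAL_5_PERCENT_1_DF}")
```

With only 36 documents the statistic stays well below the critical value, so for such a small sample Fisher's exact test would be the more appropriate choice anyway.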

Dietterich (1998) examines five tests for the direct comparison of the misclassification rates of two different classifiers:

- a simple two-sample t-test for independent samples,

- a two-sample t-test for related samples,

- a two-sample t-test for related samples with 10-fold cross-validation,

- the McNemar test and

- a two-sample t-test for related samples with five replications of 2-fold cross-validation and modified variance calculation (5x2cv).

The investigation of the power and type 1 error of the five tests shows that the 5x2cv test behaves best but is very computationally intensive. The McNemar test is slightly worse than the 5x2cv test but significantly less computationally intensive.
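The McNemar test only needs the two counts of test objects on which exactly one of the classifiers errs, which is why it is cheap to compute. The following sketch uses the common continuity-corrected statistic, compared against the chi-square distribution with one degree of freedom; the function name and the counts are made up for illustration.

```python
def mcnemar_statistic(only_a_wrong, only_b_wrong):
    """Continuity-corrected McNemar statistic for two classifiers A and B.

    only_a_wrong: objects misclassified by A but not by B
    only_b_wrong: objects misclassified by B but not by A
    Objects on which both agree (both right or both wrong) do not enter.
    """
    discordant = only_a_wrong + only_b_wrong
    if discordant == 0:
        return 0.0  # the classifiers never disagree about correctness
    return (abs(only_a_wrong - only_b_wrong) - 1) ** 2 / discordant

# Hypothetical comparison on one shared test set.
statistic = mcnemar_statistic(only_a_wrong=25, only_b_wrong=10)
CRITICAL_5_PERCENT_1_DF = 3.841  # chi-square quantile for 1 degree of freedom
print(f"statistic = {statistic:.2f}, "
      f"difference significant at 5%: {statistic > CRITICAL_5_PERCENT_1_DF}")
```

In this made-up case the statistic exceeds the critical value, so the null hypothesis that both classifiers have the same error rate would be rejected at the 5% level.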

Application in information retrieval

A special application of the measures described here is assessing the quality of the hit sets of a search in information retrieval. The question is whether a found document, for example in web mining by search engines, is relevant according to a defined criterion. In this context, the measures defined above are used under the names recall (hit rate), precision (also rendered as accuracy in this context) and fallout. Recall indicates the proportion of relevant documents found during a search and thus the completeness of a search result. Precision describes the exactness of a search result as the proportion of relevant documents in the result set. The (less common) fallout denotes the proportion of irrelevant documents found among all irrelevant documents; it thus indicates, in a negative way, how well irrelevant documents are avoided in the search result. Instead of as measures, recall, precision and fallout can also be interpreted as probabilities:

- Recall is the probability with which a relevant document will be found (sensitivity).

- Precision is the probability with which a found document is relevant (positive predictive value).

- Fallout is the probability that an irrelevant document will be found (false positive rate).

A good search should find all relevant documents (true positives) and not find the irrelevant documents (true negatives). As described above, however, the various measures are interdependent. In general, the higher the recall, the lower the precision (more irrelevant results). Conversely, the higher the precision (fewer irrelevant results), the lower the recall (more relevant documents that are not found). Depending on the application, the different measures are more or less relevant for the assessment. In a patent search, for example, it is important that no relevant patents remain undiscovered, so the negative predictive value should be as high as possible. For other searches it is more important that the hit list contains few irrelevant documents, i.e. the positive predictive value should be as high as possible.

The combined measures described above, such as the F-measure and the effectiveness, were also introduced in the context of information retrieval.

Precision-recall diagram

To assess a retrieval process, recall and precision are usually considered together. For this purpose, points for hit sets of different sizes between the two extremes are entered in the so-called precision-recall diagram (PR diagram), with precision on the $y$-axis and recall on the $x$-axis. This is especially easy with methods whose number of hits can be controlled by a parameter. The diagram serves a similar purpose to the ROC curve described above, which in this context is also referred to as the recall-fallout diagram.

The (highest) value in the diagram at which precision equals recall, i.e. the intersection of the precision-recall diagram with the identity function, is called the precision-recall breakeven point. Since the two values depend on each other, one is often reported with the other held fixed. However, interpolation between the points is not permitted; they are discrete points, and the spaces between them are not defined.
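For a ranked result list, the points of such a diagram can be obtained by cutting the list after 1, 2, 3, ... hits. The following sketch assumes a hypothetical ranking in which `True` marks a relevant document and in which all relevant documents appear somewhere in the list; the function name `precision_recall_points` is illustrative.

```python
def precision_recall_points(ranked_relevance, total_relevant):
    """Return one (recall, precision) pair per cutoff k = 1 .. len(ranking)."""
    points = []
    hits = 0
    for k, is_relevant in enumerate(ranked_relevance, start=1):
        hits += 1 if is_relevant else 0
        points.append((hits / total_relevant, hits / k))
    return points

# Hypothetical ranking of eight documents, four of which are relevant.
ranked = [True, True, False, True, False, False, True, False]
points = precision_recall_points(ranked, total_relevant=4)
for k, (recall, precision) in enumerate(points, start=1):
    print(f"top {k}: recall {recall:.2f}, precision {precision:.2f}")

# Breakeven: cutoffs at which precision equals recall.
breakeven = [p for r, p in points if r == p]
print("breakeven value(s):", breakeven)
```

Precision and recall share the numerator (the number of relevant hits), so they coincide exactly when the cutoff equals the number of relevant documents, here after four results.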

Example

In a database with 36 documents, 20 documents are relevant and 16 are not relevant to a search query. A search yields 12 documents, 8 of which are actually relevant.

| | Relevant | Not relevant | Total |
|---|---|---|---|
| Found | 8 | 4 | 12 |
| Not found | 12 | 12 | 24 |
| Total | 20 | 16 | 36 |

Recall, precision and fallout for this specific search result follow from the values of the truth matrix.

- Recall (hit rate): 8 ⁄ (8 + 12) = 8 ⁄ 20 = 2 ⁄ 5 = 0.4

- Precision: 8 ⁄ (8 + 4) = 8 ⁄ 12 = 2 ⁄ 3 ≈ 0.67

- Fallout: 4 ⁄ (4 + 12) = 4 ⁄ 16 = 1 ⁄ 4 = 0.25

Practice and problems

One problem with calculating recall is that it is seldom known how many relevant documents exist in total and have not been found (the problem of the incomplete truth matrix). For larger databases, where it is particularly difficult to calculate the absolute recall, the relative recall is used. The same search is carried out with several search engines, and the new relevant hits of each are added to the relevant documents not found. The capture-recapture method can be used to estimate how many relevant documents exist in total.

Another problem is that the relevance of a document must be known as a truth value (yes/no) in order to determine recall and precision. In practice, however, subjective relevance is often what matters. For hit sets arranged in a ranking, too, specifying recall and precision is often not sufficient, since what matters is not only whether a relevant document is found but also whether it is ranked sufficiently high compared to irrelevant documents. If the number of hits varies greatly, specifying average values for recall and precision can be misleading.

Further application examples

HIV in Germany

The aim of an HIV test should be to identify an infected person as reliably as possible. The consequences a false positive test can have, however, are shown by the example of a person who gets tested for HIV and then commits suicide because of a false positive result.

With an assumed accuracy of 99.9% for the non-combined HIV test for both positive and negative results (sensitivity and specificity = 0.999) and the prevalence of HIV in the German population as of 2009 (82,000,000 inhabitants, 67,000 of them HIV-positive), a general HIV test would be devastating: only 67 of the 67,000 actually infected people would wrongly go unrecognized, but around 82,000 people would be wrongly diagnosed as HIV-positive. Of 148,866 positive results, around 55% would be false positives, i.e. more than half of those who tested positive. Thus the probability that someone who tested positive with the ELISA test alone is actually HIV-positive is only 45% (positive predictive value). Despite the very low error rate of 0.1%, this value is so low because HIV occurs in only around 0.08% of German citizens.

| ELISA test | HIV positive | HIV negative | Total |
|---|---|---|---|
| HIV test positive | 66,933 | 81,933 | 148,866 |
| HIV test negative | 67 | 81,851,067 | 81,851,134 |
| Total | 67,000 | 81,933,000 | 82,000,000 |
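The figures in the table can be reproduced from the stated population size, number of infected people, sensitivity and specificity. The sketch below is only a recalculation of the example; the function name `screening_table` is illustrative.

```python
def screening_table(population, infected, sensitivity, specificity):
    """Expected cell counts when the whole population is tested once."""
    healthy = population - infected
    true_pos = round(infected * sensitivity)
    false_neg = infected - true_pos
    true_neg = round(healthy * specificity)
    false_pos = healthy - true_neg
    return true_pos, false_pos, false_neg, true_neg

true_pos, false_pos, false_neg, true_neg = screening_table(
    population=82_000_000, infected=67_000, sensitivity=0.999, specificity=0.999
)
positives = true_pos + false_pos
print(f"true positives:  {true_pos:,}")
print(f"false positives: {false_pos:,}")
print(f"false negatives: {false_neg:,}")
print(f"true negatives:  {true_neg:,}")
print(f"positive predictive value: {true_pos / positives:.1%}")
print(f"share of false positives among positive results: {false_pos / positives:.1%}")
```

The false positives outnumber the true positives simply because 0.1% of nearly 82 million healthy people is more than 99.9% of 67,000 infected people.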

Heart attack in the US

In the United States, around four million men and women are admitted to hospital every year with chest pain and a suspected heart attack. In the course of the complex and expensive diagnostics it turns out that only about 32% of these patients have actually suffered a heart attack. In 68% the diagnosis of infarction was incorrect (false positive suspected diagnosis). On the other hand, about 34,000 patients are discharged from hospital every year without an actual heart attack being detected (approx. 0.8% false negative diagnoses).

In this example, too, the sensitivity of the investigation is similarly high, namely 99.8%. The specificity cannot be determined because the false positive results of the investigation are not known. Only the false positive initial diagnoses, which are based on the statement "chest pain", are known. If one looks only at this initial diagnosis, the figure of 34,000 patients who are wrongly discharged is worthless, because it has nothing to do with the initial diagnosis. What is needed is the number of false negatives of the initial diagnosis, i.e. those people with a heart attack who were not admitted because they had no chest pain.

See also

- RATZ index

- Miscarriage of justice

- Misdiagnosis

- Inspection lot

- Bayes' theorem

- Interest rate sensitivity

Literature

General

- Hans-Peter Beck-Bornholdt, Hans-Hermann Dubben: The dog that lays eggs. Detecting misinformation through cross-thinking. ISBN 3-499-61154-6.

- Gerd Gigerenzer: The basics of skepticism. Berliner Taschenbuch Verlag, Berlin 2004, ISBN 3-8333-0041-8.

Information retrieval

- John Makhoul, Francis Kubala, Richard Schwartz and Ralph Weischedel: Performance measures for information extraction. In: Proceedings of DARPA Broadcast News Workshop, Herndon, VA, February 1999.

- R. Baeza-Yates and B. Ribeiro-Neto: Modern Information Retrieval. ACM Press, Addison-Wesley, New York 1999, ISBN 0-201-39829-X, pages 75 ff.

- Christa Womser-Hacker: Theory of Information Retrieval III: Evaluation. In: R. Kuhlen: Basics of practical information and documentation. 5th edition. Saur, Munich 2004, ISBN 3-598-11675-6, ISBN 3-598-11674-8, pages 227-235.

- C. J. van Rijsbergen: Information Retrieval. 2nd edition. Butterworth, London / Boston 1979, ISBN 0-408-70929-4.

- Jesse Davis and Mark Goadrich: The Relationship Between Precision-Recall and ROC Curves. In: 23rd International Conference on Machine Learning (ICML), 2006. doi:10.1145/1143844.1143874

Web links

- Test nationwide for Corona? (Interactive illustration of a binary classifier)

- Reading sample from Gerd Gigerenzer: The basics of skepticism

- Information Retrieval - CJ van Rijsbergen 1979

- The pitfalls of statistics: always think false positively! (fictitious scarlet fever screening as an illustrative example)

- Clear description of the problem of false conclusions

Individual evidence

- Lothar Sachs, Jürgen Hedderich: Applied Statistics: Collection of Methods with R. 8th, revised and expanded edition. Springer Spectrum, Berlin / Heidelberg 2018, ISBN 978-3-662-56657-2, p. 192.

- Thomas G. Dietterich: Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms. In: Neural Computation. Vol. 10, no. 7, October 1, 1998, pp. 1895-1923, doi:10.1162/089976698300017197.
