Self-organizing card

As a self-organizing maps , Kohonenkarten or Kohonennetze (after Teuvo Kohonen ; English self-organizing map , SOM or self-organizing feature map , SOFM ) refers to a type of artificial neural networks . As an unsupervised learning process, they are a powerful data mining tool . Their functional principle is based on the biological knowledge that many structures in the brain have a linear or planar topologyexhibit. The signals of the entrance room, e.g. B. visual stimuli, however, are multidimensional.

The question arises, how these multidimensional impressions are processed by planar structures. Biological studies show that the input signals are mapped in such a way that similar stimuli are close together. The phase space of the applied stimuli is thus mapped.

If a signal is now applied to this card, only those areas of the card that are similar to the signal are excited. The neuron layer acts as a topological feature map when the position of the most strongly excited neurons is correlated with important signal features in a regular and continuous manner.

Self-organizing maps are used, for example, in computer graphics (as a quantization algorithm for color reduction of raster graphics data ) and in bioinformatics for cluster analysis .

Lateral environment inhibition

A general working principle of the nervous system is that active local groups of nerve cells inhibit other groups in their environment, and thus suppress their activity (see lateral inhibition ). The activity of a nerve cell is therefore determined from the superposition of the exciting input signal and the inhibiting contributions of all layer neurons. Since this lateral inhibition applies everywhere, there is constant competition for supremacy. The course of the lateral inhibition is exciting / strengthening for short distances and inhibiting / weakening for long distances. It can be shown that this effect is sufficient to localize the excitation response in the vicinity of the maximum external excitation.

Structure and learning

An input layer with ? neurons is completely connected to all neurons within the Kohonen map (the so-called competitive layer ), hereinafter simply map. Each input stimulus ? to be mapped is passed on to each neuron on this map via the connections.

The connecting weights ? between the neurons of the input layer and the neurons in the map each define a point in the input space of the applied stimuli ?. All neurons within the card are interconnected in an inhibitory manner.

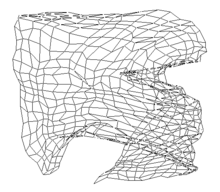

- The figure shows an adaptation step in Kohonen's model . A stimulus ? is applied to the network.

- The network looks for the excitation center ? in the map, the weight vector ? of which is closest to ? (smallest distance).

- The difference between ? and ? is reduced in an adaptation step.

- The neurons close to the excitation center ? are also adapted, but the less the further they are from the excitation center.

It is common, but not mandatory, to use the Euclidean distance as a distance measure for both the learning vectors and the map .

If a set of different training data is available, an epoch in training is complete when all stimuli have been applied to the input layer exactly once in a random sequence. The training ends when the network has reached its stable final state.

Learning in a self-organized map can formally be described as an iterative process . In the initial state, the weight vectors of the neurons are randomly distributed in the network and a stimulus is applied to the network in each learning step. The self-organizing map changes the weight vectors of the neurons according to Hebb's learning rule , so that a topographical mapping results over time .

Training a SOM in the example

The following table shows a network whose neurons are arranged in a grid and are initially randomly distributed in space. It is trained with input stimuli from the square, which are evenly distributed.

Formal description of the training

A finite set M of training stimuli m i is given , which are specified by an n-dimensional vector x i :

Further, let a set of μ N neurons given to each of which a weight vector w i in X and a position of k i is on a Kohonen map allocated is hereafter assumed two-dimensionally. The map dimension can be selected as desired, with map dimensions less than or equal to three being used to visualize high-dimensional relationships. The positions on the map should correspond to discrete, square grid points (alternative neighborhood topologies such as hexagonal topologies are also possible), and each grid point should be occupied by exactly one neuron:

In the learning phase of the stimuli is from the set to the presentation time point t an element m j t equally distributed randomly selected. This stimulus establishes a winning neuron n s t on the card , which is called the excitation center . This is precisely the neuron whose weight vector w s t has the smallest distance in space X to the stimulus vector x j t , where a metric d X (.,.) Of the input space is given:

After n s t has been determined, all neurons n i t that are allowed to adapt their weight vectors in addition to the excitation center are determined. These are the neurons whose distance d A ( k s , k i ) on the map is not greater than a time-dependent threshold value, which is referred to as the distance range δ t , with a metric d A (.,.) Of the map is given. These neurons are combined in a subset N + t ⊂ N t :

In the following adaptation step, a learning step is applied to all neurons from N + t , which changes the weight vectors. The learning step can be interpreted as a shift of the weight vectors in the direction of the stimulus vector x j t .

It is based on the model of Ritter et al. (1991) used the following adaptation rule:

with the time-dependent parameter equations ε t and h si t , which are defined as:

1) The time-dependent learning rate ε t :

with the start learning rate ε start and ε end as the learning rate at the end of the process, d. H. after t max stimulus presentations.

2) The time-dependent distance weighting function h si t :

with δ t as the neighborhood or adaptation radius around the winning neuron on the map:

with the adaptation radius δ start at the beginning of the process, and δ end as the adaptation radius at the end of the process.

So that a topology-preserving mapping is created, i.e. This means that neighboring points in the input space X are mapped onto neighboring points on the map, two factors must be taken into account:

- The topological neighborhood h si t to the excitation center has initially selected large and reduced during the proceedings.

- The adaptation strength ε t must, starting from a large value, decrease to a small residual value in the course of the method.

In the illustrated learning process, t max presentations are carried out, after which the SOM can be transferred to the application phase in which stimuli are presented that did not occur in the learning set. Such a stimulus is assigned to the winning neuron whose weight vector is closest to the stimulus vector, so that a neuron and a position on the neuron map can be assigned to the stimulus via the detour of the weight vector. In this way, the new stimulus is automatically classified and visualized.

Variants of the SOM

A number of variations and extensions to the original Kohonen model have been developed, including: a .:

- Context SOM (K-SOM)

- Temporary SOM (T-SOM)

- Motor SOM (M-SOM)

- Neuron Gas (NG-SOM)

- Growing cell structures (GCS-SOM)

- Growing lattice structure (GG-SOM)

- Growing hierarchical SOM (GH-SOM)

- Growing Neuron Gas (GNG-SOM)

- Parametric SOM (P-SOM)

- Hyperbolic SOM (H-SOM)

- Interpolating SOM (I-SOM)

- Local Weighted Regression SOM (LWR SOM)

- Selective Attention SOM (SA-SOM)

- Learned expectations in GNG-SOMs (LE-GNG-SOM)

- Fuzzy SOM (F-SOM)

- Adaptive Subspace SOM (AS-SOM)

- Generative topographic map (GTM)

literature

- Günter Bachelier: Introduction to self-organizing cards . Tectum-Verlag, Marburg 1998, ISBN 3-8288-5017-0

- Teuvo Kohonen : Self-Organizing Maps . Springer-Verlag, Berlin 1995, ISBN 3-540-58600-8

- Helge Ritter , Thomas Martinetz , Klaus Schulten: Neural Networks. An introduction to the neuroinformatics of self-organizing networks . Addison-Wesley, Bonn 1991, ISBN 3-89319-131-3

Web links

- DemoGNG.js JavaScript simulator for SOMs and other network models (Neural Gas, Growing Neural Gas, Growing Grid etc.)

- ANNetGPGPU : C ++ library with an implementation for SOMs on GPUs and CPUs and Python interface

- SOM research at Helsinki University of Technology (Teuvo Kohonen)

- About SOM in the comp.ai.neural-nets FAQ

- Java SOMToolbox : Open source application for creating, analyzing and interacting with self-organizing maps, developed at the Technical University of Vienna.

- Data bionics : data visualization and data mining with emergent SOM: Prof. Ultsch Marburg

- MusicMiner : visualization of music collections ESOM

- GNOD , The G lobal N etwork o f D reams , a Kohonen network for determining similarities between music , film and book authors

- Demonstration example : HTW Dresden - a SOM catches a ball

- Viscovery SOMine : SOM technology tool from Viscovery

- Neural Networks with Java