Atomic clock


An atomic clock is a type of clock that uses an atomic resonance frequency standard as its timekeeping element. Atomic clocks are the most accurate time and frequency standards known. They are used as primary standards for international time distribution services, and to control the frequency of television broadcasts and GPS satellite signals. Early atomic clocks were masers with attached equipment. Today's best atomic frequency standards (or clocks) are based on absorption spectroscopy of cold atoms in atomic fountains. National standards agencies maintain an accuracy of 10⁻⁹ seconds per day (approximately 1 part in 10¹⁴), and a precision set by the radio transmitter pumping the maser. The clocks maintain a continuous and stable time scale, International Atomic Time (TAI). For civil time, another time scale is disseminated: Coordinated Universal Time (UTC). UTC is derived from TAI, but leap seconds are inserted to keep it close to UT1, which is based on the actual rotation of the Earth with respect to the mean Sun.
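Because UTC differs from TAI only by the accumulated number of leap seconds, converting between them amounts to a table lookup. The following is a minimal sketch, assuming a hand-copied excerpt of the leap-second table published by the IERS (only the entries from 1999 onward) and whole-day resolution. `LEAP_TABLE` and `tai_minus_utc` are hypothetical names, and a real system should read an authoritative, up-to-date leap-second list instead of a hard-coded one.

```python
from bisect import bisect_right
from datetime import date

# Hypothetical excerpt of the leap-second table:
# (first UTC day on which the offset applies, TAI - UTC in whole seconds).
# Entries before 1999 are deliberately omitted from this sketch.
LEAP_TABLE = [
    (date(1999, 1, 1), 32),
    (date(2006, 1, 1), 33),
    (date(2009, 1, 1), 34),
    (date(2012, 7, 1), 35),
    (date(2015, 7, 1), 36),
    (date(2017, 1, 1), 37),
]


def tai_minus_utc(utc_day: date) -> int:
    """Return the offset TAI - UTC, in seconds, in effect on the given UTC day."""
    starts = [start for start, _ in LEAP_TABLE]
    # Index of the last entry whose start date is on or before utc_day.
    i = bisect_right(starts, utc_day) - 1
    if i < 0:
        raise ValueError("date precedes this excerpt of the leap-second table")
    return LEAP_TABLE[i][1]
```

For example, `tai_minus_utc(date(2008, 1, 17))` returns 33, meaning TAI was 33 seconds ahead of UTC on that day.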

History

The first atomic clock was built in 1949 at the U.S. National Bureau of Standards (NBS). The first accurate atomic clock, a caesium standard based on a hyperfine transition of the caesium-133 atom, was built by Louis Essen in 1955 at the National Physical Laboratory in the UK. This work led to the internationally agreed definition of the second in terms of atomic time.

Since the beginning of development in the 1950s, atomic clocks have been made based on the hyperfine (microwave) transitions in hydrogen-1, caesium-133, and rubidium-87. For decades, scientific-instrument companies such as Hewlett-Packard have been making caesium-beam clocks and hydrogen masers for entities like NIST and USNO, at prices rivalling those of cars.

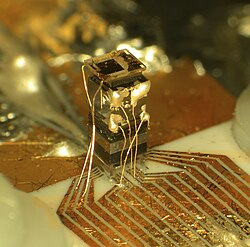

In August 2004, NIST scientists demonstrated a chip-scale atomic clock.[1] According to the researchers, the clock was believed to be one-hundredth the size of any other atomic clock, and it required just 75 mW of power, making it suitable for battery-powered applications. Such a device could eventually become a consumer product. It would be much smaller, consume less power, and be much cheaper to produce than the traditional caesium-fountain clocks that NIST and USNO use as reference clocks.

In February 2008, physicists at JILA, a joint institute of the National Institute of Standards and Technology (NIST) and the University of Colorado at Boulder, demonstrated a new clock based on strontium atoms trapped in a grid of laser light. The new clock is more than twice as accurate as the previous best clock, NIST-F1. Its inaccuracy corresponds to less than one second in 200 million years, compared with one second in 80 million years for NIST-F1.[2]
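Figures such as "one second in 200 million years" and the "10⁻⁹ seconds per day" quoted in the introduction are two ways of stating a fractional error: the accumulated time error divided by the elapsed time. The following minimal sketch shows the arithmetic. The 365.25-day (Julian) year is an assumption made for the conversion, and `fractional_error` is a hypothetical helper.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # Julian year, assumed for these estimates


def fractional_error(time_error_s: float, interval_s: float) -> float:
    """Dimensionless fractional error: accumulated time error over elapsed time."""
    return time_error_s / interval_s


for label, error_s, interval_s in [
    ("1 ns per day", 1e-9, SECONDS_PER_DAY),
    ("strontium clock, 1 s in 200 million years", 1.0, 200e6 * SECONDS_PER_YEAR),
    ("NIST-F1, 1 s in 80 million years", 1.0, 80e6 * SECONDS_PER_YEAR),
]:
    print(f"{label}: {fractional_error(error_s, interval_s):.1e}")
```

This prints about 1.2e-14, 1.6e-16 and 4.0e-16. The first matches the "1 part in 10¹⁴" figure, and the last two differ by a factor of 2.5, consistent with "more than twice as accurate".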

How they work

Since 1967, the International System of Units (SI) has defined the second as the duration of 9,192,631,770 cycles of the radiation corresponding to the transition between the two hyperfine levels of the ground state of the caesium-133 atom. This definition makes the caesium oscillator (often called an atomic clock) the primary standard for time and frequency measurements (see caesium standard). Other units, such as the volt and the metre, rely on the definition of the second as part of their own definitions.[3]
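Because the second is defined as an exact number of cycles, timekeeping with a caesium-referenced oscillator reduces to counting cycles. The following sketch shows that arithmetic, together with the fractional frequency offset of an oscillator that counts slightly too many cycles in one true second. The function names are hypothetical.

```python
CS_HYPERFINE_HZ = 9_192_631_770  # exact, by the SI definition of the second


def elapsed_seconds(cycle_count: int) -> float:
    """Seconds corresponding to a count of caesium hyperfine cycles."""
    return cycle_count / CS_HYPERFINE_HZ


def fractional_offset(cycles_counted_in_one_second: int) -> float:
    """Fractional frequency offset of an oscillator meant to run at CS_HYPERFINE_HZ."""
    return (cycles_counted_in_one_second - CS_HYPERFINE_HZ) / CS_HYPERFINE_HZ


cycles_per_day = CS_HYPERFINE_HZ * 86_400
print(cycles_per_day)                          # 794243384928000
print(fractional_offset(CS_HYPERFINE_HZ + 1))  # about 1.1e-10
```

A single extra cycle per second therefore corresponds to a fractional error of about one part in 10¹⁰, far worse than the accuracies quoted for national standards.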

The core of the atomic clock is a tunable microwave cavity containing the atoms. In a hydrogen maser clock, the gas emits microwaves on a hyperfine transition (it "mases"), the field in the cavity oscillates, and the cavity is tuned for maximum microwave amplitude. In a caesium or rubidium clock, by contrast, the atomic beam or gas absorbs microwaves supplied by an external electronic oscillator and amplifier. In both types, the atoms are first prepared in a single hyperfine state. In the second type, the number of atoms that change state is detected, and the oscillator is tuned for the maximum number of detected state changes.

This adjustment process is where most of the work and complexity of the clock lies. The adjustment must correct for unwanted side effects, such as frequencies from other electron transitions, temperature changes, and the "spreading" of frequencies caused by ensemble effects. One way of doing this is to sweep (modulate) the microwave oscillator's frequency across a narrow range around the resonance, which produces a modulated signal at the detector. Demodulating the detector's signal yields an error signal that is fed back to correct long-term drift in the oscillator's frequency. In this way, the quantum-mechanical properties of the caesium atomic transition can be used to tune the microwave oscillator to the same frequency, apart from a small experimental error. When a clock is first turned on, the oscillator takes some time to stabilize.

In practice, the feedback and monitoring mechanism is much more complex than described above.
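The modulation-and-feedback principle can be illustrated with a greatly simplified simulation. This is a sketch, not a model of any real clock. It assumes a Lorentzian resonance, a square-wave dither in which the oscillator is probed alternately just above and just below its current frequency, a noise-free detector, a constant oscillator drift, and a pure integrator as the feedback law. All constants (`LINEWIDTH_HZ`, `DITHER_HZ`, `GAIN_HZ`, `DRIFT_HZ_PER_STEP`) are hypothetical, and frequencies are expressed as offsets from the atomic resonance.

```python
LINEWIDTH_HZ = 50.0      # hypothetical full width at half maximum of the resonance
DITHER_HZ = 25.0         # hypothetical modulation depth (half the linewidth)
GAIN_HZ = 20.0           # hypothetical integrator gain
DRIFT_HZ_PER_STEP = 0.1  # hypothetical free-running drift per servo cycle


def detector_signal(offset_hz: float) -> float:
    """Fraction of atoms changing state when probed at this offset (Lorentzian line)."""
    x = offset_hz / (LINEWIDTH_HZ / 2)
    return 1.0 / (1.0 + x * x)


def run_servo(start_offset_hz: float, cycles: int = 200) -> float:
    """Oscillator offset from resonance after the given number of servo cycles."""
    offset = start_offset_hz
    for _ in range(cycles):
        offset += DRIFT_HZ_PER_STEP  # the oscillator drifts between corrections
        # Demodulation: high-side probe minus low-side probe. This is zero on
        # resonance, and its sign shows which side of the line the oscillator is on.
        error = detector_signal(offset + DITHER_HZ) - detector_signal(offset - DITHER_HZ)
        offset += GAIN_HZ * error    # integrate the error into a frequency correction
    return offset
```

Starting 40 Hz off resonance, `run_servo(40.0)` settles within a few cycles to a small residual offset (about 0.025 Hz with these constants), which is set by the ratio of drift to loop gain. Raising the gain shrinks the residual only up to a point; beyond that the loop overshoots and becomes unstable.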

A number of other atomic clock schemes are in use for other purposes. Rubidium standard clocks are prized for their low cost, small size (commercial standards are as small as 400 cm³) and short-term stability. They are used in many commercial, portable and aerospace applications. Hydrogen masers (often manufactured in Russia) have superior short-term stability compared to other standards, but lower long-term accuracy.

Often, one standard is used to correct another. For example, some commercial applications use a rubidium standard periodically corrected by a GPS receiver. This combines excellent short-term stability with long-term accuracy equal to (and traceable to) the U.S. national time standards.
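A rubidium standard periodically corrected by GPS can be sketched as a small simulation. Every detail here is an assumption chosen for illustration. The rubidium oscillator has a constant fractional frequency offset `y0` plus linear aging. The GPS reference is treated as perfect (real receivers add noise, which is why practical systems average over long intervals). At each GPS fix, a simple correction rule cancels the mean frequency error accumulated since the previous fix and then steps the clock back onto GPS time. The function name and all parameter values are hypothetical.

```python
from typing import Optional


def worst_time_error_s(days: float = 7.0,
                       y0: float = 1e-11,             # hypothetical fractional frequency offset
                       aging_per_day: float = 1e-13,  # hypothetical linear aging rate
                       step_s: float = 60.0,
                       gps_interval_s: Optional[float] = None) -> float:
    """Largest absolute time error (s) of a rubidium clock, free-running or GPS-corrected."""
    aging_per_s = aging_per_day / 86_400
    time_error = 0.0  # local clock minus GPS time, in seconds
    freq_steer = 0.0  # fractional frequency correction applied by the steering rule
    since_fix = 0.0
    worst = 0.0
    t = 0.0
    while t < days * 86_400:
        y = y0 + aging_per_s * t                 # rubidium frequency offset at time t
        time_error += (y + freq_steer) * step_s  # residual frequency error accumulates as time error
        t += step_s
        since_fix += step_s
        worst = max(worst, abs(time_error))
        if gps_interval_s is not None and since_fix >= gps_interval_s:
            freq_steer -= time_error / since_fix  # cancel the mean frequency error since the last fix
            time_error = 0.0                      # step the clock onto GPS time
            since_fix = 0.0
    return worst


print(f"free-running:   {worst_time_error_s() * 1e9:8.1f} ns")
print(f"GPS every hour: {worst_time_error_s(gps_interval_s=3600) * 1e9:8.1f} ns")
```

With these made-up numbers, the free-running clock wanders by about 6 µs in a week. Hourly correction keeps the worst error near 36 ns, almost all of it accumulated before the first fix.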

The lifetime of a standard is an important practical issue. Modern rubidium standard tubes last more than ten years, and can cost as little as US$50. Caesium reference tubes suitable for national standards currently last about seven years and cost about US$35,000. The long-term stability of hydrogen maser standards decreases because of changes in the cavity's properties over time.

Many modern clocks use magneto-optical traps to cool the atoms, which improves precision.

Application

Atomic clocks are used to generate standard frequencies.[citation needed] They are installed at the sites of time-signal, LORAN-C, and Alpha navigation transmitters.[citation needed] They are also installed at some longwave and mediumwave broadcasting stations to deliver a very precise carrier frequency, which can also serve as a standard frequency.[citation needed]

Atomic clocks are also used for very-long-baseline interferometry (VLBI) in radio astronomy.[citation needed]

Atomic clocks are the basis of the GPS navigation system. The GPS master clocks are atomic clocks at the ground stations, and each of the GPS satellites has an on-board atomic clock.
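The reason GPS depends on atomic clocks follows from a short calculation. A receiver converts signal travel times into distances by multiplying by the speed of light, so any clock error becomes a range error. The following sketch assumes a hypothetical satellite clock with a constant fractional frequency offset of 10⁻¹¹ left uncorrected for one day. The function name is also hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)


def range_error_m(clock_error_s: float) -> float:
    """Distance error produced by a timing error in a one-way ranging signal."""
    return C_M_PER_S * clock_error_s


print(range_error_m(1e-9))              # 1 ns of clock error: about 0.30 m
drift_s = 1e-11 * 86_400                # hypothetical 1e-11 offset left for one day
print(drift_s, range_error_m(drift_s))  # about 8.6e-7 s, about 259 m
```

A nanosecond of timing error costs about 30 cm of position, so even a very good oscillator must be tightly controlled to be useful for navigation.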

Power consumption

Power consumption varies enormously, but it scales roughly with size.[citation needed] Chip-scale atomic clocks can use power on the order of 100 mW;[citation needed] NIST-F1 uses orders of magnitude more.[citation needed]

Research

Most research focuses on ways to make the clocks smaller, cheaper, more accurate, and more reliable. These goals often conflict.

New technologies, such as femtosecond frequency combs, optical lattices and quantum information, have enabled prototypes of next generation atomic clocks. These clocks are based on optical rather than microwave transitions. A major obstacle to developing an optical clock is the difficulty of directly measuring optical frequencies. This problem has been solved with the development of self-referenced mode-locked lasers, commonly referred to as femtosecond frequency combs. Before the demonstration of the frequency comb in 2000, terahertz techniques were needed to bridge the gap between radio and optical frequencies, and the systems for doing so were cumbersome and complicated. With the refinement of the frequency comb these measurements have become much more accessible and numerous optical clock systems are now being developed around the world.
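A self-referenced frequency comb is commonly described by the relation f_n = n·f_rep + f_ceo. Here f_rep is the pulse repetition rate and f_ceo is the carrier-envelope offset frequency, and both are radio frequencies that can be counted directly. The following sketch assumes that relation and does two things. First, it shows how f_ceo is recovered by beating a frequency-doubled comb tooth against the tooth with twice its mode number (the f-to-2f self-referencing scheme). Second, it determines an unknown optical frequency from its beat note with the nearest tooth. All numbers and function names are hypothetical. The sign of the beat note, which in practice must be determined experimentally, is simply assumed.

```python
def comb_tooth_hz(n: int, f_rep_hz: int, f_ceo_hz: int) -> int:
    """Frequency of comb mode n: n * f_rep + f_ceo (integer hertz)."""
    return n * f_rep_hz + f_ceo_hz


def ceo_from_f_2f(n: int, f_rep_hz: int, f_ceo_hz: int) -> int:
    """Doubled tooth n beaten against tooth 2n: 2(n f_rep + f_ceo) - (2n f_rep + f_ceo) = f_ceo."""
    return 2 * comb_tooth_hz(n, f_rep_hz, f_ceo_hz) - comb_tooth_hz(2 * n, f_rep_hz, f_ceo_hz)


def optical_frequency_hz(coarse_estimate_hz: int, f_rep_hz: int, f_ceo_hz: int,
                         f_beat_hz: int, beat_sign: int) -> int:
    """Optical frequency from a coarse estimate (within f_rep/2) and a measured beat note."""
    # The coarse estimate only has to identify which comb mode n is nearest.
    n = round((coarse_estimate_hz - f_ceo_hz - beat_sign * f_beat_hz) / f_rep_hz)
    return comb_tooth_hz(n, f_rep_hz, f_ceo_hz) + beat_sign * f_beat_hz


F_REP = 1_000_000_000  # hypothetical 1 GHz repetition rate
F_CEO = 35_000_000     # hypothetical 35 MHz offset frequency
print(ceo_from_f_2f(200_000, F_REP, F_CEO))                                     # 35000000
print(optical_frequency_hz(429_228_200_000_000, F_REP, F_CEO, 150_000_000, +1))  # 429228185000000
```

Only radio frequencies (f_rep, f_ceo and the beat note) are counted. The comb supplies the large integer n that links them to the optical frequency.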

As in the radio range, absorption spectroscopy is used to stabilize an oscillator, in this case a laser. When a femtosecond comb divides the optical frequency down to a countable radio frequency, the phase fluctuations are divided by the same factor. A laser generally has more phase noise than a stable microwave source, but after division the resulting signal has less.
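The benefit of dividing down can be quantified. Dividing a frequency by N divides its phase fluctuations by N, which lowers the phase-noise power spectral density by N², or 20·log₁₀N decibels. The following minimal sketch assumes a hypothetical 500 THz optical reference divided to a 10 GHz microwave signal. The function names are hypothetical.

```python
import math


def division_factor(f_in_hz: float, f_out_hz: float) -> float:
    """Ratio by which the frequency, and therefore the phase excursion, is divided."""
    return f_in_hz / f_out_hz


def phase_noise_reduction_db(n: float) -> float:
    """Reduction in phase-noise power spectral density after ideal division by n."""
    return 20 * math.log10(n)


n = division_factor(500e12, 10e9)             # hypothetical optical and microwave frequencies
print(n)                                      # 50000.0
print(round(phase_noise_reduction_db(n), 1))  # 94.0
```

An ideal divider leaves the fractional frequency stability unchanged, so the microwave output inherits the optical reference's stability while its phase noise drops by about 94 dB in this example.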

The two primary systems under consideration for use in optical frequency standards are single ions isolated in an ion trap and neutral atoms trapped in an optical lattice.[4] These two techniques allow the atoms or ions to be highly isolated from external perturbations, thus producing an extremely stable frequency reference.

Optical clocks have already achieved better stability and lower systematic uncertainty than the best microwave clocks.[4] This puts them in a position to replace the current standard for time, the caesium fountain clock.

Atomic systems under consideration include but are not limited to Al+, Hg+,[4] Hg, Sr, Sr+, In+, Ca+, Ca, Yb+ and Yb.

Radio clocks

Modern radio clocks can be referenced to atomic clocks, and provide a way of getting high-quality, atomic-derived time over a wide area using inexpensive equipment. However, radio clocks are not suitable for high-precision scientific work. Many retailers market radio clocks as "atomic clocks", which is misleading: these devices are radio receivers synchronized to an atomic clock, not atomic clocks themselves.

There are a number of longwave radio transmitters around the world, in particular DCF77 (Germany), HBG (Switzerland), JJY (Japan), NPL's MSF (United Kingdom), TDF (France) and WWVB (United States). These signals can be received in many other countries (JJY can sometimes be received even in Western Australia and Tasmania at night), but reception depends on the time of day and atmospheric conditions. There is also a transit delay of approximately 1 ms for every 300 kilometres (186 mi) between the transmitter and the receiver. When operating properly and correctly synchronized, better brands of radio clocks are normally accurate to within a second.
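The rule of thumb of about 1 ms per 300 kilometres follows from the speed of light, roughly 299.8 km per millisecond. The following sketch assumes a straight ground-wave path travelled at the vacuum speed of light (sky-wave paths are longer). The 1,500 km distance and the function names are hypothetical.

```python
C_KM_PER_MS = 299_792.458 / 1000  # speed of light in km per millisecond (about 299.79)


def transit_delay_ms(distance_km: float) -> float:
    """Approximate signal delay from transmitter to receiver."""
    return distance_km / C_KM_PER_MS


def corrected_time_ms(received_time_ms: float, distance_km: float) -> float:
    """A receiver that knows its distance can advance the decoded time by the delay."""
    return received_time_ms + transit_delay_ms(distance_km)


print(f"{transit_delay_ms(300):.3f} ms")   # 1.001 ms
print(f"{transit_delay_ms(1500):.3f} ms")  # 5.003 ms
```

Because consumer radio clocks are specified only to about a second, a few milliseconds of uncorrected delay makes no practical difference to them.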

Typical radio "atomic clocks" must be placed where there is a relatively unobstructed path to the transmitter. They synchronize once a day during the night, and need fair to good atmospheric conditions to update successfully. Between updates, or in their absence, the time is usually kept by a cheap and relatively inaccurate quartz-crystal clock, on the reasoning that daily automatic updates make an expensive, precise timekeeper unnecessary. The clock may include an indicator that warns of possible inaccuracy when synchronization has not succeeded within the last 24 to 48 hours.
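How far the quartz clock can wander between updates is simply its frequency tolerance multiplied by the time since the last successful synchronization. The following sketch assumes a hypothetical ±20 ppm crystal and a hypothetical 24-hour threshold for the "not synchronized" indicator. Real products differ, and the names here are illustrative only.

```python
from datetime import timedelta

QUARTZ_TOLERANCE_PPM = 20.0        # hypothetical worst-case crystal frequency error
STALE_AFTER = timedelta(hours=24)  # hypothetical indicator threshold (the text cites 24 to 48 hours)


def worst_case_drift_s(since_last_sync: timedelta,
                       tolerance_ppm: float = QUARTZ_TOLERANCE_PPM) -> float:
    """Largest time error the free-running quartz clock may have accumulated."""
    return since_last_sync.total_seconds() * tolerance_ppm * 1e-6


def show_unsynchronized_indicator(since_last_sync: timedelta) -> bool:
    """Warn once the last successful update is older than the threshold."""
    return since_last_sync > STALE_AFTER


print(round(worst_case_drift_s(timedelta(hours=24)), 3))   # 1.728
print(show_unsynchronized_indicator(timedelta(hours=30)))  # True
```

Under these assumptions, one missed night of updates can already cost more than a second, which is consistent with warning the user after a day or two without synchronization.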

See also

- Network Time Protocol

- NIST-F1

- Radio clock

- Télé Distribution Française

- GPS

- Optical Clockwork

- Primary Atomic Reference Clock in Space

- Magneto-optical trap

- International Atomic Time

References

- "Chip-Scale Atomic Devices at NIST". NIST. May 2007. Retrieved 2008-01-17.

- "Collaboration Helps Make JILA Strontium Atomic Clock ‘Best in Class’".

- "FAQs". Franklin Instrument Company. 2007. Retrieved 2008-01-17.

- Oskay, W. H.; et al. (2006). "Single-atom optical clock with high accuracy" (PDF). Physical Review Letters. 97 (2): 020801. PMID 16907426. Retrieved 2007-03-25.


External links

- What is a Cesium atom clock?

- National Research Council of Canada: Optical frequency standard based on a single trapped ion

- United States Naval Observatory Time Service Department

- PTB Braunschweig, Germany (with English-language pages)

- National Physical Laboratory (UK) time website

- NIST Internet Time Service (ITS): Set Your Computer Clock Via the Internet

- NIST press release about chip-scaled atomic clock

- NIST website

- Web pages on atomic clocks by The Science Museum (London)

- Optical Atomic Clock BBC, 2005

- The atomic fountain