Backpropagation

Backpropagation or also backpropagation of error or also error feedback (also backpropagation ) is a common method for learning artificial neural networks . It belongs to the group of supervised learning processes and is applied to multilayer networks as a generalization of the delta rule . To do this, there must be an external teacher who knows the desired output, the target value , at all times . Backward propagation is a special case of a general gradient method in optimization based on the mean square error .

history

According to various sources, the foundations of the method in the context of control theory were derived from principles of dynamic programming , namely by Henry J. Kelley in 1960 and Arthur E. Bryson in 1961. In 1962, Stuart Dreyfus published a simpler derivation using the chain rule . Vladimir Vapnik cites an article from 1963 in his book on Support Vector Machines . In 1969 Bryson and Yu-Chi Ho described the process as a multi-stage optimization of dynamic systems.

Finally, in 1970, Seppo Linnainmaa published the general method for automatic differentiation (AD) of discrete networks of nested differentiable functions. This is the modern variant of the backpropagation method, which is efficient even with thin networks.

In 1973, Stuart Dreyfus used backpropagation to adjust parameters of control systems in proportion to their error gradients. Paul Werbos mentioned the possibility of applying this principle to artificial neural networks in 1974 , and in 1982 he did so in the way that is now widely used. Four years later, David E. Rumelhart , Geoffrey E. Hinton, and Ronald J. Williams showed through experiments that this method can lead to useful internal representations of input data in deeper layers of neural networks, which is the basis of deep learning . In 1993, Eric A. Wan was the first to win an international pattern recognition competition through back propagation.

Minimizing errors

In the learning problem, the most reliable possible mapping of given input vectors to given output vectors is sought for any network. For this purpose, the quality of the image is described by an error function, which is defined here by the square error:

- .

It is

- the mistake

- the number of samples presented to the network,

- the desired target output or the target value ( target ) and

- the calculated actual output .

The factor is only added to simplify the derivation.

The goal is now to minimize the error function, but generally only a local minimum is found. An artificial neural network is learned in with the backpropagation method by changing the weights, since the output of the network - apart from the activation function - is directly dependent on them.

algorithm

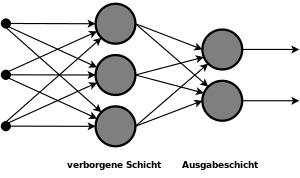

The backpropagation algorithm runs in the following phases:

- An input pattern is applied and propagated forward through the network.

- The output of the network is compared to the desired output. The difference between the two values is considered to be a fault in the network.

- The error is now propagated back to the input layer via the output layer. The weightings of the neuron connections are changed depending on their influence on the error. This guarantees an approximation of the desired output when the input is created again.

The name of the algorithm results from the back propagation of the error ( English error back propagation ).

Derivation

The formula of the back-propagation method is by differentiation derived: For the output of a neuron with two inputs and one obtains a two-dimensional hyperplane , wherein the error of the neuron depends on the weights of the inputs is. This error surface contains minima that have to be found. This can now be achieved by the gradient method by descending from a point on the surface in the direction of the greatest decrease in the error function.

Neuron output

For the derivation of the backpropagation method, the neuron output of an artificial neuron is shown briefly. The output of an artificial neuron can be defined by

and the power input by

It is

- a differentiable activation function whose derivative is not always zero,

- the number of entries,

- the input and

- the weighting between input and neuron .

There is no threshold value here. This is usually realized by an on-neuron that is always “firing” and its output is assigned the constant value 1. In this way one unknown is eliminated.

Derivation of the error function

The partial derivative of the error function results from using the chain rule :

The following formula can now be calculated from the individual terms. In contrast to the simple delta rule , the derivation depends on two cases:

- If the neuron is in the output layer, it is directly involved in the output,

- on the other hand, if it is in a hidden layer, the adjustment can only be calculated indirectly.

Concrete:

- With

It is

- the change in the weight of the connection from neuron to neuron ,

- a fixed learning rate with which the strength of the weight changes can be determined,

- the error signal of the neuron , corresponding to ,

- the target output of the output neuron ,

- the output of the neuron ,

- the actual output of the output neuron and

- the index of the subsequent neurons of .

Is in a single network . The output then corresponds to the inputs of the network.

Modification of the weights

The variable goes into the differentiation of the neurons: If the neuron lies in a hidden layer, its weighting is changed depending on the error that the following neurons generate, which in turn get their inputs from the neuron under consideration.

The weights can now be changed as follows:

- .

It is

- the new value of the weight,

- the old value of weight and

- the change in weight calculated above (based on )

extension

The choice of the learning rate is important for the method, since a value that is too high causes a strong change, whereby the minimum can be missed, while a learning rate that is too low slows the learning process unnecessarily.

Various optimizations of reverse propagation, e.g. B. Quickprop , are aimed primarily at accelerating error minimization; other improvements try primarily to increase reliability.

Backpropagation with a variable learning rate

In order to avoid an oscillation of the network, ie alternating connection weights, there are refinements of the method in which a variable learning rate is used.

Backpropagation with laziness term

By using a variable inertia term ( momentum ) , the gradient and the last change can be weighted, so that the weight adjustment also depends on the previous change. If the momentum is equal to 0, the change depends solely on the gradient, if the value is 1, it depends only on the last change.

Similar to a ball rolling down a mountain and whose current speed is determined not only by the current gradient of the mountain, but also by its own inertia, an inertia term can be added to the backpropagation:

It is

- the change in the weight of the connection from neuron to neuron at the time ,

- a learning rate,

- the error signal of the neuron and

- the input of the neuron ,

- the influence of the inertia term . This corresponds to the weight change at the previous point in time.

The current change in weight thus depends both on the current gradient of the error function (slope of the mountain, 1st summand) and on the weight change at the previous point in time (own inertia, 2nd summand).

The inertia term avoids problems of the backpropagation rule in steep gorges and flat plateaus. Since, for example, the gradient of the error function becomes very small in flat plateaus, the gradient descent would be "slowed down" without an inertia term; this "slowdown" is delayed by adding the inertia term so that a flat plateau can be overcome more quickly.

As soon as the error in the network becomes minimal, the learning can be completed and the multilayer network is now ready to classify the learned patterns.

Biological context

As a method of machine learning , backpropagation is a mathematically based learning mechanism of artificial neural networks and does not attempt to model actual neural learning mechanisms biologically. It is not the result of neurophysiological experiments and is therefore often criticized by neuroscientists. There is no neurophysiological evidence to suggest that backpropagation or a similar technique is used by biological neurons (this does not apply to gradient techniques in general). In the following some reasons for the biological implausibility of backpropagation are presented: (taken from Y. Bengio et al.)

- It is unclear how information about the target values can get into the synaptic cleft in the last layer of neurons

- Biological neurons communicate via binary changes of state (spikes), not via continuous values

- Biological neurons are time sensitive; H. their activity varies not only depending on the intensity of the input information, but also on its timing ( Spike Time Dependent Plasticity, STDP )

- Backpropagation requires perfectly synchronized, discrete steps

- A potential feedback mechanism would have to have the exact, non-linear derivatives of the neurons in the forward part, which vary immensely in structure and selectivity in the brain.

- The feedback mechanism should have exactly symmetrical weights ( weight transport problem ).

literature

- David E. Rumelhart , Geoffrey E. Hinton , Ronald J. Williams : Learning representations by back-propagating errors. In: Nature . Volume 323, 1986, pp. 533-536 ( nature.com ).

- Raúl Rojas : Theory of Neural Networks. Springer, 1996, ISBN 3-540-56353-9 . ( E-book of the English version ; PDF; 4.6 MB), p. 151 ff.

- Burkhard Lenze : Introduction to the mathematics of neural networks. Logos-Verlag, Berlin 2003, ISBN 3-89722-021-0 .

- Robert Callan : Neural Networks in Plain Text. Pearson studies, Munich 2003.

- Andreas Zell : Simulation of neural networks. R. Oldenbourg Verlag, Munich 1997, ISBN 3-486-24350-0 .

Web links

- Michael Nielsen : Neural Networks and Deep Learning Determination Press 2015 (Chapter 2, e-book)

- Backpropagator's Review (not maintained for a long time)

- A small overview of neural networks ( David Kriesel ) - free lecture notes in German on neural networks. Richly illustrated and clear. Contains a chapter on backpropagation including motivation, derivation and variations such as B. Inertia term, learning rate variations and the like. a.

- Membrain: free neural network editor and simulator for Windows

- Easy to understand tutorial on backpropagation with implementations (English)

- Blog post on the subject of back propagation and gradient descent including programming examples

swell

- ↑ Werner Kinnebrock: Neural Networks: Basics, Applications, Examples. R. Oldenbourg Verlag, Munich 1994, ISBN 3-486-22947-8 .

- ^ Stuart Dreyfus: Artificial Neural Networks, Back Propagation and the Kelley-Bryson Gradient Procedure. In: J. Guidance, Control and Dynamics. 1990.

- ↑ Eiji Mizutani, Stuart Dreyfus, Kenichi Nishio: On derivation of MLP backpropagation from the Kelley-Bryson optimal-control gradient formula and its application. In: Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN 2000). Como Italy, July 2000. (online) ( Page no longer available , search in web archives ) Info: The link was automatically marked as defective. Please check the link according to the instructions and then remove this notice.

- ^ A b c Jürgen Schmidhuber : Deep learning in neural networks: An overview. In: Neural Networks. 61, 2015, pp. 85–117. ArXiv

- ^ A b c Jürgen Schmidhuber: Deep Learning. In: Scholarpedia. 10 (11), 2015, pp. 328-332. Section on backpropagation

- ^ Henry J. Kelley: Gradient theory of optimal flight paths. In: Ars Journal. 30 (10), 1960, pp. 947-954. (on-line)

- ^ Arthur E. Bryson: A gradient method for optimizing multi-stage allocation processes. In: Proceedings of the Harvard Univ. Symposium on digital computers and their applications. April 1961.

- ^ Stuart Dreyfus: The numerical solution of variational problems. In: Journal of Mathematical Analysis and Applications. 5 (1), 1962, pp. 30-45. (on-line)

- ^ AE Bryson, WF Denham, SE Dreyfus: Optimal programming problems with inequality constraints. I: Necessary conditions for extremal solutions. In: AIAA J. 1, 11, 1963, pp. 2544-2550.

- ^ Stuart Russell, Peter Norvig : Artificial Intelligence A Modern Approach . S. 578 (English): "The most popular method for learning in multilayer networks is called Back-propagation."

- ↑ Arthur Earl Bryson, Yu-Chi Ho: Applied optimal control: optimization, estimation, and control . Blaisdell Publishing Company or Xerox College Publishing, 1969, p. 481 .

- ^ Seppo Linnainmaa : The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 1970, pp. 6-7.

- ^ Seppo Linnainmaa : Taylor expansion of the accumulated rounding error. In: BIT Numerical Mathematics. 16 (2), 1976, pp. 146-160.

- ^ Andreas Griewank: Who Invented the Reverse Mode of Differentiation? Optimization Stories. In: Documenta Matematica. Extra Volume ISMP, 2012, pp. 389-400.

- ^ Andreas Griewank, Andrea Walther: Principles and Techniques of Algorithmic Differentiation. 2nd Edition. SIAM, 2008.

- ^ Stuart Dreyfus: The computational solution of optimal control problems with time lag. In: IEEE Transactions on Automatic Control. 18 (4), 1973, pp. 383-385.

- ^ Paul Werbos : Beyond regression: New tools for prediction and analysis in the behavioral sciences. PhD thesis. Harvard University 1974.

- ^ Paul Werbos: Applications of advances in nonlinear sensitivity analysis. In: System modeling and optimization. Springer, Berlin / Heidelberg 1982, pp. 762-770. (on-line)

- ↑ David E. Rumelhart , Geoffrey E. Hinton , Ronald J. Williams : Learning representations by back-propagating errors. In: Nature . Volume 323, 1986, pp. 533-536.

- ↑ Eric A. Wan: Time series prediction by using a connectionist network with internal delay lines. In: Santa Fe Institute Studies in the Sciences of Complexity-Proceedings. Vol. 15, Addison-Wesley Publishing Co., 1993, pp. 195-195.

- ^ Y. Bengio et al .: Towards Biologically Plausible Deep Learning. arxiv : 1502.04156 2016.