Markov chain

A Markov chain ( English Markov chain ; also Markow process , after Andrei Andrejewitsch Markow ; other spellings Markov chain , Markoff chain , Markof chain ) is a special stochastic process . The aim of using Markov chains is to give probabilities for the occurrence of future events. A Markov chain is defined by the fact that knowledge of only a limited prehistory enables just as good prognoses about future developments as knowledge of the entire prehistory of the process.

A distinction is made between Markov chains of different orders. In the case of a Markov chain of the first order, this means: The future state of the process is only conditioned by the current state and is not influenced by past states. The mathematical formulation in the case of a finite quantity of states only requires the concept of discrete distribution and conditional probability , while in the case of continuous time the concepts of filtration and conditional expectation are required.

The terms Markov chain and Markov process are generally used synonymously. In some cases, however, Markov chains mean processes in discrete time (discrete state space ) and Markov processes mean processes in continuous time (continuous state space).

Introductory examples

Markov chains are very well suited to model random changes in the state of a system if there is reason to believe that the changes in state only influence one another over a limited period of time or are even memoryless . One example is the utilization of operating systems with memoryless arrival and operating times.

Discrete, finite Markov chain

A popular example of a time-discrete Markov chain with finite state space is the random walk on a discrete circle, modeled by the remainder class ring . This leads to the finite state space . Here you start in the equivalence class of 0, and move in each step from the current state with probability to or after (so clearly: one step to the left or to the right).

Discrete, infinite Markov chain

As an example of a countably infinite state space, you toss a coin over and over and note with each toss how often 'head' has appeared so far. The sequence of numbers formed in this way forms a (time-discrete) Markov chain, this time with a state space with the transition probability for the transition from to and for remaining in .

Another example of a Markov chain with infinite state space is the Galton-Watson process , which is often used to model populations.

Discrete time and at most a countable infinite set of states

definition

A family of random variables is given , all of which only assume values from the state space that can at most be counted . Then a (discrete) Markov chain is called if and only if

applies. The transition probabilities therefore only depend on the current state and not on the entire past. This is known as the Markov trait or memory loss . Be

the transition probabilities. These can then be summarized in a square transition matrix :

If the transition probabilities are independent of the point in time , i.e. if it applies to all , then the Markov chain is called homogeneous or a chain with stationary transition probabilities . If a chain is homogeneous, it is defined as the -step transition probability.

Markov chain of order n

Occasionally, Markov chains of the th order are also examined. With these, the future state depends on the previous states:

In this sense the Markov chains considered above are chains of the first order. Chains of higher order are not considered further here.

Basic properties

- The distribution of is sometimes referred to as the starting distribution or the initial distribution. If a start distribution is specified , all further distributions are clearly defined. For this reason, the shortened notation has become established to only specify the start distribution and the time step of interest:

- If you start in a clear state , it is usually written.

- For a finite state space, Markov chains can be described using the transition matrix and probability vectors. If you choose a stochastic start vector (as a row vector) as the start distribution, the distribution at time 1 is given by . It follows inductively . In this case, exactly the th entry of is the probability of being in the state at the time when the start distribution was started.

- According to the above description, in the case of homogeneity and finiteness of the state space, the -step transition probabilities can easily be calculated. These are then exact

- ,

- i.e. the entry that is in the -th row and -th column of the -th power of the transition matrix.

- In general, the Chapman-Kolmogorow equation applies . In the case of a finite state space, it is precisely the component-wise writing of the matrix multiplication.

- Markov chains are discrete dynamic systems . The period is the index of the random variable. The state space in the sense of the dynamic system is then formed by all distributions on the state space in the sense of the Markov chain. The operation then assigns the distribution in the -th time step to the distribution in the -th time step. In the case of a finite state space of the Markov chain, this is exactly the iterated application of the transition matrix as described above. Some terms from the theory of dynamic systems have a counterpart in the theory of Markov chains such as B. critical points and stationary distributions.

- Homogeneous Markov chains with a stationary distribution as the starting distribution are strongly stationary stochastic processes. Thus, time-discrete Markov chains with a countable state space are measure-maintaining dynamic systems if they start in their invariant distribution . If they are also positively recurrent and irreducible , they are even ergodic stochastic processes and allow statements from ergodic theory to be used, such as the individual ergodic theorem .

- The transition matrix defined above is infinitely dimensional if the state space is countably infinite. Only in the case of the finiteness of the state space is it a matrix in the sense of linear algebra .

Examples

Finite state space

We try to create a simple weather forecast using a Markov chain. To do this, we code 1 = "the sun is shining", 2 = "it's cloudy" and 3 = "it's raining". We choose a day as the time step. We know from experience that if the sun is shining today, the probability that it will rain tomorrow is around 80% and the probability that it is cloudy is around 20%. In addition, we make the assumption that these probabilities do not change and that the Markov chain is homogeneous. So now we know

But if it is cloudy, there is a probability of 0.5 it will rain the following day and with a probability of 0.5 the sun will shine. So it applies

If it rains today, the sun will shine with a probability of 0.1 and with a probability of 0.9 it will be cloudy. This follows for the transition probabilities

This completely describes the Markov chain. Such Markov chains can be clearly represented by transition graphs, as shown above. If one now arranges the transition probabilities in a transition matrix, one obtains

We now want to know how the weather will develop when the sun shines today. To do this, we specify the initial distribution in the form of the stochastic start vector . So we almost certainly start in state 1. Multiplication from the right with the transition matrix yields . So there is an eighty percent chance of raining. The third day applies . Thus the probability of rain on the third day is just over 50% and the probability of sunshine just under 40%. Thus, for any given weather on the start day, the rain and sun probability can be specified on any day. Questions such as: “Today the sun is shining. How big is the probability that it rained three days ago? ” Can be answered with Bayes' theorem .

Countably infinite state space

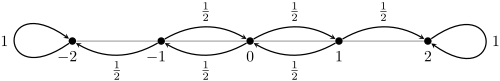

Let us now define a Markov chain on the state space and with transition probabilities

where applies. This can be illustrated as follows: If you start at any point, you either move with a probability of to the “right”, i.e. move to the next higher number. There is a likelihood of moving “left” to a lower number. According to this procedure, one then errs about the number line. Hence this Markov chain is also called the random walk on . The term random walk is occasionally used for such Markov chains . If we start in state 0, we have the transition probabilities above

It then follows . This shows a certain connection to the binomial distribution . But also applies . Certain states can only be visited at certain times, a property called periodicity .

If, more generally, is a sequence of independent and identically distributed random variables with values in , then is through

given a Markov chain with transition probabilities .

Classic examples

Some of the most famous Markov chains are

- The odyssey to as well as generalizations to graphs.

- The Galton-Watson process , which models the reproduction of a single-sexly reproductive species

- The Ehrenfest model for modeling the diffusion of molecules through membranes.

Attributes

Markov chains can have certain attributes that influence long-term behavior in particular. These include, for example, the following:

Irreducibility

Irreducibility is important for convergence towards a steady state. Put simply, a Markov chain is irreducible if it holds for all states and that the probability of getting from to in a finite time is genuinely positive. If this applies to fixed and , it is also said that and communicate with each other .

periodicity

Periodic Markov chains are given certain deterministic structures despite the randomness of the system. If a Markov chain is periodic with a period , it can at most return to its starting point every time step (but this is not mandatory).

Recurrence and transience

The recurrence and the transience describe the long-term behavior of a Markov chain. If a state is almost certainly visited infinitely often, it is called recurrent, otherwise transient. If all states are recurrent (transient), then the Markov chain is called recurrent (transient). The Green function is an important tool for determining recurrence .

Absorbent states

Absorbent states are states that cannot be left again after entering. Here one is particularly interested in the absorption probability, i.e. the probability of entering such a state.

Stationary distributions

In the application, stationary distributions are often particularly interesting. If these distributions are given as the start distribution of , then all subsequent distributions of the states are equal to the start distribution for anything. The interesting question here is when such distributions exist and when an arbitrary distribution converges to such a stationary distribution.

Reversibility

In the case of reversible Markov chains, it is not possible to distinguish whether they run forwards or backwards in time, so they are invariant under time reversal. In particular, the existence of a steady state follows from reversibility.

Modeling

In applications, one often has a model in which the changes in the state of the Markov chain are determined by a sequence of events that occur at random times (think of the above example of operating systems with random arrival and operating times). When modeling, it must be decided how the simultaneous occurrence of events (arrival vs. completion) is treated. Usually one decides to artificially introduce a sequence of simultaneous events. A distinction is usually made between the options Arrival First and Departure First .

Arrival First (AF)

In this discipline, operation is started at the beginning of a time step. After that, new requests arrive, and the service end only occurs at the end of a time step.

The advantage of this discipline is that incoming receivables always arrive before a possible service end and thus the PASTA property (Poisson Arrivals See Time Averages) applies. With the help of this property, for arrivals modeled as a Bernoulli process , properties that are important for operating systems, such as the probability of loss, can be calculated very easily .

As a disadvantage, a request that arrives in the time slot can be serviced in ready at the earliest . Under certain circumstances, this leads to a higher number of waiting places required in the modeled system.

Departure First (DF)

In the case of departure first , demands arrive in the system at the beginning of a time step. This is followed by the start of service times and, at the end of a time step, the end of service times.

With this approach, the PASTA property no longer applies , which generally leads to more complicated calculations than in the case of Arrival First . A claim can arrive in the same time step and be fully serviced.

simulation

Discrete Markov chains with finite state space can easily be simulated if standard random numbers are available. For this one defines

for everyone . Is now , then place if and only if is. This method is particularly efficient when a few are not equal to zero. It corresponds to the inversion method with the probability function . The MCMC method makes use of the possibility of simulating large Markov chains as well to simulate distributions that cannot be simulated using conventional methods.

Constant time and discrete state space

Similarly, the Markov chain can also be formed for continuous time and discrete state space. This means that the state changes suddenly at certain points in time.

Be a stochastic process with continuous time and discrete state space. Then applies to a homogeneous Markov process

Here, too, transition matrices can be formed: for all and (here, as usual, stands for the identity matrix ).

The Chapman-Kolmogorow equation applies and is accordingly a semigroup which, under certain conditions, has an infinitesimal generator , namely the Q matrix .

An example of this is the homogeneous Poisson process , which has the Q matrix , or more generally any birth and death process .

Discrete time and general state space

Markov chains can also be defined on general measurable state spaces. If the state space cannot be counted, the stochastic kernel is required for this as a generalization to the transition matrix. A Markov chain is already clearly determined by the start distribution on the state space and the stochastic core (also transition kernel or Markov kernel).

There are still many outstanding problems in the area of general Markov chains. Only Harris chains have been well researched . A classic example of a Markov process in continuous time and constant state space is the Wiener process , the mathematical modeling of Brownian motion .

General formulation

Inhomogeneous Markov processes can be defined using the elementary Markov property , homogeneous Markov processes can be defined using the weak Markov property for processes with constant time and with values in any space. In most cases, however, you limit yourself to Polish rooms for reasons of manageability . An exacerbation of the weak Markov property is the strong Markov property .

definition

A stochastic process is given for which the following applies:

- For the index set is valid as well and it is closed under addition.

- Everyone takes values in , accordingly takes values in . It is the Borel σ-algebra .

The process is called a Markov process with distributions on if:

- For all is a stochastic process on with

- There is a Markov kernel from to with

- for everyone .

- The weak Markov property applies .

Applications

Markov chains are used in different areas.

- In economics with the queuing theory . A homogeneous Markov chain is assumed here. The number of customers waiting for a period is considered there. The probabilities of the arrival or handling of a customer are time-invariant (independent of the period).

- Bioinformatics: Markov chains are used in bioinformatics to examine sequence sections for certain properties. These include B. the presence of CpG islands , since in these the transition probabilities between C - G and GC are increased.

- In health economics for probabilistic modeling in the course of a cost-benefit analysis of health technologies such as drugs.

- In actuarial mathematics , discrete Markov chains are used to include biometric risks (disability probabilities, death probabilities, ...).

- The financial mathematics used on the Wiener process based Markov processes for modeling stock prices and interest rates.

- In music for composing algorithmic works , for example with Iannis Xenakis .

- In quality management to determine the reliability of a system and its sub-components

- In physics for modeling the decay of a compound core and for deriving Master's equations in the Markov approximation

- In automated online marketing for the generation of texts, which are difficult to distinguish from human-written texts by automatic spam filters .

- Likewise for the detection of spam mails by means of a Markow spam filter

- Certain board games such as Monopoly and the ladder game can be understood as a Markov chain.

- The PageRank of a homepage can be interpreted as a Markov chain. In particular, this Markov chain is described by the stochastic Google matrix .

- To simulate distributions that are otherwise difficult to access, using the Markow chain Monte Carlo method

See also

literature

- Philipp von Hilgers, Wladimir Velminski (Ed.): Andrej A. Markov. Computable arts. diaphanes, Zurich / Berlin 2007, ISBN 978-3-935300-69-8 .

- Andrei A. Markov: An Example of Statistical Investigation of the Text Eugene Onegin Concerning the Connection of Samples in Chains. trans. Classical Text in Translation. In: Science in Context. 19.4, 2006, pp. 591-600.

- Pierre Brémaud: Markov Chains . Springer Verlag, 1999, ISBN 0-387-98509-3 .

- Ehrhard Behrends: Introduction to Markov Chains . Vieweg, 2000, ISBN 3-528-06986-4 .

- Olle Häggström: Finite Markov Chains and Algorithmic Applications . Cambridge University Press, 2002.

- Daniel W. Stroock: An introduction to Markov processes. (= Graduate Texts in Mathematics. 230). 2nd Edition. Springer / Heidelberg 2014, ISBN 978-3-642-40522-8 .

![[0]](https://wikimedia.org/api/rest_v1/media/math/render/svg/ee314d016dd522b7f685325ca45f5e5003081fd0)

![[i]](https://wikimedia.org/api/rest_v1/media/math/render/svg/55a0ff71c93eedf2804d8495fbac7e3c1bed3bd0)

![[i + 1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/4f106470807d2a6814fea3c324240a1721b1254a)

![[i-1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/d74d7c6edcf60667fd9951f4852f944b7279a441)

![{\ displaystyle p_ {ij} ^ {n} = \ left [M ^ {n} \ right] _ {i, j}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/4a1093ab53bd17f3d4b46fe8f689a95bc2dbdc76)

![{\ displaystyle u_ {t} \ in [r_ {i} (j-1), r_ {i} (j)]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a9ca767f37637f49b1dba1cac68281e339fa3b58)

![P (t): = [p _ {{i, j}} (t)]](https://wikimedia.org/api/rest_v1/media/math/render/svg/90c31a7971617fadc4807561afdb36d1ffc60517)