Information retrieval

Information retrieval [ˌɪnfɚˈmeɪʃən ɹɪˈtɹiːvəl] (IR) refers to the retrieval of information. The field deals with computer-aided searches for complex content (for example, not merely single words) and belongs to information science, computer science and computational linguistics.

As the meaning of the word retrieval (fetching, recovery) suggests, complex text or image data stored in large databases is initially neither accessible nor retrievable for outsiders. Information retrieval is about finding existing information, not about discovering new structures (as in knowledge discovery in databases, which includes data mining and text mining).

Closely related is document retrieval, which primarily targets (text) documents as the information to be found.

Scope of application

Information retrieval methods are used in Internet search engines (e.g. Google), but also in digital libraries (e.g. for literature searches) and image search engines. Question-answering systems and spam filters also use IR techniques.

Accessing stored complex information is difficult because of two phenomena:

- Uncertainty: the database holds insufficient information about the content of the stored documents (texts, images, films, music, etc.), so it returns incorrect answers or none at all. For texts, this includes, for example, missing descriptions of homographs (words spelled the same but with different meanings, e.g. bank as a financial institution or as a riverbank) and of synonyms (bank and financial institution).

- Vagueness: users cannot express the kind of information they are looking for in precise, targeted search terms (as is possible with SQL in relational databases). Their queries therefore contain conditions that are too vague.

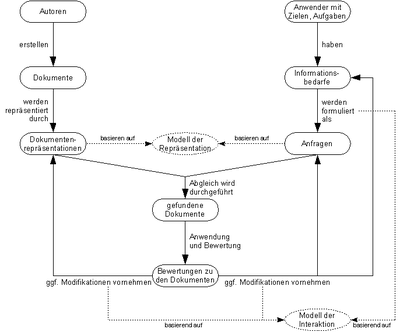

In general, two groups of people (who may overlap) are involved in IR (see figure on the right).

The first group consists of the authors of the information stored in an IR system. They either enter this information themselves or have it harvested from other information systems (as Internet search engines do, for example). The IR system converts the documents placed in it into a form suited to processing (the document representation), in accordance with the system's internal model for representing documents.

The second group, the users, have specific goals or tasks that are pressing at the time they work with the IR system and for which they lack the necessary information. Users want to meet these information needs with the help of the system. To do so, they must express their information needs in a suitable form, as queries.

The form in which information needs have to be expressed depends on the model used to represent documents. How users express their information needs through interaction with the system (e.g. by simply entering search terms) is determined by the interaction model.

Once the queries have been formulated, the IR system compares them with the documents in the system, using the document representations, and returns to the users a list of the documents that match the queries. The users must then assess the documents found for their relevance to solving their task. The result of this step is a set of document assessments.

Users then have three options:

- They can modify the document representations (usually only within narrow limits), e.g. by assigning new keywords for indexing a document.

- They refine their queries (mostly to narrow the search result further).

- They change their information needs because, after carrying out the search, they realize that they need further information that they had not previously considered relevant to their task.

The exact sequence of these three forms of modification is determined by the interaction model. For example, some systems help users reformulate their queries by reformulating them automatically on the basis of explicit document assessments (i.e., feedback that the user communicates to the system).

History

The term "information retrieval" was first used in 1950 by Calvin N. Mooers. In his 1945 essay As We May Think in the Atlantic Monthly, Vannevar Bush described how the use of existing knowledge could be revolutionized by knowledge stores. He called his vision the Memex. This system was to store all types of knowledge carriers and enable targeted searching and browsing of documents via links. Bush was already thinking of search engines and retrieval tools.

Information science received a decisive boost from the Sputnik shock. On the one hand, the Soviet satellite made the Americans aware of their own backwardness in space research, which the Apollo program successfully overcame. On the other hand, and this was the crucial point for information science, it took half a year to crack Sputnik's signal code, even though the key to decoding it had long been printed in a Russian journal that was already held in American libraries.

More information does not lead to being better informed; on the contrary. The so-called Weinberg Report, an expert report on this problem commissioned by the US President, speaks of an "information explosion" and states that experts, namely information scientists, are needed to cope with it. In the 1950s, Hans Peter Luhn worked on text-statistical methods that form a basis for automatic summarizing and indexing. His goal was to create individual information profiles and to highlight search terms; this gave rise to the idea of the push service.

In the 1950s, Eugene Garfield worked on citation indexes to capture the different ways in which information is conveyed in journals. To do this, he copied tables of contents. In 1960 he founded the Institute for Scientific Information (ISI), one of the first commercial providers of retrieval systems.

Germany

In Germany, Siemens developed two systems: GOLEM (a large-storage-oriented, list-organized determination method) and PASSAT (a program for the automatic selection of keywords from texts). PASSAT excludes stop words, forms word stems with the help of a dictionary and weights the search terms.

Information science has been established since the 1960s.

Early commercial information services

DIALOG, developed by Roger K. Summit, is an interactive human-machine system. It was commercially oriented and went online in 1972 with the government databases ERIC and NTIS. The ORBIT project (now Questel-Orbit) grew out of research and development work led by Carlos A. Cuadra. In 1962 the retrieval system CIRC went online, and various test runs were carried out under the code name COLEX. COLEX is the direct predecessor of Orbit, which went online in 1967 with a focus on US Air Force research. Later the focus shifted to medical information. The MEDLINE search system went online in 1974 for the bibliographic medical database MEDLARS. OBAR is a project initiated by the Ohio Bar Association in 1965. It eventually became the LexisNexis system, which primarily collects legal information. The system is based on full-text search, which worked optimally for Ohio court decisions.

Search tools on the World Wide Web

With the Internet, information retrieval has become a mass phenomenon. A forerunner was the WAIS system, widespread from 1991, which enabled distributed retrieval on the Internet. The early web browsers NCSA Mosaic and Netscape Navigator supported the WAIS protocol before Internet search engines emerged; the search engines later also began indexing non-HTML documents. The best-known and most popular search engines currently include Google and Bing. Common search engines for intranets are Autonomy, Convera, FAST, Verity and the open-source software Apache Lucene.

Basic concepts

Objective information need

The (objective) information need is the need for action-relevant knowledge; it can be concrete or problem-oriented. A concrete information need calls for factual information, e.g. "What is the capital of France?" The answer "Paris" satisfies this need completely. Problem-oriented information needs are different: several documents are required to meet them, and they can never be satisfied completely. The information received may even give rise to a new need or to a modification of the original one. The information need abstracts from the individual user; that is, it considers the objective facts.

Subjective information need (information demand)

The information demand reflects the specific needs of the user making the request, i.e. the user's subjective needs.

Information indexing and information retrieval

To formulate a search query as precisely as possible, one should actually know what one does not know. Some basic knowledge is therefore needed to write an adequate query. In addition, the natural-language query must be converted into a form that the retrieval system can read. Here are some examples of query formulations in various databases, searching for information about the actor "Johnny Depp" in the film "Chocolat":

LexisNexis: HEADLINE:("Johnny Depp" w/5 "Chocolat")

DIALOG: (Johnny ADJ Depp AND Chocolat)/ti

Google: "Chocolat" "Johnny Depp"
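The proximity operator in the LexisNexis query (w/5) can be illustrated with a minimal Python sketch. It assumes a simple tokenizer and reads "w/n" as "the two phrases start at most n words apart, in either order". The exact semantics of the real LexisNexis operator may differ, and `tokenize`, `phrase_positions` and `within` are hypothetical helpers.

```python
import re

def tokenize(text: str) -> list[str]:
    # Lowercase and split into word tokens; punctuation is ignored.
    return re.findall(r"\w+", text.lower())

def phrase_positions(tokens: list[str], phrase: str) -> list[int]:
    # Start positions at which all words of the phrase occur consecutively.
    words = tokenize(phrase)
    n = len(words)
    return [i for i in range(len(tokens) - n + 1) if tokens[i:i + n] == words]

def within(text: str, a: str, b: str, n: int) -> bool:
    # True if phrases a and b start at most n words apart, in either order.
    tokens = tokenize(text)
    return any(
        abs(i - j) <= n
        for i in phrase_positions(tokens, a)
        for j in phrase_positions(tokens, b)
    )

headline = "Johnny Depp charms audiences in Chocolat"
print(within(headline, "Johnny Depp", "Chocolat", 5))  # True
```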

Through the way the query is formulated in the system used, the user determines how the retrieval process runs. A distinction is made between word-oriented and concept-oriented systems. Concept-oriented systems can recognize the ambiguity of words (e.g. Java the island, Java the coffee or Java the programming language). The query addresses the documentation unit (DE). The DE represents the informational added value of the documents: it gives information such as author, year, etc. in condensed form. Depending on the database, either the entire document or only parts of it are recorded.

Documentary reference unit and documentation unit

Neither the documentary reference unit (DBE) nor the documentation unit (DE) is the original document; both merely represent it in the database. First, the suitability of a document for documentation is checked against catalogs of formal and content-related criteria. If an object is found to be documentable, a DBE is created. At this stage it is decided in what form the document is stored, for example whether individual chapters or pages, or the document as a whole, serve as the DBE. This is followed by the actual information processing: the DBE is described formally and its content is condensed. This informational added value is then found in the DE, which serves as the representative of the DBE. The DE thus stands at the end of the documentation process. It enables users to decide whether the DBE is useful to them and whether to request it. Information retrieval and information indexing are coordinated with each other.

Cognitive models

Cognitive models belong to empirical information science: they take into account users' prior knowledge, socio-economic background, language skills, etc., and use these to analyze information needs, information use and users.

Pull and push services

Marcia J. Bates describes information seeking as "berrypicking". Just as a single bush rarely fills the basket, a single database rarely satisfies an information need: several databases have to be queried, and the query has to be modified continually in light of new information. Pull services are offered wherever users actively search for information themselves. Push services deliver information to users based on a stored information profile. These profile services, known as alerts, store successfully formulated queries and notify users when new relevant documents arrive.

Information barriers

Various factors hinder the flow of information. Such factors include time, place, language, laws, and funding.

Recall and Precision

Recall describes the completeness of a hit list, while precision describes its accuracy. Precision is the proportion of relevant documents among all documents retrieved for a query, and thus measures how many documents in the hit list are relevant to the task. Recall is the proportion of the relevant documents in the collection that were actually retrieved, and thus measures the completeness of the hit list. Both measures are key figures for evaluating an information retrieval system. An ideal system would retrieve all relevant documents in a collection for a query and exclude all non-relevant ones.

Recall: $R = \dfrac{a}{a + c}$

Precision: $P = \dfrac{a}{a + b}$

a = relevant documents found (hits)

b = non-relevant DEs found (ballast)

c = relevant DEs not found (loss)

c cannot be measured directly, since one cannot know how many relevant DEs were missed without knowing the contents of the database or which DEs the query should have returned. Recall can be increased at the expense of precision and vice versa. This does not apply to factual questions, however: there, recall and precision coincide.

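Here is a minimal Python sketch of the two measures. It assumes that the set of relevant documents is known, for example from the relevance judgments of a test collection; in practice, obtaining these judgments is exactly what makes the loss c hard to measure. The sketch uses P = a/(a + b) and R = a/(a + c) with a, b and c as defined above. The document IDs are invented.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    a = len(retrieved & relevant)  # relevant documents found
    b = len(retrieved - relevant)  # ballast: found but not relevant
    c = len(relevant - retrieved)  # loss: relevant but not found
    precision = a / (a + b) if a + b else 0.0
    recall = a / (a + c) if a + c else 0.0
    return precision, recall

retrieved = {"d1", "d2", "d3", "d4"}  # hit list returned for a query
relevant = {"d1", "d3", "d5"}         # assumed relevance judgments
precision, recall = precision_recall(retrieved, relevant)
print(precision, recall)  # 0.5 0.6666666666666666
```

A factual question with a single correct document illustrates the special case mentioned above. If the hit list contains exactly that document, both values are 1.0.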
Relevance and pertinence

Knowledge can be relevant without being pertinent. Relevance means that a document was appropriately returned for the query as formulated. If, however, users already know the text, or do not want to read it because they dislike the author or do not want to read an article in another language, the document is not pertinent. Pertinence includes the user's subjective view.

| Objective information need | Subjective information need (= information demand) |
|---|---|
| → relevance | → pertinence |
| A document is relevant to satisfying an information need if it objectively: | A document is pertinent to satisfying an information need if it subjectively: |
| serves to prepare a decision | serves to prepare a decision |
| closes a knowledge gap | closes a knowledge gap |
| fulfills an early-warning function | fulfills an early-warning function |

The prerequisites for successful information retrieval are the right knowledge, at the right time, in the right place, to the right extent, in the right form and of the right quality. Here, "right" means that the knowledge is either relevant or pertinent.

Usefulness

Knowledge is useful when the user generates new action-relevant knowledge from it and puts it into practice.

Aspects of relevance

Relevance is the relation between a search query, with respect to its topic, and the aspects on the system side.

Binary approach

The binary approach holds that a document is either relevant or not relevant. In reality this is not necessarily the case, and it is more accurate to speak of "regions of relevance".

Relevance distributions

Relevance can be graded by creating topic chains, for example. A topic can appear in several chains, and the more chains a topic occurs in, the greater its weighting value: a topic that occurs in all chains has a value of 100, and one that occurs in none has a value of 0. Studies have found three different distributions. These distributions only emerge with larger document sets; with smaller sets there may be no regularities at all.

Binary distribution

No relevance ranking is possible with binary distribution.

Inverse logistic distribution

- x: rank

- e: Euler's number

- C: constant

Informetric distribution

$f(x) = \dfrac{C}{x^{a}}$

- x: rank

- C: constant

- a: a specific value between 1 and 2

The informetric distribution states: if the first-ranked document has a relevance of 1 (at x = 1), then the second-ranked document has a relevance of 0.5 (for a = 1) or 0.25 (for a = 2).
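The following Python sketch computes the relevance values for the first few ranks. It assumes that the informetric distribution has the form f(x) = C / x^a, which reproduces the worked numbers in the text when C = 1. `informetric` is a hypothetical helper name.

```python
def informetric(rank: int, C: float = 1.0, a: float = 1.0) -> float:
    # Assumed informetric distribution f(x) = C / x**a, with 1 <= a <= 2.
    return C / rank ** a

for a in (1.0, 2.0):
    values = [informetric(x, a=a) for x in range(1, 5)]
    print(f"a = {a}: {values}")
```

The larger a is, the faster relevance falls off after the first few ranks. This is what makes a relevance ranking meaningful under this distribution, in contrast to the binary case.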

Documents

As noted earlier, information science distinguishes between the original document, the DBE and the DE. But when is "something" actually a document? Four criteria decide: materiality (including digital presence), intentionality (the document carries a particular meaning), development and perception.

- "They have to be made into documents." (Michael K. Buckland)

Textual and non-textual objects

Objects can appear in text form, but do not have to be. Pictures and films are examples of non-textual documents. Textual and non-textual objects can appear in digital and non-digital form. If they are digital and more than two forms of media meet (a document consists of a video sequence, an audio sequence and images, for example), they are called multimedia. The non-digital objects need a digital representative in the database, such as a photo.

Formally published text documents

All documents that have gone through a formal publication process are referred to as formally published text documents, meaning that they were checked (e.g. by an editor) before publication. So-called gray literature poses a problem: it has been checked but not formally published.

Formally published documents have several levels. At the top is the work, the author's creation. Next comes the expression of this work, its concrete realization (e.g. different translations). This realization is then manifested (e.g. in a book). At the bottom of the chain is the item, the individual copy. The DBE usually refers to the manifestation, although exceptions are possible.

Informally published texts

The informally published texts primarily include documents that have been published on the Internet. These documents have been published but not checked.

Wikis, for example, are an intermediate stage between formally and informally published texts: they are published and checked cooperatively.

Unpublished texts

These include letters, invoices, internal reports and documents on the intranet or extranet; in short, all documents that have never been made public.

Non-textual documents

There are two groups of non-textual documents. On the one hand there are digitally available or digitizable documents such as films, pictures and music and on the other hand non-digital and non-digitizable documents. The latter include facts such as chemical substances and their properties and reactions, patients and their symptoms and museum objects. Most of the documents that cannot be digitized come from the disciplines of chemistry, medicine and economics. They are represented in the database by the DE and are often also represented by images, videos and audio files.

Typology of retrieval systems

Structure of texts

A distinction is made between structured, weakly structured and unstructured texts. Weakly structured texts include all text documents that have a certain structure, such as chapter numbers, titles, subheadings, illustrations and page numbers. Structured data can be added to texts in the form of additional information. Completely unstructured texts rarely occur in practice, so information science deals mainly with weakly structured texts. Note that this concerns formal structure only, not syntactic structure; the context of the content therefore remains a problem.

“The man saw the pyramid on the hill with the telescope.” This sentence can be interpreted in four ways. Therefore, some providers prefer human indexers because they recognize the context and can process it correctly.

Information retrieval systems can work with or without terminological control. With terminological control, indexing can be done either intellectually or automatically. Retrieval systems without terminological control either process the plain text or work fully automatically.

Retrieval systems and terminological control

Terminological control simply means using a controlled vocabulary. This is done with documentation languages (classifications, the keyword method, thesauri, ontologies). The advantage is that the searcher and the indexer share the same expressions and formulation options, so there are no problems with synonyms and homonyms. Disadvantages of a controlled vocabulary include its slowness in reflecting language change and the fact that not every user applies these artificial languages correctly. Cost also plays a role: intellectual indexing is much more expensive than automatic indexing.

A total of four cases can be distinguished:

| Searcher | Indexer |
|---|---|
| Controlled vocabulary (→ professionals) | Controlled vocabulary |
| Natural language (the controlled vocabulary works in the background through query expansion with broader and narrower terms) | Natural language (the controlled vocabulary works in the background through query expansion with broader and narrower terms) |
| Natural language (the system does the translation work) | Controlled vocabulary |
| Controlled vocabulary | Natural language |

Without terminological control, it is best to work with the full texts. However, this only works with very small databases, and users must be familiar with the terminology of the documents. The approach with terminological control requires information-linguistic processing of the documents (natural language processing, NLP).
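The second row of the table describes a controlled vocabulary working in the background to expand a natural-language query with broader and narrower terms. The following Python sketch illustrates this. The tiny thesaurus and the entry vocabulary are invented for illustration; a real system would load a maintained thesaurus.

```python
# Hypothetical thesaurus: descriptor -> broader terms (BT) and narrower terms (NT).
THESAURUS = {
    "information retrieval": {
        "BT": ["information science"],
        "NT": ["web search", "document retrieval"],
    },
    "web search": {"BT": ["information retrieval"], "NT": []},
}

# Hypothetical entry vocabulary: natural-language input -> controlled descriptor.
ENTRY_TERMS = {"ir": "information retrieval", "search engines": "web search"}

def expand(query_terms, broader=True, narrower=True):
    """Map natural-language terms to descriptors and add BT/NT terms, without duplicates."""
    expanded = []
    for term in query_terms:
        descriptor = ENTRY_TERMS.get(term.lower(), term.lower())
        entry = THESAURUS.get(descriptor, {})
        candidates = [descriptor]
        if broader:
            candidates += entry.get("BT", [])
        if narrower:
            candidates += entry.get("NT", [])
        for candidate in candidates:
            if candidate not in expanded:
                expanded.append(candidate)
    return expanded

print(expand(["IR"]))
# ['information retrieval', 'information science', 'web search', 'document retrieval']
```

Whether to add broader terms, narrower terms or both is a design choice. Broader terms tend to raise recall at the cost of precision, while narrower terms keep the query closer to the original topic.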

Information linguistic text processing

Information-linguistic text processing proceeds as follows. First, the writing system is recognized, for example whether it is Latin or Arabic script. Language identification follows. Next, text, layout and navigation elements are separated from one another. At this point there are two options: breaking the words down into n-grams, or word recognition. Whichever method is chosen, the next steps are stop-word marking, detection and correction of input errors, proper-name recognition and the formation of base or stem forms. Compounds are decomposed, homonyms and synonyms are recognized and matched, and the semantic environment is examined for similarity. The last two steps are translating the document and resolving anaphora. The system may need to consult the user during the process.
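A few of these steps (word recognition, character n-grams, stop-word marking and stem formation) are sketched below in Python. The stop-word list and the suffix-stripping rule are deliberately naive assumptions, not a real stemmer such as Porter's. The other steps, such as language identification, compound splitting and anaphora resolution, are omitted.

```python
import re

STOP_WORDS = {"the", "a", "an", "of", "on", "in", "and", "with"}  # tiny illustrative list
SUFFIXES = ("ing", "ies", "es", "s", "ed")  # naive suffix list, NOT a real stemmer

def recognize_words(text):
    # Word recognition for Latin script only: lowercase runs of letters.
    return re.findall(r"[a-z]+", text.lower())

def char_ngrams(word, n=3):
    # Alternative to word recognition: overlapping character n-grams,
    # with "_" marking the word boundaries.
    padded = f"_{word}_"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

def stem(word):
    # Strip the first matching suffix if at least three characters remain.
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def process(text):
    tokens = recognize_words(text)
    # Stop words are marked rather than deleted, so they stay available (e.g. for phrases).
    marked = [(token, token in STOP_WORDS) for token in tokens]
    stems = [stem(token) for token, is_stop in marked if not is_stop]
    return marked, stems

marked, stems = process("The man saw the pyramids on the hill")
print(stems)                # ['man', 'saw', 'pyramid', 'hill']
print(char_ngrams("hill"))  # ['_hi', 'hil', 'ill', 'll_']
```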

Retrieval models

There are several competing retrieval models, which are not necessarily mutually exclusive. They include the Boolean and the extended Boolean model. The vector space model and the probabilistic model are based on text statistics. The link-topological models include the Kleinberg algorithm and PageRank. Finally, there are the network model and the user/usage models, which examine how texts are used and consider users at their specific location.

Boolean model

In 1854 George Boole published his logic, with its binary view of things. His system has three functions or operators: AND, OR and NOT. It does not allow results to be sorted by relevance. To enable relevance ranking, the Boolean model was extended with weighting values, and the operators had to be reinterpreted.
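Here is a minimal Python sketch of the Boolean model over an inverted index. AND, OR and NOT map to set intersection, union and complement. The three-document collection is invented and reuses the Java ambiguity from above. The result is an unranked set, which is exactly the limitation described above.

```python
# Invented toy collection reusing the "Java" ambiguity.
DOCS = {
    1: "java island indonesia",
    2: "java programming language",
    3: "coffee from java island",
}

def build_index(docs):
    # Inverted index: term -> set of IDs of documents containing it.
    index = {}
    for doc_id, text in docs.items():
        for term in text.split():
            index.setdefault(term, set()).add(doc_id)
    return index

INDEX = build_index(DOCS)
ALL_DOCS = set(DOCS)

def term(t):
    return INDEX.get(t, set())

def and_(x, y):
    return x & y  # intersection

def or_(x, y):
    return x | y  # union

def not_(x):
    return ALL_DOCS - x  # complement relative to the collection

# java AND island AND NOT coffee
result = and_(and_(term("java"), term("island")), not_(term("coffee")))
print(sorted(result))  # [1]
```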

Text statistics

Text statistics analyzes the terms that occur in a document. Its weighting factors are called WDF and IDF.

Within-document frequency (WDF): $\mathrm{WDF}(t, d) = \dfrac{\text{number of occurrences of term } t \text{ in document } d}{\text{total number of words in } d}$

The WDF describes how frequently a word occurs in a document: the more often a word appears in a document, the larger its WDF.

Inverse document frequency (IDF): $\mathrm{IDF}(t) = \dfrac{\text{total number of documents in the database}}{\text{number of documents containing term } t}$

The IDF reflects how many documents in the database contain a given term: the more documents contain the term, the smaller its IDF.
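The two weights, as defined in the text, can be computed with a short Python sketch. It follows the plain ratios given here. Many systems instead use logarithmic variants, for example log(N / n_t) for IDF, so treat this as an illustration of the definitions rather than a production weighting scheme. The toy collection is invented.

```python
from collections import Counter

def wdf(term, doc_tokens):
    # Occurrences of the term / number of all words in the document.
    return Counter(doc_tokens)[term] / len(doc_tokens)

def idf(term, collection):
    # Number of documents / number of documents containing the term.
    n_t = sum(1 for doc in collection if term in doc)
    return len(collection) / n_t if n_t else 0.0  # 0.0 for unseen terms (a design choice)

collection = [
    "information retrieval finds information".split(),
    "data mining finds patterns".split(),
    "retrieval models rank documents".split(),
    "information science".split(),
]
doc = collection[0]
print(wdf("information", doc))         # 0.5
print(idf("information", collection))  # 2.0
print(wdf("information", doc) * idf("information", collection))  # 1.0
```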

The two classic models of text statistics are the vector space model and the probabilistic model. In the vector space model, n terms span an n-dimensional space in which documents and queries are represented as vectors; their similarity is calculated from the angle between the vectors. The probabilistic model calculates the probability that a document matches a query. Without additional information, the probabilistic model behaves similarly to IDF weighting.
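The angle-based similarity of the vector space model is usually computed as the cosine of the angle between the query vector and the document vectors. The following minimal Python sketch assumes raw term counts as vector components, rather than, say, WDF × IDF weights. The documents are invented.

```python
import math
from collections import Counter

def cosine(u: Counter, v: Counter) -> float:
    # Cosine of the angle between two term vectors (1.0 = same direction).
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

docs = {
    "d1": Counter("information retrieval systems".split()),
    "d2": Counter("data mining systems".split()),
}
query = Counter("information retrieval".split())
ranking = sorted(docs, key=lambda d: cosine(query, docs[d]), reverse=True)
print(ranking)  # ['d1', 'd2']
```

Unlike the Boolean sketch, this yields a graded score per document, so the hit list can be ranked.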

Link topological models

On the WWW, documents are linked to one another and thus form a link space. The Kleinberg algorithm distinguishes "hubs" (pages evaluated by their outgoing links) and "authorities" (pages evaluated by their incoming links). The weighting values reflect the extent to which hubs point to "good" authorities and authorities are linked from "good" hubs. Another link-topological model is PageRank by Sergey Brin and Lawrence Page. It describes the probability that a randomly surfing user will reach a given page.
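Both link-topological models can be sketched as iterative computations on a link graph. The Python below is a simplified illustration on an invented four-page graph. PageRank uses the common damping factor d = 0.85, which is an assumption not stated in the text, and spreads the rank of pages without outgoing links evenly. HITS alternates authority and hub updates with normalization.

```python
import math

# Invented link graph: page -> pages it links to.
LINKS = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}

def pagerank(links, d=0.85, iterations=50):
    pages = list(links)
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1 - d) / n for p in pages}  # random jump to any page
        for p, targets in links.items():
            if targets:
                for q in targets:              # follow one of the links at random
                    new[q] += d * rank[p] / len(targets)
            else:                              # dangling page: spread evenly
                for q in pages:
                    new[q] += d * rank[p] / n
        rank = new
    return rank

def hits(links, iterations=50):
    pages = list(links)
    hub = {p: 1.0 for p in pages}
    auth = {p: 1.0 for p in pages}

    def normalize(scores):
        norm = math.sqrt(sum(v * v for v in scores.values())) or 1.0
        return {p: v / norm for p, v in scores.items()}

    for _ in range(iterations):
        # Good authorities are linked from good hubs ...
        auth = normalize({p: sum(hub[q] for q in pages if p in links[q]) for p in pages})
        # ... and good hubs link to good authorities.
        hub = normalize({p: sum(auth[q] for q in links[p]) for p in pages})
    return hub, auth

pr = pagerank(LINKS)
hub, auth = hits(LINKS)
print(max(pr, key=pr.get), max(auth, key=auth.get), max(hub, key=hub.get))  # C C A
```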

Cluster model

Cluster methods attempt to group documents so that similar or related documents are combined in a common document pool. This speeds up searching, since in the best case all relevant documents can be retrieved with a single access. Besides document similarity, synonyms also play an important role as semantically similar words: a search for the term "word" should also return hits for "comment", "remark", "assertion" or "term".

Problems arise from the way documents are grouped:

- The clusters must be stable and complete.

- The number of documents in a cluster, and thus the resulting hit list, can be very large for specialized collections with homogeneous documents. In the opposite case, the number of clusters can grow until, in the extreme, each cluster contains only a single document.

- The overlap rate of documents that are in more than one cluster can hardly be controlled.
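One simple way to build such clusters is single-pass (leader) clustering. Each document joins the most similar existing cluster if the cosine similarity reaches a threshold; otherwise it starts a new cluster. This technique was chosen for illustration and is not the method prescribed by the text. The threshold and the toy documents are assumptions.

```python
import math
from collections import Counter

def cosine(u, v):
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def single_pass(docs, threshold=0.5):
    """Assign each document to the most similar cluster, or open a new one."""
    clusters = []  # each cluster: {"centroid": Counter, "members": [doc IDs]}
    for doc_id, text in docs.items():
        vec = Counter(text.split())
        best, best_sim = None, 0.0
        for cluster in clusters:
            sim = cosine(vec, cluster["centroid"])
            if sim > best_sim:
                best, best_sim = cluster, sim
        if best is not None and best_sim >= threshold:
            best["members"].append(doc_id)
            best["centroid"].update(vec)  # summed counts; cosine ignores scale
        else:
            clusters.append({"centroid": Counter(vec), "members": [doc_id]})
    return [cluster["members"] for cluster in clusters]

docs = {
    "d1": "java island travel",
    "d2": "java island beaches",
    "d3": "java programming language",
    "d4": "programming language design",
}
print(single_pass(docs))  # [['d1', 'd2'], ['d3', 'd4']]
```

The result depends on the order of the documents and on the threshold, which illustrates the problems listed above. With threshold=0.1, all four documents end up in one cluster; with threshold=0.9, each document forms its own cluster.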

User usage model

In the user usage model, the frequency of use of a website is a ranking criterion. In addition, background information, for example about the location of the user, is included in geographic queries.

Systematic searches result in feedback loops. These either run automatically or the user is repeatedly prompted to mark results as relevant or non-relevant before the search query is modified and repeated.
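A well-known way to modify a query automatically from relevance marks is the Rocchio method, shown here as a substitute illustration because the text does not name a specific algorithm. The new query vector moves toward the documents marked relevant and away from those marked non-relevant. The weights alpha, beta and gamma are commonly cited defaults and are used here as assumptions.

```python
from collections import Counter

def rocchio(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Return a modified query vector (term -> weight)."""
    new = Counter()
    for term, w in query.items():
        new[term] += alpha * w
    for doc in relevant:              # move toward documents marked relevant
        for term, w in doc.items():
            new[term] += beta * w / len(relevant)
    for doc in non_relevant:          # move away from documents marked non-relevant
        for term, w in doc.items():
            new[term] -= gamma * w / len(non_relevant)
    return {term: w for term, w in new.items() if w > 0}  # drop non-positive weights

query = {"java": 1.0}
relevant = [{"java": 1.0, "coffee": 1.0}]
non_relevant = [{"java": 1.0, "programming": 1.0}]
print(rocchio(query, relevant, non_relevant))
```

After one feedback round, "coffee" enters the query and "programming" is suppressed. Repeating the search with the new vector corresponds to one pass through the feedback loop.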

Surface web and deep web

The surface web is freely accessible to all users. The deep web contains, for example, databases whose search interfaces can be reached via the surface web, but whose content is usually subject to a charge. There are three types of search engines: algorithmic search engines such as Google, intellectually compiled web catalogs such as the Open Directory Project, and meta search engines, which obtain their results from several other search engines that they query in combination. As a rule, intellectually compiled web catalogs use only the home page of a website as the reference source for the DBE, whereas algorithmic search engines use every individual page.

Architecture of a retrieval system

There are digital and non-digital storage media; non-digital examples include card files, library catalogs and optical coincidence (peek-a-boo) cards. Digital storage media are developed by computer science and are a field of activity in information science. A distinction is made between the file structure and its function. In addition, there are interfaces between the retrieval system and the documents on the one hand and its users on the other. At the interface between system and documents, three areas are distinguished: finding documents (crawling), checking these documents for updates, and classifying them in a field scheme. The documents are recorded either intellectually or automatically and processed further. The DEs are stored twice: once in a document file and once in an inverted file, which serves as a register or index to facilitate access to the document file. The user and the system interact as follows. The user

- formulates a query,

- receives a hit list,

- can display and process the documentation units, and

- can process them further locally.

Character sets

The ASCII code (American Standard Code for Information Interchange) was created in 1963. As a 7-bit code, it can represent 128 characters. It was later extended to 8 bits (256 characters). Unicode, the largest character set to date, uses up to 4 bytes (32 bits) per character and is intended to represent all characters actually used in the world. The ISO 8859 standards (ISO: International Organization for Standardization) also define language-specific variants, covering for example the German "ß".
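The difference between these character sets can be observed directly in Python, whose codec "latin-1" corresponds to ISO 8859-1. The sketch encodes the German word "Straße" and reports the number of bytes needed in each encoding, or None where the character set cannot represent "ß". `encoded_sizes` is a hypothetical helper.

```python
def encoded_sizes(text, encodings=("ascii", "latin-1", "utf-8", "utf-32-le")):
    """Bytes needed per encoding, or None if the character set lacks a character."""
    sizes = {}
    for encoding in encodings:
        try:
            sizes[encoding] = len(text.encode(encoding))
        except UnicodeEncodeError:
            sizes[encoding] = None
    return sizes

print(encoded_sizes("Straße"))
# {'ascii': None, 'latin-1': 6, 'utf-8': 7, 'utf-32-le': 24}
```

ISO 8859-1 fits "ß" into a single byte. UTF-8 needs two bytes for it, and UTF-32 uses a fixed four bytes for every character.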

Inclusion of new documents in the database

New documents can be added to the database either intellectually or automatically. With intellectual acquisition, an indexer is responsible and decides which documents are recorded and how. The automatic process is carried out by a "robot" or "crawler". It starts from a known set of web documents, the so-called seed list, and then follows the links on all pages in this list. For each page found, it checks whether its URL already exists in the database. In addition, mirrors and duplicates are recognized and deleted.
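The URL check and duplicate detection can be sketched in Python as follows. The normalization rules (lowercase scheme and host, drop default ports and fragments) are assumptions chosen for illustration, as is the SHA-256 content fingerprint used to catch exact duplicates and mirrors. `Repository` is a hypothetical class. Near-duplicates would need fuzzier methods than an exact hash.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {"http": "80", "https": "443"}

def normalize_url(url):
    """Lowercase scheme and host, drop the default port and the fragment."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.netloc.lower()
    port = DEFAULT_PORTS.get(scheme)
    if port and host.endswith(":" + port):
        host = host.rsplit(":", 1)[0]
    return urlunsplit((scheme, host, parts.path or "/", parts.query, ""))

class Repository:
    """Hypothetical store that rejects known URLs and exact duplicate content."""

    def __init__(self):
        self.urls = set()
        self.fingerprints = {}  # content hash -> first URL stored with that content

    def add(self, url, content):
        key = normalize_url(url)
        if key in self.urls:
            return "known URL"
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if digest in self.fingerprints:
            return "duplicate of " + self.fingerprints[digest]
        self.urls.add(key)
        self.fingerprints[digest] = key
        return "added"

repo = Repository()
print(repo.add("http://Example.com/a", "<p>hello</p>"))          # added
print(repo.add("http://example.com:80/a#top", "<p>hi</p>"))      # known URL
print(repo.add("http://mirror.example.org/a", "<p>hello</p>"))   # duplicate of http://example.com/a
```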

Crawler

Best-first crawler

One best-first crawler is the PageRank crawler, which prioritizes links according to the number and popularity of the pages linking to them. Two others are the fish search and shark search crawlers. The former restricts its work to areas of the web where relevant pages are concentrated. The shark search crawler refines this method by drawing on additional information, for example from anchor texts, to judge relevance. Every site operator can block crawlers from their site.
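A best-first crawler can be sketched with a priority queue whose priority is the number of incoming links discovered so far. This is a crude stand-in for the popularity criterion of a PageRank crawler. The in-memory `WEB` dictionary replaces real HTTP fetching, and ties are broken alphabetically; both are assumptions of this sketch.

```python
import heapq

# Invented in-memory web standing in for HTTP fetching: page -> outgoing links.
WEB = {
    "seed": ["x", "a", "b"],
    "a": ["c"],
    "b": ["c"],
    "x": ["y"],
    "c": [],
    "y": [],
}

def best_first_crawl(web, seeds, limit=100):
    inlinks = {}                          # incoming links discovered so far
    frontier = [(0, url) for url in seeds]
    heapq.heapify(frontier)               # min-heap, so priorities are negated
    seen, order = set(), []
    while frontier and len(order) < limit:
        _, url = heapq.heappop(frontier)
        if url in seen:                   # stale entry with an outdated priority
            continue
        seen.add(url)
        order.append(url)
        for link in web.get(url, []):
            inlinks[link] = inlinks.get(link, 0) + 1
            if link not in seen:
                heapq.heappush(frontier, (-inlinks[link], link))
    return order

print(best_first_crawl(WEB, ["seed"]))  # ['seed', 'a', 'b', 'c', 'x', 'y']
```

Page c is crawled before x because it has collected two incoming links, whereas a breadth-first crawler would have visited x first.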

Crawling the deep web

For a crawler to work successfully in the deep web, it must meet several requirements. First, it has to "understand" the database's search form in order to formulate an adequate query. It must also understand hit lists and be able to display documents. This only works with free databases, however. Deep web crawlers must be able to formulate their search arguments so that all documents in the database are returned: if the search form has a year field, for example, the crawler would have to query every year to reach all documents. For keyword fields, an adaptive strategy makes the most sense. Once the data has been recorded, the crawler only needs to capture updates to the pages found. There are several ways to keep the DEs as up to date as possible. Pages can be revisited at a uniform interval, which would far exceed the available resources and is therefore impractical, or at random, which works rather poorly. A third option is to revisit pages according to priorities, for example by how often they change (page-centered) or by how often they are viewed or downloaded (user-centered). Further tasks of the crawler are detecting spam, duplicates and mirrors. Duplicates are usually detected by comparing paths. Avoiding spam is somewhat more difficult, because spam is often hidden.
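The priority-based revisiting strategy can be sketched as a scheduler that derives each page's revisit interval from its estimated change frequency (the page-centered variant). The change rates, the clamping bounds and the `schedule` helper are hypothetical. A user-centered variant would use view or download counts instead.

```python
import heapq
from collections import Counter

def schedule(change_rates, horizon_days, min_interval=0.5, max_interval=30.0):
    """Plan revisits: pages that change often (changes per day) are revisited sooner.
    Returns (day, url) pairs within the horizon."""
    queue = []
    for url, rate in change_rates.items():
        interval = 1 / rate if rate > 0 else max_interval
        interval = min(max(interval, min_interval), max_interval)
        heapq.heappush(queue, (interval, url, interval))
    visits = []
    while queue:
        day, url, interval = heapq.heappop(queue)
        if day > horizon_days:
            break
        visits.append((day, url))
        heapq.heappush(queue, (day + interval, url, interval))
    return visits

rates = {"news": 4.0, "blog": 0.5, "archive": 0.0}  # hypothetical estimates
visits = schedule(rates, horizon_days=3)
print(Counter(url for _, url in visits))  # Counter({'news': 6, 'blog': 1})
```

The lower bound on the interval keeps a very volatile page from consuming the whole crawling budget.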

FIFO (first in first out) crawler

The FIFO crawlers include the breadth-first crawler and the depth-first crawler. The breadth-first crawler follows all links on a page, then the links on the pages found, and so on. In the first step, the depth-first crawler works like the breadth-first crawler, but in the second step it selects which links to follow and which not.
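A FIFO queue produces the breadth-first crawling order. The Python sketch below uses an invented in-memory link graph and follows every link on the seed pages. As an interpretation of the selective crawler described above, it applies an optional `follow` filter from the second step on. In the general graph-algorithm sense, depth-first traversal uses a LIFO stack rather than a FIFO queue.

```python
from collections import deque

# Invented in-memory link graph: page -> outgoing links.
WEB = {
    "seed": ["a", "b"],
    "a": ["c", "ads"],
    "b": ["d"],
    "ads": ["e"],
    "c": [], "d": [], "e": [],
}

def fifo_crawl(web, seeds, follow=lambda url: True):
    """Breadth-first crawl with a FIFO queue. All links on the seed pages are
    queued; on later pages only links accepted by `follow` are queued."""
    seed_set = set(seeds)
    queue = deque(seeds)
    seen = set(seeds)
    order = []
    while queue:
        url = queue.popleft()          # first in, first out
        order.append(url)
        for link in web.get(url, []):
            if link in seen:
                continue
            if url not in seed_set and not follow(link):
                continue               # selection step after the first level
            seen.add(link)
            queue.append(link)
    return order

print(fifo_crawl(WEB, ["seed"]))                               # ['seed', 'a', 'b', 'c', 'ads', 'd', 'e']
print(fifo_crawl(WEB, ["seed"], follow=lambda u: u != "ads"))  # ['seed', 'a', 'b', 'c', 'd']
```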

Thematic crawlers

Thematic crawlers specialize in one discipline and are therefore suitable for subject experts. Pages that are not thematically relevant are identified and "tunneled": the links on these tunneled pages are nevertheless followed in order to find further relevant pages. Distillers, meanwhile, find good starting points for crawlers by using taxonomies and sample documents, and classifiers assess these pages for relevance. The whole process is semi-automatic, as the taxonomies and sample documents have to be updated regularly. In addition, an ordered system of terms is required.

Storing and indexing

The documents found are copied into the database. Two files are created for this: the document file and an inverted file. In the inverted file, all words or phrases are sorted alphabetically or by some other criterion. Whether a word index or a phrase index is used depends on the field; for an author field, for example, a phrase index is much better suited than a word index. The inverted file records the positions of the words or phrases in the document as well as structural information. Structural information can be useful for relevance ranking: if, for example, it records that a word was set in a larger font, that word can be given a higher weight. The words and phrases are stored both in their normal spelling and reversed, which enables open left truncation (searching for words by their endings). The inverted file is stored in a database index.
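The structure of such an inverted file, including word positions, a phrase index for an author field and the reversed term list that supports left truncation, can be sketched in Python. This is a simplified, in-memory illustration under stated assumptions: documents are plain strings split on whitespace, the author data is a hypothetical example, structural weighting is omitted, and a real system would keep these structures in a database index rather than in dictionaries.

```python
from bisect import bisect_left
from collections import defaultdict


def build_inverted_file(docs):
    """Word index: term -> {doc_id: [positions]}. docs maps doc_id -> text."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, term in enumerate(text.lower().split()):
            index[term][doc_id].append(pos)
    return index


def build_phrase_index(field_values):
    """Phrase index: the whole field value (e.g. an author name) is one entry."""
    index = defaultdict(set)
    for doc_id, value in field_values.items():
        index[value.lower()].add(doc_id)
    return index


def build_reversed_terms(index):
    """Store every term backwards, sorted, so suffixes become prefixes."""
    return sorted(term[::-1] for term in index)


def left_truncation(index, reversed_terms, suffix):
    """Documents containing any term that ends with `suffix` (query '*suffix')."""
    target = suffix.lower()[::-1]
    hits = set()
    # All reversed terms starting with `target` form one contiguous sorted run.
    for rev in reversed_terms[bisect_left(reversed_terms, target):]:
        if not rev.startswith(target):
            break
        hits.update(index[rev[::-1]])
    return hits


docs = {1: "information retrieval systems", 2: "data retrieval and data mining", 3: "text mining"}
index = build_inverted_file(docs)
print(dict(index["data"]))  # {2: [0, 3]}
print(left_truncation(index, build_reversed_terms(index), "ing"))  # {2, 3}

authors = {1: "Gerard Salton", 2: "C. J. van Rijsbergen"}
print(build_phrase_index(authors)["gerard salton"])  # {1}
```

The phrase index keeps "Gerard Salton" as a single searchable entry, whereas the word index would split it into "gerard" and "salton", which is why the text prefers phrase indexing for author fields.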

Classification of retrieval models

The figure below shows a two-dimensional classification of IR models. Depending on their position in this matrix, the models have the following properties:

- Dimension: mathematical foundation

- Algebraic models represent documents and queries as vectors, matrices or tuples, which a finite number of algebraic operations converts into a one-dimensional similarity measure, yielding pairwise similarities.

- Set-theoretic models map natural-language documents to sets and determine the similarity of documents primarily by means of set operations.

- Probabilistic models treat document search, or the determination of document similarity, as a multi-stage random experiment. Document similarities are expressed with probabilities and probabilistic theorems (especially Bayes' theorem).

- Dimension: properties of the model

- Models with immanent term interdependencies take existing interdependencies between terms into account; unlike models without term interdependencies, they therefore do not rest on the implicit assumption that terms are orthogonal or independent of one another. They differ from models with transcendent term interdependencies in that the strength of the interdependence between two terms is derived from the document collection in a way determined by the model, i.e. it is inherent (immanent) in the model. In this class of models, the interdependence between two terms is derived directly or indirectly from their co-occurrence, that is, the joint occurrence of the two terms in a document. The underlying assumption is that two terms are interdependent if they often appear together in documents.

- Models without term interdependencies treat any two different terms as entirely distinct and in no way related to one another. In the literature this property is often referred to as the orthogonality or independence of terms.

- Like the models with immanent term interdependencies, models with transcendent term interdependencies do not assume that terms are orthogonal or independent. Unlike them, however, the interdependencies between terms cannot be derived solely from the document collection and the model; the logic behind the term interdependencies is modeled as lying beyond the model (transcendent). In other words, these models explicitly provide for the existence of term interdependencies, but the concrete strength of the interdependence between two terms must be supplied directly or indirectly from outside, for example by a person.
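To make these distinctions concrete, the following sketch contrasts an algebraic similarity (cosine of term-frequency vectors), a set-theoretic one (Jaccard coefficient of term sets) and the co-occurrence counts on which models with immanent term interdependencies build. These are common textbook representatives chosen for illustration, not the specific models in the figure; probabilistic models are not shown, and whitespace tokenization is a simplifying assumption.

```python
import math
from collections import Counter
from itertools import combinations


def cosine(doc_a, doc_b):
    """Algebraic model: term-frequency vectors compared by the cosine of their angle.
    Each distinct term is its own orthogonal axis (no term interdependence)."""
    a, b = Counter(doc_a.split()), Counter(doc_b.split())
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def jaccard(doc_a, doc_b):
    """Set-theoretic model: documents as term sets, compared via intersection and union."""
    a, b = set(doc_a.split()), set(doc_b.split())
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def co_occurrence(docs):
    """Basis for immanent term interdependence: number of documents in which
    each pair of terms occurs together."""
    counts = Counter()
    for doc in docs:
        for pair in combinations(sorted(set(doc.split())), 2):
            counts[pair] += 1
    return counts


d1, d2, d3 = "bank loan interest", "bank interest rate", "river bank"
print(round(cosine(d1, d2), 3))                            # 0.667
print(jaccard(d1, d2))                                     # 0.5
print(co_occurrence([d1, d2, d3])[("bank", "interest")])   # 2
```

Because "bank" and "interest" occur together in two documents, a model with immanent term interdependencies could treat them as related, whereas the cosine measure treats every term as an independent axis.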

Information retrieval has cross-references to various other fields, e.g. probability theory and computational linguistics.

Literature

- James D. Anderson, J. Perez-Carballo: Information retrieval design: principles and options for information description, organization, display, and access in information retrieval databases, digital libraries, and indexes. University Publishing Solutions, 2005.

- Michael C. Anderson: Retrieval. In: A. D. Baddeley, M. W. Eysenck, M. C. Anderson: Memory. Psychology Press, Hove, New York 2009, ISBN 978-1-84872-001-5, pp. 163-189.

- R. Baeza-Yates, B. Ribeiro-Neto: Modern Information Retrieval. ACM Press, Addison-Wesley, New York 1999.

- Reginald Ferber: Information Retrieval. dpunkt.verlag, 2003, ISBN 3-89864-213-5.

- Dominik Kuropka: Models for the Representation of Natural Language Documents. Ontology-based information filtering and retrieval with relational databases. ISBN 3-8325-0514-8.

- Christopher D. Manning, Prabhakar Raghavan, Hinrich Schütze: Introduction to Information Retrieval. Cambridge University Press, Cambridge 2008, ISBN 978-0-521-86571-5.

- Dirk Lewandowski: Understanding search engines. Springer, Heidelberg 2015, ISBN 978-3-662-44013-1 .

- Dirk Lewandowski: Web Information Retrieval. In: Information: Wissenschaft und Praxis (nfd). 56 (2005) 1, pp. 5-12, ISSN 1434-4653.

- Dirk Lewandowski: Web Information Retrieval: Technologies for Information Search on the Internet (= Information Science. 7). DGI-Schrift, Frankfurt am Main 2005, ISBN 3-925474-55-2.

- Eleonore Poetzsch: Information Retrieval - Introduction to Basics and Methods. E. Poetzsch Verlag, Berlin 2006, ISBN 3-938945-01-X.

- Gerard Salton, Michael J. McGill: Introduction to modern information retrieval. McGraw-Hill, New York 1983.

- Wolfgang G. Stock: Information Retrieval. Searching and Finding Information. Oldenbourg, Munich / Vienna 2007, ISBN 978-3-486-58172-0.

- Alexander Martens: Visualization in Information Retrieval - Theory and practice applied in Wikis as an alternative to the Semantic Web. BoD, Norderstedt, ISBN 978-3-8391-2064-4.

- Matthias Nagelschmidt, Klaus Lepsky, Winfried Gödert: Information indexing and automatic indexing: A textbook and workbook. Springer, Berlin, Heidelberg 2012, ISBN 978-3-642-23512-2.

Web links

- Information Retrieval special interest group of the Gesellschaft für Informatik (German Informatics Society)

- Norbert Fuhr: Lecture "Information Retrieval" at the University of Duisburg-Essen, 2006, materials

- Karin Haenelt: Seminar "Information Retrieval" University of Heidelberg, 2015

- Heinz-Dirk Luckhardt: Information Retrieval, Saarland University, in the Virtual Information Management Manual

- UPGRADE, The European Journal for the Informatics Professional: Information Retrieval and the Web. Volume III, No. 3, June 2002.

- CJ van Rijsbergen: Information Retrieval, 1979

- Information Retrieval Facility (IRF)

References

- Andreas Henrich: Information Retrieval 1: Basics, Models and Applications. Version 1.2 (Rev. 5727, as of January 7, 2008), Otto-Friedrich-Universität Bamberg, Chair for Media Informatics, 2001-2008.