Software test

A software test checks and evaluates software for compliance with the requirements defined for its use and measures its quality. The knowledge gained is used to identify and correct software errors. Tests during software development serve to put the software into operation with as few errors as possible.

This term, which designates a single test measure, is to be distinguished from the identically named term 'test' (also 'testing'), which covers the totality of the measures for checking software quality (including planning, preparation, control, execution, documentation, etc.; see also the definitions).

Software testing cannot prove that no errors are (or remain) present. It can only establish that particular test cases were successful. Edsger W. Dijkstra wrote: “Program testing can be used to show the presence of bugs, but never to show their absence!” The reason is that all program functions and all possible values of the input data would have to be tested in all their combinations, which is practically impossible (except for very simple test objects). For this reason, various test strategies and concepts deal with the question of how high test coverage can be achieved with the smallest possible number of test cases.
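To make the combinatorial argument concrete, the following sketch counts the input combinations of a purely hypothetical test object. The three 32-bit integer parameters and the execution rate of one billion tests per second are illustrative assumptions, not figures from any cited source.

```python
# Hypothetical test object: a function taking three independent 32-bit integers.
BITS_PER_PARAMETER = 32
PARAMETER_COUNT = 3
TESTS_PER_SECOND = 10**9  # assumed (very optimistic) execution rate

values_per_parameter = 2**BITS_PER_PARAMETER
total_combinations = values_per_parameter**PARAMETER_COUNT

# Time needed to execute every combination exactly once.
seconds = total_combinations / TESTS_PER_SECOND
years = seconds / (60 * 60 * 24 * 365)

print(f"{total_combinations:.3e} input combinations")
print(f"about {years:.2e} years of exhaustive testing")
```

Even under these generous assumptions the exhaustive test would run for trillions of years, which is why test cases have to be selected rather than enumerated.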

Pol, Koomen and Spillner explain 'testing' as follows: “Tests are not the only measure in quality management in software development, but they are often the last possible one. The later errors are discovered, the more costly it is to correct them, which leads to the converse conclusion: quality must be built in (throughout the course of the project) and cannot be 'tested in'.” And: “When testing in software development, a more or less large number of errors is usually assumed or accepted as 'normal'. There is a considerable difference here from industry: in the 'quality control' process stage, errors are often expected only in extreme situations.”

Definition

There are different definitions for the software test:

According to ANSI/IEEE Std. 610.12-1990, testing is "the process of operating a system or component under specified conditions, observing or recording the results, and making an evaluation of some aspect of the system or component."

Ernst Denert provides a different definition, according to which the "test [...] is the verifiable and repeatable proof of the correctness of a software component relative to the previously defined requirements".

Pol, Koomen and Spillner use a more extensive definition: Testing is the process of planning, preparation and measurement with the aim of determining the properties of an IT system and showing the difference between the actual and the required state. Noteworthy here: the reference value is 'the required state', not just the (possibly incorrect) specification.

'Testing' is an essential part of the quality management of software development projects.

Standardization

In September 2013, the ISO/IEC/IEEE 29119 Software Testing standard was published, which for the first time consolidated and replaced many (older) national software testing standards, such as IEEE 829, at the international level. The ISO/IEC 25000 series of standards supplements it from the software engineering side as a guide to (common) quality criteria and replaces the ISO/IEC 9126 standard.

Aims

The overall goal of software testing is to measure the quality of the software system. Defined requirements serve as the test reference against which any errors present are uncovered. According to ISTQB, the effects of errors (in productive operation) are thereby prevented.

A framework for these requirements can be the quality parameters according to ISO/IEC 9126, to each of which specific detailed requirements can be assigned, e.g. for functionality, usability, security, etc. In particular, compliance with legal and/or contractual requirements must also be demonstrated.

The test results (which are obtained through various test procedures) help to assess the actual quality of the software, as a prerequisite for its release for operational use. Testing is intended to create trust in the quality of the software.

Individual test goals: Since software testing consists of numerous individual measures that are usually carried out over several test levels and on many test objects, there are individual test objectives for each test case and for each test level, such as: arithmetic function X tested in program Y, interface test successful, restart tested, load test successful, program XYZ tested, etc.

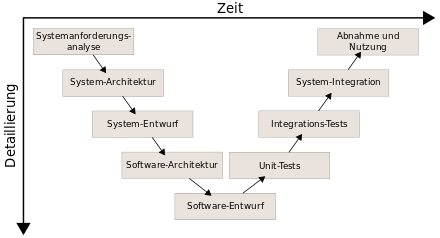

Test levels

The classification of the test levels (also called test cycles) follows the development status of the system according to the V-model. Their content is based on the development stages of projects. In each test level (right side of the 'V'), tests are carried out against the system designs and specifications of the associated development stage (left side), i.e. the test objectives and test cases are based on the respective development results. However, this principle can only be applied if any changes made in later development stages have also been incorporated into the older specifications.

In reality, these levels are further subdivided depending on the size and complexity of the software product. For example, the tests for the development of safety-relevant systems in transport security technology could be subdivided as follows: component test on the development computer, component test on the target hardware, product integration tests, product tests, product validation tests, system integration tests, system tests, system validation tests, field tests and acceptance test.

In practice, the test levels described below are often not sharply demarcated from one another; depending on the project situation, they can merge into one another or be supplemented by additional intermediate levels. For example, acceptance of the system could take place on the basis of test results (reviews, test logs) from system tests.

Unit test

The module test, also called component test or unit test, is a test at the level of the individual modules of the software. The subject of the test is the functionality within individually delimitable parts of the software (modules, programs or subprograms, units or classes). The aim of these tests, which are often carried out by the software developer, is to demonstrate technical operability and correct technical (partial) results.
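A minimal sketch of such a test, using Python's standard `unittest` module, might look as follows. The function `apply_discount` and its rules are hypothetical and exist only to give the test something to check.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percentage_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


# Run the three test cases explicitly and keep the result object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The unit is exercised in isolation: no database, user interface or other module is involved, so a failure points directly at the function itself.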

Integration test

The integration test or interaction test tests the cooperation of interdependent components. The test focus is on the interfaces of the components involved and should prove correct results across entire processes.

System test

The system test is the test level at which the entire system is tested against all requirements (functional and non-functional). The test usually takes place in a test environment and is carried out with test data. The test environment is intended to simulate the customer's productive environment, i.e. to be as similar to it as possible. As a rule, the system test is carried out by the implementing organization.

Acceptance test

An acceptance test, process test or user acceptance test (UAT) is the testing of the delivered software by the customer. The successful completion of this test level is usually a prerequisite for the legally effective takeover of the software and its payment. Under certain circumstances (e.g. for new applications) this test can already be carried out in the production environment with copies of real data.

The black box method is used especially for system and acceptance tests, i.e. the test is not based on the software's code but only on the behavior of the software in specified processes (user inputs, limit values for data entry, etc.).

Test process / test phases

Pol, Koomen and Spillner describe in chap. 8.1 'TMap' a procedure based on a phase model. They call this procedure the test process, consisting of the test phases test preparation, test specification, test execution and evaluation, and test completion. In parallel, the test process provides the framework functions of planning and administration. The procedure is generic, i.e. it is applied, as required, at different levels: for the overall project, for each test level and ultimately for each test object and test case.

Other authors or institutions sometimes use different groupings and different names, but the content is almost identical. For example, ISTQB defines the fundamental test process with the following main activities: test planning and control, test analysis and test design, test implementation and test execution, evaluation of exit criteria and reporting, and completion of test activities.

According to Pol, Koomen and Spillner, test activities are grouped by role into so-called test functions: testing, test management, methodical support, technical support, functional support, administration, coordination and consulting, application integrator, TAKT architect and TAKT engineer (when using test automation; TAKT = testing, automation, knowledge, tools). These functions (roles) focus on certain test phases; they can be set up within the project itself or brought in via specialized organizational units.

In terms of people, the following roles, among others, can be distinguished in testing:

- Test manager (leadership): The test manager develops the test strategy, plans the resources and serves as a contact person for project management and management. Important character traits are reliability and integrity.

- Test architect, test engineer: The test engineer supports the test manager in developing the test strategy. He is also responsible for the optimal selection of test methods and test tools. The planning and development of a project-specific test infrastructure is also his area of responsibility.

- Test analyst: The test analyst determines the necessary test scenarios by deriving them from the requirements. He also defines which test data are necessary.

- Test data manager: The test data manager takes care of procuring the test data and keeping it up to date. He works closely with the test analyst. This role is mostly underestimated, but without the right test data, the conclusions drawn from a test case are worthless.

- Tester (specialist): The tester's task is to carry out the tests reliably and precisely. In addition, he should document the test results precisely and without value judgment. He can support the test analysts and IT specialists in troubleshooting. In common usage, this role is often the only one thought of as 'tester', and the other roles are forgotten.

Test planning

The result of this phase, which usually takes place in parallel with software development, is essentially the test plan. It is developed for each project and is intended to define the entire test process. TMap states on this: both the increasing importance of information systems for operational processes and the high cost of testing justify a thoroughly manageable and structured test process. The plan can and should be updated and refined for each test level so that the individual tests can be carried out appropriately and efficiently.

The test plan should cover, for example, the following aspects: test strategy (test scope, test coverage, risk assessment); test objectives and criteria for test start, test end and test termination, for all test levels; procedure (test types); aids and tools for testing; documentation (definition of type, structure, level of detail); test environment (description); test data (general specifications); test organization (schedule, roles), all resources, training needs; test metrics; problem management.

Test preparation

Based on the test planning, the facts specified there are prepared and made available for operational use.

Examples of individual tasks (global and per test level): providing the documents of the test basis; making tools for test case and error management available (e.g. by customizing them); setting up the test environment(s) (systems, data); transferring the test objects, as the basis for test cases, from the development environment to the test environment; creating users and user rights; ...

Examples of preparations (for individual tests): transfer or provision of test data or input data in the test environment(s).

Test specification

Here, all specifications and preparations are made that are required to be able to execute a particular test case (a distinction is made between logical and concrete test cases).

Examples of individual activities: identifying and optimizing test cases (based on test objectives and, if applicable, test path categories); describing each test case (what exactly is to be tested); preconditions (including dependencies on other test cases); defining and creating the input data; specifications for the test procedure and the test sequence; defining the target result; condition(s) for 'test fulfilled'; ...

Test execution

In dynamic tests, the program to be tested is executed; in static tests, it is the subject of analytical examination.

Examples of individual activities: selecting the test cases to be executed; starting the test object, manually or automatically; providing the test data and the actual result for evaluation; archiving environment information for the test run; ...

Further note: A test object should not be tested by the developer himself, but by other, if possible independent, persons.

Test evaluation

The results of the tests carried out are checked (for each test case). The actual result is compared with the target result, and a decision on the test result (ok or error) is then made.

- In the event of errors: classification (e.g. according to the cause of the error, severity of the error, etc.; see also error classification), appropriate error description and explanation, handover to error management; the test case remains open

- If OK: the test case is considered completed

- For all tests: documentation, historizing / archiving of documents
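The evaluation rules above can be sketched in a few lines. The `SpecifiedCase` structure, the field names of the log entries and the two sample cases are hypothetical; the second case is chosen so that it fails, because Python's built-in `round` rounds 2.5 to the nearest even number.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class SpecifiedCase:
    name: str
    run: Callable[[], Any]  # produces the actual result
    expected: Any           # target result from the test specification


def evaluate(case: SpecifiedCase) -> dict:
    """Compare actual and target result and derive the test result."""
    actual = case.run()
    if actual == case.expected:
        return {"case": case.name, "result": "ok", "status": "completed"}
    return {
        "case": case.name,
        "result": "error",
        "status": "open",  # remains open until corrected and retested
        "description": f"expected {case.expected!r}, got {actual!r}",
    }


cases = [
    SpecifiedCase("sum of 2 and 3", lambda: 2 + 3, 5),
    SpecifiedCase("rounding of 2.5", lambda: round(2.5), 3),
]

# Every evaluation is logged, whether ok or not (documentation/archiving).
log = [evaluate(case) for case in cases]
for entry in log:
    print(entry)
```

In a real project the error entry would additionally carry a classification (cause, severity) before being handed over to error management.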

Test completion

Closing activities take place at all levels: test case, test object, test level, project. The status on completion of test levels is documented and communicated (e.g. with the help of test statistics), decisions are made and documents are archived. A basic distinction is made between:

- Regular completion: goals achieved, proceed with the next steps

- Alternatively: end or interrupt the test level prematurely if necessary (for various reasons, which are to be documented), in cooperation with project management

Classification for test types

In hardly any other discipline of software development has such a large variety of terms developed as in software testing, corresponding to the complexity of the task of 'testing'. This is particularly true for the designations used to name test types / test variants.

They are usually derived from the different situations in which they are performed and the test objectives they pursue. This results in a large number of terms. In keeping with this multi-dimensionality, the names of several test types can apply to one specific test. Example: a developer test can at the same time be a dynamic test, black box test, error test, integration test, equivalence class test, batch test, regression test, etc.

In literature and practice, usually only some of these terms are used, sometimes with meanings that differ in detail. In practical use, certain tests could (for example) simply be referred to as functional tests, and not as error tests, batch tests, high-level tests, etc. Test efficiency is not impaired by this, provided the tests are otherwise properly planned and executed. For efficient test processes it is important to cover several test goals with a single test case, e.g. to check the user interface, a calculation formula, correct value range checks and data consistency.

One means of understanding this variety of terms is the classification used below - in which test types are broken down according to different criteria, appropriate test types are listed and their test objectives are briefly explained.

Classification according to the test technique

Analytical measures

Software tests that can only be carried out after the test item has been created are defined as analytical measures. Liggesmeyer classifies these test methods as follows (abbreviated and partly commented):

Static test (test without program execution)

Dynamic test (test with program execution)

- Structure-oriented test

  - Control flow-oriented (measure of the coverage of the control flow)

    - Statement, branch, condition, and path coverage tests

  - Data flow-oriented (measure of the coverage of the data flow)

    - Defs/uses criteria, required k-tuples test, data context coverage

- Function-oriented test (test against a specification)

  - Functional equivalence class formation, state-based test, cause-effect analysis (e.g. using a cause-effect diagram), syntax test, transaction flow-based test, test based on decision tables

  - Positive test (tries to verify the requirements) and negative test (checks the robustness of an application)

- Diversifying test (comparison of test results from several versions)

  - Regression test, back-to-back test, mutation test

- Others (cannot be clearly assigned, or mixed forms)

  - Area testing or domain testing (generalization of equivalence class formation), error guessing, boundary value analysis, assertion techniques
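Two of the techniques named in this classification, equivalence class formation and limit value (boundary value) analysis, can be illustrated with a small sketch. The function `is_eligible` and its age range of 18 to 65 are hypothetical.

```python
def is_eligible(age: int) -> bool:
    """Hypothetical test object: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# Equivalence classes: one representative each for "too low", "valid", "too high".
equivalence_cases = {10: False, 40: True, 80: False}

# Boundary values: the values directly at and next to each edge of the valid range.
boundary_cases = {17: False, 18: True, 19: True, 64: True, 65: True, 66: False}

results = {}
for age, expected in {**equivalence_cases, **boundary_cases}.items():
    results[age] = is_eligible(age) == expected

failed = [age for age, passed in results.items() if not passed]
print(f"{len(results)} test cases, failed: {failed}")
```

Nine test cases stand in for the whole integer range here: the equivalence classes assume that all values within a class behave alike, and the boundary values target the places where off-by-one errors typically occur.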

Constructive measures

The analytical measures, in which test objects are 'checked', are preceded by the so-called constructive measures, which are carried out for quality assurance already during software creation. Examples: requirements management, prototyping, review of specifications.

Specification techniques

Furthermore, specification techniques are to be distinguished from test techniques: they do not designate test types with which test objects are actively tested, but only the procedures according to which tests are prepared and specified.

Example designations are equivalence class test and coverage test; test cases are identified and specified according to these procedures, but actually checked in, for example, an integration test, batch test, security test, etc.

Classification according to the type and scope of the test objects

- Debugging: for individual code parts; checking the program code under step-by-step or section-by-section control and, if necessary, modification by the developer.

- Module test, unit test or component test: testing of the smallest possible testable functionalities in isolation from others; is also considered a test level.

- Integration test: test of functionality when mutually independent components work together; also called interoperability test; is also considered a test level.

- System test: test level with tests across the entire system.

- Interface test: testing whether the interfaces between components that call each other are implemented correctly (in particular with regard to the possible parameter combinations); mostly according to the specification, for example with the help of mock objects.

- Batch test / dialog test: the names given to tests of batch programs and tests of dialog programs, respectively.

- Web test: test of Internet or intranet functions; also called browser test.

- Hardware test: testing of specific load and other criteria relating to hardware components, such as network load, access time, parallel storage technology, etc.
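The interface test mentioned in this list, in which mock objects stand in for the called component, can be sketched with Python's standard `unittest.mock` module. The function `notify_customer`, the `send` method and its parameters are hypothetical.

```python
from unittest.mock import Mock


def notify_customer(mailer, customer_email: str, order_id: int) -> None:
    """Hypothetical caller whose use of the mailer interface is tested."""
    mailer.send(to=customer_email, subject=f"Order {order_id} shipped")


# The mock object replaces the real component behind the interface.
mailer = Mock()
notify_customer(mailer, "a@example.org", 42)

# The test checks the call itself (method and parameters), not mail delivery.
mailer.send.assert_called_once_with(
    to="a@example.org", subject="Order 42 shipped"
)
```

Because the mock records every call, the test can verify the parameter combination passed across the interface without the real mail component being available.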

Classification according to special points of view

The respective test type tests ...

Functional view of users

- Business process test: ... the interaction of program parts of a business process.

- Similarly, the end-to-end test: ... functions of the system across all steps (e.g. from the user interface to the database).

- Behavioral test (behavior test): ... the application from the perspective of users; a distinction is made between:

  - Feature test (or function test): ... a single function that can be executed by the user.

  - Capability test: ... whether a certain user activity can be carried out in connection with the tested functions.

- Acceptance test (also user acceptance test, UAT): ... whether the software meets defined requirements and expectations, especially with regard to its user interface.

- User interface test: ... the user interfaces of the system (e.g. comprehensibility, arrangement of information, help functions); for dialog programs also called GUI test or UI test.

Software-technical relationships

- Data consistency test: ... the effect of the tested function on the correctness of data sets (test designations: data cycle test, value range test, semantic test, CRUD test).

- Restart test: ... whether a system can be restarted after a shutdown or breakdown (e.g. triggered by a stress test).

- Installation test: ... routines for software installation, if necessary in different system environments (e.g. with different hardware or different operating system versions).

- Stress test: ... the behavior of a system in exceptional situations.

  - Crash test: a stress test that tries to make the system crash.

- Load test: ... the system behavior under particularly high memory, CPU or similar demands. Special types of load test are multi-user tests (many users access a system, simulated or real) and stress tests, in which the system is pushed to the limits of its performance.

- Performance test: ... whether correct system behavior is ensured for certain memory and CPU requirements.

- Computer network test: ... the system behavior in computer networks (e.g. delays in data transmission, behavior in the event of problems in data transmission).

- Security test: ... potential security holes.

Software quality features

A large number of tests can be derived from the quality features of software (e.g. according to ISO/IEC 9126, which can form the framework for most test requirements). Test type names according to this classification are, for example:

- Functional test (also function test): ... a system in terms of functional requirement characteristics such as correctness and completeness.

- Non-functional test: ... the non-functional requirements, such as the security, usability or reliability of a system. Examples of specific test types for this are security tests, restart tests, GUI tests, installation tests and load tests. The focus is not on the function of the software (what does the software do?) but on how well it functions (how does the software work?).

- Error test: ... whether error situations are processed correctly, i.e. as defined.

Other classifications for test types

- Time of the test execution

  - The most important test type designations under this aspect, and also the ones mostly used in common parlance, are the test levels, which are referred to as component test, integration test, system test and acceptance test.

  - One type of early test is the alpha test (initial developer tests). A preparatory test initially checks only essential functions. In the later beta test, selected users check preliminary versions of the almost finished software for suitability.

  - Production tests are carried out in test environments configured similarly to production or even in the production environment itself, sometimes even while the software is in productive operation (suitable only for non-critical functions). Possible reason: only the production environment has certain components required for testing.

  - Test repetitions also belong to the timing aspect: such tests are called regression tests, retests or similar.

  - Indirectly time-related are also: developer test (before user test ...) and static testing (before dynamic testing).

- Selected methodological approaches for testing

  - Special test strategies: SMART testing, risk based testing, data driven testing, exploratory testing, top-down / bottom-up, hardest first, big-bang.

  - Special methods are the basis for test type designations such as decision table tests, use-case-based tests, state transition / state-related tests, equivalence class tests, mutation tests (targeted changing of source code details for test purposes) and pair-wise tests.

- Test intensity

  - Different degrees of test coverage (also code coverage) are achieved with coverage tests, which (because of the small size of the test objects) are particularly suitable for component tests. Test type designations for this: statement coverage (C0 test), branch coverage (C1 test), condition coverage and path coverage test.

  - Preparatory tests initially check only the most important main functions.

  - Smoke tests are performed without systematically specified test data or test cases; they merely try out whether the test object does anything at all without 'going up in smoke', for example as part of a preparatory test or as a final spot check at the end of a test level.

  - In error guessing, (experienced) testers deliberately provoke errors.

- Information status about the components to be tested (the knowledge used when specifying or performing tests)

  - Black box tests are developed without knowledge of the internal structure of the system to be tested, on the basis of development documents. In practice, black box tests are usually developed not by the software developers but by technically oriented testers or by special test departments or test teams. This category also includes requirements tests (based on specific requirements) and stochastic testing (statistical information as the test basis).

  - White box tests, also called structure-oriented tests, are developed on the basis of knowledge of the internal structure of the component to be tested. Developer tests are usually white box tests, when developer and tester are the same person. There is a risk that misunderstandings on the part of the developer inevitably lead to 'wrong' test cases, so that the error is not recognized.

  - Gray box tests is a name sometimes given to tests in which a combination of white box and black box testing is practiced in parallel, or which are based on only partial knowledge of the code.

  - Low-level test / high-level test: conceptually groups test types according to whether they are aimed at specific technical components (low level, e.g. debugging, white box test) or are carried out or specified on the basis of implementation requirements (high level, e.g. requirements test, black box test).

- Who runs or specifies the tests?

  - Developer tests (programmer tests), user tests and user acceptance tests (UAT) are carried out by the respective group of testers.

  - The departments responsible for the software carry out acceptance tests.

  - Operational tests, installation tests, restart tests, emergency tests and others are also carried out by representatives of the 'data center', to ensure operational readiness according to defined specifications.

  - Crowd testing: following the principle of crowdsourcing, test tasks are outsourced to a large number of users on the Internet (the crowd).

- Type of software action

  This category is of minor importance; it results in test terms like the following:

  - Maintenance tests and regression tests are carried out in maintenance projects; usually, existing test cases and test data are used.

  - Migration tests are carried out in migration projects; test content includes, for example, the data transfer and special migration functions.

Other aspects of testing

Test strategy

Pol, Koomen and Spillner describe the test strategy as a comprehensive approach: a test strategy is necessary because a complete test, i.e. a test that checks all parts of the system with all possible input values under all preconditions, is not feasible in practice. For this reason, test planning must determine, on the basis of a risk assessment, how critical the occurrence of a fault in a system part is (e.g. only financial loss, or danger to human life) and how intensively (taking into account the available resources and the budget) a system part must or can be tested.

Accordingly, the test strategy must specify which parts of the system are to be tested with what intensity, using which test methods and techniques, using which test infrastructure and in which order (see also test levels).

It is developed by test management as part of test planning, documented and specified in the test plan and used as a basis for the testing (by the test teams).

According to another interpretation, "test strategy" is understood as a methodical approach according to which testing is applied.

For example, ISTQB names the following forms of test strategy:

- top-down: test main functions before detailed functions; subordinate routines are initially ignored during the test or simulated (using "stubs")

- bottom-up: test detailed functions first; superordinate functions or calls are simulated using "test drivers"

- hardest first: depending on the situation, the most important things first

- big-bang: everything at once
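The roles of stubs (top-down) and test drivers (bottom-up) can be shown in a short sketch. All function names and the fixed exchange rate are hypothetical and serve only as an illustration.

```python
def fetch_exchange_rate_stub(currency: str) -> float:
    """Stub: replaces a subordinate routine that is not integrated yet."""
    return {"USD": 1.10}.get(currency, 1.0)  # fixed, made-up rates


def convert(amount_eur: float, currency: str, fetch_rate) -> float:
    """Main function tested top-down; it depends on a rate lookup."""
    return round(amount_eur * fetch_rate(currency), 2)


def parse_amount(text: str) -> float:
    """Detailed function tested bottom-up."""
    return float(text.replace(",", "."))


def driver_for_parse_amount() -> list:
    """Test driver: simulates the superordinate caller that does not exist yet."""
    return [parse_amount(text) for text in ("10,50", "3.25")]


# Top-down: the main function runs against the stub.
top_down_result = convert(100.0, "USD", fetch_exchange_rate_stub)

# Bottom-up: the driver calls the detailed function directly.
bottom_up_result = driver_for_parse_amount()
```

In both directions the simulated part is later replaced by the real component, at which point the same test cases can be repeated as integration tests.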

Other principles and techniques for test strategies are:

- Risk based testing: test principle according to which test coverage is aligned with the risks that can arise in the test objects (in the event that errors are not found).

- Data driven testing: test technique in which the data constellations can be changed specifically via settings in the test scripts, so that several test cases can be tested efficiently one after the other

- Test-driven development

- SMART: test principle "Specific, Measurable, Achievable, Realistic, Time-bound"

- Keyword driven testing

- Framework based testing: test automation using test tools for specific development environments / programming languages

- Testing according to ISO/IEC 25000: the ISO/IEC 25000 standard is a standard for quality criteria and evaluation methods for software and systems. Accordingly, an approach to a test strategy can be based on the quality criteria defined in this standard.
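Data driven testing, as listed above, separates the test logic from the data constellations. A minimal sketch, with a hypothetical `shipping_cost` function and a made-up price table, could look like this:

```python
def shipping_cost(weight_kg: float) -> float:
    """Hypothetical test object with a made-up price table."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 2:
        return 4.99
    if weight_kg <= 10:
        return 8.49
    return 16.49


# The test data: each row is (input weight, expected cost).
# Extending the test means adding rows, not changing the test logic.
TEST_DATA = [
    (0.5, 4.99),
    (2.0, 4.99),
    (2.1, 8.49),
    (10.0, 8.49),
    (25.0, 16.49),
]


def run_data_driven(rows):
    """Execute the same test logic once per data row; collect deviations."""
    failures = []
    for weight, expected in rows:
        actual = shipping_cost(weight)
        if actual != expected:
            failures.append((weight, expected, actual))
    return failures


failures = run_data_driven(TEST_DATA)
print(f"{len(TEST_DATA)} data rows, {len(failures)} failures")
```

In practice the rows would often come from a file or table maintained by test analysts, so that new data constellations can be added without touching the test script.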

Example of risk-based testing - according to the RPI method

In principle, for reasons of time and/or financial resources, it is never possible to test software (or parts of software) completely. For this reason, it is important to prioritize tests sensibly. A proven method for prioritizing tests is the risk-based approach, also called the RPI method, where RPI stands for risk priority index (German: Risiko-Prioritäts-Index).

In the RPI method, the requirements are first assigned to groups. Then criteria are defined which are important for the end product. These criteria will later be used to assess the requirement groups. In the following, the three criteria that have proven themselves in practice are discussed. With the questions presented, it should be possible to distinguish the requirements as well as possible, which enables the assessment.

Business relevance

- How great is the benefit for the end user?

- How great is the damage if this requirement is not met?

- How many end users would be affected if this requirement is not met?

- How important is this requirement compared to other requirements? Must / should / can it be fulfilled?

Discoverability

- Can the error or non-compliance with the requirement be found quickly?

- Is the error even noticed?

- How do you see the error?

- Does everyone find the error, or only users who know the product better?

Complexity

- How complex is the requirement?

- How much does the requirement depend on other parts? How many other requirements depend on it?

- How complex is the implementation of the requirement?

- Are the technologies in use rather simple or rather complex?

Once the requirements have been grouped and the criteria defined, every requirement group is assessed against the three criteria. Each criterion is rated from 1 to 3, with 3 being the highest value in terms of importance (the scale can be widened if a broader spread of the requirements is needed). The three ratings are then multiplied; the resulting product indicates the importance of the requirement group.
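The calculation described above can be sketched in a few lines of Python. This is only an illustration: the class `RequirementGroup`, the function `prioritize` and the three example groups with their ratings are hypothetical and do not come from the RPI method's source. The sketch also assumes that every rating is already expressed in terms of importance, so that a higher product means a higher test priority.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequirementGroup:
    """A requirement group rated on the three criteria (hypothetical type)."""
    name: str
    business_relevance: int
    discoverability: int
    complexity: int

    def __post_init__(self) -> None:
        # Every rating must lie on the 1-to-3 scale used in the text.
        for rating in (self.business_relevance, self.discoverability, self.complexity):
            if not 1 <= rating <= 3:
                raise ValueError(f"rating {rating} is outside the 1-3 scale")

    def rpi(self) -> int:
        # The index is the product of the three ratings.
        return self.business_relevance * self.discoverability * self.complexity


def prioritize(groups):
    """Return the groups ordered from highest to lowest index."""
    return sorted(groups, key=lambda group: group.rpi(), reverse=True)


# Made-up example data, for illustration only.
EXAMPLE_GROUPS = [
    RequirementGroup("help pages", 1, 1, 1),
    RequirementGroup("login", 3, 2, 2),
    RequirementGroup("reporting", 2, 1, 3),
]

for group in prioritize(EXAMPLE_GROUPS):
    print(f"{group.name}: RPI = {group.rpi()}")
```

With the 1-to-3 scale the index ranges from 1 to 27; widening the scale, as the text allows, only requires changing the range check.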

Explanations of the test strategy according to ISO 25000

The ISO/IEC 25000 series, in its product quality model (ISO/IEC 25010:2011), defines eight quality characteristics for software. These are:

- Functional suitability

- Reliability

- Usability

- Performance efficiency

- Maintainability

- Portability

- Security

- Compatibility

The test strategy is oriented toward these eight quality characteristics, which are based on experience and standards. Test cases created specifically for this purpose are intended to ensure that the software under test meets or supports these criteria, insofar as they are relevant.
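One way to make such a strategy checkable is to tag every test case with the quality characteristics it addresses and then list the relevant characteristics that no test case covers yet. The following Python sketch assumes the characteristic names of ISO/IEC 25010 (performance efficiency, portability, security and so on); the function `uncovered`, the test case identifiers and the selection of relevant characteristics are hypothetical.

```python
from enum import Enum


class QualityCharacteristic(Enum):
    FUNCTIONAL_SUITABILITY = "functional suitability"
    RELIABILITY = "reliability"
    USABILITY = "usability"
    PERFORMANCE_EFFICIENCY = "performance efficiency"
    MAINTAINABILITY = "maintainability"
    PORTABILITY = "portability"
    SECURITY = "security"
    COMPATIBILITY = "compatibility"


def uncovered(test_cases, relevant):
    """Return the relevant characteristics that no test case addresses."""
    covered = set()
    for characteristics in test_cases.values():
        covered |= characteristics
    return relevant - covered


# Made-up test cases, each tagged with the characteristics it checks.
TEST_CASES = {
    "TC-01 order can be placed": {QualityCharacteristic.FUNCTIONAL_SUITABILITY},
    "TC-02 login rejects wrong password": {
        QualityCharacteristic.SECURITY,
        QualityCharacteristic.USABILITY,
    },
}

# Assumption: only these three characteristics are relevant for the product.
RELEVANT = {
    QualityCharacteristic.FUNCTIONAL_SUITABILITY,
    QualityCharacteristic.SECURITY,
    QualityCharacteristic.RELIABILITY,
}

for characteristic in sorted(uncovered(TEST_CASES, RELEVANT), key=lambda c: c.value):
    print(f"no test case yet for: {characteristic.value}")
```

Restricting the check to a set of relevant characteristics mirrors the qualification in the text that the criteria only need to be covered insofar as they are relevant.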

Documentation

Preparing the documentation is also part of test planning. The IEEE 829 standard recommends a standardized procedure for this. According to this standard, complete test documentation comprises the following documents:

- Test plan: describes the scope, approach, schedule and test items.

- Test design specification: describes in detail the approaches named in the test plan.

- Test case specification: describes the environmental conditions, inputs and outputs of each test case.

- Test procedure specification: describes step by step how each test case is to be carried out.

- Test item transmittal report: records when which test items were handed over to which testers.

- Test log: lists all relevant events during the test in chronological order.

- Test incident report: lists all events that require further investigation.

- Test summary report: describes and evaluates the results of all tests.

Test automation

Automation is particularly advisable for tests that are repeated frequently. This is especially the case with regression tests and test-driven development. In addition, test automation is used for tests that are difficult or impossible to carry out manually (e.g. load tests).

- Regression testing checks, after software changes, that the previously existing functionality has been preserved without errors, usually as part of system or acceptance testing.

- With test-driven development, the tests are ideally extended before each change in the course of software development and run after each change.

Without automation, the effort in both cases is so great that the tests are often not performed at all.
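A minimal sketch of an automated regression suite, using Python's standard `unittest` module, shows why repetition becomes cheap once tests are automated. The function `apply_discount` and its expected values are hypothetical; they stand in for any existing functionality that must survive later changes.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding surprises.
    return price_cents * (100 - percent) // 100


class DiscountRegressionTest(unittest.TestCase):
    """Pins down the current behavior so later changes cannot alter it unnoticed."""

    def test_ten_percent(self):
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(1000, 0), 1000)

    def test_full_discount(self):
        self.assertEqual(apply_discount(1000, 100), 0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 101)


def run_regression_suite() -> bool:
    """Run the suite and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountRegressionTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Calling `run_regression_suite()` after every change repeats all checks at practically no cost. In test-driven development, a new test method would be added first, fail, and then pass once the change has been implemented.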

Overviews / connections

Terms used in testing

The graphic on the right shows terms that appear in the context of 'testing' - and how they relate to other terms.

Interfaces in testing

The graphic shows the most important interfaces that occur during testing. For each of the 'partners' in testing named by Thaller, the following list gives examples of what is communicated or exchanged.

- Project management: time and effort framework, status of each test object ('testready'), documentation systems

- Line management (and line department): Technical support, test acceptance, providing technical testers

- Data center: Provide and operate test environment(s) and test tools

- Database administrator: Install, load and manage test data sets

- Configuration management: setting up a test environment, integrating the new software

- Development: basic test documents, test items, support for testing, discussing test results

- Problem and CR management: error messages, feedback on retest, error statistics

- Steering committee: decisions on test (stage) acceptance or test termination

Literature

- Andreas Spillner, Tilo Linz: Basic knowledge of software testing. Training and further education to become a Certified Tester. Foundation level according to the ISTQB standard. 5th, revised and updated edition. dpunkt.verlag, Heidelberg 2012, ISBN 978-3-86490-024-2.

- Harry M. Sneed, Manfred Baumgartner, Richard Seidl: The System Test - From Requirements to Quality Proof. 3rd, updated and expanded edition. Carl Hanser, Munich 2011, ISBN 978-3-446-42692-4.

- Georg Erwin Thaller: Software test. Verification and validation. 2nd, updated and expanded edition. Heise, Hannover 2002, ISBN 3-88229-198-2.

- Richard Seidl, Manfred Baumgartner, Thomas Bucsics, Stefan Gwihs: Basic knowledge of test automation - concepts, methods and techniques. 2nd, updated and revised edition. dpunkt.verlag, 2015, ISBN 978-3-86490-194-2.

- Mario Winter, Mohsen Ekssir-Monfared, Harry M. Sneed, Richard Seidl, Lars Borner: The integration test. From design and architecture to component and system integration. Carl Hanser, 2012, ISBN 978-3-446-42564-4.

- Bill Laboon: A Friendly Introduction to Software Testing. CreateSpace Independent, 2016, ISBN 978-1-5234-7737-1.

Web links

- Glossary "Test Terms" from ISTQB

- Detailed listing of test management software and explanation of the management tool types in software tests

- Testtool Review - Information portal about the international market offer in the field of software test tools

Individual evidence

- Martin Pol, Tim Koomen, Andreas Spillner: Management and optimization of the test process. A practical guide to successfully testing software with TPI and TMap. 2nd, updated edition. dpunkt.verlag, Heidelberg 2002, ISBN 3-89864-156-2.

- Ernst Denert: Software engineering. Methodical project management. Springer, Berlin et al. 1991, ISBN 3-540-53404-0.

- ISTQB Certified Tester Foundation Level Syllabus, Version 2011 1.0.1, German-language edition. International Software Testing Qualifications Board; published by the Austrian Testing Board, German Testing Board e.V. and Swiss Testing Board, chap. 1.4. Retrieved May 20, 2019.

- Peter Liggesmeyer: Software quality. Testing, analyzing and verifying software. Spektrum Akademischer Verlag, Heidelberg et al. 2002, ISBN 3-8274-1118-1, p. 34.

- Toby Clemson: Testing Strategies in a Microservice Architecture. In: Martin Fowler. November 18, 2014, accessed March 13, 2017.

- Tobias Weber: Planning of software projects: White, Black and Gray Box Test. University of Cologne (Memento from August 9, 2016 in the Internet Archive).

- Dominique Portmann: The Noser Way of Testing. Ed.: Noser Engineering AG. 7th edition. January 2016.

- IEEE Standard for Software Test Documentation (IEEE Std. 829-1998).

- IEEE Standard for Software and System Test Documentation (IEEE Std. 829-2008).

- Georg Erwin Thaller: Software test. Verification and validation. 2nd, updated and expanded edition. Heise, Hannover 2002, ISBN 3-88229-198-2.